Over the past few decades, evidence synthesis has greatly increased the effectiveness of medicine and other fields. The process of systematically combining findings from multiple studies into comprehensive reviews helps researchers and policymakers to draw insights from the global literature1. AI promises to speed up parts of the process, including searching and filtering. It could also help researchers to detect problematic papers2. But in our view, other potential uses of AI mean that many of the approaches being developed won’t be sufficient to ensure that evidence syntheses remain reliable and responsive. In fact, we are concerned that the deployment of AI to generate fake papers presents an existential crisis for the field.

What’s needed is a radically different approach — one that can respond to the updating and retracting of papers over time.

We propose a network of continually updated evidence databases, hosted by diverse institutions as ‘living’ collections. AI could be used to help build the databases. And each database would hold findings relevant to a broad theme or subject, providing a resource for an unlimited number of ultra-rapid and robust individual reviews.

Adding fuel to the fire

Currently, the gold standard for evidence synthesis is the systematic review. Such reviews are comprehensive, rigorous, transparent and objective, and aim to include as much relevant high-quality evidence as possible. They also use the best methods available for reducing bias. In part, this is achieved by having multiple reviewers screen the studies; declaring whatever criteria, databases, search terms and so on are used; and detailing any conflicts of interest or potential cognitive biases.

Yet these reviews require considerable resources. Some studies suggest that Cochrane reviews — systematic reviews of specific topics in health care and health policy that meet internationally recognized criteria for the highest standards in evidence-based health care — generally cost more than US$140,000 and take more than two years to complete3,4.

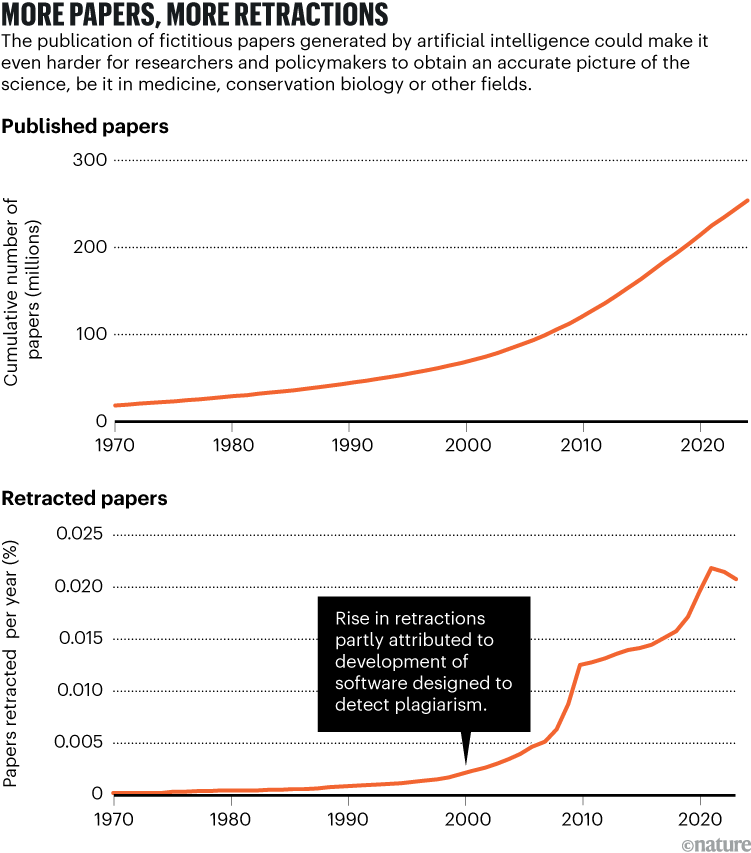

It is becoming ever harder for review authors to keep up with the rapidly expanding number of papers. The scientific literature is estimated5 to have doubled every 14 years since 1952.
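What that doubling time implies can be made explicit with a little arithmetic. This assumes, as a simplification, smooth exponential growth, and takes 2024 as an illustrative end point; real publication rates fluctuate from year to year.

```latex
N(t) = N_{1952}\, 2^{(t - 1952)/14}
\;\Longrightarrow\;
r = 2^{1/14} - 1 \approx 0.051,
\qquad
\frac{N(2024)}{N_{1952}} = 2^{72/14} \approx 35
```

In other words, a growth rate of roughly 5% a year, compounding to about a 35-fold increase over that period.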

Because reviewers tend to have access to different sets of publications, and because databases are continually updated, systematic reviews are plagued by reproducibility issues. A study published last year concludes that only 1% of reviews report a search strategy that is fully reproducible6. Furthermore, many systematic reviews unwittingly cite publications that have been retracted, including those removed from the literature because of methodological or ethical problems or fraud7.

We agree that AI could be part of the solution to these problems. It could help investigators to conduct reviews more comprehensively and more efficiently — by filtering many more papers, say, or by assessing the entire content of papers instead of just the title and abstract, as human reviewers tend to do as a first step. But one aspect seems to be underappreciated: the degree to which AI — particularly large language models (LLMs) — could exacerbate some of the problems.

At this point, little is known about how many scientific papers generated entirely by AI are being published. As announced in March, a scientific paper8 generated by AI Scientist (an AI tool developed by the company Sakana AI in Tokyo and its collaborators) passed peer review for inclusion in a workshop at a key AI meeting. The reviewers did not detect that an AI model had formulated the hypotheses, designed and run experiments, analysed the results, generated the figures and produced the manuscript.

Policymakers in China are using evidence synthesis to guide management of the invasive grass Spartina alterniflora. Credit: SIPA Asia/ZUMA Press/Alamy

And a preprint posted on arXiv estimates that at least 10% of all PubMed abstracts published in 2024 were written with the help of LLMs, on the basis that an abrupt increase in the frequency of certain words coincided with widespread access to LLMs9. That proportion has almost certainly gone up since.

Even if LLMs are used widely, it is difficult to separate cases in which they have been deployed to fabricate papers from those in which authors are simply using them to improve their writing10. Yet generative AI is likely to make the production of fake manuscripts easier, irrespective of whether those who use LLMs maliciously do so to further their careers, to manipulate the conclusions of evidence syntheses because of a specific commercial or policy objective or simply to be disruptive. The use of multiple LLMs will also make it more difficult for humans to detect textual fingerprints associated with one particular model.

In other words, the use of generative AI is likely to supercharge the already growing problem of paper mills — businesses that sell fake work and authorships to researchers seeking journal publications to boost their careers. It could even replace the paper-mill market, given that fake papers can now be generated in minutes for free.

What to do?

The Campbell Collaboration (a group of researchers and policymakers dedicated to generating evidence syntheses for economic and social policy decisions) and Cochrane already provide guidance on how to identify studies that have raised concerns or that have been retracted11. This includes checking studies against the Retraction Watch database, which lists retractions gathered from publisher websites, and using the CENTRAL database, a repository for clinical-trial reports that flags retracted studies11. Cochrane guidance also states that the authors of published reviews containing retracted studies should recalculate all results and, while doing so, flag the review with an editorial note or withdraw it and then publish the updated version11.
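The screening and recalculation steps that this guidance describes can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Cochrane's or Campbell's own tooling: the list of retracted DOIs is assumed to have been obtained already (for example, exported from the Retraction Watch database), the `Study` record, the helper names and the `10.9999/...` DOIs are all hypothetical, and the recalculation uses a simple inverse-variance fixed-effect model, swapped in for illustration rather than whatever model a given review actually used.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Study:
    """Hypothetical record for one study included in a review."""
    doi: str
    effect: float  # effect estimate, e.g. a log odds ratio
    se: float      # standard error of that estimate


def normalize_doi(doi: str) -> str:
    """Lower-case a DOI and strip a resolver prefix, so that
    'https://doi.org/10.1/ABC' and '10.1/abc' compare equal."""
    doi = doi.strip().lower()
    for prefix in ("https://doi.org/", "http://doi.org/", "doi:"):
        if doi.startswith(prefix):
            doi = doi[len(prefix):]
    return doi


def flag_retracted(studies, retracted_dois):
    """Split studies into (kept, flagged) using a list of retracted DOIs."""
    retracted = {normalize_doi(d) for d in retracted_dois}
    kept = [s for s in studies if normalize_doi(s.doi) not in retracted]
    flagged = [s for s in studies if normalize_doi(s.doi) in retracted]
    return kept, flagged


def pooled_fixed_effect(studies):
    """Inverse-variance fixed-effect pooled estimate and its standard error."""
    if not studies:
        raise ValueError("no studies left to pool")
    weights = [1.0 / s.se ** 2 for s in studies]
    total = sum(weights)
    estimate = sum(w * s.effect for w, s in zip(weights, studies)) / total
    return estimate, math.sqrt(1.0 / total)


# Usage with made-up numbers: the third study has since been retracted.
included = [
    Study("10.9999/hypothetical.001", effect=0.20, se=0.10),
    Study("10.9999/hypothetical.002", effect=0.35, se=0.15),
    Study("https://doi.org/10.9999/HYPOTHETICAL.003", effect=0.90, se=0.10),
]
retracted = ["10.9999/hypothetical.003"]

kept, flagged = flag_retracted(included, retracted)
before, _ = pooled_fixed_effect(included)
after, _ = pooled_fixed_effect(kept)
print(len(flagged), round(before, 3), round(after, 3))  # 1 0.514 0.246
```

Even in this toy case, dropping one retracted study roughly halves the pooled estimate, which is why the guidance asks for results to be recalculated rather than simply annotated.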

Even now, this kind of reanalysis often fails to happen, presumably because the original review authors have limited resources and little incentive. In one assessment of systematic reviews of pharmaceutical compounds tested in clinical trials, retracted papers continued to be cited in 89% of the reviews one year after the review authors had been notified of the retraction7. With the ever-increasing production of both legitimate and spurious scientific literature, the volume of papers is likely to outstrip researchers' ability to maintain an accurate picture of what the data show (see ‘More papers, more retractions’).

Source: Data from OpenAlex (https://openalex.org/).

So, what system might enable the continual and rapid removal — at scale — of fraudulent or otherwise problematic papers from databases?

Although not developed with this goal in mind, our work on the Conservation Evidence project — an information resource hosted by the University of Cambridge, UK, to support decisions about how to maintain and restore global biodiversity — has convinced us that a network of AI-enabled, continually updated evidence databases is one possible solution.

As part of this project, all of the authors of this article have been involved in developing subject-wide evidence synthesis. The aim here is to identify literature containing information that is relevant to a broad theme. For the Conservation Evidence project, this is the effectiveness of management actions for biodiversity conservation.
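To make the idea concrete, here is a toy sketch of a subject-wide, ‘living’ evidence store. Everything in it (the class and field names, the three-way outcome coding, the example actions) is a hypothetical simplification, not the Conservation Evidence project's actual schema. What it illustrates is the architecture: findings are extracted once into a shared database, a retraction removes a study's findings in one place, and any review generated afterwards from that database automatically excludes the retracted work.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    """Hypothetical record of one result extracted from one study."""
    study_id: str   # identifier of the source study
    action: str     # management action tested, e.g. "mowing"
    direction: str  # "benefit", "harm" or "no effect"


class LivingEvidenceDatabase:
    """Toy subject-wide store: findings are added as papers appear and
    dropped as soon as their study is retracted."""

    def __init__(self):
        self._findings = {}      # study_id -> list of Finding
        self._retracted = set()  # study_ids that must never be re-admitted

    def add_study(self, study_id, findings):
        if study_id in self._retracted:
            return  # silently refuse to re-admit a retracted study
        self._findings[study_id] = list(findings)

    def retract(self, study_id):
        self._retracted.add(study_id)
        self._findings.pop(study_id, None)

    def review(self, action):
        """Tally outcome directions for one action across current studies."""
        return Counter(
            f.direction
            for findings in self._findings.values()
            for f in findings
            if f.action == action
        )


# Usage with invented studies: S3 is later retracted.
db = LivingEvidenceDatabase()
db.add_study("S1", [Finding("S1", "mowing", "benefit")])
db.add_study("S2", [Finding("S2", "mowing", "no effect")])
db.add_study("S3", [Finding("S3", "mowing", "benefit"),
                    Finding("S3", "herbicide", "harm")])
print(dict(db.review("mowing")))
db.retract("S3")
print(dict(db.review("mowing")))
```

The design choice here is that a review is a query over the current database rather than a stored document, so no one has to remember to rerun it after a retraction; the next query simply no longer sees the withdrawn study.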
