Some of the risks of large language models can be mitigated if the tools are subjected to peer review.Credit: Matteo Della Torre/NurPhoto/Getty

None of the most widely used large language models (LLMs) that are rapidly upending how humanity is acquiring knowledge has faced independent peer review in a research journal. It’s a notable absence. Peer-reviewed publication aids clarity about how LLMs work, and helps to assess whether they do what they purport to do.

Read the paper: DeepSeek-R1 incentivizes reasoning in LLMs through reinforcement learning

That changes with the publication1 in Nature of details regarding R1, a model produced by DeepSeek, a technology firm based in Hangzhou, China. R1 is an open-weight model, meaning that, although researchers and the public do not get all of its source code and training data, they can freely download, use, test and build on it without restriction. The value of open-weight artificial intelligence (AI) is becoming more widely recognized. In July, US President Donald Trump’s administration said that such models are “essential for academic research”. More firms are releasing their own versions.

Since R1’s release in January on Hugging Face, an AI community platform, it has become the platform’s most-downloaded model for complex problem-solving. Now, the model has been reviewed by eight specialists to assess the originality, methodology and robustness of the work. The paper is being published alongside the reviewer reports and author responses. All of this is a welcome step towards transparency and reproducibility in an industry in which unverified claims and hype are all too often the norm.

AI can learn to show its workings through trial and error

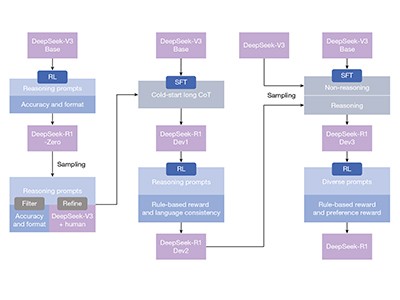

DeepSeek’s paper focuses on the technique that the firm used to train R1 to ‘reason’. The researchers applied an efficient and automated version of a ‘trial, error and reward’ process called reinforcement learning. In this, the model learns reasoning strategies, such as verifying its own working out, without being influenced by human ideas about how to do so.

In January, DeepSeek also published a preprint that outlined the researchers’ approach and the model’s performance on an array of benchmarks2. Such technical documents, which are often called model or system cards, can vary wildly in the information they contain.

In peer review, by contrast, rather than receive a one-way flow of information, external experts can ask questions and request more information in a collaborative process overseen and managed by an independent third party: the editor. That process improves a paper’s clarity, ensuring that authors justify their claims. It won’t always lead to major changes, but it improves trust in a study. For AI developers, this means that their work is strengthened and therefore more credible to different communities.

The peer-review crisis: how to fix an overloaded system

Peer review also provides a counterbalance to the practice of AI developers marking their own homework by choosing benchmarks that show their models in the best light. Benchmarks can be gamed to overestimate a model’s capabilities, for instance, by training on data that includes example questions and answers, allowing the model to learn the correct response3.

In DeepSeek’s case, referees raised the question of whether this practice might have occurred. The firm provided details of its attempts to mitigate data contamination and included extra evaluations using benchmarks published only after the model had been released.

Peer review led to other important changes to the paper. One was to ensure that the authors had addressed the model’s safety. Safety in AI means avoiding unintended harmful consequences, from mitigating inbuilt biases in outputs to adding guardrails that prevent AIs from enabling cyberattacks. Some see open models as less secure than proprietary models, because, once downloaded by users, they are outside of the developers’ control (that said, open models also allow a wider community to understand and fix flaws).

How China created AI model DeepSeek and shocked the world

Reviewers of R1 pointed out a lack of information about safety tests: for example, there were no estimates of how easy it would be to build on R1 to create an unsafe model. In response, DeepSeek’s researchers added important details to the paper, including a section outlining how they evaluated the model’s safety and compared it with rival models.

Firms are starting to recognize the value of external scrutiny. Last month, OpenAI and Anthropic, both based in San Francisco, California, tested each other’s models using their own internal evaluation processes. Both found issues that had been missed by their developers. In July, Paris-based Mistral AI released results of an environmental assessment of its model, in collaboration with external consultants. Mistral hopes that this will improve transparency of reporting standards across the industry.

Given the rapid pace at which AI is developing and being unleashed on society, these efforts are important steps. But most lack the independence of peer-reviewed research, which, despite its limitations, represents a gold standard for validation.

Scientists flock to DeepSeek: how they’re using the blockbuster AI model

Some companies worry that publishing could give away intellectual property — a risk, given the huge financial investment that such models have received. But, as shown with Nature’s publication of Google’s medical LLM Med-PaLM, peer review is possible for proprietary models4.

Peer reviews relying on independent researchers is a way to dial back hype in the AI industry. Claims that cannot be verified are a real risk for society, given how ubiquitous this technology has become. We hope, for this reason, that more AI firms will submit their models to the scrutiny of publication. Review doesn’t mean giving outsiders access to company secrets. But it does mean being prepared to back up statements with evidence and ensuring that claims are validated and clarified.