Methodology and background

The LCA method used in this study compares the environmental impacts of cold-plate-cooled data centres (CPD) and ICDs with those of ACDs. Immersion cooling included both one-phase (1P-ICD) and two-phase cooling (2P-ICD). This cradle-to-grave study (Fig. 3) characterizes the GHG emissions, primary energy and blue water consumption of the CPD, 1P-ICD, 2P-ICD and ACD by analysing the infrastructure components listed in Table 2. GHG emissions were quantified using the IPCC’s Fifth Assessment Report (AR5) method with a 100-year timescale, excluding biogenic carbon, and are measured in kilograms of carbon dioxide equivalent (kgCO2e). Biogenic carbon emissions originate from biological sources within the natural carbon cycle, such as plants, trees and soil; therefore, excluding them restricts the quantified GHG emissions to those from fossil sources. Although biogenic carbon is part of the natural cycle, fossil-derived emissions release otherwise sequestered carbon to the atmosphere. The lower heating value (or net calorific value) was used to determine the primary energy from non-renewable resources, which is measured in megajoules (MJ) and represents energy use from fossil resources that cannot be replenished. The blue water consumption metric captures the amount of water consumed by the system, that is, withdrawals less any water returned to the ecosystem in a usable form. The study models the production, use phase and EOL of all components over the lifetime of the data centre.

We estimated the use phase from the electricity consumed by the data centre, modelled as supplied by the 2021 US grid. The US electric grid is largely powered by fossil fuels, namely, coal (22% in 2021) and natural gas (38% in 2021) (ref. 35), which contribute the most to GHG emissions and primary energy demand. In 2015, thermoelectric power was the largest consumer of water at 41% (ref. 36). We estimated the EOL impacts for the previously mentioned components based on the extent to which components are recycled. Primary data are from Microsoft, and secondary data are from EPDs wherever possible. We used LCA FE and ecoinvent 3.6 data when primary or EPD data were unavailable.

Data centres provide cloud services delivered on demand over the internet. Services include everyday applications such as Microsoft 365, storage and server infrastructure, and web-based environments for cloud apps. These services are performed in the cloud and require specific levels of memory and processing. The smallest set of operations over a given amount of memory and processing capacity is known as a virtual core (Vcore). This study used the common functional unit of 1 Vcore to analyse all cooling technologies studied because it is the fundamental unit that represents the operations carried out to provide the different cloud services. A server Vcore results from the relationship between physical cores, logical cores and packing density. A physical core (also called a processing unit), or simply a core, is a well-partitioned piece of logic capable of independently performing all functions of a processor, that is, the CPU in the case of a general-purpose microprocessor. A single physical core may correspond to one or more logical cores. The number of logical cores is the number of physical cores times the number of threads that can run on each core. Packing density is the number of devices (such as logic circuits) or integrated circuits per unit area of a silicon chip.

$$1\,{\rm{Physical}}\;{\rm{core}}=2\,{\rm{Logical}}\;{\rm{cores}}=2\,{\rm{V}}_{{\rm{cores}}}\times a$$

where a is the packing density, with 0 < a < 1.5.
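The relationship above can be sketched in a few lines of Python. This is only an illustrative reading of the equation: it assumes that a physical core running two threads yields 2 × a Vcores, and the function name and the default of two threads per core are hypothetical choices, not values taken from the study.

```python
def vcores_from_physical_cores(physical_cores: int,
                               threads_per_core: int = 2,
                               packing_density: float = 1.0) -> float:
    """Estimate the Vcore count under the assumed reading of the equation.

    Assumption: Vcores = logical cores x packing density (a),
    where logical cores = physical cores x threads per core.
    """
    if not 0 < packing_density < 1.5:
        raise ValueError("packing density must satisfy 0 < a < 1.5")
    logical_cores = physical_cores * threads_per_core
    return logical_cores * packing_density


# Hypothetical server with 48 physical cores and a packing density of 1.0
print(vcores_from_physical_cores(48, 2, 1.0))
```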

The impacts across all these stages are then aggregated to represent the impact of the data centre over its 15-year lifetime. Some individual components in the data centre were modelled based on the lifetime provided by Microsoft or specified in the documentation. For servers (compute, networking and storage), we assumed a 6-year lifetime based on expert opinion from Microsoft. We annualized support equipment based on the equipment lifetime provided in the EPDs or LCAs. Any support equipment modelled in LCA FE, using data from the ecoinvent 3.6 or LCA FE databases, is assumed to have the same lifetime as the data centre, that is, 15 years. We annualized the results using the lifetime and then normalized using the total number of Vcores.
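As a minimal sketch of the annualization and normalization described above, the following Python snippet divides each component's embodied impact by its own lifetime, adds an annual use-phase impact and divides by the number of Vcores. The class, the function name and all numerical values are hypothetical and serve only to illustrate the arithmetic.

```python
from dataclasses import dataclass


@dataclass
class Component:
    name: str
    embodied_impact: float  # production plus EOL impact, e.g. in kgCO2e
    lifetime_years: float   # e.g. 6 for servers, 15 for the building


def annual_impact_per_vcore(components, annual_use_phase_impact, total_vcores):
    """Annualize embodied impacts by lifetime, add the use phase, normalize per Vcore."""
    annual_embodied = sum(c.embodied_impact / c.lifetime_years for c in components)
    return (annual_embodied + annual_use_phase_impact) / total_vcores


# Hypothetical inputs, not values from the study
parts = [Component("servers", 600.0, 6), Component("building", 1500.0, 15)]
print(annual_impact_per_vcore(parts, annual_use_phase_impact=800.0, total_vcores=100))
```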

The following sections explain the parts of the data centre and how they were modelled. Table 3 shows the relative difference in the number of components between the CPDs, ICDs and ACDs.

Building production and EOL

The building shell was modelled based on the bills of material (BOM) of Microsoft and Tally. The LCA software Tally Environmental Impact Tool (https://choosetally.com/overview/) was used to model the GHG emissions and the primary energy demand associated with the building shell, whereas blue water consumption was modelled in LCA FE based on the materials used to make the data centre. The concrete associated with the pathways and floor slabs around the ACDs, CPDs, 1P-ICDs and 2P-ICDs was included in the model. We assumed that the concrete was 5,000 psi and of an average depth of 8 in. The databases used to model the building materials were ecoinvent 3.6 and LCA FE. The analysis excluded any interior non-structural partitions, ceilings and equipment.

EOL GHG emissions and energy impacts were also modelled in Tally based on building materials. Water EOL impacts were modelled in LCA FE based on building materials. For water EOL impacts, the Environmental Protection Agency (EPA) data were used for metal recycling and landfill rates. All other materials were assumed to be landfilled.

Server production and EOL

The compute, network and storage server models were built in LCA FE. The compute, networking and storage servers contribute 70%, 10% and 20% to the data centre IT capacity, respectively. The compute server model was built based on the BOM provided by Microsoft. The compute server includes a processor, memory module, solid-state drives (SSDs), chassis, field-programmable gate array (FPGA), assembly, riser card, heat sinks, clips, inserts and cables. The storage servers consist of just a bunch of drives (JBOD) and head nodes. The JBOD consists of processors, hard disk drive (HDD), SSD, chassis, FPGA and motherboard. Each head node is the same as that of a compute server. Owing to data gaps, the network server is assumed to be equivalent to 1.3 times the embodied impacts of a compute server, based on internal expertise.

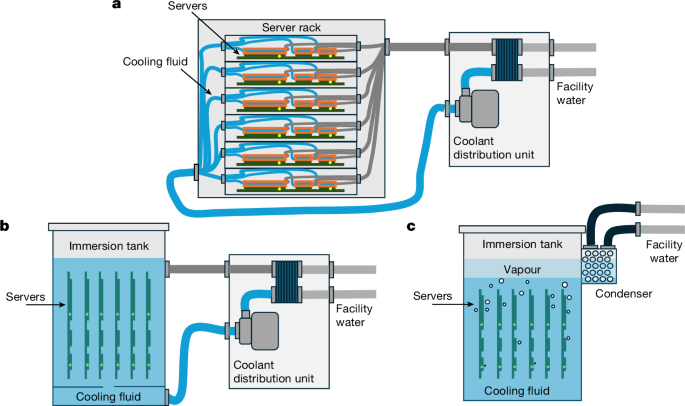

All server types were modelled as the same between the ACDs, cold-plate data centres and ICDs, except that the cold-plate servers included copper plates, tubing, manifold and CDUs, whereas the immersion-cooled servers had no heat removal devices. The immersion-cooled servers were modelled as air-cooled servers without fans, heat sinks or baffles. The embodied impacts of the air-cooled rack were scaled based on the number of servers.

The BOM consisted of electronic and non-electronic elements (structural elements of the server). The production of electronic elements was modelled using the LCA FE electronics extension database (for example, for resistors, transistors, capacitors, printed wiring board and IC). When an exact match for an electronic component was unavailable, we identified proxies and scaled based on the package type of the component and the die area. All electronics impacts in LCA FE are based on research that changed the industry48. It is important to note that this research is two decades old, and it is crucial to have newer data for electronics to reflect current technologies. The non-electronics elements were modelled based on the material type and weight of the component. For example, if there was a steel sheet for the enclosure, then the enclosure was modelled using the steel weight. The server memory was modelled using an ecoinvent memory dataset, because a datasheet for the memory was not publicly available. The SSDs were conservatively modelled as a 16-layer printed circuit board (PCB) of a specific dimension, a silicon wafer and dynamic random-access memory (DRAM). The wafer was estimated as one-third the area of the PCB, and the remaining two-thirds was the DRAM.

The EOL of all servers is modelled as 100% recycling. All server components are assumed to be recycled after 6 years. The electronics components are modelled as the recovery of precious metals, whereas the non-electronic components are modelled as material recycling. All EOL models were weight-based for each component.

Rack and tank production and EOL

The rack or server rack cabinet in an ACD and cold plate data centre houses and organizes all the important IT systems, including servers required to provide cloud and data services. The rack is enclosed to ensure the safety and security of the server. The tank performs the same function as a rack in the ICDs, but includes cooling fluid. All IT equipment is immersed in the fluid, which removes the need for fan-based cooling. The racks and tanks are assumed to have the same lifetime as the building, which is 15 years.

The production of the rack and tank was modelled based on the materials used to construct them. Raw material type and weight data for both the rack and the tank were obtained from Microsoft suppliers. The power distribution unit (PDU) and the power and management distribution unit are excluded from the rack and tank because no data were available for the ICDs. The assembly and testing energy for integrating the rack and tank with the servers is also accounted for (values provided by Microsoft). Because the production of racks and tanks was modelled primarily based on input metal components, the EOL impacts were calculated based on 100% recycling of these input metals.

Support equipment production and EOL

Support equipment within the data centre consists of the mechanical and electrical equipment required for cooling and power. Support equipment was primarily modelled using EPDs and LCAs from equipment lists and BOM provided by Microsoft. If no EPDs or LCAs were available, secondary data from LCA FE were used. Support equipment modelled in an ACD and CPD included AHUs, generators, uninterruptible power supplies (UPSs), transformers, PDUs, bus ducts, bus plugs and e-houses. Support equipment modelled in the ICDs included AHUs, generators, UPSs, transformers, PDUs and adiabatic coolers. Equipment was scaled by capacity (for example, cubic feet per minute, kilovolt-amperes and kilowatts). Several EPDs or LCAs reported water use rather than water consumption. In those cases, water use was taken as a proxy for water consumption. If no water data were available within an EPD or LCA, water data were proxied using data from LCA FE. If no data were found for a piece of equipment, the closest material or component proxies available were used to estimate the impacts. The following proxies were used because of a lack of data:

- Adiabatic cooler: proxied with a chiller and radiator.
- e-house: proxied with the weight of steel based on product dimensions.
- Bus ducts: proxied with copper.

EOL impacts were modelled using data from the EPDs or LCAs. If no EOL information was provided, EOL impacts were estimated using the weight of component materials. EPA data were used for metal recycling and landfill rates. Transformer oil was treated as hazardous waste, and all other waste was considered wood products or inert material and landfilled.
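The capacity scaling mentioned for support equipment can be illustrated with a short Python sketch. It assumes that impacts scale linearly with rated capacity, which is a simplification introduced here for illustration; the function name and the example values are hypothetical.

```python
def scale_impact_by_capacity(reference_impact: float,
                             reference_capacity: float,
                             required_capacity: float) -> float:
    """Scale an EPD or LCA impact to the required capacity.

    Assumption: the impact is proportional to rated capacity
    (for example, kVA for a transformer or kW for a generator).
    """
    if reference_capacity <= 0:
        raise ValueError("reference capacity must be positive")
    return reference_impact * required_capacity / reference_capacity


# Hypothetical: an EPD reports 1,000 kgCO2e for a 500 kVA unit; 1,500 kVA is required
print(scale_impact_by_capacity(1000.0, 500.0, 1500.0))
```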

Use phase

Use phase energy includes the electricity consumed to operate IT and non-IT equipment at a data centre. The annual use phase energy consumption was calculated based on the IT capacity of the data centre, its average PUE and the server use rate of the data centre (also referred to as load factor).

$${\rm{Annual}}\;{\rm{energy}}\,({\rm{MWh}}\,{{\rm{yr}}}^{-1})={\rm{IT}}\;{\rm{capacity}}\,({\rm{MW}})\times {\rm{load}}\;{\rm{factor}}\times {\rm{PUE}}\times 8{,}760\,({\rm{h}}\,{{\rm{yr}}}^{-1})$$

At a data centre scale, the IT capacity is the capacity of the IT for which the data centre is built and is expressed in MW. The PUE is a ratio that describes how efficiently a data centre uses energy, and it gives the ratio of the total amount of energy used by the entire data centre to the energy delivered to the IT equipment. Finally, the load factor or operational capacity factor is the percentage of IT capacity that a specific data centre uses. The annual energy is modelled for the design capacity using a conservative assumption for the utilization rate. This conservative assumption helps in determining the capacity planning and the total number of servers and supporting equipment in the data centre.
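The annual energy equation translates directly into code. The sketch below is a minimal Python version; the function name and the example inputs are hypothetical and are not the values used in the study.

```python
HOURS_PER_YEAR = 8760


def annual_energy_mwh(it_capacity_mw: float, load_factor: float, pue: float) -> float:
    """Annual energy (MWh/yr) = IT capacity (MW) x load factor x PUE x 8,760 h/yr."""
    if not 0 <= load_factor <= 1:
        raise ValueError("load factor must be a fraction between 0 and 1")
    if pue < 1:
        raise ValueError("PUE cannot be below 1 by definition")
    return it_capacity_mw * load_factor * pue * HOURS_PER_YEAR


# Hypothetical data centre: 10 MW IT capacity, 50% load factor, PUE of 1.2
print(annual_energy_mwh(10.0, 0.5, 1.2))
```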

Blue water consumption refers to surface and groundwater that the data centre uses during operation, including water use for cooling, irrigation, restrooms and cafés. Non-cooling water use (that is, restrooms, cafés and irrigation) was excluded from this analysis as it was assumed to be the same for ACDs, cold-plate-cooled data centres and ICDs because similar staffing requirements are anticipated for all types of data centre. Temperature-based use scenarios were modelled for air-cooled, cold plate-cooled and immersion-cooled scenarios. Two data centre locations in which temperatures can rise above a certain threshold were chosen to illustrate the differences in water required for cooling in a dry and a humid climate. When the temperature breaches the threshold, additional cooling may be required.

Cold-plate-cooled, immersion-cooled and air-cooled models all use air-handling units (AHUs) with evaporative cooling to provide cooling for the server rooms. Apart from AHUs for the server rooms, the cold-plate design includes copper tubing and CDUs, whereas the immersion-cooled model uses fluid coolers to reject server heat by a heat transfer fluid. In most climates, the fluid coolers can provide sufficient cooling without water (dry operation). However, in some environments, additional cooling capacity is required for parts of the year, in which case adiabatic cooling (wet operation) is used. Adiabatic systems pre-cool warm outdoor air with water when the outdoor air is above a user-specified temperature, taking advantage of the temperature decrease when water changes phases from liquid to vapour20,49. Adiabatic systems use more water to cool but have higher cooling efficiency than dry cooling systems, especially in warm, dry climates50. In this analysis, it was assumed that when the outdoor dry-bulb temperature is above 35 °C, the adiabatic cooling capacity of the fluid coolers is enabled. The dry-bulb temperature, or ambient temperature, is the air temperature measured by a thermometer not affected by the moisture of the air (https://www.engineeringtoolbox.com/dry-wet-bulb-dew-point-air-d_682.html). Fewer air-handling units and adiabatic coolers result in less water use for cooling in ICDs. A key metric for water use is cycles of concentration, a measure of the concentration of dissolved solids in the cooling tower process water. As water evaporates from a cooling tower, it leaves behind dissolved solids, which increase in concentration in the process water until there is a blow-down (flushing the system to prevent the buildup of solids). As the dissolved solids increase, so do the cycles of concentration (https://aquaclearllc.com/technical-info/cooling-tower-cycles-of-concentration-and-efficiency/). 
Higher cycles of concentration mean that less water is discharged as blow-down, so less fresh make-up water is required, resulting in lower overall water use.
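The two water-related quantities discussed above can be sketched in Python. The first function counts the hours in which the adiabatic (wet) mode would be enabled under the 35 °C dry-bulb assumption. The second applies the standard cooling-tower water balance, in which blow-down equals evaporation divided by (cycles of concentration − 1), with drift losses neglected. The function names and sample values are hypothetical, and this is not the water model used in the study.

```python
def wet_operation_hours(hourly_dry_bulb_c, threshold_c: float = 35.0) -> int:
    """Count hours in which the outdoor dry-bulb temperature exceeds the threshold."""
    return sum(1 for temperature in hourly_dry_bulb_c if temperature > threshold_c)


def makeup_water(evaporation: float, cycles_of_concentration: float) -> float:
    """Make-up water = evaporation + blow-down (drift neglected).

    Blow-down = evaporation / (cycles of concentration - 1).
    """
    if cycles_of_concentration <= 1:
        raise ValueError("cycles of concentration must be greater than 1")
    blowdown = evaporation / (cycles_of_concentration - 1)
    return evaporation + blowdown


# Hypothetical values: four hourly temperatures and 100 units of evaporated water
print(wet_operation_hours([30.0, 35.0, 36.0, 40.0]))
print(makeup_water(100.0, 2.0), makeup_water(100.0, 5.0))
```

For a fixed evaporation load, this balance yields less make-up water as the cycles of concentration rise.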

Design assumptions for cooling technologies

Cold-plate technology is a functional hybrid of air-cooling and two-phase immersion cooling technologies. At the chip level, it has cooling ability similar to two-phase cooling, but at the data centre level, it could have a higher PUE than two-phase cooling because it still requires air cooling. Cold-plate technology uses racks, so the design for cold plates uses racks with the same power as two-phase immersion tanks. The compute server power for cold-plate technology is the average of the power of air-cooled and two-phase immersion servers. The PUE is also an average of the air-cooled and two-phase immersion PUE. To account for the cold-plate copper, tubing, manifolds and CDUs, the cold-plate embodied rack impacts were scaled by a suitable factor to be equivalent to the embodied impacts of one cold-plate rack, including the copper, tubing, manifolds and CDUs. Cold-plate cooling typically uses water or a water–propylene glycol mixture as the cooling fluid. For the model, the coolant PG25 was modelled using a water–propylene glycol mixture (75:25). EOL impacts were modelled as the treatment of a spent water–glycol mixture.
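The averaging assumptions for the cold-plate design can be written out as a short Python sketch. The function name and all input values are hypothetical placeholders; only the averaging rule comes from the text above.

```python
def cold_plate_design(air_server_power_w: float,
                      two_phase_server_power_w: float,
                      air_pue: float,
                      two_phase_pue: float) -> dict:
    """Derive cold-plate server power and PUE as averages of the
    air-cooled and two-phase immersion values."""
    return {
        "server_power_w": (air_server_power_w + two_phase_server_power_w) / 2,
        "pue": (air_pue + two_phase_pue) / 2,
    }


# Hypothetical inputs, not values from the study
print(cold_plate_design(700.0, 900.0, air_pue=1.2, two_phase_pue=1.1))
```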

The one-phase immersion cooling design has the same tank power, compute server power, compute server count, data centre architecture, data centre footprint and PUE as the two-phase immersion cooling design. The fluid used in one-phase immersion cooling is different from the two-phase immersion fluid. The one-phase immersion fluid is modelled as a hydrocarbon derived from natural gas. To model the fluid, natural gas is produced and then converted to liquefied natural gas. Finally, the liquefied natural gas is fractionated into hydrocarbons. After the useful lifetime of the one-phase immersion fluid, the fluid is assumed to be re-refined and reused or repurposed. EOL impacts after cycles of reuse and repurposing were modelled as incineration. The fluid quantity used for one-phase is the same as for two-phase, but the heat removal efficiency of the one-phase fluid is lower than that of the two-phase fluid, so the overclocking rate under the best-case scenario for one-phase immersion is capped at 10%. The tank embodied impacts for the one-phase fluid are the same as those for two-phase immersion cooling because the one-phase immersion tank has a higher number of CDUs, although the tank itself is simpler.

The two-phase immersion fluid and EOL impacts were modelled by the manufacturer and remain confidential.

Data quality assessment

A quantitative uncertainty assessment is not possible across all the datasets that are used, in part because standard deviation is not accounted for in most of the datasets available for LCA FE. Instead, a pedigree matrix was developed to qualitatively assess the data quality, which in turn provides an indication of variability and uncertainty in the model.

The quality of fit, or representativeness, of model inputs was evaluated across five indicator categories: reliability, completeness, temporal correlation, geographical correlation and technological correlation, as shown in Extended Data Table 1. For each indicator, a score of 1–5 was assigned to each model input, where 1 indicates high representativeness of the product system and 5 indicates low representativeness51. The assessment was completed across life cycle stages for a final average score in each indicator.
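A minimal Python sketch of the averaging step is given below. It assumes one score per indicator for each life cycle stage and takes a simple unweighted mean. The stage names, the scores and the function name are hypothetical and do not reproduce Extended Data Table 1.

```python
from statistics import mean

INDICATORS = ("reliability", "completeness", "temporal",
              "geographical", "technological")


def average_pedigree_scores(stage_scores: dict) -> dict:
    """Average each indicator's 1-5 score across life cycle stages (unweighted)."""
    for stage, scores in stage_scores.items():
        for indicator in INDICATORS:
            if not 1 <= scores[indicator] <= 5:
                raise ValueError(f"{stage}/{indicator}: score must be between 1 and 5")
    return {indicator: mean(scores[indicator] for scores in stage_scores.values())
            for indicator in INDICATORS}


# Hypothetical scores for two stages
example = {
    "production": {"reliability": 2, "completeness": 3, "temporal": 3,
                   "geographical": 2, "technological": 3},
    "use phase": {"reliability": 2, "completeness": 2, "temporal": 1,
                  "geographical": 2, "technological": 2},
}
print(average_pedigree_scores(example))
```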

Geographical resolutions have seven levels: global, continental, sub-region, national, province/state/region, county/city and site-specific51. The sub-region level refers to regional descriptions (for example, the United Arab Emirates), and the site-specific level, the most granular level, includes the physical address of the site. The geographical correlation is scored based on the level of the input data and the level of the dataset that is available.

Technological correlation is represented using four categories: process design, operational conditions, material quality and scalability. Process design refers to the set of conditions in a process that affect the product. Operational conditions refer to variable parameters such as heat, temperature and pressure that are needed to make the product. Material quality refers to the type and quality of feedstock material. Scalability refers to the output per unit time or per line that needs to be described.

As described in the Supplementary Information, the data quality across all the phases that were modelled is between good and average: the phases scored between 2 and 3 across all data quality categories. Overall, the assessment has a score of 3 for data quality, which indicates that the variability in data is not large and is manageable. A key reason for this is the large impact that the use phase has on all three impact categories. Because the data quality for the use phase is consistently high and its uncertainty is therefore low, the uncertainty of the overall results is also low.

PFAS: LCAs to inform sustainability regulations

As shown in this LCA, immersion cooling offers the most efficient cooling and heat rejection; however, all available fluid options have their challenges, including regulatory ones. An important application of LCAs should be in the design of regulatory approaches. This is important because of emerging PFAS regulations in the European Union and the United States, which will restrict the use of PFAS in two-phase immersion cooling (and in a wider range of industries and products, including firefighting, medicine and food packaging).

The chemical properties and the regulatory landscape for cooling fluids used in the different technologies are further discussed in this section.

Fluids that are important for two-phase immersion cooling are classified as per- and polyfluoroalkyl substances (PFAS), a group of more than 4,000 synthetic chemicals used in industries and products such as firefighting, medicine and food packaging. Emerging PFAS regulations in the European Union and the United States are a potential barrier to their use in two-phase immersion cooling. On 7 February 2023, the European Chemicals Agency published the restriction proposal for PFAS substances26. Similarly, several US states are also working to restrict or ban non-essential PFAS use. A broad ban on more than 10,000 chemicals might be impractical, but science-based regulations based on overall LCA, persistence, bioaccumulation and toxicity may be effective.

The limitations on PFAS use may directly influence data centre immersion-cooling efforts. However, LCAs can calculate PFAS leakage impacts, help mitigate their associated risks and establish industry best practices such as sealed tanks and multiple levels of fluid containment.

For end users, LCAs are powerful tools in reducing GHG emissions, water consumption and energy demand. A holistic, multi-pronged risk-mitigation approach to use liquid-cooling fluids at scale includes the following:

- alleviating the impacts of accidental spills by using chemicals that will not partition into groundwater and have lower solubility and reactivity with water streams;
- ensuring that the fluid creation process follows all environmental, health and safety protocols, and that the production plant always acts as a good neighbour to its surrounding community;
- tracking transportation and establishing the shortest possible routes;
- using containers and secondary containers to prevent direct ground spills;
- situating the data centres away from large bodies of water (above or below ground) in zones with low natural disaster risk and drier climates;
- using asphalt and concrete barriers to catalyse evaporation if spill prevention fails; and
- minimizing server and tank fluid usage, designing tanks for minimal fluid loss, implementing primary and secondary spill containment, monitoring vapour loss, implementing mechanical and chemical vapour traps at the exhaust systems and providing adequate air circulation in the data centre.