Will computers ever match or surpass human-level intelligence — and, if so, how? When the Association for the Advancement of Artificial Intelligence (AAAI), based in Washington DC, asked its members earlier this year whether neural networks — the current star of artificial-intelligence systems — alone will be enough to hit this goal, the vast majority said no. Instead, most said, a heavy dose of an older kind of AI will be needed to get these systems up to par: symbolic AI.

Sometimes called ‘good old-fashioned AI’, symbolic AI is based on formal rules and an encoding of the logical relationships between concepts¹. Mathematics is symbolic, for example, as are ‘if–then’ statements and computer coding languages such as Python, along with flow charts or Venn diagrams that map how, say, cats, mammals and animals are conceptually related. Decades ago, symbolic systems were an early front-runner in the AI effort. However, in the early 2010s, they were vastly outpaced by more-flexible neural networks. These machine-learning models excel at learning from vast amounts of data, and underlie large language models (LLMs), as well as chatbots such as ChatGPT.
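As a minimal illustration of how ‘if–then’ rules encode the relationships between cats, mammals and animals, here is a Python sketch of forward chaining, one classic symbolic inference technique. The knowledge base, the cat named Tom and the rule format are hypothetical choices made for this example; real symbolic systems use far richer logics.

```python
# Minimal forward-chaining sketch (illustrative; the facts and rules are hypothetical).
from typing import Dict, List, Tuple

Fact = Tuple[str, str, str]  # (subject, relation, object), e.g. ("Tom", "is_a", "cat")

# Hand-written 'if-then' rules: if X is_a <premise>, then X is_a <conclusion>.
RULES: List[Tuple[str, str]] = [
    ("cat", "mammal"),     # every cat is a mammal
    ("mammal", "animal"),  # every mammal is an animal
]

def forward_chain(facts: List[Fact], rules: List[Tuple[str, str]]) -> Dict[Fact, str]:
    """Apply the rules until no new facts appear, recording why each fact holds."""
    why: Dict[Fact, str] = {fact: "given" for fact in facts}
    changed = True
    while changed:
        changed = False
        for subject, relation, obj in list(why):
            for premise, conclusion in rules:
                new_fact = (subject, "is_a", conclusion)
                if relation == "is_a" and obj == premise and new_fact not in why:
                    why[new_fact] = f"rule: {premise} -> {conclusion}"
                    changed = True
    return why

knowledge = forward_chain([("Tom", "is_a", "cat")], RULES)
for fact, reason in knowledge.items():
    print(fact, "because", reason)
```

Every derived fact carries the rule that produced it, which is the kind of step-by-step traceability that symbolic AI is credited with.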

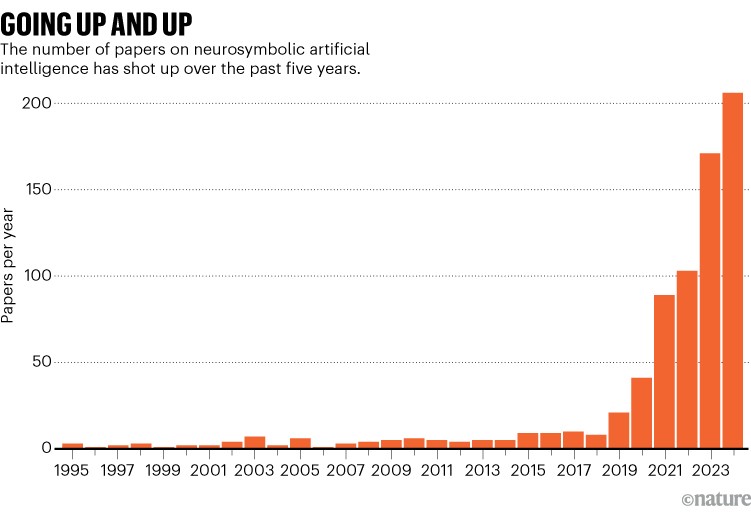

Now, however, the computer-science community is pushing hard for a better and bolder melding of the old and the new. ‘Neurosymbolic AI’ has become the hottest buzzword in town. Brandon Colelough, a computer scientist at the University of Maryland in College Park, has charted the meteoric rise of the concept in academic papers (see ‘Going up and up’). The numbers reveal a spike of interest in neurosymbolic AI that started around 2021 and shows no sign of slowing down².

Plenty of researchers are heralding the trend as an escape from what they see as an unhealthy monopoly of neural networks in AI research, and expect the shift to deliver smarter and more reliable AI.

A better melding of these two strategies could lead to artificial general intelligence (AGI): AI that can reason and generalize its knowledge from one situation to another as well as humans do. It might also be useful for high-risk applications, such as military or medical decision-making, says Colelough. Because symbolic AI is transparent and understandable to humans, he says, it doesn’t suffer from the ‘black box’ syndrome that can make neural networks hard to trust.

Source: updated from ref. 2

There are already good examples of neurosymbolic AI, including Google DeepMind’s AlphaGeometry, a system reported last year³ that can reliably solve geometry problems from maths Olympiads, competitions aimed at talented secondary-school students. But working out how best to combine neural networks and symbolic AI into an all-purpose system is a formidable challenge.

“You’re really architecting this kind of two-headed beast,” says computer scientist William Regli, also at the University of Maryland.

War of words

In 2019, computer scientist Richard Sutton posted a short essay entitled ‘The bitter lesson’ on his blog (see go.nature.com/4paxykf). In it, he argued that, since the 1950s, people have repeatedly assumed that the best way to make intelligent computers is to feed them all the insights that humans have arrived at about the rules of the world, in fields from physics to social behaviour. The bitter pill to swallow, wrote Sutton, is that time and time again, symbolic methods have been outdone by systems that use a ton of raw data and scaled-up computational power to leverage ‘search and learning’. For example, early chess-playing computers that were trained on human-devised strategies were outperformed by those that were simply fed lots of game data.

This lesson has been widely quoted by proponents of neural networks to support the idea that making these systems ever bigger is the best path to AGI. But many researchers argue that the essay overstates its case and downplays the crucial part that symbolic systems can and do play in AI. For example, the best chess program today, Stockfish, pairs a neural network with a symbolic tree of allowable moves.
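The division of labour described here can be sketched in a few lines. The Python below is a toy, not Stockfish’s design: it assumes a hypothetical stone-taking game (remove one or two stones; whoever takes the last stone wins), builds the tree of allowable moves from the game’s explicit rules, and calls a pluggable evaluation function at the edge of the search. The hypothetical `stub_evaluator` is a hand-coded stand-in for the point where an engine would call a trained neural network, and the plain negamax search omits the alpha-beta pruning and many other refinements that real engines use.

```python
from typing import Callable, List

Evaluator = Callable[[int], float]

def legal_moves(pile: int) -> List[int]:
    """Symbolic rules of the toy game: remove 1 or 2 stones, never more than remain."""
    return [m for m in (1, 2) if m <= pile]

def stub_evaluator(pile: int) -> float:
    """Hand-coded stand-in for a learned evaluation network.
    Scores the position for the player to move: piles divisible by 3 are lost."""
    return -1.0 if pile % 3 == 0 else 1.0

def negamax(pile: int, depth: int, evaluate: Evaluator) -> float:
    """Explore the tree of legal moves; hand frontier positions to the evaluator."""
    if pile == 0:
        return -1.0  # the opponent just took the last stone, so the player to move has lost
    if depth == 0:
        return evaluate(pile)  # search frontier: ask the (stand-in) network
    return max(-negamax(pile - m, depth - 1, evaluate) for m in legal_moves(pile))

def best_move(pile: int, depth: int, evaluate: Evaluator) -> int:
    """Pick the move whose resulting position is worst for the opponent."""
    return max(legal_moves(pile), key=lambda m: -negamax(pile - m, depth - 1, evaluate))

print(best_move(4, depth=3, evaluate=stub_evaluator))  # 1, leaving the opponent a pile of 3
```

Swapping `stub_evaluator` for a different function changes how positions are judged without touching the move rules, which is the separation between learned judgement and symbolic search that the Stockfish example points to.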

Neural nets and symbolic algorithms both have pros and cons. Neural networks are made up of layers of nodes with weighted connections that are adjusted during training to recognize patterns and learn from data. They are fast and creative, but they are also prone to making things up and can’t reliably answer questions beyond the scope of their training data.
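To show what ‘weighted connections adjusted during training’ means in the simplest possible case, here is a sketch of a single artificial neuron (a perceptron) learning the logical OR function from four labelled examples. It is a deliberately small toy: real networks stack many layers and are trained by gradient descent with backpropagation, not by the classic perceptron update used here.

```python
from typing import List, Tuple

Example = Tuple[Tuple[int, int], int]  # ((input1, input2), target label)

# Training data for logical OR: the pattern the neuron must pick up from examples.
OR_DATA: List[Example] = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]

def predict(weights: List[int], bias: int, x: Tuple[int, int]) -> int:
    """Fire (output 1) if the weighted sum of the inputs plus the bias is positive."""
    return 1 if weights[0] * x[0] + weights[1] * x[1] + bias > 0 else 0

def train(data: List[Example], epochs: int = 10, lr: int = 1) -> Tuple[List[int], int]:
    """Classic perceptron rule: nudge each weight in proportion to the prediction error."""
    weights, bias = [0, 0], 0
    for _ in range(epochs):
        for x, target in data:
            error = target - predict(weights, bias, x)
            weights[0] += lr * error * x[0]
            weights[1] += lr * error * x[1]
            bias += lr * error
    return weights, bias

weights, bias = train(OR_DATA)
print(weights, bias)                                    # [1, 1] 0
print([predict(weights, bias, x) for x, _ in OR_DATA])  # [0, 1, 1, 1]
print(predict(weights, bias, (5, -7)))  # still returns an answer for an input unlike its training data
```

Nothing in the learned weights says *why* OR behaves as it does; the behaviour is spread across numbers, and the neuron will produce an output for any input at all, including ones far outside what it was trained on.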

Symbolic systems, meanwhile, struggle to capture ‘messy’ concepts such as human language, which require vast rule databases that are difficult to build and slow to search. But their workings are clear, and they are good at reasoning, using logic to apply their general knowledge to fresh situations.

When put to use in the real world, neural networks that lack symbolic knowledge make classic mistakes: image generators might draw people with six fingers on each hand because they haven’t learnt the general concept that hands typically have five; video generators struggle to make a ball bounce around a scene because they haven’t learnt that gravity pulls things downwards. Some researchers blame such mistakes on a lack of data or computing power, but others say that the mistakes illustrate neural networks’ fundamental inability to generalize knowledge and reason logically.

Many argue that adding symbolism to neural nets might be the best — even the only — way to inject logical reasoning into AI. The global technology firm IBM, for example, is backing neurosymbolic techniques as a path to AGI. But others remain sceptical: Yann LeCun, one of the fathers of modern AI and chief AI scientist at tech giant Meta, has said that neurosymbolic approaches are “incompatible” with neural-network learning.

Sutton, who is at the University of Alberta in Edmonton, Canada, and won the 2024 Turing Award, often described as the Nobel prize of computer science, holds firm to his original argument: “The bitter lesson still applies to today’s AI,” he told Nature. This suggests, he says, that “adding a symbolic, more manually crafted element is probably a mistake”.

Gary Marcus, an AI entrepreneur, writer and cognitive scientist based in Vancouver, Canada, and one of the most vocal advocates of neurosymbolic AI, tends to frame this difference of opinion as a philosophical battle that is now being settled in his favour.

Others, such as roboticist Leslie Kaelbling at the Massachusetts Institute of Technology (MIT) in Cambridge, say that arguments over which view is right are a distraction, and that people should just get on with whatever works. “I’m a magpie. I’ll do anything that makes my robots better.”

Mix and match

Beyond the fact that neurosymbolic AI aims to meld the benefits of neural nets with the benefits of symbolism, its definition is blurry. Neurosymbolic AI encompasses “a very large universe”, says Marcus, “of which we’ve explored only a tiny bit”.

There are many broad approaches, which people have attempted to categorize in various ways. One option highlighted by many is the use of symbolic techniques to improve neural nets⁴. AlphaGeometry is arguably one of the most sophisticated examples of this strategy: it trains a neural net on a synthetic data set of maths problems produced using a symbolic computer language, making the solutions easier to check and ensuring fewer mistakes. It combines the two elegantly, says Colelough. In another example, ‘logic tensor networks’ provide a way to encode symbolic logic for neural networks. Statements can be assigned a fuzzy truth value⁵: a number between 0 (false) and 1 (true). This provides a framework of rules to help the system reason.
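The fuzzy-truth idea can be illustrated with a short sketch. It is not the logic tensor networks software itself: it assumes one common choice of real-valued operators (NOT as 1 − a, OR as the probabilistic sum, and ‘a implies b’ built as ‘not a, or b’, which works out to 1 − a + ab) and approximates ‘for all’ with a simple average. The cat and mammal scores are made-up stand-ins for a neural network’s outputs.

```python
from typing import List

def f_not(a: float) -> float:
    """Fuzzy NOT: fully true becomes fully false and vice versa."""
    return 1.0 - a

def f_or(a: float, b: float) -> float:
    """Probabilistic sum: a fuzzy OR that is 1 if either input is 1."""
    return a + b - a * b

def f_implies(a: float, b: float) -> float:
    """'a implies b' read as 'not a, or b'; equals 1 - a + a*b."""
    return f_or(f_not(a), b)

def f_forall(values: List[float]) -> float:
    """Soft 'for all': the mean truth value over every object (a simplifying assumption)."""
    return sum(values) / len(values)

# Hypothetical neural-network outputs for three images: degree of 'is a cat' and 'is a mammal'.
is_cat = [0.9, 0.2, 0.7]
is_mammal = [0.8, 0.9, 0.1]

per_image = [f_implies(c, m) for c, m in zip(is_cat, is_mammal)]
rule_truth = f_forall(per_image)  # truth of 'every cat is a mammal' on this data
loss = 1.0 - rule_truth           # a training signal: low when the rule is satisfied

print([round(t, 2) for t in per_image])  # [0.82, 0.98, 0.37]
print(round(rule_truth, 3))              # 0.723
```

The third image, scored as probably a cat but hardly a mammal, contributes the lowest truth value, so minimizing `loss` would push the network’s outputs towards consistency with the rule. This is the sense in which the logic acts as a framework that guides learning.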

Another broad approach does what some would say is the reverse, using neural nets to finesse symbolic algorithms. One problem with symbolic knowledge databases is that they are often so large that they take a very long time to search: the ‘tree’ of all possible moves in a game of Go, for example, contains about 10¹⁷⁰ positions, far too many to crunch through. Neural networks can be trained to predict the most promising subset of moves, allowing the system to cut down how much of the ‘tree’ it has to search and thus reducing the time it takes to settle on the best move. That’s what Google’s AlphaGo did when it famously beat a top professional Go player.
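The saving from letting a network choose which branches to explore can be seen simply by counting positions. This sketch assumes a toy game with exactly 10 legal moves in every position and a hypothetical `toy_policy` standing in for a trained policy network. It shows only the pruning idea, not AlphaGo’s actual method, which combined policy and value networks with Monte Carlo tree search.

```python
from typing import Callable, List, Optional, Tuple

State = Tuple[int, ...]  # toy position: the sequence of moves played so far
Policy = Callable[[State, int], float]

BRANCHING = 10  # assumed number of legal moves in every position (real Go has far more)
DEPTH = 4       # how many moves ahead the search looks

def legal_moves(state: State) -> List[int]:
    """Symbolic move generator for the toy game: every position allows the same moves."""
    return list(range(BRANCHING))

def toy_policy(state: State, move: int) -> float:
    """Hypothetical stand-in for a trained policy network: how promising a move looks."""
    return 1.0 / (1 + (move + len(state)) % BRANCHING)

def positions_searched(state: State, depth: int, policy: Policy,
                       top_k: Optional[int] = None) -> int:
    """Count positions visited; with top_k set, expand only the policy's top_k moves."""
    if depth == 0:
        return 0
    moves = legal_moves(state)
    if top_k is not None:
        moves = sorted(moves, key=lambda m: policy(state, m), reverse=True)[:top_k]
    return sum(1 + positions_searched(state + (m,), depth - 1, policy, top_k) for m in moves)

print(positions_searched((), DEPTH, toy_policy))           # 11110: the full tree
print(positions_searched((), DEPTH, toy_policy, top_k=3))  # 120: the policy-pruned tree
```

Looking four moves ahead, the full tree holds 10 + 100 + 1,000 + 10,000 = 11,110 positions, whereas keeping only the three most promising moves at each step leaves 3 + 9 + 27 + 81 = 120. The gap widens exponentially with depth, which is why pruning matters so much for a game as large as Go; the price is that a good move the policy underrates is never examined.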