Braghieri, L., Levy, R. & Makarin, A. Social media and mental health. Am. Econ. Rev. 112, 3660–3693 (2022).

Van Bavel, J. J., Robertson, C. E., Del Rosario, K., Rasmussen, J. & Rathje, S. Social media and morality. Annu. Rev. Psychol. 75, 311–340 (2024).

Bail, C. Social-media reform is flying blind. Nature 603, 766 (2022).

Frewer, L. et al. Societal aspects of genetically modified foods. Food Chem. Toxicol. 42, 1181–1193 (2004).

Siegrist, M. & Hartmann, C. Consumer acceptance of novel food technologies. Nat. Food 1, 343–350 (2020).

Scott, S. E., Inbar, Y. & Rozin, P. Evidence for absolute moral opposition to genetically modified food in the United States. Perspect. Psychol. Sci. 11, 315–324 (2016).

Fernbach, P. M., Light, N., Scott, S. E., Inbar, Y. & Rozin, P. Extreme opponents of genetically modified foods know the least but think they know the most. Nat. Hum. Behav. 3, 251–256 (2019).

Cobb, M. D. & Macoubrie, J. Public perceptions about nanotechnology: risks, benefits and trust. J. Nanopart. Res. 6, 395–405 (2004).

Lee, C.-J., Scheufele, D. A. & Lewenstein, B. V. Public attitudes toward emerging technologies: examining the interactive effects of cognitions and affect on public attitudes toward nanotechnology. Sci. Commun. 27, 240–267 (2005).

Danaher, J. & Sætra, H. S. Mechanisms of techno-moral change: a taxonomy and overview. Ethical Theory Moral Pract. 26, 763–784 (2023). This article offers a precise and generative taxonomy of how technology reshapes moral life, providing a conceptual foundation for designing sci-fi-sci scenarios with mechanistic clarity.

Goldin, C. & Katz, L. F. The power of the pill: oral contraceptives and women’s career and marriage decisions. J. Polit. Econ. 110, 730–770 (2002).

Bailey, M. J. More power to the pill: the impact of contraceptive freedom on women’s life cycle labor supply. Q. J. Econ. 121, 289–320 (2006).

Baker, R. Before Bioethics (Oxford Univ. Press, 2013).

Shariff, A., Green, J. & Jettinghoff, W. The privacy mismatch: evolved intuitions in a digital world. Curr. Dir. Psychol. Sci. 30, 159–166 (2021).

Zuboff, S. The Age of Surveillance Capitalism: The Fight for a Human Future at the New Frontier of Power (PublicAffairs, 2019).

Collingridge, D. The Social Control of Technology (Pinter, 1980). This foundational work diagnoses the dilemma of control in technological development, which sci-fi-sci attempts to tackle by generating early empirical insights before lock-in occurs.

Fergnani, A. Mapping futures studies scholarship from 1968 to present: a bibliometric review of thematic clusters, research trends, and research gaps. Futures 105, 104–123 (2019).

Kuosa, T. Evolution of futures studies. Futures 43, 327–336 (2011).

Danaher, J. & Sætra, H. S. Technology and moral change: the transformation of truth and trust. Ethics Inf. Technol. 24, 35 (2022).

Hopster, J. K. & Maas, M. M. The technology triad: disruptive AI, regulatory gaps and value change. AI Ethics 4, 1051–1069 (2024).

Brey, P. Ethics of Emerging Technology 175–191 (Rowman & Littlefield, 2017).

Pohl, F. The great new inventions. Galaxy 27, 6 (1968).

Bonnefon, J.-F., Shariff, A. & Rahwan, I. The social dilemma of autonomous vehicles. Science 352, 1573–1576 (2016).

Shariff, A., Bonnefon, J.-F. & Rahwan, I. Psychological roadblocks to the adoption of self-driving vehicles. Nat. Hum. Behav. 1, 694–696 (2017).

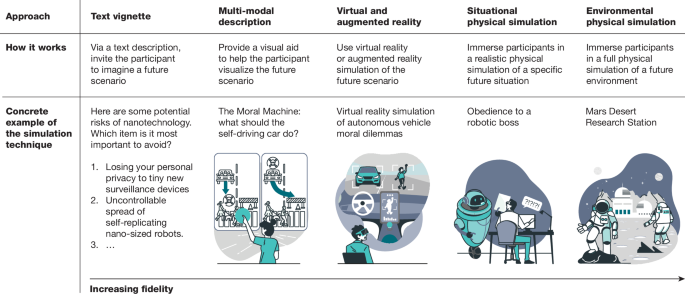

Awad, E. et al. The Moral Machine experiment. Nature 563, 59–64 (2018). This study is a classic example of sci-fi-sci, experimentally probing public moral preferences for a speculative technology (fully autonomous vehicles) through a massive global dataset of 40 million decisions.

Bonnefon, J.-F. et al. Ethics of connected and automated vehicles: recommendations on road safety, privacy, fairness, explainability and responsibility (European Commission, 2020).

Luetge, C. The German ethics code for automated and connected driving. Philos. Technol. 30, 547–558 (2017).

Santoni de Sio, F. The European Commission report on ethics of connected and automated vehicles and the future of ethics of transportation. Ethics Inf. Technol. 23, 713–726 (2021).

Adnan, N. Exploring the future: a meta-analysis of autonomous vehicle adoption and its impact on urban life and the healthcare sector. Transp. Res. Interdiscip. Persp. 26, 101110 (2024).

Tussyadiah, I. A review of research into automation in tourism: launching the Annals of Tourism Research curated collection on artificial intelligence and robotics in tourism. Ann. Tour. Res. 81, 102883 (2020).

Zeng, Y., Liu, X., Zhang, X. & Li, Z. Retrospective of interdisciplinary research on robot services (1954–2023): from parasitism to symbiosis. Technol. Soc. 78, 102636 (2024).

Benkler, Y. Don’t let industry write the rules for AI. Nature 569, 161–162 (2019).

Cave, S. & Dihal, K. Hopes and fears for intelligent machines in fiction and reality. Nat. Mach. Intell. 1, 74–78 (2019).

Lazer, D. et al. Computational social science: obstacles and opportunities. Science 369, 1060–1062 (2020).

Lazer, D. et al. Meaningful measures of human society in the twenty-first century. Nature 595, 189–196 (2021).

Munger, K. The limited value of non-replicable field experiments in contexts with low temporal validity. Soc. Media Soc. 5, 2056305119859294 (2019).

Munger, K. Temporal validity as meta-science. Res. Politics 10, 20531680231187271 (2023). This article unpacks the concept of temporal validity, an essential concern for sci-fi-sci, as it exposes the limits of applying present-day empirical knowledge to future contexts.

Wilson, T. D. & Gilbert, D. T. Affective forecasting. Adv. Exp. Soc. Psychol. 35, 345–411 (2003).

Schönmann, M., Bodenschatz, A., Uhl, M. & Walkowitz, G. Contagious humans: a pandemic’s positive effect on attitudes towards care robots. Technol. Soc. 76, 102464 (2024).

Inhorn, M. C. & Birenbaum-Carmeli, D. Assisted reproductive technologies and culture change. Annu. Rev. Anthropol. 37, 177–196 (2008).

Dinh, C. T., Humphries, S. & Chatterjee, A. Public opinion on cognitive enhancement varies across different situations. AJOB Neurosci. 11, 224–237 (2020).

Mihailov, E., López, B. R., Cova, F. & Hannikainen, I. R. How pills undermine skills: moralization of cognitive enhancement and causal selection. Conscious. Cogn. 91, 103120 (2021).

Sattler, S. et al. Neuroenhancements in the military: a mixed-method pilot study on attitudes of staff officers to ethics and rules. Neuroethics 15, 11 (2022).

Lucas, S., Douglas, T. & Faber, N. S. How moral bioenhancement affects perceived praiseworthiness. Bioethics 38, 129–137 (2024).

Laakasuo, M. et al. What makes people approve or condemn mind upload technology? untangling the effects of sexual disgust, purity and science fiction familiarity. Palgrave Commun. 4, 84 (2018).

Salganik, M. J., Dodds, P. S. & Watts, D. J. Experimental study of inequality and unpredictability in an artificial cultural market. Science 311, 854–856 (2006).

Longoni, C. & Cian, L. Artificial intelligence in utilitarian vs. hedonic contexts: the ‘word-of-machine’ effect. J. Mark. 86, 91–108 (2022).

Köbis, N. et al. Artificial intelligence can facilitate selfish decisions by altering the appearance of interaction partners. Preprint at https://doi.org/10.48550/arXiv.2306.04484 (2023).

Benvegnù, G., Pluchino, P. & Gamberini, L. Virtual morality: using virtual reality to study moral behavior in extreme accident situations. In 2021 IEEE Virtual Reality and 3D User Interfaces 316–325 (IEEE, 2021).

Sütfeld, L. R., Ehinger, B. V., König, P. & Pipa, G. How does the method change what we measure? comparing virtual reality and text-based surveys for the assessment of moral decisions in traffic dilemmas. PLoS ONE 14, e0223108 (2019).

Faulhaber, A. K. et al. Human decisions in moral dilemmas are largely described by utilitarianism: virtual car driving study provides guidelines for autonomous driving vehicles. Sci. Eng. Ethics 25, 399–418 (2019).

Riek, L. D. Wizard of Oz studies in HRI: a systematic review and new reporting guidelines. J. Hum. Robot Interact. 1, 119–136 (2012).

Aroyo, A. M. et al. Will people morally crack under the authority of a famous wicked robot? In 2018 27th IEEE International Symposium on Robot and Human Interactive Communication 35–42 (IEEE, 2018).

Bishop, S. L., Kobrick, R., Battler, M. & Binsted, K. FMARS 2007: stress and coping in an Arctic Mars simulation. Acta Astronautica 66, 1353–1367 (2010).

Alfano, C. A., Bower, J. L., Cowie, J., Lau, S. & Simpson, R. J. Long-duration space exploration and emotional health: recommendations for conceptualizing and evaluating risk. Acta Astronautica 142, 289–299 (2018). This article reviews methods for forecasting emotional health risks during a Mars mission, an extreme case of sci-fi-sci aimed at predicting human responses in radically novel, high-stakes environments.

Riva, P., Rusconi, P., Pancani, L. & Chterev, K. Social isolation in space: an investigation of LUNARK, the first human mission in an Arctic Moon analog habitat. Acta Astronautica 195, 215–225 (2022).

Candy, S. & Potter, C. Design and Futures, vol. 1. J. Futures Stud. (2019).

Candy, S. & Potter, C. Design and Futures, vol. 2. J. Futures Stud. (2019). The two volumes of this special issue are a treasure trove of innovative methods for designing immersive future simulations, offering sci-fi-sci researchers a rich toolbox for experimental protocols grounded in tangible experience.

Palmer, A. Too Like the Lightning (Tor Books, 2016).

Gall, T., Vallet, F. & Yannou, B. How to visualise futures studies concepts: revision of the futures cone. Futures 143, 103024 (2022).

Coolidge, F. L., Wynn, T., Overmann, K. A. & Hicks, J. M. in Human Paleoneurology (ed. Bruner, E.) 177–208 (Springer, 2015).

North, D. C. Structure and performance: the task of economic history. J. Econ. Lit. 16, 963–978 (1978).

von Schenk, A., Klockmann, V., Bonnefon, J.-F., Rahwan, I. & Köbis, N. Lie detection algorithms disrupt the social dynamics of accusation behavior. iScience 27, 110201 (2024).

Héder, M. From NASA to EU: the evolution of the TRL scale in public sector innovation. Innov. J. 22, 1–23 (2017). This article unpacks the evolution and potential misuses of the technology readiness level scale, which can help sci-fi-sci researchers to choose speculative technologies that are still uncertain, but not untethered from reality.

Coccia, M. Measuring intensity of technological change: the seismic approach. Technol. Forecast. Soc. Change 72, 117–144 (2005).

Goodall, N. J. Ethical decision making during automated vehicle crashes. Transp. Res. Rec. 2424, 58–65 (2014).

Hess, D. J. Incumbent-led transitions and civil society: autonomous vehicle policy and consumer organizations in the United States. Technol. Forecast. Soc. Change 151, 119825 (2020).

Kriebitz, A., Max, R. & Lütge, C. The German act on autonomous driving: why ethics still matters. Philos. Technol. 35, 29 (2022).

Mac Síthigh, D. & Siems, M. The Chinese social credit system: a model for other countries? Mod. L. Rev. 82, 1034–1071 (2019).

Orgad, L. & Reijers, W. A Dystopian Future? The Rise of Social Credit Systems. Technical Report RSCAS 2019/94 (Robert Schuman Centre for Advanced Studies, 2019).

Tirole, J. Digital dystopia. Am. Econ. Rev. 111, 2007–2048 (2021).

Purcell, Z. A. & Bonnefon, J.-F. Humans feel too special for machines to score their morals. PNAS Nexus 2, pgad179 (2023).

Proposal for a Regulation of the European Parliament and of the Council Laying Down Harmonised Rules on Artificial Intelligence (Artificial Intelligence Act) and Amending Certain Union Legislative Acts https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:52021PC0206 (European Commission, 2021).

Turley, P. et al. Problems with using polygenic scores to select embryos. N. Engl. J. Med. 385, 78–86 (2021).

Anomaly, J. Creating Future People: The Ethics of Genetic Enhancement (Taylor & Francis, 2020).

Anomaly, J., Gyngell, C. & Savulescu, J. Great minds think different: preserving cognitive diversity in an age of gene editing. Bioethics 34, 81–89 (2020).

Gyngell, C. & Douglas, T. Stocking the genetic supermarket: reproductive genetic technologies and collective action problems. Bioethics 29, 241–250 (2015).

Cavaliere, G. Ectogenesis and gender-based oppression: resisting the ideal of assimilation. Bioethics 34, 727–734 (2020).

Hooton, V. & Romanis, E. C. Artificial womb technology, pregnancy, and EU employment rights. J. L. Biosci. 9, lsac009 (2022).

Horn, C. Ectogenesis, inequality, and coercion: a reproductive justice-informed analysis of the impact of artificial wombs. BioSocieties 18, 523–544 (2023).

MacKay, K. The ‘tyranny of reproduction’: could ectogenesis further women’s liberation? Bioethics 34, 346–353 (2020).

Lin, P. in Autonomous Driving: Technical, Legal and Social Aspects (eds Maurer, M. et al.) 69–85 (Springer Vieweg, 2016).

Milakis, D. Long-term implications of automated vehicles: an introduction. Transp. Rev. 39, 1–8 (2019).

Soteropoulos, A., Berger, M. & Ciari, F. Impacts of automated vehicles on travel behaviour and land use: an international review of modelling studies. Transp. Rev. 39, 29–49 (2019).

Fernández Llorca, D. & Gómez, E. Trustworthy Autonomous Vehicles—Assessment Criteria for Trustworthy AI in the Autonomous Driving Domain (European Union, 2021).

Asimov, I. I, Robot (Gnome Press, 1950).

McDermott, D. in Machine Ethics (eds Anderson, M. & Anderson, S. L.) 88–114 (Cambridge Univ. Press, 2011).

Hirai, K., Hirose, M., Haikawa, Y. & Takenaka, T. The development of Honda humanoid robot. In Proc. 1998 IEEE International Conference on Robotics and Automation Vol. 2, 1321–1326 (IEEE, 1998).

Ishiguro, H. et al. Robovie: an interactive humanoid robot. Ind. Robot 28, 498–504 (2001).

Breazeal, C. Designing Sociable Robots (MIT Press, 2004).

Jennings, N. R., Norman, T. J., Faratin, P., O’Brien, P. & Odgers, B. Autonomous agents for business process management. Appl. Artif. Intell. 14, 145–189 (2000).

Reijers, H. A. Business process management: the evolution of a discipline. Comput. Ind. 126, 103404 (2021).

Humanoid Robot Market Size, Share & Industry Analysis, by Motion Type (Biped and Wheel Drive), by Component (Hardware and Software), by Application (Industrial, Household, and Services), and Regional Forecast 2024–2032. Technical Report (Fortune Business Insights, 2024).

Dafoe, A. et al. Cooperative AI: machines must learn to find common ground. Nature 593, 33–36 (2021).

Acemoglu, D. & Restrepo, P. Automation and new tasks: how technology displaces and reinstates labor. J. Econ. Perspect. 33, 3–30 (2019).

Crandall, J. W. et al. Cooperating with machines. Nat. Commun. 9, 233 (2018).

Ishowo-Oloko, F. et al. Behavioural evidence for a transparency–efficiency tradeoff in human–machine cooperation. Nat. Mach. Intell. 1, 517–521 (2019).

Karpus, J., Krüger, A., Verba, J. T., Bahrami, B. & Deroy, O. Algorithm exploitation: humans are keen to exploit benevolent AI. iScience 24, 102679 (2021).

Oudah, M., Makovi, K., Gray, K., Battu, B. & Rahwan, T. Perception of experience influences altruism and perception of agency influences trust in human–machine interactions. Sci. Rep. 14, 12410 (2024).

Makovi, K., Sargsyan, A., Li, W., Bonnefon, J.-F. & Rahwan, T. Trust within human–machine collectives depends on the perceived consensus about cooperative norms. Nat. Commun. 14, 3108 (2023).

Dong, M., Bonnefon, J.-F. & Rahwan, I. Toward human-centered AI management: methodological challenges and future directions. Technovation 131, 102953 (2024).

Bubeck, S. et al. Sparks of artificial general intelligence: early experiments with GPT-4. Preprint at https://doi.org/10.48550/arXiv.2303.12712 (2023).

Wu, Q. et al. AutoGen: enabling next-gen LLM applications via multi-agent conversation framework. In First Conference on Language Modeling https://openreview.net/forum?id=BAakY1hNKS (OpenReview, 2024).

Bamberger, S., Clark, N., Ramachandran, S. & Sokolova, V. How generative AI is already transforming customer service. Boston Consulting Group www.bcg.com/publications/2023/how-generative-ai-transforms-customer-service (2023).