Samples and DNA extraction

We initially selected 1,064 samples from the 1kGP collection, sourcing genomic DNA from Coriell and discarding low-quality DNA. The study complied with all relevant regulations for work with human participants. DNA was extracted from lymphoblastoid cell lines and resuspended in TE buffer (10 mM Tris, pH 8.0, 1 mM EDTA).

Quality control before sequencing

DNA concentrations were determined with the Quant-iT dsDNA Broad-Range assay Kit (Q33130), using Varioskan LUX multimode microplate reader (VL0000D0). The purity evaluation of genomic DNA was performed on a DeNovix DS-11 Series Spectrophotometer. Optical density 260/280 and 260/230 ratio of 1.8 and 2 was maintained, respectively, for sequencing. Fragment length was measured on the Femto Pulse system (Agilent, M5330AA) using the Genomic DNA 165 kb Ladder Fast Separation assay with a separation time of 70 min (Agilent, FP-1002-0275).

Size selection

All DNA samples were size selected using the Circulomics Short Read Eliminator Kit (Circulomics, SS-100-101-01). According to supplier information, the kit uses size-selective precipitation to reduce the amount of DNA fragments below 25 kb in length. The kit was used according to the manufacturer’s recommendations (handbook v.2.0, 07/2019). Briefly, 60 μl of Buffer SRE was added to the sample tube (60-μl volume), gently mixed and the tube centrifuged at 10,000g for 30 min at room temperature. After supernatant removal, two washing steps were performed with 200 μl of 70% ethanol and a centrifugation at 10,000g for 2 min at room temperature. Finally, 50 μl Buffer EB was added and the tube was incubated at 50 °C for 30 min, followed by overnight incubation at room temperature to ensure efficient DNA elution.

LRS

Sequencing library preparation was carried out following the general guidelines from ONT, with modifications proposed by New England Biolabs (NEB) to ensure high-yield data generation and long-fragment sequencing. For library preparation, the following reagents were used: Ligation Sequencing Kit (ONT, SQK-LSK110), NEBNext Companion Module for ONT Ligation Sequencing (NEB, E7180S) and AMPure XP beads (made in-house by the Molecular Biology Service, Research Institute of Molecular Pathology). A DNA amount of 3 μg as input material was transferred into a 0.2-ml thin-walled PCR tube and the total volume was adjusted to 48 μl with nuclease-free water (Thermo Fisher, AM9937). DNA fragments were repaired and end-prepped as follows: 3.5 μl of NEBNext FFPE DNA Repair Buffer, 2 μl of NEBNext FFPE DNA Repair Mix, 3.5 μl of NEBNext Ultra II End Prep Reaction Buffer and 3 μl NEBNext Ultra II End Prep Enzyme Mix were added to each tube. After mixing and spinning down, the samples were incubated at 20 °C for 30 min, followed by a second incubation at 65 °C for 5 min. The prolonged incubation time allowed recovery of longer fragments. The solution from each tube was then transferred to a clean 1.5-ml Eppendorf DNA LoBind tube (Eppendorf) for clean-up. First, 60 μl of AMPure XP Beads was added to each tube. The samples were then incubated on a HulaMixer sample mixer (Thermo Fisher Scientific, 15920D) for 5 min at room temperature. Bead clean-up was performed with two washing steps on a magnetic rack, each time pipetting off the supernatant and adding 200 μl of freshly prepared 70% ethanol. The pellet was resuspended in 61 μl of nuclease-free water and incubated for 5 min at room temperature. Tubes were placed on a magnetic rack to collect the final eluate (1 μl was then taken out for quantification). For adaptor ligation and clean-up, 60 μl of DNA from the previous step was combined with 25 μl of Ligation Buffer, 10 μl of NEBNext Quick T4 DNA Ligase and 5 μl of Adapter Mix in a 1.5-ml Eppendorf DNA LoBind tube. The reaction was then incubated for 20 min at room temperature. A second AMPure bead clean-up step was carried out by adding 40 μl of bead solution to each tube, followed by incubation on a HulaMixer for 5 min at room temperature. After pipetting off the supernatant on a magnet rack, the beads were washed twice with 250 μl of Long Fragment Buffer. Finally, the supernatant was discarded, and the pellet was resuspended in 25 μl of Elution Buffer EB and incubated for 10 min at 37°C to collect the final library. Samples were quantified using a Qubit fluorimeter and diluted appropriately before loading onto the flow cells. The final mass loaded on the flow cells was determined on the basis of the molarity, dependent on average fragment size. Sequencing was carried out using FLO-PRO002 (R9.4.1) flow cells from ONT on the PromethION 48. The sequencing run was stopped after 24 h, the flow cell was washed using the Flow cell wash kit XL (ONT, EXP_WSH004-XL) and then the library was reloaded.

Base calling and adaptor trimming

Guppy v.6.2.1 was used for base calling the Fast5 input files in ‘sup’ accuracy mode with adaptor trimming and read splitting disabled and the output was converted to FASTA. For adaptor trimming and read splitting, we subsequently used Porechop61 v.0.2.4 on the generated FASTA files in chunks of 300,000 reads with default parameters.

Reference genome alignments

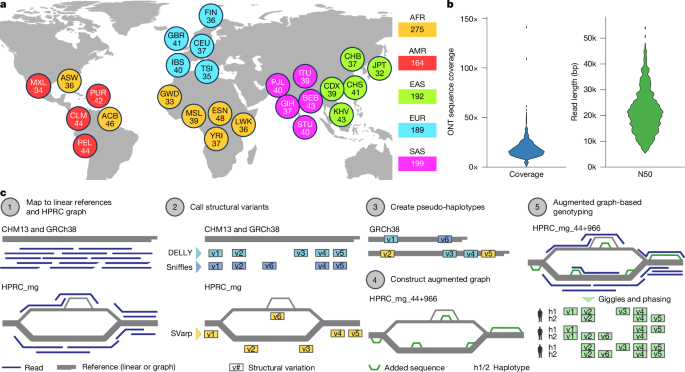

Reads were aligned to the GRCh38 (ref. 62) and CHM13 (ref. 63) linear reference genomes as well as the prebuilt human genome graph, denoted HPRC_mg, which was previously constructed by the HPRC12 (from HPRC year-1 samples; https://doi.org/10.5281/zenodo.6983934). We selected HPRC_mg as the pangenomic reference as it represents SVs while omitting SNPs and less than 50-bp indels12, thus comprising a compact graph structure facilitating analyses at the scale of a thousand long-read genomes. For the GRCh38 and CHM13, we used minimap2 (ref. 64) to map the ONT reads using the options ‘-a -x map-ont –rmq=yes –MD –cs -L’. Samtools65 was used to sort the alignments and convert to CRAM. Multiple ONT runs for the same sample were tagged using different read-groups. Minigraph21 v.0.20-r559 was used to map the ONT reads against the HPRC_mg graph genome using the options ‘–vc -cx lr’. During alignment, the ‘–vc’ flag enables the output of the alignments in vertex coordinates and the ‘lr’ option enables long-read mapping. The resulting graph alignments in GAF format21 were sorted using gaftools66 and compressed using bgzip.

Sample and alignment quality control

Using SNP calls of the high-coverage short-read data from the 1kGP cohort3, we implemented rigorous quality control measures to effectively eliminate sample swaps and cross-contaminations. We first genotyped all SNPs from the short-read haplotype reference panel with an allele count greater than or equal to 6 in all samples using bcftools67. Individual VCF files were merged using bcftools67 into a multi-sample VCF file that was then combined with the short-read haplotype reference panel. We then used VCFtools68 to calculate a relatedness statistic of the long-read sequenced samples compared with the short-read sequenced samples using the ‘–relatedness2’ option. This analysis identified a sample swap between HG01951 and HG01983, which we relabelled afterwards. We excluded all samples that seemed to be cross-contaminated during library preparation, namely HG00138, HG02807, HG02813, HG02870, HG02888, HG02890, HG03804 and HG03778. Alignments were analysed using NanoPack69 to determine median and N50 read length and genome coverage (Supplementary Figs. 2, 4 and 49). To compare linear and graph genome alignments in terms of percentage identity, number of aligned reads (bases) and largest Cigar I and D event, we used lorax70 (Supplementary Fig. 3).

SV discovery using linear references

We used Sniffles71 v.2.0.7 and an LRS-optimized version of DELLY72 (v.1.1.7) to discover SVs using linear reference genomes. For Sniffles, we converted CRAM files to BAM format using samtools and then calculated for each sample candidate SVs and the associated SNF file using Sniffles. We then used Sniffles population-calling mode on all SNF files to generate two multi-sample VCF files, one for GRCh38 and one for CHM13.

Similarly, we also used DELLY’s population-calling mode which first calls SVs by sample using the new long-read (lr) subcommand. We then merged all candidate SV sites using DELLY merge with the options ‘-p -a 0.05 -v 3 -c’ to select PASS sites that are precise at single-nucleotide resolution with a minimum variant allele frequency of 5% and a minimum coverage of 3×. We then genotyped this SV site list in all samples using delly lr and merged the results by id with bcftools merge using the option ‘-m id’. We then applied sansa (https://github.com/dellytools/sansa), a newly developed, multi-sample SV annotation method that detects SV duplicates on the basis of SV allele and genotype concordance, to remove redundant SV sites. The parameters for the sansa markdup subcommand were ‘-y 0 -b 500 -s 0.5 -d 0.3 -c 0.1’ to mark SV duplicates for sites that show an SV size ratio greater than 0.5, a maximum SV allele divergence of 30%, a maximum SV breakpoint offset of 500 bp and a minimum fraction of shared SV carriers of 10%. After removing duplicates, we generated the final multi-sample VCFs for GRCh38 and CHM13.

To ensure specificity and facilitate genome graph augmentation, we further generated for Sniffles and DELLY separately a consensus callset of SVs shared between the GRCh38 and CHM13 reference genomes. We therefore lifted the GRCh38 callsets to CHM13 using bedtools73 and the liftOver tool74 with the GRCh38 to CHM13 chain file. We then compared the lifted VCF with the original CHM13 VCF file to identify shared SVs using sansa’s compvcf subcommand with the options ‘-m 0 -b 50 -s 0.8 -d 0.1’ to identify SVs that have a size ratio greater than or equal to 0.8, a maximum SV breakpoint offset of 50 bp and an SV allele divergence of at most 10%. As the SV allele divergence filter requires a local assembly (for example, DELLY’s consensus SV allele sequence), we did not apply this filter to Sniffles. For genome graph augmentation, we subset the final VCFs to deletions and insertions only. All inversion-type SVs called by DELLY and Sniffles using the minimap2 alignments were integrated separately in the inversion analysis.

Inversion analysis

We developed a multi-tiered analytical pipeline to comprehensively ascertain inversions on the basis of LRS data. By inspecting previously known inversions in our dataset, along with simulating a range of small inversions (less than 1 kb) with a coverage closely mirroring our dataset (median 17×), we discovered that minimap2 frequently misaligned reads in small inversion regions, leading to increasing error rates in those genomic locations. To allow capturing of these inversions, we examined strategies for inversion discovery by simulating inversions of varied sizes (Supplementary Note 6). We generated simulated ONT reads from these augmented genomes, using SURVIVOR75, mimicking the sequencing coverage in our resource. Using pysamstats (https://github.com/alimanfoo/pysamstats), we first calculated the mismatch rate per base pair in 50-bp intervals, initially in the simulated datasets to tune parameters and subsequently in the real data to identify candidate inverted regions for re-alignment. This analytical process showed that most genomic regions maintained a mismatch rate below 20%; regions surpassing this rate were identified as having an unexpectedly high mismatch rate and selected for re-alignment with NGMLR71 (Supplementary Fig. 50). Post-exclusion of telomeric and centromeric regions, as well as of misaligned regions exceeding 1 kb in size, was then conducted to restrict the number of regions requiring remapping, thereby enhancing computational efficiency. The selected genomic segments underwent re-alignment using NGMLR and we then interrogated all realigned regions with DELLY72 to discover previously missed inversions. The final inversion calls in the remapped regions from all samples were consolidated using SURVIVOR merge75. For the rest of the regions not requiring re-alignment, inversion calling was conducted using both Sniffles and DELLY. Manual verification of true versus false positive calls was performed by examining dot plots and Integrative Genomics Viewer (IGV)-like plots generated with wally70 for each candidate inversion location for the largest ten reads per candidate region, ensuring the accuracy of our findings. Ultimately, we generated a final comprehensive inversion callset by merging all unique instances from each dataset with ‘bedtools merge’ (v.2.31.1)73. As the inversion analysis was conducted on the GRCh38 reference genome, regions were subsequently lifted over to the T2T-CHM13+Y genome, applying a 90% base remapping threshold to retain a region.

To evaluate our inversion dataset against two previous studies on 1kGP samples3,36, we used ‘bedtools intersect’ (v.2.31.1)73, defining inversions as ‘known’ if they exhibited a minimum of 50% reciprocal overlap with inversions from either previous dataset. Analysis against a previous study delineating inversions through whole-genome assembly36 shows the efficacy of our methodology in detecting a diverse range of inversions, both repeat-mediated and non-repeat-mediated: our results showed that 65% of non-repeat-associated inversions and 41% of SD-mediated inversions were successfully identified. Furthermore, we refined our comparison to a 1kGP-derived short-read inversion dataset3, for which we included only those inversions from the comparison dataset with quality scores of 30 or higher, to ensure the accuracy of our comparative analysis. This approach showed an overlap of 289 inversions, or 36.5% concordance (median size of 530 bp).

Regarding the flanking repeats in repeat-rich inverted regions, we conducted a detailed analysis by manually inspecting the repeat types and their orientations at inversion breakpoints. Repeat data were acquired from the RepeatMasker track and the SD annotations of the CHM13 reference (obtained from https://github.com/marbl/CHM13); an inversion was classified as repeat-mediated if it was bracketed by repeats in reverse orientation relative to each other, detected through dotplot analysis.

Inversion genotyping was conducted using the GeONTIpe pipeline (commit: 1b5db07) (https://github.com/RMoreiraP/GeONTIpe), which identifies reads spanning inversion breakpoints and determines sequence order and orientation using probes positioned on both sides of the breakpoints. We focused on those inversions not genotyped by Giggles, by excluding inverted duplications and twin priming events. Multiple probe sets were tested, and for each inversion, a validated set of probes was generated and verified on dotplot-confirmed inversion carrier samples. Once the expected orientation was confirmed, the validated set of probes was applied to genotype the rest of the samples. All regions classified as ‘inverted duplications’ were excluded because of pipeline limitations, yielding a final dataset of 520 inversions. Of these, 407 inversions (78.3%) were successfully genotyped, with both haplotypes identified in 393 cases.

Notably, five inversions exclusively exhibited the inverted haplotype in all samples, suggesting either an inversion in the reference genome or a potential assembly error. In four cases, samples expected to have the inverted haplotype were classified as low confidence, with no other samples exhibiting the inversion. For a further five cases, manual analysis showed either a misclassification as an inverted duplication or the presence of complex structural rearrangements in the putative reads with the inversion.

Phasing with the ONT reads

To conduct phasing, we first pursued phasing experiments with the ONT reads to check how well they compare to the statistical phasing done previously in high-coverage 1kGP short-read data generated by the New York Genome Center (NYGC)3. The NYGC phased VCFs and the NYGC raw genotypes were used. Using the NYGC raw genotypes, the phasing was done by WhatsHap76 (v.2.0) in three different ways: phasing with only the ONT reads (from hereon referred to as long-read phasing), trio phasing and trio phasing with the ONT reads (from hereon referred to as long-read–trio phasing). The trio phasing and long-read–trio phasing was conducted for the six complete family trios (family IDs: 2418, CLM16, SH006, Y077, 1463 (paternal side), 1463 (maternal side)) for which our resource has long-read data. The long-read phasing was conducted for all of the 967 samples in the intersection of our 1,019 sample set and the NYGC sample set of 3,202 (Supplementary Fig. 7 and Supplementary Table 23). The phasing was pursued for all autosomes, and each chromosome was phased separately to allow parallel processing. The commands used are as follows:

-

Long-read phasing: whatshap phase -o <output phased vcf> –chromosome <chromosome ID> –sample <sample name> -r <reference fasta> <NYGC raw genotypes for sample> <ONT CRAM for sample>

-

Trio phasing: whatshap phase -o <output phased vcf> –chromosome <chromosome ID> -r <reference fasta> –ped <pedigree data for the NYGC samples> <NYGC raw genotypes for samples in a family>

-

Long-read–trio phasing: whatshap phase -o <output phased vcf> –chromosome <chromosome ID> -r <reference fasta> –ped <pedigree data for the NYGC samples> <NYGC raw genotypes for samples in a family> <ONT CRAM for samples in a family>.

Comparison of the WhatsHap phased VCFs against NYGC statistical phasing

The phased VCFs produced by WhatsHap were compared against the NYGC statistical phasing using WhatsHap’s compare function. The commands are as follows:

-

For the samples without trio data: whatshap compare –sample <sample name> –names longread,nygc –tsv-pairwise <pairwise tsv file> –tsv-multiway <multiway tsv name> <input longread phased vcf> <input nygc statistical phasing vcf>

-

For the samples with trio data: compare –sample <sample name> –names trio,longread,trio-longread,nygc –tsv-pairwise <pairwise tsv file> –tsv-multiway <multiway tsv name> <input trio phased vcf> <input longread phased vcf> <input longread trio phased vcf> <input nygc statistical phasing vcf>.

Haplotype tagging of ONT reads

The ONT reads were haplotype-tagged (or haplo-tagged) using WhatsHap76 (v.2.0). The NYCG phased VCF3 was used as the reference for tagging the reads. The command used to tag the reads was: whatshap haplotag –skip-missing-contigs –reference <reference fasta> –sample <sample name> –output-haplotag-list <output file> –output /dev/null <NYGC phased VCF> <ONT CRAM>.

Although the main output of whatshap haplotag is a tagged alignment file, downstream tools used in this study required only a file containing the tag for each read which is given in –output-haplotag-list. Owing to the presence of pseudo-autosomal regions (PARs) in the phased VCF, the command was altered for the male samples. Instead of providing the entire NYGC phased VCF, the non-PAR records were removed and the haplo-tagging was performed. After haplo-tagging, the list of reads aligning to the non-PAR on chr. X were extracted, assigned manually as the maternal haplotype and added to the haplotag list.

SV discovery from the graph

The aim of SVarp77 is to discover SVs on graph genomes, including for haplotypes missing in a linear reference. SVarp (commit: 0acba75) calls novel phased variant sequences, called svtigs, rather than a variant callset, which we later use in the graph augmentation step. To discover phased SV assemblies (svtigs) on top of the pangenome graph, we used haplotag read information and the alignment file (that is, GAF alignments) as input to the SVarp algorithm using <svarp -a GAF-FILE -s 5 -d 500 -g GFA-FILE –fasta READS-FASTA-FILE -i SAMPLE_NAME –phase HAPLOTAG-FILE> command. With 967 samples, we found a total of 1,108,850 variants (approximately 1,145 per sample).

To find specific SV breakpoint loci with respect to a linear reference genome, we used the PAV tool22 to call SV breakpoints, using svtigs that SVarp generated as input. This yielded 1,258,880 and 1,241,252 SVs relative to the CHM13 and GRCh38 linear genomes, respectively, that are more than 50 bp. However, we realized that some svtigs give rise to multiple SVs in the output of PAV. To ensure that variants called from the same svtig end up on the same pseudo-haplotypes in the graph augmentation step, we generated a script to combine records arising from the same svtig into a single VCF record. This is achieved by connecting multiple smaller such SVs into a single SV record through adding reference sequence in-between. This yielded 564,661 and 562,311 SVs relative to CHM13 and GRCh38, respectively.

The single-sample VCFs (relative to GRCh38) generated with PAV from the SVarp svtigs were merged into a multi-sample VCF using bcftools merge67 (v.1.18) and then post-processed using truvari collapse78 (v.4.1.0). The latter step merges SV records probably representing the same event into a single record, removing redundancy. This reduced the number of SVs from 451,942 to 215,209. Finally, we filtered the resulting VCF by keeping only records present in at least two samples. This filtered set contained 70,932 SVs.

Graph augmentation

We developed a pipeline to add extra variants found by DELLY, Sniffles and SVarp across the 1kGP ONT samples to the minigraph graph so that they can be genotyped by Giggles (v.1.0)79. The main idea is to construct so-called ‘pseudo-haplotypes’ by implanting sets of non-overlapping variant calls into a reference genome and then adding them to the graph using minigraph21 (Extended Data Fig. 1). Our pipeline consists of the following steps. At first, we remove variant calls that fall into the centromere regions and mark the respective region in the reference genome by masking the sequence by Ns using the tool bcftools maskfasta (v.1.18). We used the GRCh38 reference genome and centromere annotations obtained from the UCSC genome browser. In the next step, we generate the pseudo-haplotypes as follows. Each of the SV discovery callsets (DELLY, Sniffles, SVarp) contains variants overlapping across samples. Thus, inserting all of them into one reference genome will fail. Therefore, we first group variants of each callset into sets of non-overlapping variants, and then generate a consensus sequence for each of these sets by implanting the variants into the reference genome using bcftools consensus (v.1.18). As a result, we obtain a whole-genome consensus sequence for each of these sets, which we call the pseudo-haplotypes. For the DELLY calls, we obtained 26 such pseudo-haplotypes, for Sniffles we got 69 and for SVarp we generated 117 pseudo-haplotypes. In total, these pseudo-haplotypes carry 154,319 DELLY SVs, 128,688 Sniffles SVs and 70,813 SVs detected by SVarp. In the last step, we insert all of these newly constructed genome sequences into the graph using the minigraph tool (v.0.20). Thereby, the minigraph algorithm incorporates a new SV allele only if it is sufficiently different (that is, shifted by more than or equal to 50 bp) from SV alleles already represented in the graph21. We first inserted all SVarp haplotypes, then all DELLY haplotypes and finally all Sniffles haplotypes into the graph using the command: minigraph -cxggs -t32 <minigraph> <SVarp Pseudo-Haplotype FASTAs> <DELLY Pseudo-Haplotype FASTAs> <Sniffles Pseudo-Haplotype FASTAs> > augmented-graph.gfa.

In the augmented graph, the number of bubbles (Supplementary Fig. 8) increases to 220,168 (102,371 in the original graph) and the total sequence represented in the graph increases from 3,297,884,175 bases to 3,477,266,061 bases. To identify bubbles in the augmented graph representing variation that was previously not represented in the original graph, we created BED files with coordinates of bubbles present in both graphs using gfatools bubble. Then we used bedtools closest to compute the distance between each bubble in the augmented graph and their respective closest bubble in the original graph (Supplementary Fig. 10). We defined all bubbles in the augmented graph whose distance to the closest bubble in the original graph is at least 1 kb as ‘new’ bubbles, representing novel SV sites.

To evaluate our augmented graph, we aligned ONT reads of human sample HG00513 (a sample not part of HPRC_mg) to HPRC_mg_44+966 using the command: minigraph -cx lr -t24 augmented-graph.gfa HG00513-reads.fa –vc 2 > alignments.gaf. For comparison, we aligned the same set of reads to the original graph (HPRC_mg) using the same command. We then computed alignment statistics using gaftools stat (Supplementary Table 3). We observed better alignment statistics when aligning reads to the augmented instead of the original graph. The number of aligned reads increased by 33,208, and the number of aligned bases by 152,454,715 bp.

Preparing phased VCF panel

We developed a pipeline that can reconstruct the alleles of the samples in the graph using the graph and the assemblies for the samples. First, the bubbles in the graphs are identified using gaftools66 (commit ID: feaf7f4). The function order_gfa tags the nodes of the graph to identify whether the nodes are bubble nodes (nodes inside a bubble) or scaffold nodes (nodes outside a bubble). The phased panel is created by aligning the HPRC assemblies back to the tagged HPRC graph using minigraph21 (v.0.20-r559). The resulting alignments are processed to identify the alleles using the node paths between scaffold nodes. The allele information from the haplotype assemblies is then converted to a phased panel VCF. For the augmented graph, the same pipeline discussed above works where the pseudo-haplotypes are considered as assemblies and aligned to the augmented graph. On the basis of the tagging of the augmented graph, the alleles on the pseudo-haplotypes are identified and separate columns are created in the phased panel VCF corresponding to the alleles of the pseudo-haplotypes. This pipeline creates a multi-sample phased VCF, containing multiple alternative alleles for each record of the VCF.

The phased panel VCF is processed for its inclusion during Giggles79 genotyping. Internal filter tags are set during the VCF creation step which allows for filtering of the bubbles for which allele information is not present for at least 80% of the samples. Additionally, bubbles are filtered out that do not have any alternative alleles in any of the haplotypes. From the phased panel VCF, a ‘Giggles-ready’ version is generated for which the reference alleles in the pseudo-haplotypes are masked with dots, because internally Giggles accounts for the allele frequency in the panels and the pseudo-haplotypes heavily bias the genotyping towards the reference used in the bcftools consensus step. The VCF records describe bubble structures in the graph. These bubbles often contain many nested and overlapping variant alleles, that is, a bubble does not necessarily represent a single variation event. To identify variant alleles represented in these bubbles, we applied the same bubble decomposition approach as previously described by the HPRC12. In short, the idea is to compare node traversals of the reference and alternative paths through bubbles to identify nested alleles. As in the previous HPRC study12, we then annotate the bubbles in our VCF to encode nested variants, so that we can translate genotypes computed for bubbles to genotypes for the variants represented in the graph.

Graph-aware genotyping

Graph-aware genotyping was conducted using Giggles79 (v.1.0), a pangenome-based genome inference tool that leverages LRS data. Giggles serves as a long-read alternative to PanGenie’s short-read-based approach80. It works with a Hidden Markov Model framework for which each variant position contains states corresponding to all possible genotypes at that position. The transition probabilities are based on the Li–Stephens model81 and the emission probabilities are based on the alignment of reads around an interval window of the variant position. Forward–backward computation of the Hidden Markov Model gives the posterior likelihoods of each state at each variant position which can be used to compute the likelihood of genotypes across the genome.

Giggles requires a phased VCF panel, sorted graph alignments, the input long reads, tagged graph and the haplotype tagging of the reads. We use the command: giggles genotype –read-fasta <input reads> –sample <sample name> -o <output vcf> –rgfa <tagged gfa> –haplotag-tsv <haplotype tags> <phased panel VCF> <sorted alignments>. This outputs an unphased VCF with the genotypes for the given sample.

The VCFs were further filtered using bcftools67 to create a high-quality genotype set. This was achieved by masking genotypes of samples having a genotyping quality of less than 10 and dropping variant positions for which more than 5% of genotypes are missing. The commands are: bcftools view –min-ac 1 -m2 -M2 <vcf> | bcftools +setGT – — -t q -n. -i ‘FMT/GQ<10’ | bcftools +fill-tags – — -t all | bcftools filter -O z -o <filtered-vcf> -i ‘INFO/AC >= 1 && INFO/F_MISSING <= 0.05’.

We stratify the SVs as biallelic or multiallelic on the basis of the bubble these SVs originate from. If the bubble has more than two alleles defined in the phased VCF panel given to Giggles as an input, then the SV is defined to be multiallelic. Otherwise, if only one alternative allele is defined, the SV is defined to be biallelic.

Mendelian inconsistency statistics

For the six complete family trios in our dataset, we calculated Mendelian inconsistency statistics (Supplementary Fig. 51). We provide the confusion matrices and various statistics for each family, genotyped against HPRC_mg and HPRC_mg_44+966, and also report for various variant types depending on whether the variant came from a biallelic or multiallelic bubble (Supplementary Tables 5–12). The definitions for each statistic can be found in Supplementary Table 4.

Statistical phasing using high-coverage short-read data of 1kGP samples

Using SHAPEIT5 (ref. 82), we statistically phased the multi-sample VCF file outputted by Giggles using a recently constructed CHM13 haplotype reference panel19. This panel uses SNP and InDel calls generated from 1kGP short-read data3,83 for CHM13. We first subsetted the unphased input VCF from Giggles with 167,291 SVs to the 908 unrelated samples present in the haplotype reference panel, set genotypes with a quality less than 10 to ‘missing’ and subsequently dropped all SV sites with allele count zero. We then used the SHAPEIT5 common variant phasing mode to incorporate the new SV alleles into the SNP and InDel haplotype scaffold, yielding a phased VCF with 164,571 SVs. Before the phasing we split multiallelic variants into biallelic variants using bcftools norm67 with the option ‘-m -any’. After phasing, we joined multiallelic variants back into the original state using bcftools norm with the option ‘-m +any’.

Using plink84, we assessed linkage disequilibrium to nearby SNPs in a window of 1 Mb. We first converted the VCF to plink input files and then used plink to calculate r2 values of all SVs to nearby SNPs. For the linkage disequilibrium analysis, we further subdivided the CHM13 genome into GiaB ‘difficult’ regions and ‘high-confidence’ regions85 (using the CHM13_notinalldifficultregions.bed.gz file; Extended Data Fig. 3 and Supplementary Figs. 26 and 52).

Comparison with previous SV callsets

For the comparison with SV calls derived from high-quality genome assemblies from the HGSVC we used Truvari78, using the CHM13 reference genome (Supplementary Note 3). To enable further analyses with previous callsets, we additionally lifted our SV calls to GRCh38 using liftOver74 with the CHM13 to GRCh38 chain file. As extra baseline callsets (GRCh38-based), we included the callsets produced in ref. 3, ref. 22 and ref. 4, labelled as nygc, pangenie and phase3, respectively (Supplementary Fig. 20). The comparison of SVs was restricted to autosomes and chromosome X for deletions and insertions separately. We used sansa compvcf to compare the SV VCF files using default parameters. We altered the base and comparison VCFs to identify SVs that are distinct for one or the other callset and to identify potential 1:many SV overlaps among the shared SVs. We used the presence of INSSEQ and the absence of IMPRECISE in the INFO field as criteria for sequence-resolved insertions in previous short-read SV callsets3 (Fig. 1).

Population differentiation

We used ADMIXTURE v.1.3.0 (https://github.com/NovembreLab/admixture) to compute admixture for K = 5. For performing SV-based principal component analysis we used EIGENSOFT 8.0.0 (https://github.com/DReichLab/EIG). Fst values were calculated using VCFtools for each continental population (against the remaining samples) and each population (against the remaining samples) per site and for 1-Mb windows.

SV impact estimation on genomic features

We used Ensembl VEP with annotation from the CHM13 rapid release of Ensembl (107) to estimate the impact of the SVs on genomic features. Processing was performed using the command: vep –assembly T2T-CHM13v2.0 –regulatory –offline -i final-vcf.unphased.vcf.

We observe a large difference in the mean allele frequencies of SVs affecting coding (mean MAF 0.009) and non-coding (mean MAF 0.061) regions. This observation and the P value of 0.0 reported by both t-test and Kolmogorov–Smirnov test thereby support the hypothesis that the two underlying distributions are different.

Targeted genotyping of challenging loci

Locityper86 (v.0.13.3) is a targeted whole-genome sequencing-based genotyper designed for challenging loci. Our initial set of target genes was preprocessed using locityper add -e 300k. Our analysis based on Locityper focused on 270 loci identified by GiaB as challenging, yet crucial for understanding genetic underpinnings of various diseases26, along with 20 polymorphic MUC family genes and the LPA gene, which are of particular interest because of their association with various structural haplotypes and health conditions87,88. ONT datasets were preprocessed and genotyped with locityper preprocess –tech ONT and locityper genotype, respectively. A database of locus haplotypes was constructed on the basis of the GRCh38 and CHM13 reference genomes, as well as on the basis of the 44 diploid HPRC whole-genome assemblies. To evaluate genotyping accuracy, we aligned haplotypes to each other using the Wavefront alignment algorithm89, and calculated sequence divergence as a ratio between edit distance and alignment size. Local haplotypes were extracted from the NYGC callset using bcftools consensus.

SV annotation with SVAN

SV calls generated with our SAGA framework were processed with the SVAN tool (commit: 7b97325, v.1.3) (https://github.com/REPBIO-LAB/SVAN) to classify them in distinct classes on the basis of distinctive sequence features:

Retrotranspositions

Canonical retrotransposition events were identified by searching for poly(A) and poly(T) tails at the 5′ end 3′ ends of the deleted and inserted sequences. Poly(A/T) tracts were required to be at least 10 bp in size, have a minimum purity of 90% and be at a maximum of 50 bp from the insert end or beginning, for poly(A) and poly(T), respectively. The sequence corresponding to the TSD was detected and trimmed from either the 5′ or 3′ end of the L1 insert through the identification of exact matches with the genomic sequence at the integration position. To have all candidate retrotransposed inserts in forward orientation, the reverse complement sequence for every trimmed insert occurring in the minus strand was obtained.

Candidate 3′ partnered transductions were detected by searching for a second poly(A) stretch at the 3′ end of the trimmed sequences using the same criteria outlined above. Integrants with a secondary tail were annotated as ‘3′ transduction’ candidates with the sequence in-between the poly(A) stretches corresponding to the transduced bit of DNA, whereas those with a single tail were classified as ‘solo’ candidates. To trace transductions to their source loci, transduced sequences were aligned onto CHM13 using BWA-MEM90. To maximize sensitivity for particularly short transduction events, a minimum seed length (-k) of 8 bp and a minimum score (-T) of 0 were used. Alignment hits were filtered by requiring a minimum mapping quality of 10. Transduction candidates with less than 80% of the transduced sequences aligning on the reference were further filtered out.

The retrotransposition candidates were further trimmed by removing the poly(A) tails and transduced sequences. To identify processed pseudogenes, orphan transductions, 5′ partnered transductions and retroelement sequences, the resulting trimmed inserts were aligned onto CHM13 using minimap 2.1, as well as BWA-MEM 0.7.17-r1188, by using the parameters described above and with the alignment conducted onto a database of consensus L1, Alu and SVA repeats. The SVA repeats database contains separate sequences for each SVA component, including the Alu-like region, VNTR, SINE-R and exon 1 of the MAST2 gene, which is characteristic of the SVA-F1 subfamily.

Alignment hits were filtered by requiring a minimum mapping quality of 10, and chained on the basis of complementarity to identify the minimum set of non-overlapping alignments that span the maximum percentage of the trimmed insert. On the basis of the alignment chains, retrotranspositions are classified as processed pseudogenes (more than or equal to 75% aligns on the reference over single or multiple Gencode 35 annotated exons91), orphan transductions (more than or equal to 75% aligns on the reference outside exons), 5′ transductions (more than or equal to 75% aligns both into the reference on its 5′ and into the consensus of a retrotransposon) and solo (more than or equal to 75% aligns into a single consensus retrotransposable element). Alignment chains are further analysed to decompose each MEI into its sequence components, including inversions at the 5′ end of L1 and processed pseudogene insertions or the set of individual repeats composing SVA inserts.

Non-canonical MEIs

Insertions and deletions without a poly(A) tail detected were aligned into a database of consensus L1, Alu and SVA repeats as described above to identify non-canonical MEIs. Alignment hits were filtered and chained and non-canonical MEI calls made if more than or equal to 75% of the sequence aligned into one or multiple repeat classes.

Endogenous retroviruses

Inserted and deleted sequences were aligned with BWA-MEM 0.7.17-r1188 into a database containing consensus ERV and LTR sequences. Alignment hits were filtered and chained as described above. SVs with hits spanning more than or equal to 75% of their sequence on the retrovirus database were classified as Solo-LTR and ERVK, on the basis of the presence of an LTR alone or LTR plus retroviral sequence, respectively.

VNTRs

Expansions and contractions of VNTR loci were annotated by processing inserted and deleted sequences with TRF (Tandem Repeats Finder) v.4.04 (ref. 92). TRF hits were processed and chained on the basis of complementarity to determine the minimum set of non-overlapping hits that span the maximum fraction of the sequence. On the basis of the hit chains, SVAN classified VNTRs as simple (more than or equal to 75% of the sequence corresponds to a single repeated motif) and complex (more than or equal to 75% corresponds to more than one repeated motif).

Duplications

Diverse classes of duplications, including tandem, inverted and complex duplication events, were annotated by realigning the inserted sequences onto the reference using minimap 2.1. Alignments were filtered to select only those located within a 2-kb window around the insertion breakpoint. These were further chained on the basis of complementarity to determine the minimum set of non-overlapping hits spanning the maximum percentage of the sequence. On the basis of the alignment chains, duplications are classified as tandem (more than or equal to 75% of the sequence aligns at the insertion breakpoint in forward orientation), inverted (more than or equal to 75% of the sequence aligns at the insertion breakpoint in reverse orientation) and complex (more than or equal to 75% of the sequence aligns at the insertion breakpoint both in forward and reverse orientation).

NUMTs

Insertions with more than or equal to 75% of their sequence having one or multiple minimap 2.1 alignments on the mitochondrial reference are classified as NUMT.

Transduction analysis

L1 and SVA transductions were clustered on the basis of the alignment position on the reference of their corresponding transduced sequences to identify their source regions. A buffer of 10 kb was applied to their start and end alignment positions before clustering. Source regions were further intersected with a database of full-length loci to determine the progenitor repeat. This database was generated by aggregating all the full-length L1s (more than 5.9 kb in size) and SVAs (more than 1 kb) in CHM13 detected as non-reference insertions in our SV callset. Progenitor repeats were further annotated by determining their insertion orientation and subfamily. For L1 events, subfamily assignment was performed through the identification of subfamily diagnostic nucleotide positions on their 3′ end. L1 progenitors bearing the diagnostic ‘ACG’ or ‘ACA’ triplet at the 5,929–5,931 position were classified as ‘pre-Ta’ and ‘Ta’, respectively. Ta elements were subclassified into ‘Ta-0’ or ‘Ta-1’ according to diagnostic bases at the 5,535 and 5,538 positions (Ta-0: G and C; Ta-1: T and G). Elements that did not show any of these diagnostic profiles could not be assigned to a particular category, and their subfamily status remained undetermined. For SVAs, the inserts were processed with RepeatMasker v.4.0.7 to determine the subfamily. If multiple RepeatMasker hits were obtained, the one with the highest Smith–Waterman score was selected as representative. To assess a bias in source elements to generate 5′ or 3′ transduction events, respectively, we conducted two-tailed binomial tests followed by controlling for the FDR according to Benjamini and Hochberg93. We included all source elements with at least five non-orphan transductions when conducting statistical testing for 5′ versus 3′ bias.

To investigate source element novelty, we compared active source L1s and SVAs identified in this study with source elements previously reported to be active on the basis of transduction traces seen in germline22,94 as well as cancer genomes95,96, and additionally compared our dataset with previously reported in vitro activity measurements97,98.

SV breakpoint junction analysis

The detection of homologous sequences and microhomologies flanking deletions and insertions was conducted on the primary callset, after removing calls less than 50 bp in length, using two approaches: Microhomology was quantified using the available homology output from SV calls generated by DELLY72 (v.1.2.6). We systematically generated DELLY calls for each SV by building synthetic reads carrying the respective SV allele, mapping those synthetic reads with minimap2 (ref. 64) (v.2.2.26) onto the genomic reference of the SV with 4-kb padding and calling the respective SV with DELLY. The synthetic reads consist of a 2-kb reference sequence upstream of the SV start, the inserted sequence (only for insertions and modified for larger insertions) and a 2-kb reference sequence downstream of the SV end coordinate. For insertions longer than 100 bp, we used at most the first and last 50 bp of the inserted sequence to avoid aberrant mapping of the insert, which was especially observed in the case of long insertions. Owing to the use of truncated inserts for insertions, only homologies with a length of 50 bp were considered, and larger values were set to 50 bp. The second approach aimed to capture longer stretches of homology using BLAST99-based detection of homologous stretches of DNA. For this, various pairs of search windows around both breakpoints were defined. Search windows were either symmetric with a padding of 50 bp, 100 bp, 200 bp, 400 bp, 1 kb, 2 kb, 5 kb, 10 kb, 50 kb and 100 kb, or asymmetric with a window size of ‘SV length’, which is shifted regularly along the breakpoint (0 bp upstream/‘SV length’ bp downstream; 1/6 ‘SV length’ upstream/5/6 ‘SV length’ downstream; …; ‘SV length’ upstream/0 bp downstream). From the predefined windows, only those leading to no overlap between windows were used. Potential homologies were detected using blastn 2.12.0 with -perc_identity 80 and -word_size 5, for which the sequences inside the search windows were passed using the -subject and -query parameters. To allow for equivalent analyses between deletions and insertions, insertions were implanted into the CHM13 reference genome and the search windows were defined on the modified reference sequence. BLAST results were filtered to ensure flanking homology stretches show the same directionality, span the respective SV breakpoints and contain the respective breakpoint at the relative same position inside the homology segment.

For annotation of repeat-mediated SVs, the overlap of the homologous sequences with RepeatMasker (v.4.1.2) annotations was used. For deletions, overlap of the homologous regions with the elements of the RepeatMasker track of the CHM13 reference12,22,63 (obtained from https://github.com/marbl/CHM13) was calculated with bedtools73 (v.2.31.1) intersect. Deletions were classified as repeat-mediated if at least 85% of the bases of the homologous regions on both sides intersect a RepeatMasker annotation of the same class and at least one homologous region spans 85% of the RepeatMasker element. For insertions, the homologous sequences outputted by blastn were annotated with RepeatMasker and insertions for which both homologous flanks show a reciprocal overlap of at least 85% with a RepeatMasker annotation of the same class were deemed repeat-mediated. To analyse SD-mediated deletions, all deletions were intersected with the SD track of CHM13. Hits spanning more than 85% of the homologous region and spanning more than 200 bases or 50% of the SD were defined as putatively SD-mediated.

VNTR genotyping using vamos

We performed VNTR genotyping on the long-read data from our resource using vamos (v.2.1.3)7. We used the VNTR motif annotations provided by the authors of vamos on the CMH13 reference100 to genotype VNTRs on all the samples of our dataset. We performed quality control on the VNTR calls by comparing the calls produced from these ONT reads with the VNTR calls produced from recently generated multi-platform whole-genome assemblies20, focusing on the 16 overlapping sampling between the two callsets (Supplementary Fig. 7). For each VNTR position described by the motif annotations, we compared the number of repeat units that were present in the VNTR allele called using vamos. As the VNTRs called on our dataset are read-based and the HGSVC dataset20 is genome assembly-based, the VNTR alleles of our dataset are unphased whereas the HGSVC VNTRs are phased. For allelic comparison, we paired the ONT 1kGP-based alleles with the HGSVC alleles having the smallest difference in repeat unit counts. We removed the VNTR alleles for which the HGSVC assemblies showed the VNTR to be close to the reference VNTR (when the VNTR allele from the assemblies had a base pair length between 90% and 110% of the reference VNTR allele). We compared the rest of the VNTR alleles to assess the concordance between calls on the basis of our resource and the HGSVC assembly-based calls. We also conducted a comparison for mismatched samples, verifying that random VNTR matching does not produce similar results.

Deletion recurrence analysis

To detect potential NAHR-mediated deletion recurrence, we analysed deletions shorter than 5 kb in length exhibiting a flanking sequence homology of 200–9,000 bp. The lower bound of 200 bp was introduced to account for the minimal processing length of flanking repeat sequences previously thought to be necessary for NAHR to occur52,101. The upper bound of 9,000 bp was chosen to systematically assess the role of the most abundant repeat elements in the human genome, including small- to intermediate-sized SDs as well as mobile elements, which we consider to be ideal candidate sites for SV recurrence owing to their pervasive presence as repeats in the genome providing substrates for NAHR. Additionally, to focus the analysis on potentially recurrent events, we limited our analysis to deletions with allele frequency of 40–60%. Furthermore, we used Hardy–Weinberg equilibrium and Mendelian consistency statistics to exclude events with potential genotyping errors. Last, we filtered out deletions for which phasing information was unavailable. Using the above-mentioned criteria, we retained 42 deletions for our analysis. To find evidence of recurrence, we used an approach similar to the approach we previously devised to detect recurrent inversions in the genome36. We screened for SNPs with at least 10% allele frequency lying within a 20-kb window centred around the deletion in search for positions for which we observe SNP alleles in haplotypes both with and without the deletion—which we denote recurrence-indicating SNPs. Additionally, we used centroid hierarchical clustering to cluster the SNP haplotypes in a 100-kb window centred around the deletion, in an effort to assess whether the haplotypes with and without the deletion appear together in similar clusters, a phenomenon that indicates that the event recurred in humans. For all 42 shortlisted potentially recurrent candidates, we manually inspected these visualizations of SNP haplotypes as well as read alignments from carriers of deletion and reference alleles, respectively, to confirm that genotyping was accurate, that the clustering signal was not driven by recombination and that recurrence-indicating SNPs were present on both sides of the deletion. We selected six candidates with the strongest evidence for SV recurrence upon inspection and included the visualizations as Supplementary Figs. 38–43 and as Extended Data Fig. 8.

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.