FutureHouse researchers James Braza, Siddharth Narayanan and Andrew White (left to right). Credit: FutureHouse

As artificial intelligence (AI) tools shake up the scientific workflow, Sam Rodriques dreams of a more systemic transformation. His start-up company, FutureHouse in San Francisco, California, aims to build an ‘AI scientist’ that can command the entire research pipeline, from hypothesis generation to paper production.

Today, his team took a step in that direction, releasing what it calls the first true ‘reasoning model’ specifically designed for scientific tasks. The model, called ether0, is a large language model (LLM) that’s purpose-built for chemistry, which it learnt simply by taking a test of more than 500,000 questions. Following instructions in plain English, ether0 can spit out formulas for drug-like molecules that satisfy a range of criteria.


Ether0, which is open source and publicly available from today, joins a host of other efforts aimed at automating the scientific process, including at Google and the Japanese company Sakana AI. But unlike previous specialist models, ether0 tracks its ‘train of thought’ in natural language, providing a window into AI’s ‘black box’ and allowing it to answer questions that typically require complex reasoning. Although some general-purpose reasoning models such as OpenAI o1 have shown improvement on standardized science tests, they have struggled to generate deep insights without targeted training.

Researchers have expressed a mixture of excitement and concern about FutureHouse’s advance. “I think it’s very cool what they pulled off,” says Kevin Jablonka, a digital chemist at the University of Jena in Germany. When playing around with a preview version of ether0, Jablonka found that the model could draw meaningful inferences about chemical properties that it wasn’t trained on. “That’s impressive and [something] models before couldn’t do,” he says.

AI for science

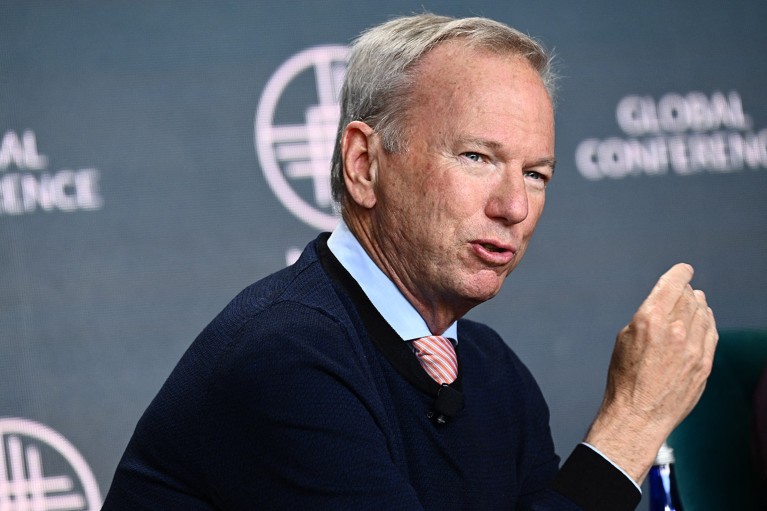

The model is the latest release from FutureHouse, which was launched in 2023 as a non-profit organization backed by former Google chief executive Eric Schmidt with the mission of expediting the scientific process with AI. In the past year, the company has released an advanced scientific literature reviewer and a platform of AI agents — LLM-based tools designed for specific tasks. These agents draw from the scientific literature and deploy molecular design tools to analyse data and answer detailed questions on drug design. In May, the team announced that it had used these models to propose a new treatment¹ for dry age-related macular degeneration, a major cause of blindness.

“Agents are going to be really useful for finding all this stuff in literature that’s just kind of staring at us in plain sight,” says Andrew White, a chemical engineer at FutureHouse who is on sabbatical from the University of Rochester in New York. But like most LLMs, the agents are fundamentally limited by the amount of chemistry information available on the Internet. “There’s right now little real-world impact of those models in labs,” says Jablonka, who led a review published² last month on the chemistry prowess of LLMs.

FutureHouse was launched in 2023 with backing from former Google chief executive Eric Schmidt. Credit: Patrick T. Fallon/AFP via Getty

To achieve something closer to true understanding, computer scientists have turned to ‘reasoning models’, such as the Chinese model DeepSeek-R1. These AIs are prompted to converse with themselves and show the work that led to their answers. Studies suggest that this internal dialogue improves their accuracy on complex questions, which led Rodriques to suspect that they might be useful for generating new research ideas.

Previously, when leading reasoning models have taken on science problems, they’ve mainly focused on passing standardized exams with basic textbook understanding, Jablonka says. “There hasn’t been one model that’s reasoning in any useful way in chemistry so far.” FutureHouse set out to change that.

Thinking time

FutureHouse researchers took a relatively small LLM from the French start-up firm Mistral AI, which is roughly 25 times smaller than DeepSeek-R1 — compact enough to run on a laptop. Rather than train the model on chemistry textbooks and papers, they found that they could let it learn from taking tests. To do this, White compiled lab-generated chemistry results from 45 scholarly papers, tracking properties such as molecular solubility and smell, and converted them into 577,790 verifiable questions.
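What makes a question ‘verifiable’ is that its answer can be checked by a program against the lab data, with no human grader in the loop. The article does not describe the actual question formats, so the following is only a minimal sketch under assumed names: the `Measurement` and `Question` schemas, the pairwise ‘which is higher?’ question type and the property values are all hypothetical illustrations, not FutureHouse’s pipeline.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class Measurement:
    """One lab-measured property of one molecule (hypothetical schema)."""
    molecule: str  # e.g. a SMILES string
    prop: str      # e.g. "solubility"
    value: float


@dataclass(frozen=True)
class Question:
    prompt: str
    answer: str  # ground truth derived from the lab data


def make_comparison_questions(rows: list[Measurement]) -> list[Question]:
    """Turn pairs of measurements of the same property into 'which is higher?' questions."""
    questions = []
    for a, b in combinations(rows, 2):
        # Only compare like with like, and skip ties, which have no single right answer.
        if a.prop != b.prop or a.value == b.value:
            continue
        higher = a if a.value > b.value else b
        prompt = f"Which molecule has the higher {a.prop}: {a.molecule} or {b.molecule}?"
        questions.append(Question(prompt, higher.molecule))
    return questions


def is_correct(question: Question, model_answer: str) -> bool:
    """Mechanical check: an answer is simply right or wrong."""
    return model_answer.strip() == question.answer


# Toy values for illustration only, not real measurements.
rows = [
    Measurement("CCO", "solubility", 1.0),
    Measurement("CCCCCCCC", "solubility", 0.1),
    Measurement("CCO", "odour_score", 0.5),  # different property, so never paired
]
qs = make_comparison_questions(rows)
print(qs[0].prompt)  # Which molecule has the higher solubility: CCO or CCCCCCCC?
print(is_correct(qs[0], "CCO"))  # True
```

Because the checker is exact and automatic, every such question can serve directly as a reward signal during training.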


The researchers taught the base model to ‘think aloud’ by asking it to read incorrect solutions and reasoning chains generated by DeepSeek-R1. Seven versions of the model each tried to solve a specific subset of the chemistry questions, receiving reinforcement rewards for correct answers. The researchers then merged the reasoning chains from those specialist models into one generalist model. After running through the question set once more, they were left with ether0.
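The article says only that the models received ‘reinforcement rewards for correct answers’. To illustrate that general idea, the sketch below applies textbook REINFORCE with a running-average baseline to a toy softmax ‘policy’ over a fixed list of candidate answers, using a binary reward from an automatic check. This is an assumption-laden stand-in: a real LLM generates free text token by token, FutureHouse’s actual algorithm may differ, and the specialist-to-generalist merging step is not shown. All function names are hypothetical.

```python
import math
import random


def softmax(logits: list[float]) -> list[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def reward(answer: str, truth: str) -> float:
    """Binary verifiable reward: 1.0 for a correct answer, 0.0 otherwise."""
    return 1.0 if answer == truth else 0.0


def train_reinforce(candidates: list[str], truth: str, steps: int = 500,
                    lr: float = 0.5, seed: int = 0) -> list[float]:
    """Toy REINFORCE: the policy learns which answer is right from rewards alone."""
    rng = random.Random(seed)
    logits = [0.0] * len(candidates)
    baseline = 0.0  # running-average reward; reduces the variance of the updates
    for _ in range(steps):
        probs = softmax(logits)
        i = rng.choices(range(len(candidates)), weights=probs)[0]  # sample an answer
        r = reward(candidates[i], truth)
        advantage = r - baseline
        # Gradient of log softmax(logits)[i] with respect to logit j is 1[j == i] - p_j.
        for j in range(len(logits)):
            grad = (1.0 if j == i else 0.0) - probs[j]
            logits[j] += lr * advantage * grad
        baseline = 0.9 * baseline + 0.1 * r
    return softmax(logits)


candidates = ["CCO", "CCCC", "c1ccccc1"]
probs = train_reinforce(candidates, truth="CCO")
print([round(p, 3) for p in probs])
```

The model is never shown the right answer directly; probability mass shifts toward "CCO" only because choosing it earns a reward, which is the property that lets a question set substitute for textbook training data.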

The team assessed ether0’s performance using a further set of questions, some of which were unrelated to those in the training set. Almost across the board, ether0 outperformed frontier models such as OpenAI’s GPT-4.1 and DeepSeek-R1. For some problem types, the model more than doubled the accuracy of its competitors. And it did so for a bargain: training a similar state-of-the-art non-reasoning model to achieve comparable accuracy on reaction predictions used 50 times more data.

But because ether0 can generate solutions only in the form of molecular formulas and reactions, it’s hard to cross-check its performance against other models and humans on independent benchmarks, Jablonka says.

Nevertheless, Jablonka found that the model could correctly reason about molecular structures that it had not been trained on — for instance, by changing a molecule’s formula to fit a particular nuclear magnetic resonance spectrum. “I didn’t expect this,” he says.

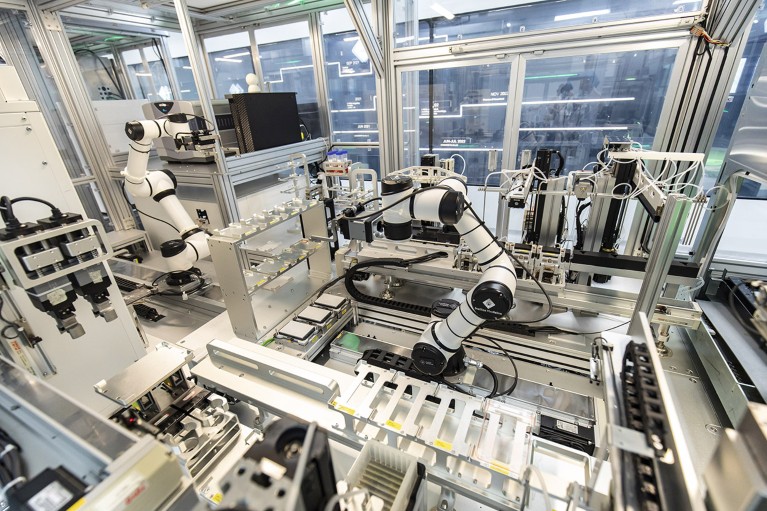

Advances in AI could pave the way for robotic laboratories that completely automate parts of the scientific process. Credit: Qilai Shen/Bloomberg via Getty

The biggest opportunity presented by these reasoning models, Rodriques says, is that “you get to see what they are thinking throughout the entire process”. His team found that if it allowed the models to reason for longer, the responses became more accurate but less legible — mixing in different languages and inventing new words. The team decided to prioritize interpretability over accuracy by limiting the reasoning time.
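The article does not say how the reasoning time was limited. One common approach, assumed here, is a token budget: the model may spend at most a fixed number of tokens inside its reasoning block, after which the block is closed for it so that it must move on to an answer. In this sketch `next_token` is a hypothetical stand-in for the model, and the `<think>`/`</think>` tag convention is borrowed from DeepSeek-R1; ether0’s own format may differ.

```python
from typing import Callable

END_THINK = "</think>"  # tag convention borrowed from DeepSeek-R1 (assumption for ether0)


def think_with_budget(
    next_token: Callable[[list[str]], str],
    context: list[str],
    max_think_tokens: int,
) -> list[str]:
    """Let the model reason for at most `max_think_tokens` tokens, then close the block."""
    out = list(context)
    for _ in range(max_think_tokens):
        tok = next_token(out)
        out.append(tok)
        if tok == END_THINK:  # the model finished reasoning on its own
            return out
    # Budget exhausted: close the reasoning block so the model must produce its answer.
    out.append(END_THINK)
    return out


def rambling(tokens: list[str]) -> str:
    """Hypothetical model that never stops thinking."""
    return "hmm"


def concise(tokens: list[str]) -> str:
    """Hypothetical model that stops after two reasoning steps."""
    return END_THINK if len(tokens) > 2 else "step"


print(think_with_budget(rambling, ["<think>"], max_think_tokens=5))
# ['<think>', 'hmm', 'hmm', 'hmm', 'hmm', 'hmm', '</think>']
print(think_with_budget(concise, ["<think>"], max_think_tokens=5))
# ['<think>', 'step', 'step', '</think>']
```

A smaller budget keeps traces short and readable at some cost in accuracy, which matches the trade-off the team describes.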