The production of fake research is now a thriving industry, thanks to paper mills. These networks sell paper authorships and poor-quality or fabricated scientific manuscripts to researchers, or violate the peer-review process by providing fake reviews. And they have become so prolific that current self-correction mechanisms no longer work.

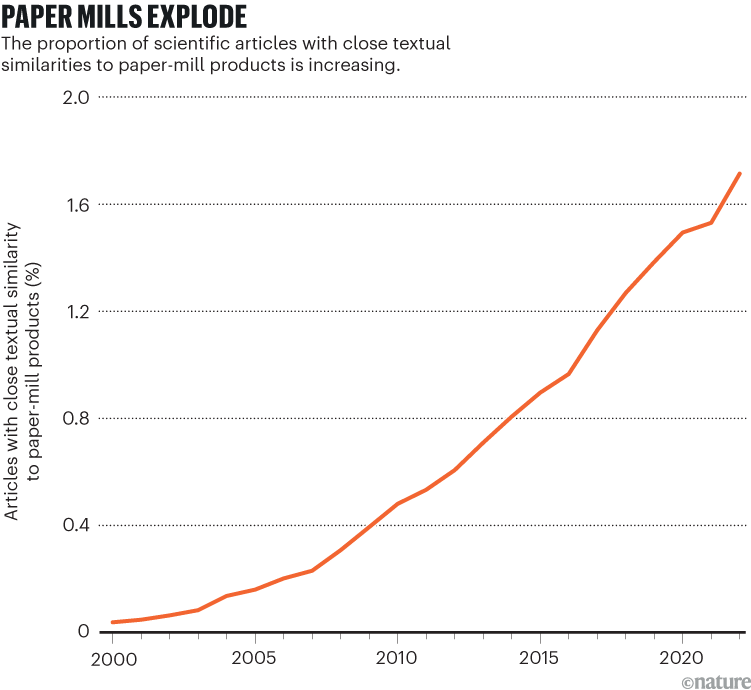

The first evidence¹ of authorships for sale was reported in 2013. The paper-mill industry has since mushroomed (see ‘Paper mills explode’). One estimate suggests that the problem might have started even earlier: at least 400,000 papers published between 2000 and 2022 show the hallmarks of having been produced by paper mills (see Nature 623, 466–467; 2023). Yet only 55,000 were retracted or corrected in the same period, according to the database of the website Retraction Watch. Fraudulent research pollutes the literature, slows down scientific progress, delays the discovery of therapies and reduces public trust in science.

Source for ‘Paper mills explode’: Adam Day, unpublished estimates

We are integrity sleuths. Scientists by training, we now spend time sniffing out publications from paper mills. We initially worked mostly alone, each specializing in a certain discipline or symptom of paper-mill activity, but in the past three years we’ve begun to join forces. Together, we are skilled at spotting the signs of a paper mill, from manipulated images to plagiarism, fake peer reviews and fake reviewers to unusual patterns of co-authors and paid-for citations.

Collectively, we’ve discovered thousands of problematic papers. We shouldn’t need to do this work, which is mostly unpaid. But each of us was drawn to sleuthing by frustration and concern about dishonest scholars and the harm that industrialized fabrication of research can do.

Despite our expertise, we have little power to tackle publications from paper mills. We depend on institutes to investigate suspicious actions by scientists, and on journal editors and publishers to correct or retract the articles that we flag. In our view, scientists, journals and publishers are woefully underprepared to do this work.

Some initiatives have begun to take on the problem. United2Act, for instance, is a collaboration of stakeholders in academic publishing — such as researchers, sleuths, publishers and institutes — that’s attempting to raise awareness of paper mills, improve post-publication correction and facilitate research on paper mills. To stem the tide of fraudulent research, the scientific community must build on this momentum by making concerted efforts to seek out and stamp out paper mills.

A welcoming environment

Paper mills flourish because of research systems that evaluate scientists using publication metrics, thereby inadvertently providing an incentive for misconduct. People with paper-mill publications might be promoted over those who have more modest, but honest, publication records. One study, for instance, reported that 95% of biomedical faculties use the number of peer-reviewed papers that a researcher has had published as a performance metric². And 40% of research-intensive institutions in the United States and Canada consider the impact factor of the journals in which an individual has had work published when making decisions about their promotion and tenure³. Institutions seldom seem to punish researchers for using paper mills, perhaps owing to a lack of awareness, or concern about reputational or legal risks.

If a paper-mill client becomes an influential researcher, they might then facilitate the production of more paper-mill publications, through academic editing roles. One of us (N.W.) was involved in an investigation that found that mills seemed to be offering money to editors in exchange for accepting publications in their journals⁴. Guest issues, organized by academics with little oversight from a journal’s editors, can be particularly problematic. Academics working for paper mills can propose themselves as guest editors, manipulate the peer-review process and accept articles from the mill.

Publishers are often slow to act on reports of paper-mill content. One of us (E.B.) reported⁵ 800 papers with apparent image duplication in 2014 and 2015, of which only half had been corrected or retracted by March 2024. There are various reasons for this. For one, retractions can damage a journal’s brand. Publishers are often concerned about the level of evidence required to justify retraction, and could be at risk of legal action by disgruntled authors. In addition, they might lack the staff to quickly tackle the problem, or staff might lack the expertise to detect specific ‘red flags’ or problematic issues with individual paper mills.

Each of us, along with other sleuths, identifies paper-mill products regularly — enough papers to overwhelm publishers with cases, making swift retractions or corrections nearly impossible. Scientists urgently need to make running and using paper mills less attractive. The following five steps would help.

Research to understand mills

With research into paper mills scant and fragmented, little is known about their business models and publication techniques⁶. It’s hard even to identify them, because they depend on staying one step ahead of publishers and sleuths, and are constantly developing fresh ways to hide their activity. Mills operating in different regions or specializing in different subjects use differing approaches to solicit business and avoid detection.

Even for papers from the same mill, this can be true. Last year, one of us (A.A.) received a message on social media from the owner of a paper mill that she had investigated. Her analytics undoubtedly deserved respect, the message read, but had many shortcomings, because the 1,000 papers she’d identified as coming from the mill were just the tip of the iceberg.

Answers to some key questions would help to focus preventive efforts⁶. These include: where are paper mills located? What fields are they targeting? And how are they using artificial intelligence (AI)?

Paper mills have been found in a wide range of countries, including China, Russia, Ukraine, Kazakhstan, India, Iran, Iraq, Latvia and Peru⁷. But there are large parts of the world in which paper mills are expected to operate but where few have been identified, notably in much of Latin America, where many journals are targeted. To make a start, local researchers and sleuths might scour social-media posts and the wider Internet to look for paper-mill offers.

Certain research fields seem to be particularly susceptible, namely those in which the number of possible experiments far exceeds the available scientific resource. Fields we know of include non-coding RNAs in human cancer and crystallography, in which vast numbers of different RNA combinations and crystal structures can potentially be investigated. In chemistry, 44% of papers that are retracted owing to fraud are published in crystallography⁸. There are sure to be other fields.

Paper mills are already exploiting large language models (LLMs) to avoid plagiarism detectors, and AI image generators to mass-produce papers. One preprint⁹ suggests that at least 10% of all PubMed abstracts published in 2024 were written with LLMs, although it is challenging to differentiate between papers from mills and those by legitimate authors who want to improve their writing. We predict further exploitation of AI-generated images to produce figures in future paper-mill products. These are likely to be difficult to detect. Journals can help by promoting open science and demanding the raw data for studies: the more information is available about papers, the easier it is to spot new tricks by paper mills.

Educate stakeholders

We’re often asked to speak at universities and conferences, and consistently come across academics and PhD students who have never heard of paper mills. By preparing people to recognize and call out fake research, the scientific community can build up immunity against paper mills.

Everyone involved in disseminating and digesting research (editors, publishers, students, authors, funding organizations, institutions, bibliographic databases and governments) needs to understand what paper mills are and know how to spot the telltale signs of fake papers. Anyone can learn to look out for image falsification or duplication in papers in their field, as well as for nonsensical text or equations, problematic references and reagents, irrelevant citations and implausible co-authorships. Free tools such as the Problematic Paper Screener¹⁰, developed by one of us (G.C.), can help researchers to spot papers that might be fraudulent.

The first step is to check whether a published paper has been flagged in a database that lists retractions and corrections, such as the Retraction Watch database, or on PubPeer — an online platform for post-publication peer review, on which errors and questionable content might be flagged. There might be innocuous reasons for retractions or PubPeer comments, but by checking these databases, researchers can identify suspicious activity. There are browser extensions and reference managers (for example, Zotero) that have integrated these databases to indicate problematic references.
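
As a rough illustration of that first check, the sketch below compares the DOIs in a reference list against a set of flagged DOIs. It is a minimal sketch under stated assumptions: the `flagged` mapping stands in for an export from a retraction database or PubPeer, the function names are hypothetical, and the DOIs are made up.

```python
def normalize_doi(doi: str) -> str:
    """Lower-case a DOI and strip common prefixes so equal DOIs compare equal."""
    doi = doi.strip().lower()
    for prefix in ("https://doi.org/", "http://doi.org/", "doi:"):
        if doi.startswith(prefix):
            doi = doi[len(prefix):]
    return doi.strip()


def flag_references(reference_dois, flagged):
    """Return {doi: reason} for every reference that appears in `flagged`."""
    lookup = {normalize_doi(d): reason for d, reason in flagged.items()}
    hits = {}
    for doi in reference_dois:
        key = normalize_doi(doi)
        if key in lookup:
            hits[key] = lookup[key]
    return hits


# Hypothetical data: a real check would load these from a database export.
flagged = {
    "10.1234/example.001": "retracted",
    "10.1234/example.002": "comment on PubPeer",
}
references = [
    "https://doi.org/10.1234/EXAMPLE.001",  # same DOI, different spelling
    "doi:10.1234/example.777",              # not flagged
]

hits = flag_references(references, flagged)
print(hits)
```

A hit is only a prompt to read the notice or comment itself, because, as noted above, there can be innocuous reasons for a retraction or a PubPeer comment.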

Universities should invest in education on paper mills as a standard part of PhD courses and training for staff, and should put in place clear policies that detail how to report a suspected paper-mill article. They should support researchers who report suspicious papers. Journal editors and research-integrity experts should receive regularly updated training organized by publishers or sleuths, and publishers should share experiences of new types of fraud with one another.

Ensure best practice in publishing

The best time to detect fraudulent research is before it is published. Publishers should work together to identify papers submitted simultaneously to multiple journals, a common paper-mill tactic for increasing the probability of publication. The STM Integrity Hub, set up in 2023, offers a platform to check articles for research-integrity issues, and is currently used by more than 25 publishers and societies. Its duplicate-submission-checker tool screens around 60,000 papers per month, identifying around 1.5% of submissions as duplicates.
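
To make the idea of cross-journal duplicate screening concrete, here is a minimal sketch that compares submission titles across journals. It is not the STM Integrity Hub's method, which is not described here. The title-similarity approach, the 0.9 threshold, the function names and the sample submissions are all assumptions chosen for illustration.

```python
import re
from difflib import SequenceMatcher
from itertools import combinations


def normalize_title(title: str) -> str:
    """Lower-case a title and collapse punctuation and spacing."""
    return " ".join(re.sub(r"[^a-z0-9]+", " ", title.lower()).split())


def find_duplicates(submissions, threshold=0.9):
    """Return (journal, journal, score) for near-identical titles
    submitted to two different journals."""
    pairs = []
    for (j1, t1), (j2, t2) in combinations(submissions, 2):
        if j1 == j2:
            continue  # only cross-journal pairs are of interest here
        score = SequenceMatcher(
            None, normalize_title(t1), normalize_title(t2)
        ).ratio()
        if score >= threshold:
            pairs.append((j1, j2, round(score, 2)))
    return pairs


# Hypothetical submissions: (journal, title).
submissions = [
    ("Journal A", "Role of lncRNA X in gastric cancer progression"),
    ("Journal B", "Role of LncRNA-X in Gastric Cancer Progression."),
    ("Journal C", "Crystal structure of a novel zinc complex"),
]

duplicates = find_duplicates(submissions)
print(duplicates)
```

Comparing every pair of titles would not scale to real volumes; a production system would need indexing and would probably compare abstracts, author lists and full text too. For a sense of scale, 1.5% of 60,000 screened papers is roughly 900 duplicate submissions per month.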

Editors should look out for violations of the peer-review process. These might include authors suggesting peer reviewers with fake identities or affiliations. Anomalies in e-mails, such as a domain that doesn’t correspond with the university or affiliation country of a scholar, can be a telltale sign of a fake peer reviewer or author — either someone impersonating a real scholar or a completely fake identity. Publishers can verify peer reviewers by checking for indicators of honesty known as trust markers, which include an institutional e-mail address and a publication record in which no papers are flagged on PubPeer or elsewhere. Groups of collaborators from disciplines that rarely interact, publishing far outside their own fields, might also indicate that a paper warrants thorough scrutiny.
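
The e-mail and trust-marker checks described above can be sketched in a few lines. This is an illustration only: the reviewer records, the `flagged_papers` field, the function names and the `example.edu` domain are hypothetical, and a real system would need a maintained list of institutional domains.

```python
def email_domain(email: str) -> str:
    """Return the lower-cased domain part of an e-mail address."""
    return email.rsplit("@", 1)[-1].strip().lower()


def domain_matches(email: str, institutional_domains) -> bool:
    """True if the address is at an institutional domain or a subdomain of one."""
    domain = email_domain(email)
    return any(
        domain == d or domain.endswith("." + d) for d in institutional_domains
    )


def trust_markers(reviewer: dict, institutional_domains) -> dict:
    """Evaluate the two trust markers named in the text for one reviewer."""
    return {
        "institutional_email": domain_matches(
            reviewer["email"], institutional_domains
        ),
        "no_flagged_papers": reviewer["flagged_papers"] == 0,
    }


# Hypothetical data for illustration.
domains = {"example.edu"}
genuine = {"email": "a.person@chem.example.edu", "flagged_papers": 0}
lookalike = {"email": "a.person@example-edu.com", "flagged_papers": 2}

print(trust_markers(genuine, domains))
print(trust_markers(lookalike, domains))
```

A failed marker is a reason for closer scrutiny rather than proof of fraud: many legitimate scholars use personal e-mail addresses.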

A rigorous industry-wide system for author disambiguation would also help to detect authors or editors who are repeatedly associated with paper-mill activity. Often it’s hard to be sure whether a single dubious paper is from a paper mill, but when many such articles can be linked to a single author or editor, then the probability of paper-mill involvement is greater. Verified scholar identification such as that provided through ORCID is not enough¹¹: it must be built into a system that also includes trust markers.
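
The point about repeated associations can be illustrated with a simple count of flagged papers per disambiguated identifier. This is a sketch under assumptions: it presumes each person already has one reliable identifier, which is precisely what author disambiguation would have to provide, and the identifiers, the threshold of three and the function name are hypothetical.

```python
from collections import Counter


def repeat_associations(flagged_papers, min_count=3):
    """Count flagged papers per author or editor identifier and
    return those linked to at least `min_count` of them."""
    counts = Counter()
    for paper in flagged_papers:
        # A set avoids double counting someone listed twice on one paper.
        for person_id in set(paper["person_ids"]):
            counts[person_id] += 1
    return {pid: n for pid, n in counts.items() if n >= min_count}


# Hypothetical flagged papers with made-up identifiers.
flagged_papers = [
    {"title": "Paper 1", "person_ids": ["id-0001", "id-0002"]},
    {"title": "Paper 2", "person_ids": ["id-0001", "id-0003"]},
    {"title": "Paper 3", "person_ids": ["id-0001", "id-0002"]},
    {"title": "Paper 4", "person_ids": ["id-0004"]},
]

repeated = repeat_associations(flagged_papers)
print(repeated)
```

A high count raises the probability of paper-mill involvement but does not establish it, so the output is best treated as a list of cases to investigate.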