Imagine Kgomotso Mathabe’s surprise when, in January, a colleague alerted her that a video of her promoting a fake drug to treat erectile dysfunction was doing the rounds on social media. She’d done no such thing.

“It was a video of me saying there’s this new drug based on research that I’ve been involved in,” says the South African urologist, who splits her time between the Steve Biko Academic Hospital in Pretoria and the University of Pretoria. It was realistic enough for family friends to begin asking why they saw her face every time they went on Facebook.

The video of Mathabe was a deepfake, generated using artificial intelligence (AI) technology trained on real video and audio material. Such videos have become difficult to distinguish from the real thing, as well as easier and cheaper to make, so their harmful use is a growing concern.

Mathabe, a self-professed social-media recluse, did not know what to do. At first, she assumed the main purpose of the video was to sell fake drugs, a common scourge in South Africa, where handwritten notices advertising healing are a familiar sight in public spaces.

But it was worse than that. The video directed users to a website where they were asked to enter their banking details to receive the drug. Those who did so had money siphoned out of their account, often several times, and received no medicine in return.

Urologist Kgomotso Mathabe was a target of a deepfake video and other faked advertisements, such as this one (left) for an erectile dysfunction treatment, which took an original photo of her (right) and edited it to make it look like she was wearing a white coat and stethoscope. Credit: Courtesy of Kgomotso Mathabe

Soon, the phones at Mathabe’s practice were swamped by irate callers accusing her of stealing from them. “That’s when I realized, this is serious,” she says.

A proliferating problem

Mathabe is not alone. In India, diabetes specialist Viswanathan Mohan has been featured in several deepfake videos, including one in which he seems to be talking in Hindi, a language that he doesn’t speak.

“My name is synonymous with diabetes in India. So, whatever I say about diabetes is taken as the gospel truth,” says Mohan, who chairs an International Diabetes Federation centre of excellence in Chennai. That means that the use of his name or profile by scammers to sell fake drugs is nothing new.

But lately the attacks have become more sophisticated, and harder to debunk. “With AI, they can make an image look and speak exactly like me, with my mannerisms,” he says. In one video, he is depicted as saying that people could live to 100 years if they take a certain herbal product.

Diabetes researcher Viswanathan Mohan has been the target of several deepfake videos and says they present both a reputational and a professional risk to researchers, who should work to get them taken down or labelled as fake. Credit: Dr V Mohan

Such videos pose a reputational risk, as well as a professional one because, Mohan says, he could face legal action from bodies such as India’s medical association. In 2022, the association sued the Indian herbal-products company Patanjali Ayurved, based in Haridwar, for alleged false advertising over claims that its products could cure a range of ailments.

Attack on scientists’ credibility

Discussions about the dangers of deepfakes have so far focused on politicians and celebrities. A video of the rapper Snoop Dogg reading tarot cards might seem harmless, but the same technology has been used to generate pornographic images of singer-songwriter Taylor Swift. Deepfaked voice recordings have been used to sow disinformation in elections from Slovenia to Nigeria, and most people in the United States expect AI abuses to affect this year’s US presidential election. But AI researchers say that scientists — particularly those in the public eye — are also at risk.

“When you think of ways to spread misinformation, you want to manipulate what people think are the trusted sources of information,” says Christopher Doss, a quantitative researcher who works in Washington DC for the RAND Corporation, a non-profit policy-research think tank. So, deepfakes involving scientists “are probably going to be something that we see more of”, he says.

Last year, Doss published a study with colleagues at RAND, Carnegie Mellon University in Pittsburgh, Pennsylvania, and the Challenger Center in Washington DC to test the ability of US schoolchildren, university students and adults to distinguish fake science-information videos from real ones; between 27% and 50% of the respondents could not identify the fakes. The videos featured well-known climate commentators, including activist Greta Thunberg and climate doubter Richard Lindzen, a retired atmospheric physicist formerly at the Massachusetts Institute of Technology in Cambridge; all of the clips were generated from publicly available material.

“Deepfakes definitely aren’t perfect, but we’re at the point now where they’re probably good enough to fool at least a substantial percentage of people,” says Doss. And generating one doesn’t require the technical expertise that it used to, he adds.

Despite current efforts, few technological means are available to stop legitimate videos being used to generate deepfakes, says Siwei Lyu, a specialist in machine learning and digital media at the University at Buffalo in New York.

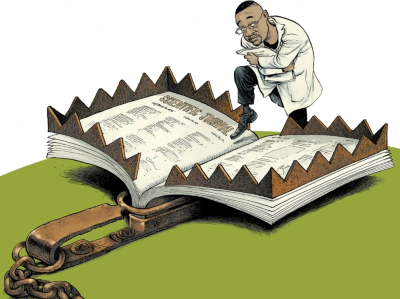

I’m worried I’ve been contacted by a predatory publisher — how do I find out?

He says that scientists should be cautious when sharing media on social platforms, but adds that this can be difficult for those who often participate in media interviews or give presentations. At the very least, he says, scientists should make a habit of saving clips that feature them.

“Having the original version of the video helps to debunk the fake,” he says, because many deepfake videos are made from authentic ones by lip-syncing them to different messages. Posting the original version of a clip is one way of combating such misinformation.

Damage control

But what if the worst were to happen? Once a deepfake is out in the world, there are things that scientists can do to minimize the negative impacts, both professionally and personally, says Jeannie Paterson, a law researcher at the University of Melbourne in Australia who specializes in consumer safety and reliable AI.

“Let’s say, you wake up and all of a sudden your face is being used to promote something that you would never have promoted. First of all, if this happens to you, breathe,” she says.

The next step is to contact the social-media platform on which the material is being shared and ask that it be taken down. Platforms such as Facebook, YouTube and Instagram allow users to report ads that are misleading or scams, and if there’s a high risk of harm from the misinformation — such as fake drugs being sold — they are more likely to act, Paterson says.

Meta, which owns Facebook and Instagram, says it removes misinformation “where it is likely to directly contribute to the risk of imminent physical harm”, if it’s likely to influence political processes or if it’s “highly deceptive”. The company says it partners with independent experts to assess the truth of the content and decide whether it constitutes grounds for takedown. Meta has also introduced ‘AI info’ labels for video, audio and images that have been modified with AI technology; it says these labels are assigned either on the grounds of telltale AI signs in the content or when users disclose that the content is AI-generated.

Scientists featured in deepfakes should also contact their employers, Paterson says — perhaps both their immediate supervisors and their department heads, who might in turn reach out to the human-resources department. If applicable, they should also notify the body that regulates their profession, such as a medical board or other professional society, she says; that’s because being featured in a deepfake video endorsing fake drugs, say, could jeopardize their accreditation. These organizations can also help to disseminate a correction. And the faster this can come out, the better, she says, because the more a video spreads, the greater the potential harm.

Scrutinizing the video itself is another important step, even though it might be emotionally taxing. Asking a trusted colleague or friend to help is a good idea, says Paterson. “I’d sit down with somebody who’s a bit techie, and watch the video, maybe at a slower speed, so you can spot the inconsistencies or failings that suggest it’s a deepfake,” she says. Telltale signs include shadows not looking quite right, or a lack of coordination between the mouth and the rest of the face.

It might also be a good idea to go to the police or look into legal action, says Paterson — especially if the video poses a serious threat to a scientist’s reputation, or if it is used to commit a crime. Typically, it’s criminal fraud to use a fake image to sell a fake product, says Paterson, and police might choose to pursue and prosecute those responsible for such conduct. Such deepfakes also involve misrepresentation, which is a civil wrong and can be reported to consumer-protection regulators, she notes. And victims of deepfake videos can seek damages for defamation in court. However, it is often not possible to identify a perpetrator, she adds. “They are, after all, a fraudster.”

Not a victimless crime

In South Africa, Mathabe went to the police after colleagues began to fear for her safety, owing to the number of complaints coming in from people targeted by the financial scam that her face had been used to promote. She says that having a case number helped her to field complaints from people who had been cheated out of their money. But going to the police didn’t result in prosecution. It was a challenge to explain the nature of the incident to the officers on duty, she says. They suggested she take the legal route and open a case for defamation of character. When she asked about adding cybercrime as an aspect of her complaint, the officers said they did not have the funds to pursue large overseas companies such as Meta.

Kgomotso Mathabe, a urologist in South Africa, says that filing a police report, and realizing that fraudsters targeting her was not personal, helped her to move on from the deepfake video scam. Credit: Discovery Foundation

Taking the legal route can also be costly, and it’s not clear where scientists featured in deepfakes can go for help. The British Medical Association, a doctors’ union, offers members legal support for employment-law disputes only. Some countries have online safety laws that assist victims in removing nonconsensual images of an intimate nature, but these laws rarely cover misinformation or hijacking somebody’s public profile to sell fake products. For example, the United Kingdom’s online-safety law, enacted last year, has been criticized for being too soft on mis- and disinformation campaigns, even though it creates a new offence for false communications.

Mohan says he reports the videos in which he appears to India’s cybersecurity police, but that there isn’t much they can do. “They tell you they get thousands of complaints every day.”

He thinks these deepfake scams could be more common in low-income countries than in wealthy ones, because higher levels of poverty could be driving more people to cybercrime. Weaker control of the sale of counterfeit or fake drugs, and lower health literacy, could also play a part, he adds.

But with the quality of the technology improving, he thinks nowhere will be safe. “There must be crooks everywhere,” he says.

In Pretoria, Mathabe says that things have quietened down since the flurry at the start of the year. There have been no phone calls to her clinic for a while. She says she doesn’t know what happened to the people who were scammed out of their savings, and no progress has been made in her police case.

She can now look back on the ordeal and realize how traumatic it was, especially at the beginning, when she was trying to work out why she had been targeted. “I wondered, who doesn’t like me this much?”

But then, as she learnt more about deepfakes and scamming, she realized that the criminals did not care about her at all. Hearing that others have been targeted in a similar way also helped, she says. “Then it felt less personal.”