Wilsdon, J. et al. The Metric Tide: Report of the Independent Review of the Role of Metrics in Research Assessment and Management (HEFCE, 2015); https://doi.org/10.13140/RG.2.1.4929.1363.

Curry, S., Gadd, E. & Wilsdon, J. Harnessing the Metric Tide: Indicators, Infrastructures & Priorities for UK Responsible Research Assessment – Report of The Metric Tide Revisited Panel (RORI, 2022); https://doi.org/10.6084/m9.figshare.21701624.

Fauzi, M. A., Tan, C. N. L., Daud, M. & Awalludin, M. M. N. University rankings: a review of methodological flaws. Issues Educ. Res. 30, 79–96 (2020).

Gadd, E. Mis-measuring our universities: why global university rankings don’t add up. Front. Res. Metr. Anal. https://doi.org/10.3389/frma.2021.680023 (2021).

Parker, J. Comparing research and teaching in university promotion criteria. High. Educ. Q. 62, 237–251 (2008).

McKiernan, E. et al. Meta-research: use of the journal impact factor in academic review, promotion, and tenure evaluations. eLife 8, e47338 (2019).

Rice, D. B., Raffoul, H., Ioannidis, J. P. & Moher, D. Academic criteria for promotion and tenure in biomedical sciences faculties: cross sectional analysis of international sample of universities. Br. Med. J. 369, m2081 (2020).

Rice, D. B., Raffoul, H., Ioannidis, J. P. & Moher, D. Academic criteria for promotion and tenure in faculties of medicine: a cross-sectional study of the Canadian U15 universities. FACETS 6, 58–70 (2021).

Pontika, N. et al. Indicators of research quality, quantity, openness and responsibility in institutional review, promotion and tenure policies across seven countries. Quant. Sci. Stud. 3, 888–911 (2022).

Muller, J. Z. The Tyranny of Metrics (Princeton Univ. Press, 2018).

Polese, A. The SCOPUS Diaries and the (Il)Logics of Academic Survival – A Short Guide to Design Your Own Strategy and Survive Bibliometrics, Conferences, and Unreal Expectations in Academia (Ibidem, 2019).

Ter Bogt, H. J. & Scapens, R. W. Performance management in universities: effects of the transition to more quantitative measurement systems. Eur. Account. Rev. 21, 451–497 (2012).

Dominik, M. Research Assessment: Recognising the asset of diversity for scholarship serving society. ESO on-line conference: The Present and Future of Astronomy (14–18 February 2022). Zenodo https://doi.org/10.5281/zenodo.6246171 (2022).

Moore, S., Neylon, C., Eve, M. P., O’Donnell, D. P. & Pattinson, D. Excellence R Us: university research and the fetishisation of excellence. Palgrave Commun. 3, 16105 (2017).

Binswanger, M. in Opening Science (eds Bartling, S. & Frieseke, S.) 49–72 (Springer, 2014).

Kulczycki, E. The Evaluation Game – How Publication Metrics Shape Scholarly Communication (Cambridge Univ. Press, 2023).

Mryglod, O., Kenna, R., Holovatch, Y. & Berche, B. Comparison of a citation-based indicator and peer review for absolute and specific measures of research-group excellence. Scientometrics 97, 767–777 (2013).

Abramo, G., Cicero, T. & D’Angelo, C. A. National peer-review research assessment exercises for the hard sciences can be a complete waste of money: the Italian case. Scientometrics 95, 311–324 (2013).

D’Ippoliti, C. ‘Many-citedness’: citations measure more than just scientific quality. J. Econ. Surv. 35, 1271 (2021).

Hicks, D., Wouters, P., Waltman, L., de Rijcke, S. & Rafols, I. Bibliometrics: The Leiden Manifesto for research metrics. Nature 520, 429–431 (2015).

Pudovkin, A. I. Comments on the use of the journal impact factor for assessing the research contributions of individual authors. Front. Res. Metr. Anal. https://doi.org/10.3389/frma.2018.00002 (2018).

INORMS Research Evaluation Group. The SCOPE Framework: A Five-Stage Process for Evaluating Research Responsibly (Emerald Publishing, 2021); https://inorms.net/wp-content/uploads/2022/03/21655-scope-guide-v10.pdf.

Moher, D. et al. The Hong Kong Principles for assessing researchers: fostering research integrity. PLoS Biol. 18, e3000737 (2020).

Paruzel-Czachura, M., Baran, L. & Spendel, Z. Publish or be ethical? Publishing pressure and scientific misconduct in research. Res. Ethics 17, 375–397 (2021).

Fanelli, D. How many scientists fabricate and falsify research? A systematic review and meta-analysis of survey data. PLoS ONE 4, e5738 (2009).

UNESCO Recommendation on Open Science. Adopted by the 41st session of the General Conference (9–24 Nov 2021), UNESDOC Digital Library, Document Code SC-PCB-SPP/2021/OS/UROS (United Nations Educational, Scientific and Cultural Organization, 2021); https://unesdoc.unesco.org/ark:/48223/pf0000379949.locale=en.

Dominik, M. et al. Publishing Models, Assessment, and Open Science (Global Young Academy, 2018); https://globalyoungacademy.net/wp-content/uploads/2018/10/APOS-Report-29.10.2018.pdf.

Saenen, B., Morais, R., Gaillard, V. & Borrell-Damián, L. Research Assessment in the Transition to Open Science: 2019 EUA Open Science and Access Survey Results (European University Association, 2019); https://eua.eu/downloads/publications/research%20assessment%20in%20the%20transition%20to%20open%20science.pdf.

San Francisco Declaration on Research Assessment (DORA) https://sfdora.org/ (DORA, accessed 10 November 2023).

A New Research Assessment towards a Socially Relevant Science in Latin America and the Caribbean (Latin American Council of Social Sciences (CLACSO), 2022); https://biblioteca-repositorio.clacso.edu.ar/bitstream/CLACSO/169747/1/Declaration-of-Principes.pdf.

de Rijcke, S. et al. The Future of Research Evaluation: A Synthesis of Current Debates and Developments (IAP/GYA/ISC, 2023); https://www.interacademies.org/publication/future-research-evaluation-synthesis-current-debates-and-developments.

Towards a Reform of the Research Assessment System: Scoping Report (European Commission, Directorate-General for Research and Innovation, 2021); https://data.europa.eu/doi/10.2777/707440.

Agreement on Reforming Research Assessment (Coalition for Advancing Research Assessment, 2022); https://coara.eu/app/uploads/2022/09/2022_07_19_rra_agreement_final.pdf.

Room for Everyone’s Talent – Towards a New Balance in the Recognition and Reward of Academics (VSNU, NFU, KNAW, NWO and ZonMw, 2019); https://www.nwo.nl/sites/nwo/files/media-files/2019-Recognition-Rewards-Position-Paper_EN.pdf.

Working Group for Responsible Evaluation of a Researcher. Good Practice in Researcher Evaluation. Recommendation for the Responsible Evaluation of a Researcher in Finland. Responsible Research Series 7:2020 (The Committee for Public Information (TJNK) and Federation of Finnish Learned Societies (TSV), 2020); https://doi.org/10.23847/isbn.9789525995282.

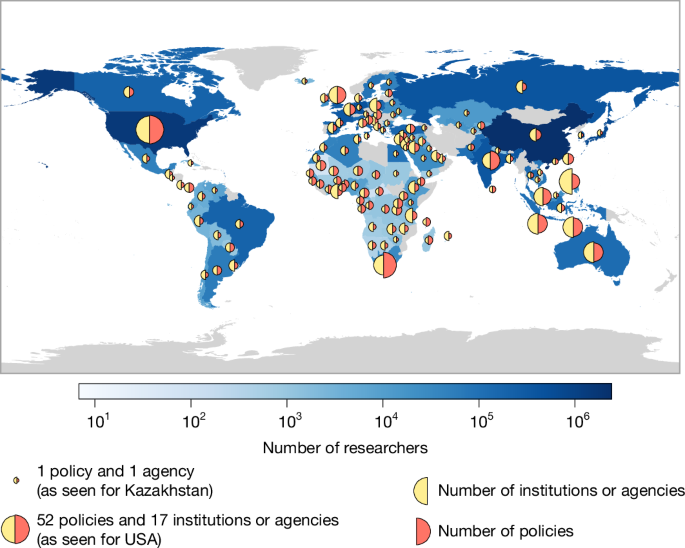

UIS Data Centre. Science, Technology and Innovation: Research and Experimental Development (9.5.2) Researchers (in full-time equivalent) per million inhabitants http://data.uis.unesco.org/index.aspx?queryid=3685 (UNESCO, accessed 5 February 2024).

Researchers in R&D (per Million People) [SP.POP.SCIE.RD.P6] (UNESCO Institute for Statistics Bulk Data Download Service, accessed 27 November 2023); https://apiportal.uis.unesco.org/bdds.

UNESCO Science Report: Towards 2030. Second revised edition (UNESCO Publishing, 2016); https://doi.org/10.18356/9789210059053.

UNESCO Science Report: The Race Against Time for Smarter Development (eds Schneegans, S. et al.) (UNESCO Publishing, 2021); https://doi.org/10.18356/9789210058575.

Bangladesh Bureau of Statistics. Indicators 9.5.2: Researchers (in Full-Time Equivalent) per Million Inhabitants (2022) (SDG Tracker – Bangladesh’s Development Mirror, accessed 29 February 2024); https://sdg.gov.bd/page/indicator-wise/1/101/3/0.

National Statistical Committee of the Kyrgyz Republic. Indicator 9.5.2 – Researchers (in Full-Time Equivalent) per Million Inhabitants (2022) (Sustainable Development Goals in the Kyrgyz Republic, accessed 29 February 2024); https://sustainabledevelopment-kyrgyzstan.github.io/en/9-5-2/#:~:text=Year%2C%202017%2C%202018%2C%202019%2C%202020%2C%20Value%2C%20524%2C%20555%2C%20527%2C%20534%2C.

Number of Research Personnel per 10,000 Population in Taiwan from 2011 to 2021 (Statista, accessed 29 February 2024); https://www.statista.com/statistics/324708/taiwan-number-of-researchers-per-10000-population/.

Hoffmeister, O. Development Status as a Measure of Development. United Nations Conference on Trade and Development (UNCTAD) Research Paper 46 (UN, 2020); https://doi.org/10.18356/a29d2be8-en.

Country Classification: World Bank Country and Lending Groups. Fiscal Year 2012 (World Bank, accessed 22 January 2022); https://datahelpdesk.worldbank.org/knowledgebase/articles/906519-world-bank-country-and-lending-groups.

Working Party of National Experts on Science and Technology Indicators. Revised Field of Science and Technology (FoS) Classification in the Frascati Manual DSTI/EAS/STP/NESTI(2006)19/FINAL (Organisation for Economic Co-operation and Development, 2007).

Seeber, M., Debacker, N., Meoli, M. & Vandevelde, K. Exploring the effects of mobility and foreign nationality on internal career progression in universities. High. Educ. https://doi.org/10.1007/s10734-022-00878-w (2022).

Lopez-Verges, S. et al. Call to action: supporting Latin American early career researchers on the quest for sustainable development in the region. Front. Res. Metr. Anal. 6, 657120 (2021).

Dominik, M. et al. Open science – for whom? Data Sci. J. 21, 1 (2022).

Corsi, M., D’Ippoliti, C. & Zacchia, G. Diversity of backgrounds and ideas: the case of research evaluation in economics. Res. Policy 48, 103820 (2019).

Hirsch, J. E. An index to quantify an individual’s research output. Proc. Natl Acad. Sci. USA 102, 16569–16572 (2005).

Valenzuela-Toro, A. M. & Viglino, M. How Latin American researchers suffer in science. Nature 598, 374–375 (2021).

Smith, K. M., Crookes, E. & Crookes, P. A. Measuring research ‘impact’ for academic promotion: issues from the literature. J. High. Educ. Policy Manag. 35, 410–420 (2013).

Necker, S. Scientific misbehavior in economics. Res. Policy 43, 1747–1759 (2014).

Täuber, S. & Mahmoudi, M. How bullying becomes a career tool. Nat. Hum. Behav. 6, 475 (2022).

Aubert Bonn, N., De Vries, R. G. & Pinxten, W. The failure of success: four lessons learned in five years of research on research integrity and research assessment. BMC Res. Notes 15, 309 (2022).

Anderson, M. S., Ronning, E. A., De Vries, R. & Martinson, B. C. The perverse effects of competition on scientists’ work and relationships. Sci. Eng. Ethics 13, 437–461 (2007).

Smaldino, P. E. & McElreath, R. The natural selection of bad science. R. Soc. Open Sci. 3, 160384 (2016).

Aubert Bonn, N. & Bouter, L. in Handbook of Bioethical Decisions Vol. II. Collaborative Bioethics, Vol. 3 (eds Valdés, E. & Lecaros, J. A.) (Springer, 2023).

Hall, K. L. et al. The science of team science: a review of the empirical evidence and research gaps on collaboration in science. Am. Psychol. 73, 532–548 (2018).

Scott, J. T. Research diversity and public policy toward invention. Soc. Sci. Res. Netw. https://doi.org/10.2139/ssrn.4251768 (2022).

D’Ippoliti, C. Democratizing the Economics Debate: Pluralism and Research Evaluation (Routledge, 2022).

Goodhart, C. A. E. in Monetary Theory and Practice 91–121 (Palgrave, 1984).

Hoskin, K. in Accountability: Power, Ethos and the Technologies of Managing (eds Munro, R. & Mouritsen, J.) 265–282 (International Thomson Business, 1996).

Dawson, D. et al. The role of collegiality in academic review, promotion, and tenure. PLoS ONE 17, e0265506 (2022).

Pepper, J., Krupińska, O. D., Stassun, K. G. & Gelino, D. M. What does a successful postdoctoral fellowship publication record look like? Pub. Astron. Soc. Pac. 131, 014501 (2019).

Fernandes, J. D. et al. Research culture: a survey-based analysis of the academic job market. eLife 9, e54097 (2020).

Aubert Bonn, N. & Pinxten, W. Advancing science or advancing careers? Researchers’ opinions on success indicators. PLoS ONE 16, e0243664 (2021).

Ross-Hellauer, T., Klebel, T., Knoth, P. & Pontika, N. Value dissonance in research(er) assessment: individual and perceived institutional priorities in review, promotion, and tenure. Sci. Public Policy https://doi.org/10.1093/scipol/scad073 (2023).

Becerril-García, A. & Aguado-López, E. Redalyc – AmeliCA: A Non-Profit Publishing Model to Preserve the Scholarly and Open Nature of Scientific Communication (United Nations Educational, Scientific and Cultural Organization; Latin American Council of Social Sciences; Network of Scientific Journals from Latin America and the Caribbean, Spain and Portugal; Autonomous University of the State of Mexico; National University of La Plata; University of Antioquia, 2019).

United Nations Geoscheme. Standard country or area codes for statistical use (M49) https://unstats.un.org/unsd/methodology/m49/ (United Nations Statistics Division, accessed 10 November 2023).

Harman, H. H. Modern Factor Analysis 3rd edn (Univ. Chicago Press, 1976).

Huber, P. J. The behavior of maximum likelihood estimates under nonstandard conditions. In Proc. 5th Berkeley Symposium on Mathematical Statistics and Probability Vol. 1, 221–233 (Univ. California Press, 1967).

White, H. L. A heteroskedasticity-consistent covariance matrix estimator and a direct test for heteroskedasticity. Econometrica 48, 817–838 (1980).

Li, B. H. et al. A global assessment of academic promotion criteria: what really counts? Figshare https://figshare.com/s/f8aa5ab402440a9a7933 (2024).