Machine-learning framework

The Bayesian posterior, p(θ|d) = p(d|θ)p(θ)/p(d), is defined in terms of a prior p(θ) and a likelihood p(d|θ). For GW inference, the likelihood is constructed by combining models for waveforms and detector noise. The Bayesian evidence, p(d), corresponds to the normalization of the posterior and can be used for model comparison.

Our framework is based on neural posterior estimation (NPE)40,41,42, which trains a density estimation neural network, q(θ|d), to estimate p(θ|d). We parameterize q(θ|d) with a conditional normalizing flow43,44. Training minimizes the loss L = Ep(θ,d)[−log q(θ|d)], where the expectation value is computed across a dataset (θi,di) of parameters, θi ~ p(θ), paired with corresponding likelihood simulations, di ~ p(d|θi). After training, q(θ|d) serves as a surrogate for p(θ|d) and inference for any observed data, do, can be performed by sampling θ ~ q(θ|do). With DINGO19,20 using a group-equivariant formulation of NPE (GNPE19,45), the GW data are simplified by aligning coalescence times in the different detectors. However, this comes at the cost of longer inference times, so we do not use GNPE for DINGO-BNS.

At inference, we correct for potential inaccuracies of q(θ|d) using importance sampling20, by assigning weight, wi = p(d|θi)p(θi)/q(θi|d), to each sample, θi ~ q(θi|d). A set of n weighted samples (wi,θi) corresponds to \(n_\texteff=\left(\sum _iw_i\right)^2\,/\,\left(\sum _iw_i^2\right)\) effective samples from the posterior, p(θ|d). This reweighting enables asymptotically exact results and the sample efficiency, ϵ = neff/n, serves as a performance metric. The normalization of the weights further provides an unbiased estimate of the Bayesian evidence, \(p(\bfd)=(\sum _iw_i)/n\).

Below, we describe in more detail the technical innovations of DINGO-BNS that enable scaling of this framework to BNS signals.

Prior conditioning

An NPE model, q(θ|d), estimates the posterior, p(θ|d), for a fixed prior, p(θ). Choosing a broad prior enhances the general applicability of the NPE model, but it also implies worse tuning to specific events (for which smaller priors may be sufficient). This is a general trade-off in NPE, but it is particularly notable for BNS inference, where typical events constrain the chirp mass to around 10−3 of the prior volume. Thus, for an individual BNS event, a tight chirp mass prior would have been sufficient (Extended Data Fig. 1b) and moreover would have enabled effective heterodyning27,46,47. However, to cover generic BNS events, we need to train the NPE network with a large prior (Extended Data Table 2).

We resolve this trade-off with a new technique called prior conditioning. The key idea is to train an NPE model with multiple different (restricted) priors simultaneously. Training a prior-conditioned model requires hierarchical sampling:

$$\boldsymbol\theta \sim p_\boldsymbol\rho (\boldsymbol\theta ),\boldsymbol\rho \sim \widehatp(\boldsymbol\rho ),$$

(2)

where pρ(θ) is a prior family parameterized by ρ and \(\widehatp(\boldsymbol\rho )\) is a corresponding hyperprior. We additionally condition the NPE model, q(θ|d,ρ), on ρ. This model can then perform inference for any desired prior, pρ(θ), by simply providing the corresponding ρ. This effectively amortizes the training cost over different choices of the prior. On each of the restricted priors, we are furthermore allowed to transform the data in a ρ-dependent way. This is because, having been conditioned on the prior choice, the network has all the information necessary to properly interpret the transformed data. We use this freedom to heterodyne the GW strain with respect to the approximate chirp mass.

To apply prior conditioning for the chirp mass, \(\mathcalM\), we use a set of priors, \(p_\widetilde\mathcalM(\mathcalM)=U_m_1,m_2(\widetilde\mathcalM-\Delta \mathcalM,\widetilde\mathcalM+\Delta \mathcalM)\). Here, Um1,m2(\(\mathcalM\)min, \(\mathcalM\)max) denotes a distribution over \(\mathcalM\) with support, [\(\mathcalM\)min, \(\mathcalM\)max], within which component masses, m1, m2, are uniformly distributed. We use a fixed ∆\(\mathcalM\) = 0.005 M⊙ and choose a hyperprior, \(\hatp(\widetilde\mathcalM)\), covering the expected range of \(\mathcalM\) for LVK detections of BNS (Extended Data Table 2). Because ∆\(\mathcalM\) is small, \(\widetilde\mathcalM\) is a good approximation for any \(\mathcalM\) within the restricted prior, \(p_\widetilde\mathcalM(\mathcalM)\), and we can thus use \(\widetilde\mathcalM\) for heterodyning. The resulting model, \(q(\boldsymbol\theta | \bfd_\widetilde\mathcalM,\widetilde\mathcalM)\), can then perform inference with event-optimized heterodyning and priors (via the choice of appropriate \(\widetilde\mathcalM\)), but is nevertheless applicable to the entire range of the hyperprior.

Inference results are independent of \(\widetilde\mathcalM\) as long as the posterior, p(\(\mathcalM\)|d), is fully covered by [\(\widetilde\mathcalM\) − ∆\(\mathcalM\), \(\widetilde\mathcalM\) + ∆\(\mathcalM\)]. For BNS, p(\(\mathcalM\)|d) is typically tightly constrained and we can use a coarse estimate of \(\mathcalM\) for \(\widetilde\mathcalM\). This can either be taken from a GW search pipeline or rapidly computed from \(q(\boldsymbol\theta | \bfd_\widetilde\mathcalM,\widetilde\mathcalM)\) itself by sweeping the hyperprior (see below). Note that, for shorter GW signals from black hole mergers, p(\(\mathcalM\)|d) is generally less well constrained. The transfer of prior conditioning would thus require larger (and potentially flexible) values of ∆\(\mathcalM\). Alternatively, the prior range can be extended at inference time by iterative Gibbs sampling of \(\mathcalM\) and \(\widetilde\mathcalM\), similar to the GNPE algorithm19,45.

Prior conditioning is a general SBI technique that enables a choice of prior at inference time. This can also be achieved with sequential NPE40,41,42,48. However, in contrast to prior conditioning, these techniques require simulations and retraining for each observation, resulting in more expensive and slower inference. We here use prior conditioning with priors of fixed width for the chirp mass, and optional additional conditioning on fixed values for other parameters (corresponding to Dirac delta priors). Extension to more complicated priors and hyperpriors is straightforward.

Independent estimation of chirp mass and merger times

Running DINGO-BNS requires an initial estimate of the chirp mass, \(\mathcalM\) (to determine \(\widetilde\mathcalM\) for the network), and the merger time, tc (to trigger the analysis). Matched filter searches can identify the presence of a compact binary signal and its chirp mass and merger time in low latency14,15,49,50,51. Specialized early warning searches are designed to produce output before the coalescence can further provide a rough indication of sky position and distance52,53,54. When available, the output of such pipelines can be used to trigger a DINGO analysis and provide estimates for \(\mathcalM\) and tc.

We here describe an alternative independent approach of obtaining these parameters, using only the trained DINGO-BNS model. We compute \(\widetilde\mathcalM\) by sweeping the entire hyperprior, \(\hatp(\widetilde\mathcalM)=U_m_1,m_2(\widetilde\mathcalM_\min ,\widetilde\mathcalM_\max )\). Specifically, we run DINGO-BNS with a set of prior centres,

$$\mathop\mathcalM\limits^ \sim _i=\mathop\mathcalM\limits^ \sim _min+i\Delta \mathcalM,$$

(3)

where i takes integer values between 0 and (\(\widetilde\mathcalM\)max − \(\widetilde\mathcalM\)min)/∆\(\mathcalM\). The inference models in this study were trained with hyperprior ranges of up to [1.0,2.2] M⊙. For ∆\(\mathcalM\) = 0.005 M⊙, we can thus cover the entire global chirp mass range using 241 (overlapping) local priors. We run DINGO-BNS for all local priors, \(\widetilde\mathcalM_i\), in parallel, with 10 samples per \(\widetilde\mathcalM_i\). This requires a DINGO-BNS inference of only a few thousand samples, which takes less than 1 s. We use the chirp mass, \(\mathcalM\), of the maximum likelihood sample as the prior centre, \(\widetilde\mathcalM\), for the analysis (Extended Data Fig. 1a). Note that the exact choice of \(\widetilde\mathcalM\) does not matter, as long as the inferred posterior is fully covered by [\(\widetilde\mathcalM\) − ∆\(\mathcalM\), \(\widetilde\mathcalM\) + ∆\(\mathcalM\)] (Extended Data Fig. 1b).

The merger time, tc, can be inferred by continuously running this \(\widetilde\mathcalM\) scan on the input data stream, sliding the tc prior in real time over the incoming data. With inference times of 1 s, continuous analysis can be achieved on just a few parallel computational nodes (or even a single node when also parallelizing over the tc grid), constantly running on the input data stream. Event candidates can then be identified by analysing the SNR, triggering upon exceeding some defined threshold (Extended Data Fig. 1c). This scan can be performed at an arbitrary (but fixed) time before the merger.

This scan successfully estimates \(\mathcalM\) and tc for both real BNS events (Extended Data Fig. 1). However, we have not tested this at a large scale on detector noise to compute false alarm rates because DINGO-BNS is primarily intended for parameter estimation. Existing search and early warning pipelines are probably more robust for event identification, particularly in the presence of non-stationary detector noise.

Frequency multibanding

Although the native resolution of a frequency series is determined by the duration, T, of the corresponding time series, (∆f = 1/T), we can average adjacent frequency bins wherever the signal is roughly constant. This enables data compression with only negligible loss of information. Here we use frequency multibanding, which divides the frequency range, [fmin, fmax], into N bands of decreasing resolution. Frequency band i covers the range \([\,\hatf_i\,,\,\hatf_i+1\,]\) with ∆fi = 2i∆f0, where \(\hatf_\,=\,f_\min \), \(\hatf_N\,=\,f_\max \) and ∆f0 is the native resolution of the frequency series. Within band i, the multibanded domain thus compresses the data by a factor of 2i (Extended Data Fig. 2), which is achieved by averaging 2i sequential bins from the original frequency series (called decimation). To achieve optimal compression, we empirically choose the smallest possible nodes, \(\hatf_i\), for which GW signals are still fully resolved. Specifically, we simulate a set of 103 heterodyned GW signals and demand that every period of these signals is covered by at least 32 bins in the resulting multibanded frequency domain. This is done before generating the training dataset, and the multibanded domain then remains fixed during dataset generation and training. The optimized resolution achieves compression factors between 60 and 650 (Extended Data Fig. 2c).

Traditionally, multibanding has been used without additional heterodyning—an approach we could also apply to DINGO-BNS. However, this would lead to lower compression factors, ranging from 14 to 56. More importantly, using multibanding alone would result in substantially more complicated input data to the DINGO-BNS network. Indeed, heterodyning enables additional truncation of the waveform singular value decomposition used in initializing the first network layer19. For LVK data, this results in a reduction from roughly 1,800 to 200 basis elements, thereby simplifying the learning task.

Care needs to be taken that the approximations are valid in the presence of detector noise. We now investigate how multibanding affects data simulation (for training) and the likelihood (for importance sampling).

Data simulation

The GW data are simulated as the sum of a signal and detector noise, d = h(θ) + n. The detector noise in frequency bin j is given by

$$n_j\sim \mathcalN(0,\sigma \sqrtS_j),\sigma =\sqrt\fracw4\Delta f,$$

(4)

where S denotes the detector noise PSD, and σ takes into account the frequency resolution and the Tukey window factor, w. Note that n is a complex frequency series, which we ignore in our notation, as the considerations here hold for real and imaginary parts individually. It is conventional to work with whitened data,

$$d_j^\textw=h_j^\textw(\boldsymbol\theta )+n_j^\textw=\frach_j(\boldsymbol\theta )+n_j\sqrtS_j,$$

(5)

in which case \(n_j^\textw\sim \mathcalN(0,\sigma )\).

We convert to the multibanded frequency domain by averaging sets of Ni = 2i bins,

$$\bard_j^\textw=\frac1N_i\mathop\sum \limits_k=m_j^m_j+N_i-1(h_k^\textw+n_k^\textw)=\barh_j^\textw+\barn_j^\textw,$$

(6)

where j denotes the bin in the multibanded domain, mj denotes the starting index of the decimation window for j in the native domain and i indexes the frequency band associated with j. Because \(\barn_j^\textw\) is an average of Ni Gaussian random variables with standard deviation σ, it follows that \(n_j^\textw\) is also Gaussian with standard deviation,

$$\sigma _i=\sigma \,/\,\sqrtN_i=\sqrt\fracw4\Delta fN_i=\sqrt\fracw4\Delta f_i.$$

(7)

We can thus simulate the detector noise directly in the multibanded domain by updating σ → σi, corresponding to ∆f → ∆fi. For the whitened signal we find

$$\overlineh_j^\textw=\frac1N_i\mathop\sum \limits_k=m_j^m_j+N_i-1\frach_k\sqrtS_k\approx \barh_j\mathop\sum \limits_k=m_j^m_j+N_i-1\frac1\sqrtS_k,$$

(8)

assuming an approximately constant signal, h, within the decimation window, \(\barh_j\) ≈ hk,∀k ∈ [mj,mj + Ni − 1]. For frequency-domain waveform models, we can thus directly compute the signal \(\barh_j\) in the multibanded domain by simply evaluating the model at frequencies \(\barf_j\).

In summary, we can directly generate BNS data in the multibanded frequency domain by: (1) updating the noise standard deviation according to the multibanded resolution; (2) appropriately decimating noise PSDs; and (3) computating signals and noise realizations in the compressed domain. These operations are carefully designed to be consistent with the data processing of real BNS observations, which for DINGO-BNS are first whitened in the native domain and then decimated to the multibanded domain. This process relies on the assumption that signals are constant within decimation windows, and we ensure that this is (approximately) fulfilled when determining the multibanded resolution. Indeed, for signals generated directly in the multibanded domain, we find mismatches of at most around 10−7 when comparing to signals that are properly decimated from the native domain.

Likelihood evaluations

We also use frequency multibanding to evaluate the likelihood for importance sampling. The standard Whittle likelihood used in GW astronomy26 reads:

$$\log p(\bfd| \boldsymbol\theta )=-\frac12\sum _k\frac^2\sigma ^2,$$

(9)

up to a normalization constant. The sum extends over all bins, k, in the native frequency domain. Assuming a constant signal (as above) and PSD within each decimation window, we can directly compute the likelihood in the multibanded domain,

$$\log \,p(\bfd| \boldsymbol\theta )\approx -\frac12\sum _j\frac{^2}\sigma _i(j)^2.$$

(10)

The assumptions are not exactly fulfilled in practice—for additional corrections, see ref. 29. For importance sampling, we can always evaluate the exact likelihood in the native frequency domain instead. In this case, the result is no longer subject to any approximations, even if the DINGO-BNS proposal is generated with a network using multibanded data. With the full likelihood for GW170817, we found a sample efficiency of 11.0% with an inference time of 13 s for 50,000 samples. The deviation from the result obtained with the multibanded likelihood is negligible (Jensen–Shannon divergence of less than 5 × 10−4 nat for all parameters). This demonstrates that use of the multibanded resolution has no practically relevant impact on the results.

Frequency masking

Because the GW likelihood (and our framework) uses the frequency domain, but data are taken in the time domain, it is necessary to convert data by windowing and Fourier transforming. However, frequency domain waveform models assume infinite time duration, leading to inconsistencies with finite time segments, [tmin, tmax]. Because the frequency evolution of the inspiral is tightly constrained by the chirp mass, \(\mathcalM\), we can compute boundaries, fmin(tmin, \(\mathcalM\)) and fmax(tmax, \(\mathcalM\)), such that the signals are not corrupted by the finite-duration effects within [fmin, fmax] and are negligibly small outside of that range (Extended Data Fig. 3).

We approximate the lower bound, fmin(tmin, \(\mathcalM\)), using the leading order in the post-Newtonian relationship between time and frequency,

$$f_0\textPN(t,\mathcalM)=\frac18\rm\pi \left(\frac-t5\right)^-3/8\left(\fracG\mathcalMc^3\right)^-5/8.$$

(11)

For a network designed for fixed data duration, T, we set fmin(T, \(\mathcalM\)) = f0PN(−T, \(\mathcalM\)) + fbuffer (we use fbuffer = 1 Hz for LVK and fbuffer = 0.5 Hz for next-generation detector setups).

For the upper bound, we found that f0PN(t, \(\mathcalM\)) is not sufficiently accurate. Instead, we determined fmax(t, \(\mathcalM\)) empirically by simulating a set of signals (with parameters θ ~ p(θ)) and computing mismatches between signals with and without truncation at t > tmax. For a given set of simulations, we choose fmax(t, \(\mathcalM\)) as the highest frequency at which all mismatches are at the most 10−3. To avoid additional computation at inference time, we cache the results in a lookup table for fmax(t, \(\mathcalM\)). The lookup table used here was generated with 20 waveforms per element. We verified for random elements that this matches the result obtained using 1,000 waveforms with an accuracy of approximately 0.1 Hz. For production use, the lookup table may need to be generated with more waveforms.

Both bounds depend on the chirp mass, \(\mathcalM\), with the upper bound additionally depending on the pre-merger time. To enable inference for arbitrary configurations, we trained a single network with variable frequency bounds. During training, we computed fmin(T, \(\widetilde\mathcalM\)) with the centre \(\widetilde\mathcalM\) of the local chirp mass prior. The upper frequency bound, fmax, is sampled randomly (uniform in frequency bins of the multibanded frequency domain) to allow arbitrary pre-merger times. Data outside of [fmin, fmax] are zero-masked.

Such masked networks can perform pre-merger inference. Given an alert (for example, from a detection pipeline) predicting a merger at time \(\widetildet_\rmc\) (in the future) with chirp mass \(\widetilde\mathcalM\), DINGO-BNS uses the frequency range [fmin(T, \(\widetilde\mathcalM\)), fmax(−\(\widetildet_\rmc\), \(\widetilde\mathcalM\))]. The frequency domain data are further shifted by \(\widetildet_\rmc\), such that the prior p(tc) is centred around \(\widetildet_\rmc\). The resulting pre-merger posterior can then be used to update the alert predictions (\(\widetilde\mathcalM\), \(\widetildet_\rmc\)) (for example, as the centre of the inferred posterior marginals) and to trigger a new DINGO-BNS analysis with the new strain data that became available in the meantime. Such iterative posterior updates allow continuous optimization of the prior centres (\(\widetilde\mathcalM\), \(\widetildet_\rmc\)). As a result, the tc prior for the after-merger analysis (Extended Data Table 2) does not need to account for large trigger uncertainties and only needs to be large enough to capture expected posteriors, p(tc|d).

In the absence of external alerts, the independent DINGO-BNS search described earlier (Extended Data Fig. 1) can also be run as a pre-merger scan, searching for mergers around a freely chosen (but fixed) time, \(\widetildet_\rmc\), in the future. By continuously running this scan over the incoming data stream, alerts are triggered at roughly time \(\widetildet_\rmc\) before potential BNS mergers. Note that, in the absence of chirp mass predictions, we need to apply a conservative frequency range, [fmin(T, \(\mathcalM\)max),fmax(−\(\widetildet\)c, \(\mathcalM\)min)], where \(\mathcalM\)min/max are the prior bounds for \(\mathcalM\).

EOS likelihood

A nuclear EOS implies a functional relationship between neutron star masses, mi, and tidal deformabilities, λi. The likelihood, p(d|\(\mathcalE\)), for a given EOS, \(\mathcalE\), and data, d, can be computed by integrating the GW likelihood along the hyperplane defined by the EOS constraint, \(\lambda _i=\lambda _i^\mathcalE(m_i)\),

$$\beginarraylp(\bfd| \mathcalE)=\int p(\bfd| \boldsymbol\theta )p(\boldsymbol\theta )\delta (\lambda _i-\lambda _i^\mathcalE(m_i))\rmd\boldsymbol\theta \\ \,=\int \int p(\bfd| m_i,m_2,\lambda _i^\mathcalE(m_1),\lambda _2^\mathcalE(m_2))\rmdm_1\rmdm_2\endarray$$

(12)

Here p(d|m1,m2,λ1,λ2) is the Bayesian evidence of d conditional on (m1, m2, λ1, λ2). To calculate equation (12) using Monte Carlo integration, it is necessary to repeatedly evaluate the integrand, which is extremely expensive using traditional methods (for example, nested sampling).

With DINGO-BNS, there are two fast ways to evaluate the integrand, using either a conditional or a marginal network: (1) a marginal network, q(m1,m2,λ1,λ2|d), directly provides an unnormalized estimate of the conditional evidence, p(d|m1,m2,λ1,λ2) (sufficient for model comparison, but not subject to our usual accuracy guarantees); or (2) a conditional network, q(θ|d;m1,m2,λ1,λ2), provides the normalized conditional evidence via importance sampling (including accuracy guarantees). Option (1) allows 105 evaluations per second, whereas option (2) only allows 103, assuming 102 weighted samples per evaluation.

By combining (1) and (2), we can achieve speed and accuracy, using the marginal network (1) to define a proposal distribution for Monte Carlo integration with the integrand from (2). Specifically, the density of the marginal network, q(m1,m2,λ1,λ2|d), is evaluated on an (m1,m2) grid with \(\lambda _i=\lambda _i^\mathcalE(m_i)\). This provides a discretized estimate of the integrand, p(d|m1, m2, \(\lambda _1^\mathcalE\)(m1), \(\lambda _1^\mathcalE\)(m2)), which we use as a proposal distribution for the integration in equation (12) when computing the integrand with the more accurate method (2). We tested this on GW170817 data using two polynomial EOS constraints, \(\lambda =\lambda ^\mathcalE(m)\) (Extended Data Fig. 4), finding good sample efficiencies of around 50%, small uncertainties, \(\sigma _\log p(\bfd\approx 0.01\), and computation times of 1−3 s for the integral equation (12). Alternatively, the proposal could also be generated using a network, q(m1,m2|d), which additionally marginalizes over λi. Finally, for a parametric EOS, a DINGO-BNS network could be conditioned on EOS parameters, allowing for direct EOS inference. This variety of approaches emphasizes the flexibility of SBI for EOS inference.

Related work

Machine learning for GW astronomy is an active area of research55. Several studies have explored machine-learning inference for black hole mergers19,20,56,57,58,59,60,61,62,63,64,65. There have also been applications specific to BNS inference (Extended Data Table 1). The GW-SkyLocator algorithm66 estimates the sky position using the SNR time series (similar to BAYESTAR), whereas Jim33,35 uses hardware acceleration and machine learning to speed up conventional samplers and achieve full inference in 21–33 min. The i-nessai framework39 achieves BNS inference in 24 min by combining normalizing flows using importance nested sampling. Ref. 67 explores pre-merger BNS detection and parameter estimation with normalizing flows, also reporting 1-s analysis times. However, it has not demonstrated accurate results on real data, and is subject to several other limitations. SBI has also been used for neutron star EOS inference from GWs68 and electromagnetic data69. Pre-merger localization with conventional techniques has been explored for ground-based third-generation detectors70,71,72 and for space-based detectors73,74.

Experimental details

For our experiments, we trained DINGO-BNS networks using the hyperparameters and neural architecture44,75 from ref. 19, with a few modifications. The embedding network consisted of a sequence of 34 two-layer, fully-connected residual blocks with hidden dimensions of 2,048 (×8), 1,024 (×8), 512 (×6), 256 (×6) and 128 (×6) after the initial projection layer. Compared with ref. 19, this added ten new blocks, increasing the number of trainable parameters in this part of the embedding network from 17 million to 91 million. For the LVK experiments, we used a dataset with 3 × 107 training samples and trained it for 200 epochs. For the Cosmic Explorer experiments, we used 6 × 107 training samples and trained it for 100 epochs. Training took between 5 and 7 days on one H100 GPU. We used three detectors for LVK (LIGO-Hanford, LIGO-Livingston and Virgo) and two detectors for Cosmic Explorer (primary detector at the location of LIGO-Hanford, secondary detector at the location of LIGO-Livingston). The networks were trained with the priors displayed in Extended Data Table 2. The DINGO-BNS network marginalized over the phase of coalescence, ϕc. During importance sampling, we reconstructed ϕc (ref. 20) (Extended Data Figs. 6a and 8) or used a phase-marginalized likelihood76,77 (in all other experiments). The phase reconstruction used here made the same assumptions as conventional phase marginalization76,77.

In the first experiment, we evaluated DINGO-BNS models on 200 simulated GW datasets, generated using a fixed GW signal with GW170817-like parameters and simulated LVK detector noise. We used noise PSDs from the second (O2) and third (O3) LVK, observing runs as well as LVK design sensitivity. For each noise level, we trained one pre-merger network (f ∈ [23,200] Hz) and one network for inference with the full signal, including the merger (f ∈ [23,1024]). The latter network was only used for after-merger inference because we found that separation into two networks improved the performance. The pre-merger network was trained with frequency masking, the masking bound, fmax, being sampled in the range [28,200] Hz, enabling inference up to 60 s before the merger.

In the second experiment, we analysed 104 simulated GW datasets, with GW signal parameters randomly sampled from the prior (Extended Data Table 2), the \(\mathcalM\) prior reduced to the range [1.0,1.5] M⊙ and the dL prior reweighted to a uniform distribution in the comoving volume, with design sensitivity noise PSDs. We again trained one pre-merger network (f ∈ [19.4,200] Hz) and one after-merger network (f ∈ [19.4, 1,024] Hz). The pre-merger network was trained with frequency masking with the masking bound, fmax, sampled in the range [25,200] Hz, enabling inference up to 60 s before the merger for \(\mathcalM\) ≤ 1.5 M⊙. Both networks were additionally trained with lower-frequency masking, with fmin(\(\widetilde\mathcalM\)) determined as explained above, ensuring an optimal frequency range for any chirp mass. Following ref. 17, we only considered events with SNR ≥ 12. Before each analysis, we performed a tc scan by generating 2,500 samples for four time-shifted copies of the strain, followed by joint importance sampling of the combined results. Specifically, for a network with a tc prior U(−\(\tau \), \(\tau \)), we applied the time shifts (−3\(\tau \), −\(\tau \), \(\tau \), 3\(\tau \)), effectively increasing the prior to U(−4\(\tau \), 4\(\tau \)). For the subsequent analysis, we time shifted the data such that the tc posterior was fully covered by the prior.

For each DINGO-BNS result, we generated a skymap using a kernel density estimator implemented by ligo.skymap78. For the sky localization comparison between DINGO-BNS and BAYESTAR, we ran BAYESTAR based on the GW signal template generated with the maximum likelihood parameters from the DINGO-BNS analysis. We noted that BAYESTAR was designed as a low-latency pipeline and typically run with (coarser) parameter estimates from search templates. Therefore, the reported BAYESTAR runs may deviate slightly from the realistic LVK setup. However, our results are consistent with those of ref. 17, which also had an approximately 30% precision improvement over BAYESTAR localization (using LVK search triggers). Both DINGO-BNS and ref. 17 performed full Bayesian BNS inference and should therefore have had identical localization improvements over BAYESTAR (assuming ideal accuracy, which for DINGO-BNS was validated with consistently high importance sampling efficiency). Differences to the localization comparison in ref. 17 are thus primarily attributed to different configurations for BAYESTAR and slightly different injection priors. Additional results for the localization comparison are shown in Extended Data Fig. 5. A probability–probability (P–P) plot for the after-merger analysis is shown in Extended Data Fig. 6a, which shows no significant bias for any parameter.

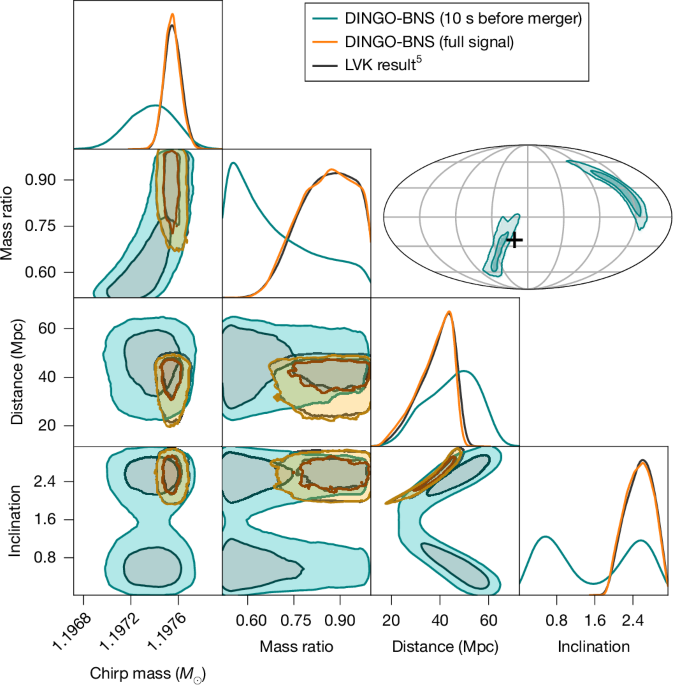

In the third experiment, we reproduced the public LVK results for GW170817 (refs. 1,5) and GW190425 (ref. 79) with DINGO-BNS. We used the same priors and data settings as the LVK, but we did not marginalize over calibration uncertainty. The GW170817 after-merger analysis (see also Extended Data Fig. 7) was performed with a DINGO-BNS model conditioned on the sky position, α,β. Following the LVK analysis5, we used the localization α = 3.44616 rad and δ = −0.408084 rad from the electromagnetic counterpart AT 2017gfo (ref. 8) at inference. Note that the localization uncertainty (σα = 10−6 rad, σδ = 10−6 rad (ref. 8)) was negligible for GW parameter estimation, but such effects could, in principle, be integrated by convolving the conditional DINGO-BNS network with a distribution over α,β. We found good sample efficiencies for both events (10.8% for GW170817 and 51.3% for GW190425) and good agreement with the LVK results (Extended Data Fig. 6b). The LVK results used detector noise PSDs generated with BayesWave36, which were not available before the merger. For our pre-merger analysis of GW170817 in the main part (sample efficiency 78.9%), we thus used a PSD generated using the Welch method. The GW170817 signal overlapped with a loud glitch in the LIGO-Livingston detector1, and we used the glitch-subtracted data provided by the LVK in our analyses. Because such data would not be available before the merger, the pre-merger inference of BNS events overlapping with glitches would, in practice, also require fast glitch mitigation methods.

In the fourth experiment, we analysed simulated Cosmic Explorer data using the anticipated noise PSDs for the primary and secondary detectors. We trained a DINGO-BNS network for pre-merger inference using f ∈ [6,11] Hz, with the upper frequency masking bound, fmax, sampled in the range [7,11] Hz. This supported a signal length of 4,096 s, with a pre-merger inference between 45 and 15 min before the merger. We injected signals with GW170817-like parameters for distance, masses and inclination to investigate how well a GW170817-like event could be localized in the Cosmic Explorer detector. We also trained a network on the full frequency range, [6, 1,024] Hz, for after-merger inference, with a reduced distance prior to control the SNR (Extended Data Table 2).

Sample efficiencies

We report sample efficiencies for all injection studies in Extended Data Fig. 6. The importance-sampled DINGO-BNS results are accurate, even with low efficiency, provided that a sufficient absolute number of effective samples can be generated. The efficiency nevertheless is a valuable diagnostic for assessing the performance of the trained inference networks.

In the LVK experiments, we found consistently high efficiencies, comparable to or greater than those reported for BBHs20. As a general trend, we observed that higher noise levels (Extended Data Fig. 6c) and earlier pre-merger times (Extended Data Fig. 6d) led to higher efficiencies. This is because low SNR events generally have broader posteriors, which are simpler to model for DINGO-BNS density estimators. Furthermore, the GW signal morphology is most complicated around the merger, making pre-merger inference much simpler than inference based on the full signal.

For Cosmic Explorer injections with GW170817-like parameters (Extended Data Fig. 6e), DINGO-BNS achieved extremely high efficiency for early pre-merger analyses, but the performance decreased substantially for later analysis times. This effect can again be attributed to the increase in SNR, which was O(103) 15 min before the merger. Improving DINGO-BNS for such high SNR events will probably require improved density estimators64 that can better deal with tighter posteriors. When limiting the SNR by increasing the distance prior (Extended Data Table 2), we found good sample efficiencies for an after-merger Cosmic Explorer analysis that used the full 4,096-s-long signal (Extended Data Fig. 6e).

Inference times

The computational cost of inference with DINGO-BNS is dominated by: (1) neural-network forward passes to sample from the approximate posterior, \(\boldsymbol\theta \sim q(\boldsymbol\theta | \bfd_\widetilde\mathcalM,\widetilde\mathcalM)\); and (2) likelihood evaluations, p(θ|d), used for importance sampling. For 50,000 samples on an H100 GPU, (1) takes around 0.370 s and (2) takes around 0.190 s, resulting in an inference time of less than 0.6 s. The speed of the likelihood evaluations is enabled by using JAX waveform and likelihood implementations33,34,35, combined with the heterodyning and multibanding step that we also used to compress the data for the DINGO-BNS network. We extend the open-source implementations34,35 by combining NRTidalv1 (refs. 32,80) with IMRPhenomPv2 (refs. 81,82,83), as well as re-implementing the DINGO likelihood functions in JAX. The JAX functions are usually just-in-time compiled to run efficiently. The input dimension of the likelihood (determined by likelihood batch size and number of frequency bins) is fixed, enabling compilation ahead of time on random data of the correct input dimension. After compilation, a running DINGO-BNS script can perform any number of analyses without recompiling. Thus, we can leave the compilation time (18 s) out of the timing estimate for importance sampling. Compilation could further be transferred between separate DINGO-BNS runs using the persistent compilation cache in JAX, although we have not implemented this option. Likelihood evaluations can also be done without JAX, which takes less than 10 s on a single node with 64 CPUs for 50,000 samples. For the vast majority of DINGO-BNS analyses in this study, the sample efficiency was sufficiently high such that 50,000 samples corresponded to several thousands of effective samples after importance sampling, enabling full importance sampling inference in less than 1 s.

Additional sources of latency

The inference times quoted above assume that the data have already been provided to DINGO-BNS. In practice, there are various additional sources of latency.

First, PSD estimation typically uses strain data taken during or after the merger. Obtaining low-latency PSDs represents a general challenge for low-latency analyses, encountered also in the field of GW searches14,53. Indeed, most PSDs used in this work would not be available in very low latency—the GW170817 and GW190425 PSDs from the LVK analyses5,79 use on-source noise estimation36 based on data during the merger, whereas LVK design sensitivity and Cosmic Explorer PSDs are not based on real detector noise, but rather reflect anticipated future configurations. These PSDs were chosen for comparability with existing studies. To test DINGO-BNS in a more realistic low-latency setting, we performed inference with PSDs estimated using Welch’s method84 and only data from before the merger (Extended Data Fig. 8). The resulting posterior for GW170817 only deviated slightly from the posterior obtained from the on-source PSDs. However, we note that, in general, such pre-merger Welch PSDs may be less reliable in the presence of non-stationary detector noise, which could lead to larger biases for parameter estimation.

Strain data can also be contaminated with instrumental or environmental glitches, which need to be removed for parameter estimation85. This adds additional latency for events that overlap with glitches, as noted above in the case of GW170817. Finally, data transfer between detectors and computing facilities add some additional latency. Using DINGO-BNS to its full potential will therefore require careful integration with low-latency pipelines23 and further acceleration of existing components.

PSD tuning

Although most of the networks used in this study were trained with only a single PSD per detector, in practice we would generally train DINGO-BNS with an entire distribution of PSDs to enable instant tuning to drifting detector noise19. (This is not relevant to tests involving, for example, design sensitivity noise.) Of the experiments in this study, only the pre-merger result of GW170817 and the result for the Welch PSD (Extended Data Fig. 8) were generated with a PSD-conditioned DINGO-BNS network. This network was trained with a PSD distribution covering the entire second LVK observing run (O2). Conditioning on the PSD makes the inference task more complicated and therefore leads to slightly reduced performance. For example, when repeating the first injection experiment (Extended Data Fig. 6c) with the PSD-conditioned DINGO-BNS network from above, the mean efficiency was reduced from 71% to 22%. Such networks can, in principle, also be trained before the start of an observing run, by training with a synthetic dataset designed to reflect the expected noise PSDs86.