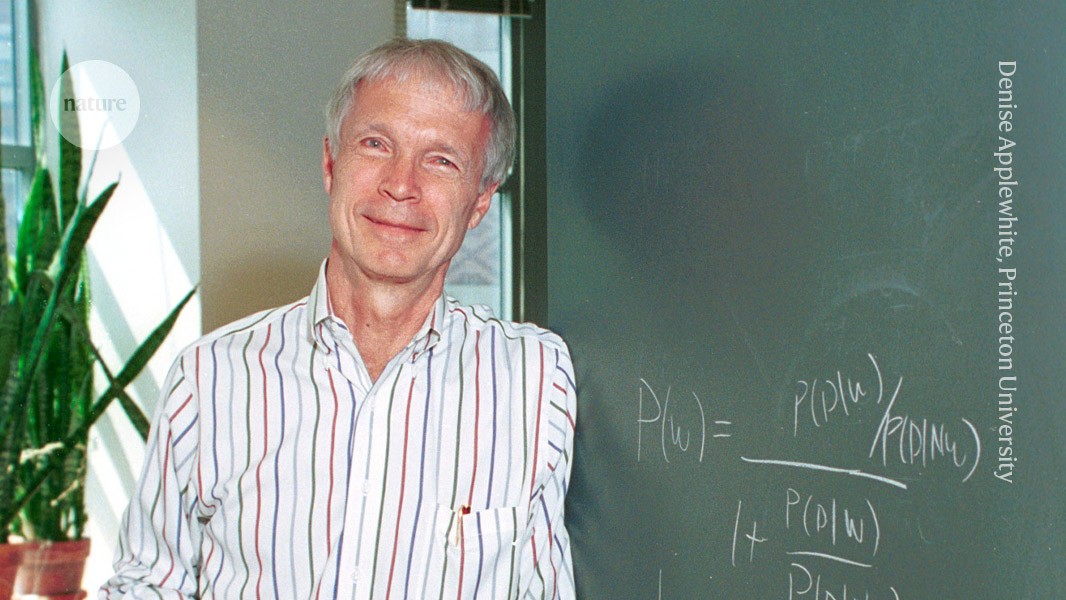

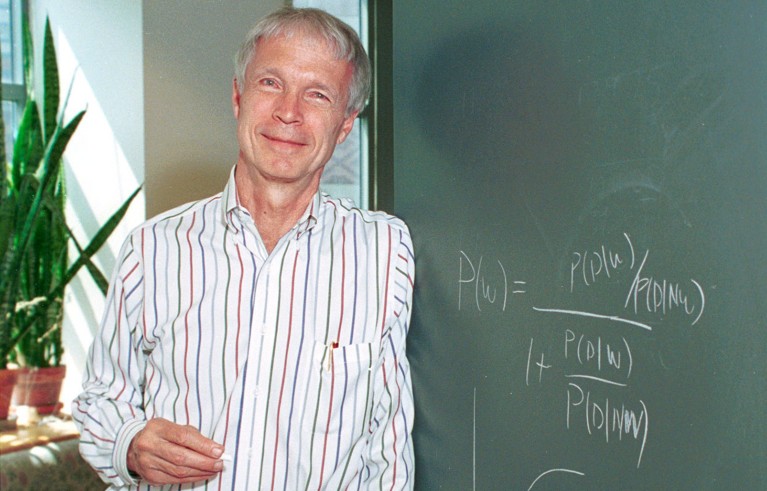

John Hopfield started his career in physics and moved to study problems in chemistry and biology. Credit: Denise Applewhite, Princeton University

John Hopfield, one of this year’s winners of the Nobel Prize in Physics, is a true polymath. His career began with probing the physics of solids during the field’s heyday in the 1950s; he then moved to the chemistry of haemoglobin in the late 1960s and to DNA synthesis in the decade that followed.

In 1982, he devised a brain-like network in which neurons, which he modelled as interacting particles, formed a kind of memory. The ‘Hopfield network’, for which he was awarded the Nobel Prize, is now widely seen as a building block of machine learning, which underpins modern artificial intelligence (AI). Hopfield shared the award with AI pioneer Geoffrey Hinton at the University of Toronto in Canada.

Now 91 years old, Hopfield, an emeritus professor at Princeton University in New Jersey, spoke to Nature about whether his prizewinning work was really physics and why we should worry about AI.

Some have argued that your prizewinning work was not really physics, but computer science. What do you think?

My definition of physics is that physics is not what you’re working on, but how you’re working on it. If you have the attitude of someone who comes from physics, it’s a physics problem. Having a father and mother who were both physicists warped my view of what physics was. Anything in the world that interested you was interesting because you understood the physics of how such a thing was put together. I grew up with puzzles and I wanted to figure them out.

In 1981, I gave a talk at a meeting and Terry Sejnowski, who had been my research student in physics, was sitting next to Geoff Hinton. [Sejnowski now runs a computational neurobiology group at the Salk Institute in La Jolla, California.] It was clear that Geoff knew how to get a system of that sort — the mechanics that I do — to express computer science. They got talking and eventually wrote their first paper together. Terry recalled this one day, and it was the story of how things came from physics into computer science.

You began your career in physics. How did you move into biology?

Solid-state physics was the backbone of new technologies at the time. But it was getting harder and harder to find a good problem, one that I was capable of solving and interested in solving. Bob Shulman, a friend of mine at Bell Laboratories, where I was working at the time, had recently moved from chemistry into biology, and he started to talk about the fact that it was becoming possible to study biological molecules in detail. I had the idea that maybe the time had come to apply the way we studied solid-state systems to big molecules.

What do you think your physics approach brought to biology?

What I tried to do was to accumulate understanding of smaller systems and then try to see whether I could use that to understand larger systems. Maybe you can get from physics at one end to biology at the other? There were problems that I could visualize the conclusion to, because of my understanding of a physical system which was abstractly related to it.

At the end of the 1970s, you turned to neuroscience and efforts to simulate the brain using artificial neurons. How did the Hopfield network come about?

I began to write down simple equations that described how the activity of a nervous system would change with time, owing to the interactions the system had with itself and with the external world. You can write the same kind of equations for interacting spin systems in magnetism. That’s really what launched me: trying to make the equations of motion in one field match those in another.
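The spin analogy can be illustrated with the standard textbook binary Hopfield network. What follows is a minimal sketch under stated assumptions, not Hopfield’s own formulation: units take the values +1 or -1 (like up and down spins), memories are stored with Hebbian outer-product weights, and units are updated one at a time. The function names `store`, `local_field`, `energy` and `recall` are hypothetical. Each update aligns a unit with the total input from the other units, much as a spin aligns with its local magnetic field; with symmetric weights this never raises an Ising-like energy, so a corrupted pattern can relax back into a stored memory.

```python
import random


def store(patterns):
    """Hebbian (outer-product) weights for a list of +1/-1 patterns of equal length."""
    n = len(patterns[0])
    weights = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections; weights stay symmetric
                    weights[i][j] += p[i] * p[j] / n
    return weights


def local_field(weights, state, i):
    """Total input to unit i from the other units, like the field felt by one spin."""
    return sum(w * s for w, s in zip(weights[i], state))


def energy(weights, state):
    """Ising-like energy E = -1/2 * sum_ij W_ij s_i s_j; asynchronous updates never raise it."""
    return -0.5 * sum(s_i * local_field(weights, state, i) for i, s_i in enumerate(state))


def recall(weights, state, max_sweeps=10, seed=0):
    """Update units one at a time, in random order, until no unit changes."""
    rng = random.Random(seed)
    state = list(state)
    order = list(range(len(state)))
    for _ in range(max_sweeps):
        rng.shuffle(order)
        changed = False
        for i in order:
            # Each unit takes the sign of its local field (ties go to +1).
            new_value = 1 if local_field(weights, state, i) >= 0 else -1
            if new_value != state[i]:
                state[i] = new_value
                changed = True
        if not changed:
            break
    return state


if __name__ == "__main__":
    memories = [[1, -1, 1, -1, 1, -1, 1, -1],
                [1, 1, 1, 1, -1, -1, -1, -1]]
    W = store(memories)
    noisy = list(memories[0])
    noisy[0] = -noisy[0]  # corrupt one unit of the first memory
    recalled = recall(W, noisy)
    print(recalled == memories[0])  # True
    print(energy(W, noisy), energy(W, recalled))  # -1.5 -3.0
```

Here a memory with one unit flipped relaxes back to the stored pattern as its energy falls from -1.5 to -3.0. Discrete two-state units were chosen for brevity; continuous-time versions of such equations of motion are not shown.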

Hinton is vocal in his fears about the potential harm of AI. Do you worry?

I do worry about it. [Think about] nuclear technology, which enabled people to make arbitrarily large bombs, and could also be very useful. People started worrying as soon as they understood what a chain reaction was. Fast-forward to 1970, folks in biology were very concerned about genetic engineering. If you engineer a virus in the right fashion, you could come close to wiping out populations. It’s essentially a chain reaction. It wouldn’t astonish me if you could make this kind of hazard in AI — making programs in such a way that they were self-reproducing.

The world doesn’t need unlimited speed in developing AI. Until we understand more about the limitations of the systems you can make — where you stand on this hazard ladder — I worry.

What’s your advice for today’s PhD students?

Where two fields are driven apart, see if there is anything interesting in the crack between them. I’ve always found the interfaces interesting because they contain interesting people with different motivations, and listening to them bicker is quite instructive. It tells you what they really value and how they’re trying to solve a problem. If they don’t have the tools to solve the problem, there may be space for me.

Are you still an active researcher?

I don’t teach. I have one collaborator, Dmitry Krotov [at the MIT–IBM Watson AI Lab in Cambridge, Massachusetts], who comes from theoretical physics and I have fun talking to him. I never do any of the mathematics these days. But I certainly enjoy interacting with people who are trying to ask and answer significant questions. It’s fun to be reminded of the breadth of problems people are working on. When I taught, it always involved young people, different views, and that’s how you stay young.

This interview has been edited for length and clarity.