Experimental model and mouse details

All techniques were approved by the Institutional Animal Care and Use Committee at Stanford University School of Medicine. A total of 19 female and 3 male C57BL/6 mice were used for this paper. Sample size was determined by what is conventionally required. No blinding or randomization was performed. Acute electrophysiological recordings were conducted on 15 female C57BL/6 mice 12â24 weeks of age at the time of the first surgery (headbar implantation). See Supplementary Table 1 for a breakdown of which mice were used in which experiments. Mice were housed with littermates on a 12-h lightâdark cycle and had ad libitum access to food and water before the headbar implantation surgery. Mice were housed in an animal facility with a temperature range of 20â26â°C. Humidity ranged between 30% and 70%. After the craniotomy surgery, mice were housed singly to prevent disturbance of the surgical site. The remaining four female mice were used for fluorescent muscimol histology only, and three male mice were used for chronic electrophysiological recordings. All animal experiments were conducted in accordance with the guidelines and regulations of the National Institutes of Health and Stanford University under APLAC protocol 27694.

Virtual reality environments

The virtual reality recording setup was nearly identical to that in ref. 51. Mice were head-fixed and ran on a 15.2-cm diameter foam roller (ethylene vinyl acetate) constrained to rotate about one axis. Rotation of the cylinder was tracked with a rotary encoder (Yumo 1024P/R) and relayed to a microcontroller (Arduino UNO) using custom software. The virtual reality environment was developed using custom code in Unity 3D. The virtual scene was displayed on three 24-inch monitors surrounding the mouse. Water rewards were delivered through Tygon tubing attached to a custom-built lickport. The lickport consisted of a lick spout and an infrared light beam to detect licks. Water was delivered via opening of a solenoid valve. Phase 3B Neuropixels 1.0 silicon probes52 were mounted on a custom-built rotatable mount and positioned using a micromanipulator (Sensapex uMp). Virtual tracks specific to each task are detailed below.

Build-up track

The build-up track was 320âcm in length with a 300-cm visual lookahead. Upon reaching the end of a track, mice were seamlessly teleported to the beginning of the track without any visual discontinuity. The experiment consisted of nine blocks. The first and last blocks occurred in the dark for a minimum of 10âmin each, where no water rewards were given and no visual features were displayed. Blocks 2â7 consisted of 40 trials each, and block 8 consisted of 20 trials. Starting in the second block, water rewards were automatically dispensed at the end of the track. In the third block, a repeating checkerboard optic flow pattern was displayed on the ground. In blocks 4â7, visually distinct tower landmarks were cumulatively added. In the eighth block, the tower landmark added in the previous block (block 7) was removed. All tower landmarks were displayed on either side of the animal.

Random environment track

The random environment track was 100âcm in length with a 100-cm visual lookahead. Unlike the build-up track, this and the following track did not feature a seamless teleport. The experiment consisted of 14 blocks. The first and last blocks occurred in the dark and consisted of at least 100 trials where no water rewards were given and no visual features were displayed. In the blocks 2â13, consisting of 20 trials each, a set of 5 familiar visual landmarks were pseudo-randomly rearranged. All visual landmarks were visually distinct from one another. Three of the landmarks were tower landmarks, and two were ground landmarks. One of the ground landmarks was associated with a water reward. For each random rearrangement, the distance between any two landmarks had to be at least 15âcm apart and the position of the first landmark had to be at least 15âcm from the start of the track.

Hidden reward track

The hidden reward track was 200âcm in length with a 100-cm lookahead. The track consisted of the same visual landmarks in the random environment track except for the absence of the reward cue. Thus, there were four visual landmarks on the track (three tower landmarks and one ground landmark). The positions (in cm) of the landmarks were 40, 65, 100 and 145 in the baseline condition. Animals could lick anywhere between 160âcm and 170âcm to trigger a water reward. If an animal failed to lick within the zone, they received an automatic reward at 170âcm. Note that for one animal, the hidden reward task was conducted on a 240-cm track instead of a 200-cm track. On this track, all landmarks and the reward zone were pushed back (closer to the end of the track) by 40âcm. To encourage animals to pay attention to their position relative to landmarks, and not simply path integrate a fixed distance between rewards, an offset value was drawn from a uniform distribution between 0âcm and 30âcm on each trial. This offset value was added to the positions of each of the landmarks and the reward zone, resulting in a variable inter-trial distance before encountering the first landmark. This offset value is not shown in Fig. 4 for visualization clarity. The experiment consisted of seven blocks: A, B, C, D, C, B, A. Block A was the same landmark arrangement as the training track and served as the baseline condition. Block B was identical to A, except the last visual landmark was moved 35âcm closer to the start of the track. Block C was identical to block B except the first three landmarks were also moved 35âcm closer to the start of the track. Block D occurred in the dark and consisted of at least 100 trials where no water rewards were given and no visual features were displayed. For the second set of shifts (C, B, A), the landmark arrangement in block C was used as the baseline condition. All blocks except block D consisted of 40 trials each.

Freely moving environment

Chronically implanted mice were trained and recorded in a separate room kept at 5 lux, the same illumination as the virtual reality environment. The data acquisition apparatus consisted of a 165âÃâ225-cm frame surrounded by two black curtains and two walls with large distal visual cues. A 75-cm square open field with 30-cm walls and a single blue cue on the top wall was placed in the centre of the frame 75âcm off the ground.

Behavioural training and handling

Before headbar implantation, mice were given an in-cage running wheel (K3570 and K3251, Bio-Serv). Mice were handled for at least a day before headbar implantation. Handling included holding each mouse for at least 30âs before setting them on the running wheel to encourage their use of it.

After headbar implantation, mice were injected with Rimadyl (Covetrus, 5âmgâkgâ1) and Baytril (VetOne, 10âmgâkgâ1) daily for 3 days. Then, they were restricted to 0.8âml of water per day. Mice were weighed daily to ensure they stayed above 85% of their baseline weight. After at least 1 day of water deprivation, mice began training on the virtual reality setup. All mice received the same initial basic training. The first stage of training acclimated mice to head fixation and running on the virtual reality wheel. No virtual reality visual cues were presented on the monitors. The virtual reality wheel was manually moved to encourage walking on the setup. As the wheel was moved, a 1-ml syringe with water was manually brought closer to the mouse. After a half revolution of the wheel, the syringe dispensed water.

After mice could run on their own (more than half a revolution of the virtual reality wheel), the second stage of training began. This stage acclimated mice to a lickport, which dispensed water (approximately 1.5â2âµl at a time) and detected licks with an infrared beam sensor. The dispensation of water was associated with the sound of a solenoid valve opening. After mice reliably licked upon hearing the solenoid click, they proceeded to task-specific training, as described below.

Build-up track

Mice continued training in the absence of any virtual reality visual features. They were trained on a training track that was completely dark. The track contained an automatic water reward that was given after a certain distance was run. This distance was increased adaptively within and across training sessions to encourage consistent running in the dark. To avoid training mice to encode any particular distance, the average distance required to receive a water reward was different from session to session, ranging between 400âcm and 1,000âcm between rewards. Mice were considered ready for recordings when their average speed exceeded 250 virtual cmâsâ1 for several days.

Random environment track

Mice were introduced to the 5 visual landmarks that would be used in the experiment by running on a virtual reality track with a specific arrangement of the landmarks for 100 trials per day for 3 consecutive days. Then, the day before recording, mice were first trained with the same arrangement of landmarks for 20 trials followed by 11 blocks of 20 trials each where the landmark locations were pseudo-randomly shuffled. This shuffling of landmarks was done to ensure mice still licked at the reward cue. If a mouse did not complete 100 trials in less than 1âh, the original training was completed with the same initial arrangement of landmarks. Mice were ready for recordings when they could run 100 trials in less than 1âh.

Hidden reward track

Upon completion of recordings in the random rearrangement experiment, seven mice were used for the hidden reward experiment. The visual landmarks in both tracks were identical except for the absence of a visible reward cue in the hidden reward experiment. Training proceeded by giving mice an automatic water reward at the end of a hidden reward zone. Initially, the hidden reward zone was 30âcm in width so that any licks within 30âcm of the end of the zone would trigger a water reward to be dispensed. The reward zone was gradually decreased in size over training sessions until it was 10âcm in width. To maintain running behaviour, animals received an automatic reward at the end of the reward zone if they failed to lick within the reward zone. Mice were ready for recordings when they triggered water rewards with licks within the reward zone before the automatic reward on at least 70% of trials.

Open field

Mice used in chronic electrophysiology recordings experienced the build-up track training and also open-field exposure each day. Mice were first habituated to the data acquisition room and experimenter, and were encouraged to explore a 50-cm open field containing several objects for 30âmin with their littermates for 2 days. Then, they explored a 75-cm open field with vanilla sprinkles scattered throughout for 30âmin daily for the remainder of the virtual reality training (7â9 days).

Surgeries

For all surgeries, a mixture of oxygen and isoflurane was used for induction (4%) and maintenance (0.5â1.5%) of anaesthesia. After induction, buprenorphine (0.05â0.1âmgâkgâ1) was injected subcutaneously. Upon completion of surgery and for 3 additional days after, Baytril (10âmgâkgâ1) and Rimadyl (5âmgâkgâ1) were subcutaneously injected.

Headbar surgery

The first surgery consisted of attaching a stainless steel headbar, implanting a gold ground pin and making fiducial marks on the skull. The headbar was cemented to the skull with Metabond (S380, Parkell). The ground pin was implanted roughly 2.5âmm anterior and 1.5âmm lateral of bregma. Fiducial marks were made at ±3.3âmm relative to midline and 3.7âmm posterior of bregma. These marks served to guide insertion of the Neuropixels probes and microsyringes for muscimol infusion.

Bilateral craniotomies

Following completion of training, bilateral craniotomy surgeries were conducted. Craniotomies removed a small portion (approximately 200âµm along the mediolateral axis and approximately 300âµm along the anteroposterior axis) of the skull posterior to the fiducial marks and exposed the transverse sinus. Craniotomies were covered with a silicone elastomer (KWIK-SIL, World Precision Instruments). Then, a small plastic well was implanted over each craniotomy and affixed with dental cement. Immediately following surgery, dexamethasone (2âmgâkgâ1) was injected subcutaneously.

Microsyringe infusions

For the hidden reward task, bilateral injections of muscimol (M15223, Sigma-Aldrich) or saline control in the MEC were performed in seven mice. In addition, two of the seven mice also received BODIPY TMR-X fluorescent muscimol (M23400, Thermo Fisher) injections on a separate day to confirm the neural and behavioural effects of fluorescent muscimol compared with untagged muscimol. An additional four mice received bilateral injections of fluorescent muscimol using identical coordinates to the seven mice in the MEC without behaviour or Neuropixels recording, which showed consistent MEC microinfusion targeting with minimal spread to the parasubiculum. See Supplementary Table 1 for a detailed list of all the mice in this study, which sessions they ran and which drugs they received.

Of muscimol or BODIPY TMR-X muscimol conjugate, 1âmg was diluted in 4.3âml and 0.82âml, respectively, of filtered 1X PBS (BP3991, Fisher Scientific), resulting in a final concentration of 2.0âmM.

Before infusion of muscimol or saline control, craniotomies were inspected and cleaned with sterile saline if needed. Bilateral infusions were achieved using a 35-gauge needle (NF35BV-2, World Precision Instruments) and a 10-âµl Hamilton syringe (NANOFIL, World Precision Instrument). The syringe was angled 14° from vertical. Then, the tip of the needle was positioned ±3.3âmm mediolaterally relative to the midline, 100â150âµm in front of the transverse sinus and touching the dura surface. Then, the syringe was advanced into the brain at a rate of 8.3âµmâsâ1 using a Neurostar robot stereotaxic. Of either form of muscimol or saline control, 220âpmol or 110ânl was injected at 334ânlâminâ1 using an ultra-micropump (UMP3, World Precision Instruments) at each of the four sites along the dorsoventral axis in the MEC. These sites were 1,200âµm, 1,950âµm, 2,700âµm and 3,450âµm below the dura. Following injection at each site, the syringe was left in place for 1âmin to allow for sufficient diffusion of the drug.

Chronic implant surgery

A detailed protocol is available53 and described briefly here. Before surgery, a Neuropixels 2.0 (Imec)54 was sharpened (Narishige). Ground and reference pads were shorted and connected to a male gold pin. The probe assembly (body piece, wing, front and back flex holders, and dome) was 3D printed (Formlabs). The body piece was affixed to the wing by screws and glued to the back of the probe. A skull screw was connected to a female gold pin via a silver wire.

Following completion of training, implantation surgeries were conducted for the four mice used in the chronic electrophysiology studies. A craniotomy was made over the left or right MEC, as described above. The probe was sterilized in isopropyl alcohol and dipped in DiD (V22889, Thermo Fisher). The probe was dipped 10â15 times with 10âs in between dips. The probe was attached to a stereotactic robot (Neurostar), body piece oriented rostrally and parallel to the headbar, and inserted at 10° from vertical, tip pointed rostrally, as close to the sinus as possible. The probe was advanced at 0.5âmmâsâ1 to 800-μm angled depth below the brain surface, then at 3.3âμmâsâ1 to an angled depth of 3,200â3,400âμm. The craniotomy was filled with silicone gel (Dow Corning). The male pin of the ground-reference wire from the probe was attached to the female pin of the ground screw and secured by UV-cured acrylic (Pearson Dental). The wing was securely attached to the headbar and skull, creating a ring from the side of the well, across the wing and around to the ground screw, by dental cement (Lang Dental). The dome was attached to the posterior border of the skull, attached by UV-cured acrylic and secured by dental cement. The stereotactic holder was released from the probe and removed. The flex cable holders were placed just above the body piece with the tab slot caudal, closed with electrical tape and attached to the antennae of the dome with dental cement. The entire implant was covered with copper tape and the flex cable was folded over into the tab slot, which was closed with electrical tape.

Acute electrophysiological data collection

All recordings took place after the mice had recovered from bilateral craniotomy surgeries (a minimum of 16âh later). Immediately before recording, the Neuropixels probe was dipped in one of three dyes (DiD, DiI or DiO; V22889, Thermo Fisher). The probe was dipped 10â15 times with 10âs in between dips. If three recordings had already been performed in one hemisphere of the brain, no dye was used for subsequent recordings in that hemisphere. Dyes were used to reconstruct the probe location for undyed recordings, as described below. Then, mice were head-fixed on the virtual reality setup, and the craniotomy sites were exposed and rinsed with saline. The Neuropixels probe was angled 12â14° from vertical and positioned using the fiducial marks made during the headbar surgery and 50â200âµm anterior of the transverse sinus. The mediolateral position of the probe relative to the medial edge of the fiducial mark, the anteroposterior position relative to the transverse sinus and the angle relative to vertical were all recorded for replicability and probe localization (see âHistology and probe localizationâ below). The 384 active recording sites on the probe were the sites occupying the 4âmm closest to the tip of the probe. The reference and ground were shorted together and the reference electrode was connected to the gold pin implanted in the skull. Then, the probe was advanced into the brain using a Sensapex micromanipulator, typically at 5âµmâsâ1 but no faster than 10âµmâsâ1. Insertion of the probe continued until it reached the skull or until several channels near the tip of the probe were quiet. Then, the probe was retracted 100â150âµm and allowed to settle for at least 15âmin before recording. Finally, the craniotomy site was covered in silicon oil (378429, Sigma-Aldrich).

Raw signals were filtered, amplified, multiplexed and digitized on-probe. SpikeGLX (https://billkarsh.github.io/SpikeGLX/) was used to sample voltage traces at 30âkHz and filter between 300âHz and 10âkHz for the action potential (AP) band. SpikeGLX was also used to acquire auxiliary signals. Each Unity virtual reality frame emitted a transistorâtransistor logic (TTL) pulse, which was first relayed to an Arduino UNO, which was then relayed to an auxiliary National Instruments data acquisition card (NI PXIe-6341). These auxiliary signals were used to synchronize virtual reality data with electrophysiological traces.

At the end of each recording, both craniotomies were inspected, rinsed with saline and then covered in Kwik-Sil. The probe was rinsed and soaked for at least 10âmin in a 2% solution of Tergazyme (16-000-116, Fisher Scientific) in deionized water and then rinsed with deionized water alone.

Chronic electrophysiological data collection

Mice were food restricted to 85â90% of baseline weight. During each chronic electrophysiology session, mice ran head-fixed on a dark, featureless 1D track with no water rewards for at least 140 trials at 200âcm per trial. Immediately afterwards, they explored a 75-cm open field with vanilla sprinkles scattered throughout for 60â70âmin. RGB video was captured at 50âfps with 10âpixelsâcmâ1 resolution (BFS-U3-23S3C-C, FLIR Blackfly) using a custom Python script using FLIR Spinnaker API.

Histology and probe localization

After the last recording session, mice were euthanized with an overdose of pentobarbital and transcardially perfused with PBS followed by 4% paraformaldehyde. Then, brains were extracted and stored in 4% paraformaldehyde for at least 24âh and then transferred to 30% sucrose solution for at least 24âh. Finally, brains were frozen and cut into 100-µm sagittal sections with a cryostat, stained with DAPI, and imaged using widefield microscopy (Zeiss Axio Imager 2). All sections that contained dye fluorescence were aligned to the Allen Mouse Brain Atlas using software written in MATLAB55. Then, probe tracks were reconstructed by localizing dye fluorescence in each section. Probe locations for recordings without dyes were estimated relative to previous recordings, using two common reference locations: mediolateral distance relative to the medial edge of the fiducial mark, and distance anterior of the anterior edge of the transverse sinus.

Quantification of virtual reality behaviour

Anticipatory licking (as in Fig. 4k, for example) was calculated using a standard method for behavioural selectivity56,57. This metric is calculated as the following:

$${{\rm{lick}}}_{{\rm{ant}}}=\frac{{{\rm{lick}}}_{{\rm{near}}}-{{\rm{lick}}}_{{\rm{far}}}}{{{\rm{lick}}}_{{\rm{near}}}+{{\rm{lick}}}_{{\rm{far}}}}$$

where licknear represents the average lick rate in a zone starting one-tenth of the length of the track before the reward zone and ending at the start of the reward zone, and lickfar represents the average lick rate in a zone starting one-tenth of the length of the track before the centre of the track and ending at the centre of the track. This metric ranges from â1 to +1, with perfect anticipatory licking behaviour yielding a value of +1 and chance level behaviour of 0. We computed a similar metric for anticipatory slowing behaviour as follows, using the same zones but substituting lick rate with time elapsed:

$${{\rm{slowing}}}_{{\rm{ant}}}=\frac{{{\rm{slowing}}}_{{\rm{near}}}-{{\rm{slowing}}}_{{\rm{far}}}}{{{\rm{slowing}}}_{{\rm{near}}}+{{\rm{slowing}}}_{{\rm{far}}}}$$

For the random rearrangement track, we also calculated an accuracy score for each block of 20 trials where rewards were present. This score represents the fraction of trials out of all the trials within the block that the animal successfully triggered reward delivery before an automatic reward was delivered. The animal could trigger a reward by licking in the first half of the reward zone (first 10âcm within the reward zone). If an animal failed to lick within the first half of the reward zone, an automatic reward was delivered at 10âcm into the zone, but the trial counted as an incorrect trial.

Position tracking in freely moving recordings

Position and head direction were calculated from tracking green and red LEDs on the headstage holder. First, for each arena, we manually labelled 20 frames each from 4 videos and trained a DeepLabCut (v2.2.0.6)58,59 ResNet-50-based neural network for up to 100,000 iterations, as test error plateaued after this. We selected the model with the lowest test error, which was always less than 2âpixels. Models with high numbers of outliers (training videos had more than 100 frames with labels that jumped more than 2.5âcm) had outlier frames manually labelled and were retrained with these additional frames. These models were then used to analyse all videos collected from the same arena. Second, labels were used if they met all the following criteria: more than 90% likelihood, inside arena boundaries and distance between temporally adjacent labels of less than 2.5âcm. Missing frames were interpolated over unless they spanned more than 10 contiguous frames and more than 5âcm, in which case they were left blank. Animal coordinates and head direction were extracted from the final LED labels and smoothed with a Hanning filter length of 15 frames.

Offline spike sorting

Spike sorting was performed offline using the open source spike sorting algorithm Kilosort2 (ref. 60). Clusters were manually inspected and curated in Phy (https://github.com/cortex-lab/phy). Clusters containing fewer than 500 spikes were discarded. Clusters were also discarded if their peak-to-peak amplitude over noise ratio was less than 3, in which noise was estimated by calculating the standard deviation of a 10-ms window preceding each spike. All remaining clusters were manually examined and were discarded if they did not appear to be from single well-isolated units. For chronic recordings, neural data streams from the virtual reality and freely moving sessions were concatenated using CatGT (https://github.com/billkarsh/CatGT) before spike sorting.

Virtual reality data were then synchronized to spiking data using the TTL pulse times from Unity and the recorded TTL pulses in SpikeGLX. Custom code written in MATLAB was used to synchronize the data. Confirmation of appropriate syncing was accomplished by noting a high correlation between virtual reality time differences and the corresponding TTL time differences recorded in SpikeGLX. Finally, because the virtual reality frame rate was not constant, we used interpolation to resample behavioural data at a constant 50âHz, similar to ref. 51. Data were not speed filtered. To calculate spatial firing rates, spikes were first binned into 2-cm spatial bins and divided by time occupancy per spatial bin. Then, the firing rates were smoothed with a 2-bin Gaussian kernel.

Identification of grid cells in freely moving conditions

Spatial tuning curves in freely moving conditions were computed by binning spikes into 2.5âÃâ2.5-cm bins. Cells with average firing rates greater than 40âHz were excluded. To identify putative grid cells, we considered two approaches. First, we computed a âgrid scoreâ for each neuron61 (Extended Data Fig. 3). Grid score was computed by a circular sample of 2D spatial autocorrelograms, correlated with the same sample rotated 30°, 60°, 90°, 120° and 150o. Grid score was defined as the minimum difference between the 60° and 120°, and the 30°, 90° and 150° rotations61. Grid cells were identified as those cells whose grid score exceeded the 99th percentile of a shuffled distribution of grid scores, computed by time-shifting individual spike trains 1,000 times. Using this approach, we observed a fair number of false positives (cells that did not clearly have hexagonally spaced firing fields but were classified as grid cells) and false negatives (cells with clear hexagonal firing fields that were not classified as grid cells). Thus, we used a second approach, which was recently applied ref. 34, clustering the spatial autocorrelograms of the 2D tuning curves. As in ref. 34, we used uniform manifold approximation and projection (UMAP) to project the spatial autocorrelograms of individual neurons into two dimensions (Extended Data Fig. 3), after removing the central peaks from the autocorrelograms and z-scoring them. We matched the UMAP settings in ref. 34. We then clustered the resulting 2D projections. We found that this approach more reliably separated grid cells and non-grid cells. However, we found it less successful than reported in ref. 34 at separating distinct modules, perhaps because we had fewer cells recorded from each module, or because our 2D tuning curves were slightly less clean than those recorded from rats in ref. 34.

Identification of non-grid spatial cells in head-fixed virtual reality conditions

We identified non-grid spatial cells as those cells not identified as grid cells whose tuning curves in the first half of a virtual reality block had a Pearson correlation of more than 0.2 with their tuning curves in the second half of the virtual reality block, averaged across virtual reality blocks.

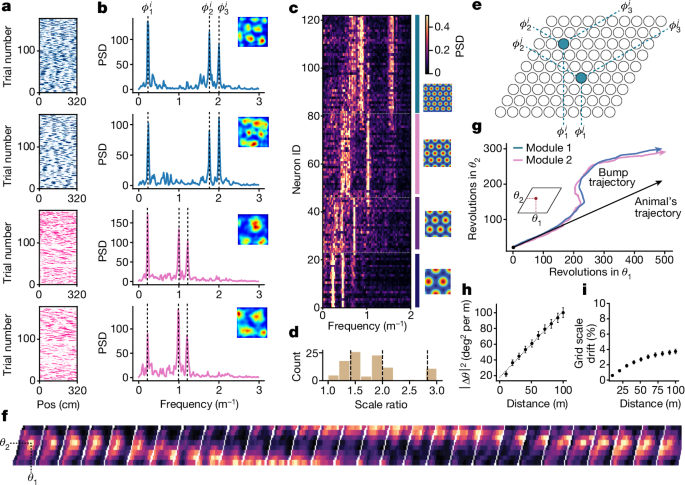

Identification of grid cells in head-fixed complete darkness conditions

We identified putative grid cells by computing spectrograms for the spatial firing rate of each neuron in the dark. We first z-scored the spatial firing rates of each cell. Spectrograms were computed using scipy.signal.spectrogram with npersegâ=â1,600 and noverlapâ=â1,400, corresponding to segments of width 32âm (or 10 laps on the build-up track) with measurements every 4âm (example single-cell spectrograms in Extended Data Fig. 4). The segment width and overlap were chosen to balance resolution in the frequency domain (to give good accuracy in inferring peak locations) with resolution in the time domain (as the location of the three peaks drifts over many trials in the dark, consistent with previous work showing grid cells accumulate error and drift in complete darkness)16,32,62 (Fig. 1h and Extended Data Figs. 2 and 4). We chose slightly different values for nperseg depending on the number of dark trials in a given experiment; values for all conditions are reported in the data reporting Excel sheet.

We then extracted co-modular grid cells, neurons that shared the same grid spacing and orientation but differed in phase, by applying k-means clustering to the spectrograms (using kâ=â5 or 6). We identified a cluster as a putative grid cell module if the average spectrogram correlation (Pearson r) was greater than 0.3 across windows in the first block of trials (nâ=â15,342 out of 68,484 neurons). We found that these correlations were preserved over much longer timescales, from the first block of dark trials at the beginning of the session to the final block of dark trials at the end of the session (Extended Data Fig. 2).

Sorting grid cells onto a neural sheet

The hexagonal spatial map of an idealized grid cell can be written as the sum of three plane waves oriented at 0°, 60° and 120°,

$${r}_{i}(\vec{x})=\mathop{\sum }\limits_{a=1}^{3}\cos (\,{\vec{k}}_{a}\cdot \vec{x}-{\phi }_{a}^{i})$$

Where \({r}_{i}(\vec{x})\) is the activity of neuron i at 2D location \(\vec{x}\) and \({\vec{k}}_{a},a=\mathrm{1,}\,\mathrm{2,}\,3,\) are wave vectors oriented at 0°, 60° and 120°, which set the overall grid scale of the module. Hence, each grid cell in a module is uniquely determined by its phases \({\phi }_{a}^{i}\). Our goal was to infer these phases from recordings while the animal is running on the 1D virtual reality track. In 1D, the position of the animal is restricted to lie along a line at some angle γ, \(\vec{x}=\left(x\cos \left(\gamma \right),{x}\sin \left(\gamma \right)\right)\), and hence the 2D wave vectors are transformed to 1D scalar frequencies \({f}_{a}={\vec{k}}_{a}\,\cdot (\cos \left(\gamma \right),\sin \left(\gamma \right))\)36,

$${r}_{i}(x)=\mathop{\sum }\limits_{a=1}^{3}\cos (\,{f}_{a}x-{\phi }_{a}^{i})$$

These three frequencies \({f}_{a}\) are the peaks that we observed in the PSDs in Fig. 1. To estimate \({f}_{a}\), we used a standard peak-finding algorithm (scipy.signal.find_peaks) to identify the largest three peaks in the PSD within each window, \({\hat{f}}_{a}\). For each neuron i of N neurons, we then extracted the phases \({\phi }_{a}^{i},a=\mathrm{1,}\,\mathrm{2,}\,3\), of the Fourier transform at the location of each peak (Fig. 1b):

$${\phi }_{a}^{i}=\arg \,\left[\,{\int }_{{x}_{a}}^{{x}_{b}}dx\,{{\mathtt{e}}}^{-{\mathtt{i}}2\pi x{\hat{f}}_{a}}\,{r}_{i}(x)\right]$$

Where xa and xb represent the start and end of a window, with the same size as above (32âm). We found that estimated phases were highly consistent across independent windows in the dark (Extended Data Fig. 2e). To obtain refined estimates, we averaged estimated phases across windows in the dark. We used these estimated phases to extract the instantaneous attractor state of the grid cell population activity, and to sort grid cells onto a fictitious neural sheet (Fig. 1e) so that nearby cells have similar phases (described below).

To extract the moment-by-moment attractor state \(\vec{\theta }(x)\) of the grid cell population activity, we estimated the centre of mass of the bump of activity on the sheet as follows. We computed the average of the phases of the cells on the neural sheet weighted by their (filtered) firing rates \({r}_{i}(\vec{x})\). Because the phases are periodic, we computed a circular average via the following formula,

$${\psi }_{a}\left(x\right)={\rm{atan}}2\left(\mathop{\sum }\limits_{i=1}^{N}\sin \left({\phi }_{a}^{i}\right){r}_{i}\left(x\right),\mathop{\sum }\limits_{i=1}^{N}\cos \left({\phi }_{a}^{i}\right){r}_{i}\left(x\right)\right)$$

Where \({\psi }_{a}\left(x\right)\,,a=\mathrm{1,2,3}\) represent the firing-rate-weighted average of each phase. If the population activity of grid cells is well described by the motions of a bump on a 2D sheet, then the three coordinates \({\psi }_{a}\left(x\right)\) represent only 2âd.f. In Extended Data Fig. 2d, we showed that \({\psi }_{a}\left(x\right)\) does not take on all possible values, but are indeed restricted to a 2D subspace. We can therefore extract these 2 d.f., \({\theta }_{1},\,{\theta }_{2}\), which represent the centre of mass of the bump of activity on the sheet,

$${\theta }_{1}={\psi }_{1}-\frac{{\psi }_{2}}{2},\,\,{\theta }_{2}=\frac{\sqrt{3}}{2}{\psi }_{2}$$

This transformation captures the hexagonal geometry of the grid cell lattice63, and converts the firing-rate-weighted average of each phase \({\psi }_{a}\left(x\right)\) to 2D coordinates \({\theta }_{1},{\theta }_{2}\) on a unit cell of the neural sheet, as illustrated in Fig. 1e. We can then visualize the trajectory of the bump of activity on the sheet by plotting the two coordinates θ1 and θ2 as the animal traverses the virtual reality environment. We plot θ1 and θ2 for one full session as a heatmap over spatial bins and trials in Fig. 2c. As the neural sheet is periodic, we also occasionally unwrapped \({\theta }_{1},{\theta }_{2}\) to plot them as continuous trajectories across multiple copies of the neural sheet (Figs. 1g and 2d,i). Unwrapping was performed using np.unwrap on \({\psi }_{a}(x)\) before converting to \({\theta }_{1},{\theta }_{2}\). Note that this unwrapping is used only for visualization and not used in data analyses. Unwrapping is sensitive to noise and often leads to unwrapping errors in which the unwrapped trajectory becomes dislocated by one neural sheet length. To mitigate this issue, in Extended Data Fig. 5, we adopted the following approach. Owing to the discontinuous nature of the unwrapping procedure, it is sensitive to noise only at the edge of the neural sheet. However, the location of the edge of the neural sheet is arbitrary (we can experimentally measure only the relative phases between grid cells; the location of the origin is arbitrarily chosen). Moreover, different choices of the origin will lead to more or fewer unwrapping errors. Taking advantage of this freedom, we performed a grid search over 100 different choices of the origin for each window of 5 trials by applying a global shift to all the phases of a cell \({\phi }_{a}^{i}\to {\phi }_{a}^{i}+{\eta }_{a}\,{\rm{mod}}\,2\pi ,{\eta }_{a}\in [0,2\pi ]\), and kept the choice of origin, which leads to the most consistent trajectories over the 5 trial window (the underlying assumption is that trajectories do not drift much over windows of 5 trials, and that unwrapping errors will produce inconsistent trajectories). This procedure is somewhat cumbersome but leads to cleaner visualizations of the unwrapped bump trajectory for noisy trajectories.

The above procedure for extracting the moment-by-moment centre of mass of the bump on the neural sheet has a geometrically equivalent interpretation as identifying the instantaneous location of the high-dimensional population activity vector on a toroidal attractor. To see this, note that computing the arguments of the circular average above,

$${\psi }_{a}(x)={\rm{atan}}2\left(\mathop{\sum }\limits_{i=1}^{N}\sin ({\phi }_{a}^{i})\,{r}_{i}(x),\mathop{\sum }\limits_{i=1}^{N}\cos ({\phi }_{a}^{i})\,{r}_{i}(x)\right)$$

involves amounts to projecting the N-dimensional neural activity vector ri (x) onto three pairs of axis spaces spanned by the vectors \({u}_{a}^{i}=\cos ({\phi }_{a}^{i})\) and \({v}_{a}^{i}=\sin ({\phi }_{a}^{i})\). Now recall that the firing rate of an idealized grid cell in 1D can be written as,

$${r}_{i}(x)=\mathop{\sum }\limits_{a=1}^{3}\cos (\,{f}_{a}x-{\phi }_{a}^{i})$$

This can be rearrange to find,

$$\begin{array}{l}{r}_{i}(x)=\mathop{\sum }\limits_{a=1}^{3}\cos ({\phi }_{a}^{i})\,\cos (\,{f}_{a}x)+\sin ({\phi }_{a}^{i})\,\sin (\,{f}_{a}x)\\ \,\,=\mathop{\sum }\limits_{a=1}^{3}{u}_{a}^{i}\,\cos (\,{f}_{a}x)+{v}_{a}^{i}\,\sin (\,{f}_{a}x)\end{array}$$

Hence, the idealized grid cell population activity lives in a subspace spanned by the same three pairs of axes as those that we projected onto to compute the centre of mass of the bump on the neural sheet. Within each pair of axes, the activity lies along a ring, and in the full subspace, lies along a torus (Extended Data Fig. 2a). Therefore, if we have reliably inferred the phases of our recorded grid cells, and they are well approximated by the idealized grid cells above, then projecting their population activity onto these three pairs of axes should reveal three rings. Indeed this is what we found for our recordings on the 1D virtual reality track (Extended Data Fig. 2b,c), indicating that the population activity lies close to a toroidal attractor. Note that estimating the firing-rate-weighted average of each phase on the neural sheet \({\psi }_{a}(x)\) is mathematically equivalent to extracting the angle on each of the three rings. Hence, the moment-by-moment centre of mass of the bump of activity on the neural sheet is in one-to-one correspondence with the instantaneous location of the high-dimensional population activity on the toroidal attractor.

To visualize the population activity of co-modular grid cells as a pattern of activity across the fictitious neural sheet, we identified the estimated phases \({\phi }_{\mathrm{1,2,3}}^{i}\) with locations on the 2D neural sheet \({s}_{1}^{i},\,{s}_{2}^{i}\) using the same transformation used to compute θ1 and θ2 above,

$${s}_{1}^{i}={\phi }_{1}^{i}-\frac{{\phi }_{2}^{i}}{2},\,\,{s}_{2}^{i}=\frac{\sqrt{3}}{2}{\phi }_{2}^{i}$$

This transformation is schematized in Fig. 1e. Because this transformation requires only 2âd.f., we selected the two phases that were best correlated across windows in the first block of dark trials. To visualize population activity at an instant in time in this sort (Fig. 1e,f), we binned the two sheet coordinates \({s}_{1}^{i},{s}_{2}^{i}\) into \(\sqrt{N}\times \sqrt{N}\) quantiles and plotted (z-scored and Gaussian filtered) activity as a heatmap on this grid. Because this analysis requires good coverage of the 2D phase space, we restricted this analysis to sessions in which 36 or more cleanly recorded co-modular grid cells were recorded simultaneously within a module.

Remapping between virtual reality blocks

To quantify remapping between blocks in the build-up track environment, we computed the spatial correlation of grid cell population activity \({r}_{i}^{\alpha },{r}_{i}^{\beta }\), for all pairs of trials α and β. Averaged across modules, sessions and animals, the resulting correlation matrix (Fig. 2f, bottom) revealed that population activity was stable within a block, but differed between consecutive blocks. The sharp boundaries indicated that remapping happened quickly. To quantify the rate of remapping, we additionally computed the average correlation within a sliding window of five trials (Fig. 2f, top),

$$\bar{{\rho }_{t}}=\frac{1}{10}\mathop{\sum }\limits_{\alpha > \beta }^{5}\rho \left(t+\alpha ,\,t+\beta \right)$$

Where Ï(α, β) represents the spatial correlation between trials a and b. Finally, to visually inspect the differences in tuning curves between consecutive environments at a single-cell level, and within a single session, we compared single-cell spatial tuning curves averaged over all 40 trials within the tower 2 environment (block 6) and the 40 trials within the tower 3 environment (block 7; Fig. 2h).

Distortions to grid cell tuning curves in the presence of landmarks

We quantified the anisometry of neural trajectories on the attractor by computing the arc length s of the trajectory of the centre of mass of the activity bump on the neural sheet over intervals corresponding to 2âcm in real space, \(s(x)=\sqrt{d{\theta }_{1}{(x)}^{2}+d{\theta }_{2}{(x)}^{2}}\), where \(d{\theta }_{1}\left(x\right)={\theta }_{1}\left(x+dx\right)-{\theta }_{1}\left(x\right),d{\theta }_{2}\left(x\right)={\theta }_{2}\left(x+dx\right)-{\theta }_{2}(x)\), and dxâ=â2âcm. To handle periodic boundary conditions, we first unwrapped the coordinates θ1 and θ2 before computing s. Intuitively, s quantified the distance the activity bump travelled on the neural sheet when the animal traversed 2âcm on the virtual reality track. If the mapping from the position of the animal to the position of the bump on the neural sheet is an isometry (that is, distances in real space are proportional to distances on the neural sheet), then \(s\left(x\right)\) should not vary from one position on the track to the next. Hence, to quantify the anisometry of the grid cell map, we measured the variation in \(s(x)\). To obtain a dimensionless measure of anisometry, we calculated the coefficient of variation in \(s\left(x\right)\) over windows of length of 16âm (Fig. 3c).

The second quantity that we used to measure distortions to the grid cell spatial map in the presence of visual landmarks is geodesic curvature (Fig. 3d). Intuitively, as the animal travels in a straight line on the treadmill through the virtual reality environment, if the grid cell spatial map faithfully captured the trajectory of the animal, the bump of activity should travel in a straight line trajectory on the neural sheet, or, equivalently, the neural activity should trace out a geodesic trajectory on the torus (a geodesic being the generalization of a straight line on a curved manifold). Parameterizing the 2D torus by the coordinates θ1 and θ2, the geodesic curvature \({\kappa }_{g}\) reduces to the planar curvature:

$${\kappa }_{g}=\frac{{\theta }_{1}^{{\prime} }\,{\theta }_{2}^{{\prime\prime} }-{\theta }_{1}^{{\prime} }\,{\theta }_{2}^{{\prime\prime} }}{{\left({{\theta }_{1}^{{\prime} }}^{2}+{{\theta }_{2}^{{\prime} }}^{2}\right)}^{3/2}}$$

To handle periodic boundary conditions, we first unwrapped the coordinates θ1 and θ2 before computing \({\kappa }_{g}\). To extract a single scalar D measuring the curvature of a single-trial trajectory, we integrated the geodesic curvature along the trajectory as the animal completes one lap down the virtual reality track:

$$D={\int }_{C}| {\kappa }_{g}| dx$$

Where x represents the position along the track, and C represents a single lap down the track. We computed the single-trial geodesic curvatures for trials in the dark and trials in virtual reality, and found that trials in virtual reality have significantly higher geodesic curvature (Fig. 3d).

Pinning strength

To quantify the local effect of a virtual reality landmark on the bump trajectory in the random environments experiment, we computed the trial-by-trial position of the bump of activity, conditioned on the animal being at the location of one of the virtual reality landmarks \({\theta }_{l}^{t}\equiv \theta ({x}_{l}^{t})\), where \({x}_{l}^{t}\) represents the position of landmark l on the virtual reality track on trial t. \({\theta }_{l}^{t}\) was plotted across trials in one session in Fig. 3e for all five virtual reality landmarks. We then considered the extent to which landmarks were âstrongly pinningâ, in the sense that the bump of activity was pinned to the same location on the neural sheet each time the animal passed a given landmark (Fig. 3e). To quantify this, for each landmark, we measured the dispersion of \({\theta }_{l}^{t}\) around its mean \(\bar{{\theta }_{l}}\) across trials:

$${\sigma }_{l}^{2}=\frac{1}{T}\mathop{\sum }\limits_{t}^{T}{||{\theta }_{l}^{t}-\bar{{\theta }_{l}}||}_{P}$$

2D dynamical model

We modelled the activity of a population of grid cells by a bump moving on a periodic sheet, with the topology of a torus, which is twisted such that grid cells exhibit hexagonal rather than square firing patterns63. In the absence of landmarks, the bump moves in straight lines with a velocity \(\vec{v}\). We simulated the effect of visual landmark i on the grid cell code as âpinningâ the bump of activity to a preferred location \(\vec{{\rho }_{i}}\),

$$\frac{d}{{dt}}\vec{\theta }=\vec{v}+\mathop{\sum }\limits_{i=1}^{P}{\alpha }_{i}d(\vec{{\rho }_{i}},\vec{\theta })F({p}_{i}-x)$$

Where x is the position of the animal on the track, \({p}_{i}\) is the location of landmark i on the virtual reality track, \(\vec{{\rho }_{i}}\) is the preferred 2D pinning location of that landmark on the grid cell sheet and αi represents the pinning strength of the landmark. d(·,·) represents the (signed) displacement between two positions on the neural sheet, taking into account the hexagonal periodic boundary conditions as in ref. 63. F parameterizes the range of influence of landmarks on the grid cell code. We found that the precise functional form of F did not make a crucial difference, so we chose a particularly simple form: \(F\left({p}_{i}-x\right)=\Theta (25-|{p}_{i}-25-x|),\) where \(\Theta \) is the Heaviside function, so that each landmark only influenced the grid cell code in the 50âcm leading up to its location on the virtual reality track \({p}_{i}\).

Finally, we modelled the velocity as a straight-line trajectory with angle Ï, \(\vec{v}(t)=|v|(\cos (\varphi ),\sin (\varphi )){t}\), where the slice angle drifts with time at a rate δ. The magnitude (or gain) \(\left|v\right|\) governs the grid scale.

We estimated \(\left|v\right|\) and δ using the responses of the grid cells in the dark, as described above. To fit \({\rho }_{i}\) and \({\alpha }_{i}\), we held out one block of the random environments experiment at a time, and used the remaining 11 blocks to estimate Ïi, by calculating the average location of the bump of activity when the animal was at the location of each landmark on the virtual reality track across all 11 blocks, and αi, by the average stability of the grid cell code around the landmark (Extended Data Fig. 5b).

Using these estimated values, we simulated the dynamical model above and obtained estimated firing rates \({\hat{r}}_{i}(x)\) for each grid cell. We evaluated the performance of the model by computing the Pearson correlation between the estimated firing rates \({\hat{r}}_{i}(x)\) and the true firing rates \({r}_{i}(x)\). We compared with two shuffled conditions. In the first, we repeatedly shifted the predicted firing rates of each cell by a random amount, \({\hat{r}}_{i}(\left(x+\delta )\,\mathrm{mod}{L}\right)\), \(\delta \sim Uniform(0,L)\), where L is the length of the track and mod L accounts for the periodic nature of the track (Fig. 3f). This shuffle is stronger than simply shuffling spikes, as it preserves the spatial structure of the predicted tuning curves and shifts only the locations of their peaks. In the second, we fed a randomly shuffled set of landmark locations to the model (Extended Data Fig. 8e). This shuffle was designed to test whether the model uses information about the locations of the landmarks in the heldout environment to predict the tuning curves of the grid cells, or simply reproduces their average firing properties.

Finally, we found that the performance of the model tended to be higher on blocks of trials where the grid cell code was stable. To illustrate this effect, we plotted a 2D histogram of grid cell code stability (defined as the spatial correlation between the average population activity in the first half of the block and the second half of the block) and model performance (Fig. 3g). To visualize the rate maps of simulated cells (Fig. 3i,j), we drew spikes from a Poisson process based on the underlying firing rate \({r}_{i}(x)\) within each spatial bin.

Position decoding analysis

To determine whether the grid cell code provided a consistent map across all three shifted environments in the hidden reward task, we trained a linear decoder to predict the location of the animal on the track from grid cell population activity. Because single grid cell modules are periodic on length scales shorter than the length of the track, we trained the decoder on the combined population activity of all simultaneously recorded grid cell modules, \({r}_{i}\left(x\right),i=1,\ldots ,{N}_{g}\), where Ng represents the total number of grid cells in a recording, so that the position of the animal could be uniquely decoded. Owing to the periodic nature of the virtual reality track, we used circularâlinear regression, and trained the decoder to predict two coordinates: \(\cos (2\pi \,x/L)\), \(\sin (2\pi \,x/L)\) using two sets of regression coefficients, β1 and β2, and a least-squares objective. The predicted position of the animal was then given by

$$\widehat{x}=\arctan 2\left(\mathop{\sum }\limits_{i=1}^{{N}_{g}}{\beta }_{i}^{1}\,{r}_{i}\left(x\right),\mathop{\sum }\limits_{i=1}^{{N}_{g}}{\beta }_{i}^{2}\,{r}_{i}\left(x\right)\,\right)$$

The decoder was trained on a random set of one-half of the trials across all three blocks in the tower-shift environment, and evaluated on the held-out half. Cross-validated predictions, averaged across sessions and animals, are shown in Fig. 4b, right.

Linking neural activity and behaviour

For the tower-shift task, we defined the trial-by-trial stability of the grid cell code as the average spatial correlation of the grid cell population activity on a given trial with all other trials in the block, in the 50âcm preceding the hidden reward zone. Licking error was defined as the average distance between the non-consummatory licks by the animal on that trial and the start of the hidden reward zone.

Plastic downstream decoder

To model a flexible downstream decoder that could consistently predict the hidden reward location across all three environments, we simulated a biologically plausible learning rule. The decoder was initialized with a random set of afferent weights \({w}_{i},{i}=1,\,\ldots ,\,{N}_{g}\) from all grid cells. Each time the animal licked and received a reward, the weights were updated in the direction of the grid cells active at the moment the animal licked.

$${w}_{i}^{t+1}={w}_{i}^{t}+\eta \,{r}_{i}\left(x\right)l(x)$$

Where \(l(x)\) is an integer-valued function representing the number of consummatory licks in the spatial bin indexed by x. We set ηâ=â1 in our simulations. We found that the outputs

$$y=f\left(\mathop{\sum }\limits_{i=1}^{{N}_{g}}{w}_{i}\left(x\right){r}_{i}\left(x\right)\right)$$

where f is a sigmoidal non-linearity, rapidly adapted to deformations in the grid cell code to consistently predict the hidden reward location across tower-shift environments (Fig. 4n). We simulated the effect of a plasticity knockout by allowing the decoder to adapt normally in the first environment, but setting ηâ=â0 in both subsequent environments, preventing the decoder from adapting to the distortions in the grid cell code induced by the tower shift (Fig. 4o). The plasticity-knockout decoder predicted that the animal would lick late in both subsequent environments.

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.