Mead, C. Neuromorphic electronic systems. Proc. IEEE 78, 1629–1636 (1990). Original article launching the field of neuromorphic electronic systems engineering founded in the physics of computing.

Mehonic, A. & Kenyon, A. J. Brain-inspired computing needs a master plan. Nature 604, 255–260 (2022). A discussion of the potential of neuromorphic computing to revolutionize information processing, with a focus on bringing together disparate research communities and providing them with the necessary financing and support.

Davies, M. et al. Loihi: a neuromorphic manycore processor with on-chip learning. IEEE Micro 38, 82–99 (2018). An introduction to Loihi, a neuromorphic chip that models spiking neural networks in silicon and achieves an energy–delay product more than three orders of magnitude better than that of conventional solvers.

Furber, S. & Bogdan, P. (eds) SpiNNaker: A Spiking Neural Network Architecture (now publishers, 2020). A book that explores the development of SpiNNaker-1, a large-scale (1 million core) neuromorphic computing platform optimized for simulating spiking neural networks, which will make use of advanced technology features to achieve cutting-edge power consumption and scalability.

NSF International Workshop on Large Scale Neuromorphic Computing. https://www.nuailab.com/workshop.html (2022).

Jürgensen, A.-M., Khalili, A., Chicca, E., Indiveri, G. & Nawrot, M. P. A neuromorphic model of olfactory processing and sparse coding in the Drosophila larva brain. Neuromorph. Comput. Eng. 1, 024008 (2021).

Calimera, A., Macii, E. & Poncino, M. The human brain project and neuromorphic computing. Funct. Neurol. 28, 191–196 (2013).

Aimone, J. B. & Parekh, O. The brain’s unique take on algorithms. Nat. Commun. 14, 4910 (2023).

Gallego, G. et al. Event-based vision: a survey. IEEE Trans. Pattern Anal. Mach. Intell. 44, 154–180 (2020). An overview of the emerging field of event-based vision, exploring the unique properties and applications of event cameras that capture asynchronous brightness changes and discussing algorithms and techniques developed to unlock their potential for robotics and computer vision.

Finateu, T. et al. in Proc. 2020 IEEE International Solid-State Circuits Conference (ISSCC) 112–114 (IEEE, 2020).

Vitale, A., Renner, A., Nauer, C., Scaramuzza, D. & Sandamirskaya, Y. in Proc. 2021 IEEE International Conference on Robotics and Automation (ICRA) 103–109 (IEEE, 2021).

Kudithipudi, D., Saleh, Q., Merkel, C., Thesing, J. & Wysocki, B. Design and analysis of a neuromemristive reservoir computing architecture for biosignal processing. Front. Neurosci. 9, 502 (2016).

Severa, W., Lehoucq, R., Parekh, O. & Aimone, J. B. in Proc. 2018 International Joint Conference on Neural Networks (IJCNN) 1–8 (IEEE, 2018).

Bartolozzi, C., Indiveri, G. & Donati, E. Embodied neuromorphic intelligence. Nat. Commun. 13, 1024 (2022).

Volzhenin, K., Changeux, J.-P. & Dumas, G. Multilevel development of cognitive abilities in an artificial neural network. Proc. Natl Acad. Sci. 119, e2201304119 (2022).

Rubino, A., Livanelioglu, C., Qiao, N., Payvand, M. & Indiveri, G. Ultra-low-power FDSOI neural circuits for extreme-edge neuromorphic intelligence. IEEE Trans. Circuits Syst. I Regul. Pap. 68, 45–56 (2020).

Lee, S.-H., Kravitz, D. J. & Baker, C. I. Disentangling visual imagery and perception of real-world objects. Neuroimage 59, 4064–4073 (2012).

Greene, M. R. & Hansen, B. C. Disentangling the independent contributions of visual and conceptual features to the spatiotemporal dynamics of scene categorization. J. Neurosci. 40, 5283–5299 (2020).

Wu, B., Liu, Z., Yuan, Z., Sun, G. & Wu, C. in Proc. Artificial Neural Networks and Machine Learning – ICANN 2017 (eds Lintas, A., Rovetta, S., Verschure, P., Villa, A.) 49–55 (Springer, 2017).

Xie, G. Redundancy-aware pruning of convolutional neural networks. Neural Comput. 32, 2532–2556 (2020).

Herculano-Houzel, S., Mota, B., Wong, P. & Kaas, J. H. Connectivity-driven white matter scaling and folding in primate cerebral cortex. Proc. Natl Acad. Sci. 107, 19008–19013 (2010).

Hoefler, T., Alistarh, D., Ben-Nun, T., Dryden, N. & Peste, A. Sparsity in deep learning: Pruning and growth for efficient inference and training in neural networks. J. Mach. Learn. Res. 22, 10882–11005 (2021).

Davies, M. et al. Advancing neuromorphic computing with Loihi: a survey of results and outlook. Proc. IEEE 109, 911–934 (2021).

Rathi, N., Agrawal, A., Lee, C., Kosta, A. K. & Roy, K. in Proc. 2021 Design, Automation & Test in Europe Conference & Exhibition (DATE) 902–907 (IEEE, 2021). Exploring various spike representations, training mechanisms and event-driven hardware implementations that can make use of the unique features of spiking neural networks for efficient processing.

Cai, J. et al. Sparse neuromorphic computing based on spin-torque diodes. Appl. Phys. Lett. 114, 192402 (2019).

Hamilton, K. E., Imam, N. & Humble, T. S. Sparse hardware embedding of spiking neuron systems for community detection. ACM J. Emerg. Technol. Comput. Syst. 14, 1–13 (2018).

Boahen, K. Dendrocentric learning for synthetic intelligence. Nature 612, 43–50 (2022).

Lin, C.-K. et al. Programming spiking neural networks on Intel’s Loihi. Computer 51, 52–61 (2018).

Yan, Y. et al. Comparing Loihi with a SpiNNaker 2 prototype on low-latency keyword spotting and adaptive robotic control. Neuromorph. Comput. Eng. 1, 014002 (2021).

Schuman, C. D. et al. Opportunities for neuromorphic computing algorithms and applications. Nat. Comput. Sci. 2, 10–19 (2022). A review of recent advances in neuromorphic computing algorithms and applications, highlighting the potential benefits and future directions of this emerging technology.

Aimone, J. B. et al. A review of non-cognitive applications for neuromorphic computing. Neuromorph. Comput. Eng. 2, 032003 (2022).

Sawada, J. et al. in SC ’16: Proceedings of the International Conference for High Performance Computing, Networking, Storage and Analysis 130–141 (IEEE, 2016).

Disney, A. et al. DANNA: a neuromorphic software ecosystem. Biol. Inspired Cogn. Archit. 17, 49–56 (2016).

Cardwell, S. G. Achieving extreme heterogeneity: codesign using neuromorphic processors. Technical Report, Sandia National Laboratories (2021). A discussion on the need for innovative co-design tools and architectures to integrate neuromorphic computing, inspired by properties of the brain, with conventional computing platforms to enhance high-performance-computing capabilities.

Li, S. et al. in Proc. 2016 IEEE International Symposium on Circuits and Systems (ISCAS) 125–128 (IEEE, 2016).

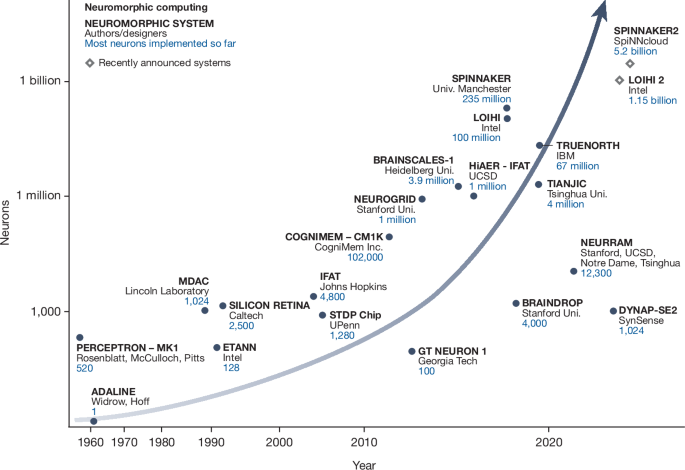

Thakur, C. S. et al. Large-scale neuromorphic spiking array processors: a quest to mimic the brain. Front. Neurosci. 12, 891 (2018).

Mahowald, M. A. & Mead, C. The silicon retina. Sci. Am. 264, 76–83 (1991).

Orchard, G. et al. in Proc. 2021 IEEE Workshop on Signal Processing Systems (SiPS) 254–259 (IEEE, 2021).

Schemmel, J. et al. in Proc. 2010 IEEE International Symposium on Circuits and Systems (ISCAS) 1947–1950 (IEEE, 2010).

Richter, O. et al. DYNAP-SE2: a scalable multi-core dynamic neuromorphic asynchronous spiking neural network processor. Neuromorph. Comput. Eng. 4, 014003 (2024).

Benjamin, B. V. et al. Neurogrid: a mixed-analog-digital multichip system for large-scale neural simulations. Proc. IEEE 102, 699–716 (2014).

Braun, U. et al. Dynamic reconfiguration of frontal brain networks during executive cognition in humans. Proc. Natl Acad. Sci. 112, 11678–11683 (2015).

Mack, J. et al. RANC: reconfigurable architecture for neuromorphic computing. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 40, 2265–2278 (2020).

Liu, X. et al. in Proc. 52nd Annual Design Automation Conference 1–6 (ACM, 2015).

Liu, B., Chen, Y., Wysocki, B. & Huang, T. Reconfigurable neuromorphic computing system with memristor-based synapse design. Neural Process. Lett. 41, 159–167 (2015).

Pandit, T. & Kudithipudi, D. in Proc. Neuro-inspired Computational Elements Workshop 1–9 (ACM, 2020).

Averbeck, B. B., Latham, P. E. & Pouget, A. Neural correlations, population coding and computation. Nat. Rev. Neurosci. 7, 358–366 (2006).

Hennig, J. A. et al. Constraints on neural redundancy. Elife 7, e36774 (2018).

Pei, J. et al. Towards artificial general intelligence with hybrid Tianjic chip architecture. Nature 572, 106–111 (2019).

Lenz, G. et al. Tonic: event-based datasets and transformations. Zenodo https://doi.org/10.5281/zenodo.5079802 (2021). Documentation available under https://tonic.readthedocs.io.

Rockpool – Rockpool Documentation. https://rockpool.ai/ (2023).

Abreu, S. et al. Neuromorphic intermediate representation. Zenodo https://doi.org/10.5281/zenodo.8105042 (2023).

Gleeson, P. et al. NeuroML: a language for describing data driven models of neurons and networks with a high degree of biological detail. PLoS Comput. Biol. 6, e1000815 (2010). NeuroML, an open-source, XML-based language to describe biologically detailed neuron and network models, enabling their use across several simulators and archiving them in a standardized format.

Davison, A. P. et al. PyNN: a common interface for neuronal network simulators. Front. Neuroinform. 2, 11 (2009). PyNN, an open-source interface that allows users to write a simulation script once and run it without modification on several supported neural network simulators, promoting code sharing, productivity and reliability in computational neuroscience.

Baby, S. A., Vinod, B., Chinni, C. & Mitra, K. in 2017 4th IAPR Asian Conference on Pattern Recognition (ACPR) 316–321 (IEEE, 2017).

Chan, V., Liu, S.-C. & van Schaik, A. AER EAR: a matched silicon cochlea pair with address event representation interface. IEEE Trans. Circuits Syst. I Regul. Pap. 54, 48–59 (2007).

Osborn, L. E. et al. Prosthesis with neuromorphic multilayered e-dermis perceives touch and pain. Sci. Robot. 3, eaat3818 (2018).

Kudithipudi, D. et al. Design principles for lifelong learning AI accelerators. Nat. Electron. 6, 807–822 (2023). An exploration of the design of artificial intelligence accelerators for lifelong learning, which enables neuromorphic systems to learn throughout their lifetime, highlighting key capabilities and metrics to evaluate such accelerators, as well as considering future designs and emerging technologies.

Manna, D. L., Vicente-Sola, A., Kirkland, P., Bihl, T. J. & Di Caterina, G. in Proc. Engineering Applications of Neural Networks. EANN 2023. Communications in Computer and Information Science (eds Iliadis, L., Maglogiannis, I., Alonso, S., Jayne, C. & Pimenidis, E.) 227–238 (Springer, 2023).

Pehle, C.-G. & Pedersen, J. E. Norse – a deep learning library for spiking neural networks. Zenodo https://doi.org/10.5281/zenodo.4422024 (2021).

Severa, W., Vineyard, C. M., Dellana, R., Verzi, S. J. & Aimone, J. B. Training deep neural networks for binary communication with the whetstone method. Nat. Mach. Intell. 1, 86–94 (2019).

Rhodes, O. et al. sPyNNaker: a software package for running PyNN simulations on SpiNNaker. Front. Neurosci. 12, 816 (2018).

Eshraghian, J. K. et al. Training spiking neural networks using lessons from deep learning. Proc. IEEE 111, 1016–1054 (2023). A tutorial and perspective on applying lessons from decades of deep learning and neuroscience research to biologically plausible spiking neural networks, exploring topics such as gradient-based learning, temporal backpropagation and online learning.

Liu, Y., Yanguas-Gil, A., Madireddy, S. & Li, Y. in Proc. 2023 Design, Automation & Test in Europe Conference & Exhibition (DATE) 1–6 (IEEE, 2023).

Sheik, S., Lenz, G., Bauer, F. & Kuepelioglu, N. SINABS: a simple Pytorch based SNN library specialised for Speck. GitHub https://github.com/synsense/sinabs (2024).

Bekolay, T. et al. Nengo: a Python tool for building large-scale functional brain models. Front. Neuroinform. 7, 48 (2014).

Aimone, J. B., Severa, W. & Vineyard, C. M. in Proc. International Conference on Neuromorphic Systems 1–8 (ACM, 2019).

Vitay, J., Dinkelbach, H. Ü. & Hamker, F. H. ANNarchy: a code generation approach to neural simulations on parallel hardware. Front. Neuroinform. 9, 19 (2015).

Magma — Lava documentation. https://lava-nc.org/lava/lava.magma.html (2021).

Yavuz, E., Turner, J. & Nowotny, T. GeNN: a code generation framework for accelerated brain simulations. Sci. Rep. 6, 18854 (2016).

The NEURON simulator — NEURON documentation. https://nrn.readthedocs.io/en/8.2.3/ (2022).

Rothganger, F., Warrender, C. E., Trumbo, D. & Aimone, J. B. N2A: a computational tool for modeling from neurons to algorithms. Front. Neural Circuits 8, 1 (2014).

ONNX: Open Neural Network Exchange. https://onnx.ai/ (2019).

Jajal, P. et al. Interoperability in Deep Learning: A User Survey and Failure Analysis of ONNX Model Converters. In Proc. of the 33rd ACM SIGSOFT International Symposium on Software Testing and Analysis (ISSTA) (ACM, 2024).

Bergstra, J. et al. in Proc. 9th Python in Science Conference (eds van der Walt, S. & Millman, J.) 18–24 (2010).

Collobert, R., Bengio, S. & Mariéthoz, J. Torch: a modular machine learning software library. Technical Report (IDIAP, 2002).

Paszke, A. et al. PyTorch: an imperative style, high-performance deep learning library. Adv. Neural Inf. Process. Syst. 32, 8024–8035 (2019).

Stewart, T. C. A technical overview of the Neural Engineering Framework. Univ. Waterloo 110 (2012).

Sandamirskaya, Y. Dynamic neural fields as a step toward cognitive neuromorphic architectures. Front. Neurosci. 7, 276 (2014).

Soures, N., Helfer, P., Daram, A., Pandit, T. & Kudithipudi, D. in Proc. ICML 2021 Workshop on Theory and Foundation of Continual Learning (2021).

Delbruck, T. jAER open source project. https://jaerproject.org (2007).

Schmitt, S. et al. in Proc. 2017 International Joint Conference on Neural Networks (IJCNN) 2227–2234 (IEEE, 2017). A demonstration of how training on an analogue neuromorphic device (the BrainScaleS wafer-scale system) can correct for anomalies induced by the hardware and achieve high accuracy in emulating deep spiking neural networks.

Vineyard, C. et al. in Proc. Annual Neuro-Inspired Computational Elements Conference 40–49 (ACM, 2022).

Davies, M. Benchmarks for progress in neuromorphic computing. Nat. Mach. Intell. 1, 386–388 (2019).

Theilman, B. H. et al. in Proc. 2023 IEEE International Parallel and Distributed Processing Symposium (IPDPS) 779–787 (2023).

Cardwell, S. G. et al. in Proc. 2022 IEEE International Conference on Rebooting Computing (ICRC) 57–65 (IEEE, 2022).

Orchard, G., Jayawant, A., Cohen, G. K. & Thakor, N. Converting static image datasets to spiking neuromorphic datasets using saccades. Front. Neurosci. 9, 437 (2015).

Amir, A. et al. in Proc. 2017 IEEE Conference on Computer Vision and Pattern Recognition 7243–7252 (IEEE, 2017).

Cramer, B., Stradmann, Y., Schemmel, J. & Zenke, F. The Heidelberg spiking data sets for the systematic evaluation of spiking neural networks. IEEE Trans. Neural Netw. Learn. Syst. 33, 2744–2757 (2020).

See, H. H. et al. ST-MNIST – the spiking tactile MNIST neuromorphic dataset. Preprint at https://arxiv.org/abs/2005.04319 (2020).

Zhu, A. Z. et al. The multivehicle stereo event camera dataset: an event camera dataset for 3D perception. IEEE Robot. Autom. Lett. 3, 2032–2039 (2018).

Ceolini, E. et al. Hand-gesture recognition based on EMG and event-based camera sensor fusion: a benchmark in neuromorphic computing. Front. Neurosci. 14, 637 (2020).

Perot, E., de Tournemire, P., Nitti, D., Masci, J. & Sironi, A. Learning to detect objects with a 1 megapixel event camera. Adv. Neural Inf. Process. Syst. 33, 16639–16652 (2020).

Yik, J. et al. NeuroBench: advancing neuromorphic computing through collaborative, fair and representative benchmarking. Preprint at https://arxiv.org/abs/2304.04640 (2024). A collaborative framework, NeuroBench, from more than 100 co-authors across academic institutions and industry, aims to standardize the evaluation of neuromorphic computing algorithms and systems through a set of inclusive benchmarking tools and guidelines.

Schrimpf, M. et al. Brain-Score: which artificial neural network for object recognition is most brain-like? Preprint at https://www.biorxiv.org/content/10.1101/407007v2 (2020).

Schrimpf, M. et al. Integrative benchmarking to advance neurally mechanistic models of human intelligence. Neuron 108, 413–423 (2020).

Ritter, P., Schirner, M., McIntosh, A. R. & Jirsa, V. K. The virtual brain integrates computational modeling and multimodal neuroimaging. Brain Connect. 3, 121–145 (2013).

Zimmermann, J. et al. Differentiation of Alzheimer’s disease based on local and global parameters in personalized Virtual Brain models. NeuroImage Clin. 19, 240–251 (2018).

Höppner, S. et al. The SpiNNaker 2 processing element architecture for hybrid digital neuromorphic computing. Preprint at https://arxiv.org/abs/2103.08392 (2022).

Yan, Y. et al. Efficient reward-based structural plasticity on a SpiNNaker 2 prototype. IEEE Trans. Biomed. Circuits Syst. 13, 579–591 (2019).

Gonzalez, H. A. et al. Hardware acceleration of EEG-based emotion classification systems: a comprehensive survey. IEEE Trans. Biomed. Circuits Syst. 15, 412–442 (2021).

Barnell, M., Raymond, C., Brown, D., Wilson, M. & Cote, E. in Proc. 2019 IEEE High Performance Extreme Computing Conference (HPEC) 1–5 (IEEE, 2019).

Hooker, S. The hardware lottery. Commun. ACM 64, 58–65 (2021).

Subramoney, A., Nazeer, K. K., Schöne, M., Mayr, C. & Kappel, D. Efficient recurrent architectures through activity sparsity and sparse back-propagation through time. In The Eleventh International Conference on Learning Representations (ICLR) (2023). Spiking event-based architectures that go beyond biologically plausible dynamics and achieve state-of-the-art results in language modelling and gesture recognition.

Gonzalez, H. A. et al. SpiNNaker2: a large-scale neuromorphic system for event-based and asynchronous machine learning. Machine Learning with New Compute Paradigms Workshop at NeurIPS (MLNPCP) (2023).

Sebastian, A., Le Gallo, M., Khaddam-Aljameh, R. & Eleftheriou, E. Memory devices and applications for in-memory computing. Nat. Nanotechnol. 15, 529–544 (2020).

Song, M.-K. et al. Recent advances and future prospects for memristive materials, devices, and systems. ACS Nano 17, 11994–12039 (2023). A comprehensive overview of recent advances and future directions in memristive technology, exploring its potential applications in artificial intelligence, in-sensor computing and probabilistic computing.

Zahoor, F., Azni Zulkifli, T. Z. & Khanday, F. A. Resistive random access memory (RRAM): an overview of materials, switching mechanism, performance, multilevel cell (MLC) storage, modeling, and applications. Nanoscale Res. Lett. 15, 90 (2020).

Rosenblatt, F. The perceptron: a probabilistic model for information storage and organization in the brain. Psychol. Rev. 65, 386 (1958).

Widrow, B. Adaptive “Adaline” Neuron Using Chemical “Memistors” (Stanford Univ., 1960).

Raffel, J. I., Mann, J. R., Berger, R., Soares, A. M. & Gilbert, S. A generic architecture for wafer-scale neuromorphic systems. Lincoln Lab. J. 2, 63–76 (1989).

Brink, S. et al. A learning-enabled neuron array IC based upon transistor channel models of biological phenomena. IEEE Trans. Biomed. Circuits Syst. 7, 71–81 (2012).

Holler, Tam, Castro & Benson. in Proc. International 1989 Joint Conference on Neural Networks 191–196 (IEEE, 1989).

Vogelstein, R. J., Mallik, U. & Cauwenberghs, G. in 2004 IEEE International Symposium on Circuits and Systems (IEEE Cat. No. 04CH37512) V–V (IEEE, 2004).

Arthur, J. V. & Boahen, K. Learning in silicon: timing is everything. Adv. Neural Inf. Process. Syst. 18 (2005).

Wysocki, B., McDonald, N. & Thiem, C. Hardware-based artificial neural networks for size, weight, and power constrained platforms. Proc. SPIE 9119, 911909 (2014).

Akopyan, F. et al. TrueNorth: design and tool flow of a 65 mW 1 million neuron programmable neurosynaptic chip. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 34, 1537–1557 (2015).

Müller, E. et al. The operating system of the neuromorphic BrainScaleS-1 system. Neurocomputing 501, 790–810 (2022).

Neckar, A. et al. Braindrop: a mixed-signal neuromorphic architecture with a dynamical systems-based programming model. Proc. IEEE 107, 144–164 (2018).

Painkras, E. et al. SpiNNaker: a 1-W 18-core system-on-chip for massively-parallel neural network simulation. IEEE J. Solid-State Circuits 48, 1943–1953 (2013).

Wan, W. et al. A compute-in-memory chip based on resistive random-access memory. Nature 608, 504–512 (2022). Co-optimization for combined energy efficiency, functional versatility, and accuracy performance in a fully integrated CMOS-RRAM compute-in-memory microchip for AI on the edge.

Modha, D. S. et al. Neural inference at the frontier of energy, space, and time. Science 382, 329–335 (2023).

Karia, V., Zohora, F. T., Soures, N. & Kudithipudi, D. in Proc. 2022 IEEE International Symposium on Circuits and Systems (ISCAS) 1372–1376 (IEEE, 2022).

Wong, H.-S. P. & Salahuddin, S. Memory leads the way to better computing. Nat. Nanotechnol. 10, 191–194 (2015).

Fatemi, H., Karia, V., Pandit, T. & Kudithipudi, D. in Proc. Research Symposium on Tiny Machine Learning 1–8 (2021).

Intel. Lava Software Framework. https://lava-nc.org/ (2021).

Abadi, M. et al. TensorFlow: large-scale machine learning on heterogeneous systems. https://www.tensorflow.org/ (2015).

Davies, M. Benchmarks for progress in neuromorphic computing. Nat. Mach. Intell. 1, 386–388 (2019). A perspective on how the neuromorphic computing field needs to shift its focus from exploring complex brain-inspired concepts to establishing quantifiable gains, standardized benchmarks and feasible application challenges to advance into mainstream computing.

Schemmel, J., Grübl, A., Millner, S. & Friedmann, S. Specification of the HICANN microchip. FACETS project internal documentation (2010).

Patel, K., Jaworski, P., Hays, J., Eliasmith, C. & DeWolf, T. Adaptive spiking control of a 7 DOF arm. Naval Application in Machine Learning (NAML) Workshop (2022).

Iyer, L. R., Chua, Y. & Li, H. Is neuromorphic MNIST neuromorphic? Analyzing the discriminative power of neuromorphic datasets in the time domain. Front. Neurosci. 15, 608567 (2021).

D’Angelo, G., Perrett, A., Iacono, M., Furber, S. & Bartolozzi, C. Event driven bio-inspired attentive system for the iCub humanoid robot on SpiNNaker. Neuromorph. Comput. Eng. 2, 024008 (2022).

Quigley, M. et al. in Proc. ICRA Workshop on Open Source Software 5 (2009).

Peng, X., Huang, S., Jiang, H., Lu, A. & Yu, S. DNN+NeuroSim V2.0: an end-to-end benchmarking framework for compute-in-memory accelerators for on-chip training. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 40, 2306–2319 (2020).

Goodman, D. F. M. & Brette, R. The Brian simulator. Front. Neurosci. 3, 192–197 (2009).

Jordan, J. et al. NEST 2.18.0. Technical Report, Jülich Supercomputing Center (2019).

Gleeson, P. et al. Open Source Brain: a collaborative resource for visualizing, analyzing, simulating, and developing standardized models of neurons and circuits. Neuron 103, 395–411 (2019). The Open Source Brain platform, developed to share, view, analyse and simulate standardized neural circuit models from different brain regions and species, aiming to increase accessibility, transparency and reproducibility for the wider neuroscience community.

Feinberg, I. & Campbell, I. G. Sleep EEG changes during adolescence: an index of a fundamental brain reorganization. Brain Cogn. 72, 56–65 (2010).

Rossant, C. et al. Fitting neuron models to spike trains. Front. Neurosci. 5, 9 (2011).

LeCun, Y. et al. Backpropagation applied to handwritten zip code recognition. Neural Comput. 1, 541–551 (1989).

Krizhevsky, A., Sutskever, I. & Hinton, G. E. ImageNet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 25 (2012). A turning point in artificial intelligence research. AlexNet, a deep convolutional neural network trained on the large ImageNet dataset using GPUs, achieved substantially lower error rates than earlier approaches.

Jouppi, N. P. et al. in Proc. 50th Annual International Symposium on Computer Architecture 1–14 (ACM, 2023).

Brown, T. et al. Language models are few-shot learners. Adv. Neural Inf. Process. Syst. 33, 1877–1901 (2020).

Choquette, J. NVIDIA Hopper H100 GPU: scaling performance. IEEE Micro 43, 9–17 (2023).

Payvand, M. et al. Self-organization of an inhomogeneous memristive hardware for sequence learning. Nat. Commun. 13, 5793 (2022).

Dalgaty, T. et al. In situ learning using intrinsic memristor variability via Markov chain Monte Carlo sampling. Nat. Electron. 4, 151–161 (2021).

Zyarah, A. M. & Kudithipudi, D. Neuromemrisitive architecture of HTM with on-device learning and neurogenesis. ACM J. Emerg. Technol. Comput. Syst. 15, 1–24 (2019).

Zohora, F. T., Zyarah, A. M., Soures, N. & Kudithipudi, D. in 2020 IEEE International Symposium on Circuits and Systems (ISCAS) 1–5 (IEEE, 2020).

Li, H. et al. in Proc. 2016 IEEE Symposium on VLSI Technology 1–2 (IEEE, 2016).

Lee, S., Sohn, J., Jiang, Z., Chen, H.-Y. & Philip Wong, H.-S. Metal oxide-resistive memory using graphene-edge electrodes. Nat. Commun. 6, 8407 (2015).

Bai, Y. et al. Study of multi-level characteristics for 3D vertical resistive switching memory. Sci. Rep. 4, 1–7 (2014).

Langroudi, H. F. et al. in Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition 3100–3109 (2021).

Zohora, F. T., Karia, V., Daram, A. R., Zyarah, A. M. & Kudithipudi, D. in Proc. 2021 IEEE International Symposium on Circuits and Systems (ISCAS) 1–5 (IEEE, 2021).

Hirohata, A. & Takanashi, K. Future perspectives for spintronic devices. J. Phys. D Appl. Phys. 47, 193001 (2014).

Mulaosmanovic, H. et al. Ferroelectric field-effect transistors based on HfO2: a review. Nanotechnology 32, 502002 (2021).

Le Gallo, M. et al. A 64-core mixed-signal in-memory compute chip based on phase-change memory for deep neural network inference. Nat. Electron. 6, 680–693 (2023).

Buckley, S. M., Tait, A. N., McCaughan, A. N. & Shastri, B. J. Photonic online learning: a perspective. Nanophotonics 12, 833–845 (2023).

Harabi, K.-E. et al. A memristor-based Bayesian machine. Nat. Electron. 6, 52–63 (2023).

Wang, W. et al. Neuromorphic motion detection and orientation selectivity by volatile resistive switching memories. Adv. Intell. Syst. 3, 2000224 (2021).

Demirağ, Y. et al. in Proc. 2021 IEEE International Symposium on Circuits and Systems (ISCAS) 1–5 (IEEE, 2021).