Dataset

The connectome reconstructed by the FlyWire Consortium is that of a 7-day-old adult female D. melanogaster from a [iso] w1118 × [iso] Canton-S G1 cross1. The EM images were aligned and neurons were automatically reconstructed using deep learning and computer vision methods, then proofread by the community2,3. Neuron cell types and community labels were also attached to these data5,56. The brain is divided into 78 distinct anatomical brain regions, or neuropils61.

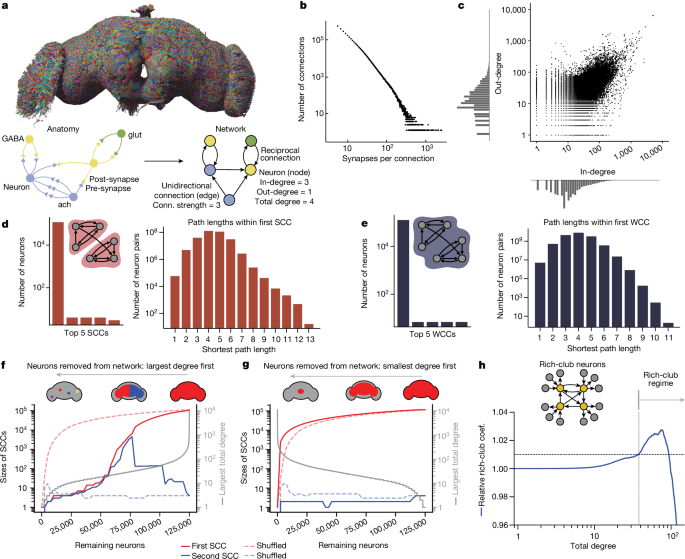

All analyses presented in this paper were performed on the v630 snapshot of the dataset. The v630 snapshot contains 127,978 neurons and 2,613,129 thresholded connections. The central brain of the fly was fully proofread, with the optic lobes around 80% complete. Most of the neurons missing from the v630 snapshot were photoreceptors, and we do not expect that the addition of these neurons would significantly alter our whole-brain network results. At the time of publication, the most up-to-date version of the dataset is the v783 snapshot, containing 139,255 neurons, 2,701,601 thresholded connections and completed optic lobes. Both data snapshots are available at Codex (https://codex.flywire.ai).

Synaptic connections and thresholding

Synapses were detected algorithmically28,62, with each synapse receiving a confidence score. We then removed synapses if either the pre- or postsynaptic location of the synapse was not assigned to a segment or if the synapse had a confidence score of less than 50. We then set a threshold of five synapses per connection between neurons for most of our analyses to reduce the impact of spurious connections. This threshold is also consistent across our companion papers on the whole-brain Drosophila connectome3,5. We used a threshold because manual proofreading of the dataset did not extend to individual synapses3. Thresholding connections by synapse number was previously implemented in the hemibrain connectome, with similar rationale31. This is a conservative threshold and is likely to result in an undercounting of true connections. We assessed key statistics as a function of the threshold to ensure that our qualitative observations hold over a range of threshold choices (Extended Data Fig. 1b,c).
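The filtering and thresholding steps can be sketched as follows. This is a minimal illustration, not the pipeline used for the dataset: the `(pre, post, score)` tuple layout and the function name are hypothetical, and "a threshold of five" is read here as "at least five synapses", which is an assumption.

```python
from collections import Counter

def threshold_connections(synapses, min_score=50, min_synapses=5):
    """Return {(pre, post): n_synapses} for connections passing both filters.

    `synapses` is an iterable of (pre_id, post_id, confidence_score) tuples;
    an unassigned side is represented by None (hypothetical layout).
    """
    counts = Counter()
    for pre, post, score in synapses:
        # Drop synapses with an unassigned side or a low confidence score.
        if pre is None or post is None or score < min_score:
            continue
        counts[(pre, post)] += 1
    # Keep only connections with at least `min_synapses` surviving synapses.
    return {pair: n for pair, n in counts.items() if n >= min_synapses}
```

Sweeping `min_synapses` over a range of values is one way to reproduce the kind of robustness check described above.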

Neurotransmitter assignments

The neurotransmitter at each synapse was predicted directly from the EM images using a trained convolutional neural network with a per-synapse accuracy of 87% (refs. 3,4). The algorithm returns a 1 × 6 probability vector containing the odds that a given synapse is each of the six primary neurotransmitters in Drosophila: ach, GABA, glut, da, oct or ser. We then averaged these probabilities across all of a neuron's outgoing synapses, under the assumption that each neuron expresses a single outgoing neurotransmitter, to obtain a 1 × 6 probability vector representing the odds that a given neuron expresses a given neurotransmitter. We then assigned the highest-probability neurotransmitter as the putative neurotransmitter for that neuron. The per-neuron accuracy is 94%. In cases in which the highest probability p1 < 0.2 and the difference between the top two probabilities p1 − p2 < 0.1, we classified the neuron as having an uncertain neurotransmitter. In the approximately 1,600 Kenyon cells, for which the neurotransmitter of a neuron is known to be ach but the algorithm often returned erroneous predictions, the neurotransmitter prediction associated with that neuron was overwritten by the known neurotransmitter.
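A minimal sketch of the per-neuron assignment rule described above. The function name and input layout are hypothetical, and the Kenyon cell override is not included.

```python
NEUROTRANSMITTERS = ("ach", "GABA", "glut", "da", "oct", "ser")

def assign_neurotransmitter(synapse_probs, p_min=0.2, margin=0.1):
    """Average per-synapse 1 x 6 probability vectors and pick the top class.

    `synapse_probs` holds one 6-element probability vector per outgoing
    synapse, ordered as in NEUROTRANSMITTERS (hypothetical layout).
    Returns (label, mean_vector); label is "uncertain" when the top
    probability is below `p_min` and leads the runner-up by less than `margin`.
    """
    n = len(synapse_probs)
    mean = [sum(row[k] for row in synapse_probs) / n for k in range(6)]
    ranked = sorted(range(6), key=lambda k: mean[k], reverse=True)
    p1, p2 = mean[ranked[0]], mean[ranked[1]]
    if p1 < p_min and p1 - p2 < margin:
        return "uncertain", mean
    return NEUROTRANSMITTERS[ranked[0]], mean
```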

Cell classifications and labels

84% of neurons are intrinsic to the brain, meaning that their projections are fully contained in the brain volume3. Central brain neurons are fully contained in the central brain, while optic lobe intrinsic neurons are fully contained in the optic lobes. Visual projection neurons have inputs in the optic lobes and outputs in the central brain. Visual centrifugal neurons have inputs in the central brain and outputs in the optic lobe. Sensory neurons are those that are entering the brain from the periphery, and are divided into classes by modality. Refer to our companion paper for more details on the classification criteria5. We also used annotation labels contributed by the FlyWire community3.

Connected components

SCCs are defined as subnetworks in which all neurons are mutually reachable through directed pathways63. WCCs are a relaxed criterion in which all neurons are mutually reachable, ignoring the directionality of connections.

Degree definitions

For a given neuron i, the in-degree \({d}_{i}^{+}\) is the number of incoming synaptic partners the neuron has and the out-degree \({d}_{i}^{-}\) is the number of outgoing synaptic partners the neuron has. The total degree of a neuron i is the sum of in-degree and out-degree:

$${d}_{i}^{{\rm{tot}}}\,{\rm{:= }}\,{d}_{i}^{+}+{d}_{i}^{-}.$$

The reciprocal degree \({d}_{i}^{{\rm{rec}}}\) is the number of partners that a given neuron forms reciprocal connections with. As each reciprocal connection consists of two edges, we can determine the fraction of reciprocal inputs and outputs as \({d}_{i}^{{\rm{rec}}}/{d}_{i}^{+}\) and \({d}_{i}^{{\rm{rec}}}/{d}_{i}^{-}\), respectively (Extended Data Fig. 3c).
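As an illustration of these definitions, the sketch below computes all four degrees from a list of directed edges. The function name and the `(pre, post)` edge layout are assumptions made for the example.

```python
def degrees(edges):
    """Return {node: {"in", "out", "tot", "rec"}} for directed (pre, post) edges."""
    edge_set = set(edges)  # the graph is simple, so duplicates are collapsed
    d_in, d_out, d_rec = {}, {}, {}
    for i, j in edge_set:
        d_out[i] = d_out.get(i, 0) + 1   # j is an outgoing partner of i
        d_in[j] = d_in.get(j, 0) + 1     # i is an incoming partner of j
        if (j, i) in edge_set:
            d_rec[i] = d_rec.get(i, 0) + 1  # j is a reciprocal partner of i
    nodes = {n for edge in edge_set for n in edge}
    return {
        n: {
            "in": d_in.get(n, 0),
            "out": d_out.get(n, 0),
            "tot": d_in.get(n, 0) + d_out.get(n, 0),
            "rec": d_rec.get(n, 0),
        }
        for n in nodes
    }
```

The fraction of reciprocal inputs for a neuron is then `rec / in`, and the fraction of reciprocal outputs is `rec / out`, whenever the denominator is non-zero.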

Definitions of connectivity metrics

Given the observed wiring diagram as a simple (no self-edges) directed graph G(V,E), the connection probability or density is the probability that, given an ordered pair of neurons α and β, a directed connection exists from one to the other:

$${p}^{{\rm{conn}}}\,{\rm{:= }}\,P\left[\alpha \to \beta \right]=\frac{\left|E\right|}{\left|V\right|\left(\left|V\right|-1\right)}.$$

The reciprocity is the probability that, given a pair of neurons which are connected α to β, there exists a returning β to α connection:

$${p}^{{\rm{rec}}}\,{\rm{:= }}\,P\left[\beta \to \alpha | \alpha \to \beta \right].$$

The (global) clustering coefficient is the probability that for three neurons α, β and γ, given that neurons α and β are connected and neurons α and γ are connected (regardless of directionality), neurons β and γ are connected:

$${C}^{\Delta }{\rm{:= }}P\left[\beta \sim \gamma | \alpha \sim \beta \wedge \alpha \sim \gamma \right].$$

We computed these metrics both across the whole brain and within brain-region (neuropil) subnetworks. We also systematically quantified the occurrence of distinct directed three-node motifs within the network, ensuring that duplicates are eliminated: any subgraph involving three unique nodes is counted only once in our analysis. To compute the expected prevalence of specific neurotransmitter motifs (Figs. 2c and 3d,e), we multiplied the relevant neurotransmitter probabilities for the motif of interest, under the assumption that neurons connect independently of neurotransmitter. We then compared this expectation to the true frequency of motifs with these neurotransmitter combinations.
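The three metrics defined above can be computed on a small graph as in the following sketch. It is a brute-force illustration under the stated definitions (the function name is hypothetical) and would not scale to the full connectome without a sparse-matrix implementation.

```python
from itertools import combinations

def connectivity_metrics(nodes, edges):
    """Return (density, reciprocity, global clustering) of a simple directed graph."""
    edge_set = {(i, j) for i, j in edges if i != j}  # no self-edges
    n = len(nodes)
    density = len(edge_set) / (n * (n - 1))
    # Fraction of directed edges that have a returning edge.
    reciprocity = sum((j, i) in edge_set for i, j in edge_set) / len(edge_set)
    # Clustering ignores direction: build undirected neighbour sets.
    nbrs = {v: set() for v in nodes}
    for i, j in edge_set:
        nbrs[i].add(j)
        nbrs[j].add(i)
    wedges = closed = 0
    for a in nodes:
        for b, c in combinations(nbrs[a], 2):
            wedges += 1              # a~b and a~c
            closed += c in nbrs[b]   # is b~c also present?
    clustering = closed / wedges if wedges else 0.0
    return density, reciprocity, clustering
```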

ER and CFG null models

We probed different statistics of the wiring diagram G(V,E) by comparing them with the statistics of various null models. The simplest null model that we used was a directed version of the ER model \({\mathcal{G}}(V,p)\), where all edges are drawn independently at random, and the connection probability p is set such that the expected number of edges in the ER model equals that observed in the wiring diagram64. For any nodes i, j ∈ V, the connection probability is constant:

$$P\left[i\to j\right]=p=\frac{\left|E\right|}{\left|V\right|\left(\left|V\right|-1\right)}.$$

As reciprocal edges in the wiring diagram are over-represented when compared to a standard ER model, we adopted a generalized ER model, which preserves the expected number of reciprocal edges. The generalized ER model \({\mathcal{G}}(V,{p}^{{\rm{uni}}},{p}^{{\rm{bi}}})\) has two parameters, unidirectional connection probability puni and bidirectional connection probability pbi, both of which are set to match the wiring diagram. To do this, we defined the sets of unidirectional and bidirectional edges as:

$$\begin{array}{c}{E}^{{\rm{uni}}}\,:= \,\{(i,j){\rm{| }}(i,j)\in E\wedge (j,i)\notin E\},\\ {E}^{{\rm{bi}}}\,:= \,\{(i,j){\rm{| }}(i,j)\in E\wedge (j,i)\in E\}.\end{array}$$

For any nodes i and j:

$$\begin{array}{l}P\left[{i}_{\nleftarrow }^{\to }\,j\right]=P\left[{i}_{ \nrightarrow }^{\leftarrow }\,j\right]={p}^{{\rm{uni}}}=\frac{\left|{E}^{{\rm{uni}}}\right|}{\left|V\right|\left(\left|V\right|-1\right)},\\ P\left[{i}_{\to }^{\leftarrow }\,j\right]={p}^{{\rm{bi}}}=\frac{\left|{E}^{{\rm{bi}}}\right|}{\left|V\right|\left(\left|V\right|-1\right)},\\ P\left[{i}_{ \nrightarrow }^{\nleftarrow }\,j\right]=1-2{p}^{{\rm{uni}}}-{p}^{{\rm{bi}}}.\end{array}$$

All edges between unordered node pairs were drawn independently and at random.
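The generalized ER model can be sketched as below: one function estimates the two parameters from an observed edge list, and another draws each unordered pair independently into one of four states. Function names are hypothetical, nodes are assumed to be labelled 0 to n − 1, and the quadratic loop is only practical for small graphs.

```python
import random

def generalized_er_params(n, edges):
    """Estimate (p_uni, p_bi) from the directed edges of an n-node graph."""
    edge_set = set(edges)
    # |E_bi| counts both directions of every reciprocal pair.
    e_bi = sum((j, i) in edge_set for i, j in edge_set)
    e_uni = len(edge_set) - e_bi
    denom = n * (n - 1)
    return e_uni / denom, e_bi / denom

def sample_generalized_er(n, p_uni, p_bi, rng):
    """Draw every unordered pair independently: i->j, j->i, both, or none."""
    edges = set()
    for i in range(n):
        for j in range(i + 1, n):
            u = rng.random()
            if u < p_uni:
                edges.add((i, j))
            elif u < 2 * p_uni:
                edges.add((j, i))
            elif u < 2 * p_uni + p_bi:
                edges.update([(i, j), (j, i)])
    return edges
```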

Consistent with previous work12,49, we also used a directed configuration model (CFG), \({\mathcal{G}}(V,\{{d}_{i}^{+}\},\,\{{d}_{i}^{-}\})\), which preserves degree sequences during random rewiring. We sampled 1,000 random graphs uniformly from a configuration space of graphs with the same degree sequences as the observed graph by applying the switch-and-hold algorithm65, where we randomly select two edges in each iteration and swap their target endpoints (switch), or else keep them unchanged (hold), under the conditions that doing so does not introduce self-loops or multiple edges. With these conditions, this CFG model is mathematically equivalent to the Maslov–Sneppen edge-swapping null model66,67,68.
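A minimal sketch of the switch-and-hold step described above. It illustrates the swap rule only; the number of iterations needed for adequate mixing is not specified here and `n_iter` is a free parameter of this example.

```python
import random

def switch_and_hold(edges, n_iter, rng):
    """Degree-preserving rewiring of a simple directed graph.

    Each iteration picks two distinct edges and swaps their targets (switch)
    unless that would create a self-loop or a duplicate edge (hold).
    """
    edge_list = list(edges)
    edge_set = set(edge_list)
    for _ in range(n_iter):
        a, b = rng.sample(range(len(edge_list)), 2)
        (s1, t1), (s2, t2) = edge_list[a], edge_list[b]
        new1, new2 = (s1, t2), (s2, t1)
        if s1 == t2 or s2 == t1 or new1 in edge_set or new2 in edge_set:
            continue  # hold: the swap would break the simple-graph constraint
        edge_set -= {edge_list[a], edge_list[b]}
        edge_set |= {new1, new2}
        edge_list[a], edge_list[b] = new1, new2
    return edge_list
```

Because only targets are exchanged, every node keeps its out-degree and in-degree after each accepted swap.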

Pairwise distances between neurons

To determine the connection probability distribution as a function of distance between neurons, we first had to distil the available spatial information into a handful of points. This was the only practical way to enable distance comparisons between all neurons, a total of 14 billion pairs. For each neuron, we defined two coordinates based on the location of their incoming and outgoing synapses. We computed the average 3D position of all of the neuron's incoming synapses to approximate the position of the neuron's dendritic arbour, and did the same for its outgoing synapses to approximate the position of the neuron's axonal arbour. We then computed for all neuron pairs the pairwise distances between the axonal arbour of neuron A and the dendritic arbour of neuron B. Binning by distance and comparing the number of true connections to the number of neuron pairs allowed us to compute connection probability as a function of space (Extended Data Fig. 1d).
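The arbour approximation amounts to a centroid computation followed by a Euclidean distance, as in this sketch. Function names and the coordinate layout (a list of `(x, y, z)` tuples per neuron) are assumptions for illustration.

```python
import math

def centroid(points):
    """Mean 3D position of a non-empty list of (x, y, z) synapse coordinates."""
    n = len(points)
    return tuple(sum(p[k] for p in points) / n for k in range(3))

def axon_to_dendrite_distance(outgoing_synapses_a, incoming_synapses_b):
    """Distance from neuron A's axonal centroid to neuron B's dendritic centroid."""
    return math.dist(centroid(outgoing_synapses_a), centroid(incoming_synapses_b))
```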

NND model

Informed by the distribution of connection probability as a function of distance, we constructed a NND model with two zones of probability: a 'close' zone (0 to 50 μm) where connections are possible with a relatively high probability (pclose = 0.00418) and a 'distant' zone (more than 50 μm) where connections occur with lower probability (pdistant = 0.00418) (Extended Data Fig. 1e). The probabilities in these two zones were derived from the real network. Then, for every neuron pair (around 14 billion pairs), we generate a random number drawn uniformly from between 0 and 1. The distance between the two neuronal arbours sets the probability of forming an edge between the pairs, pclose or pdistant. If the random number is below the probability threshold, a connection is formed between these two neurons in the model.
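The sampling rule can be sketched as follows. The function name and the `distances` mapping are hypothetical, and the two probabilities are left as arguments rather than hard-coded.

```python
import random

def sample_nnd(distances, p_close, p_distant, rng, cutoff_um=50.0):
    """Sample a two-zone distance-dependent random graph.

    `distances` maps each ordered pair (a, b) to the axon-to-dendrite
    distance in micrometres (hypothetical layout). A pair within
    `cutoff_um` connects with probability `p_close`, otherwise `p_distant`.
    """
    edges = set()
    for (a, b), d in distances.items():
        p = p_close if d <= cutoff_um else p_distant
        if rng.random() < p:  # uniform draw in [0, 1) below the zone probability
            edges.add((a, b))
    return edges
```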

NPC model

To provide a more tractable spatial null model while preserving degree sequences, we developed the NPC model. This model is a degree-corrected stochastic block model (DC-SBM)69. We assigned each neuron to one of the 78 'neuropil blocks' based on the neuropil in which the neuron has the most outgoing synapses. During random rewiring, the inter- and intra-neuropil connection probabilities are preserved. Moreover, as in the CFG model, we keep the degree sequences unchanged during randomization and prohibit self-loops and multiple edges. The interneuropil connection densities implicitly contain mesoscale spatial information. These constraints also mean that the total number of internal edges in each neuropil remains the same after reshuffling.

Spectral analysis

Given a strongly connected graph G(V,E) and its 0–1 adjacency matrix \(A\in {{\mathbb{R}}}_{\ge 0}^{n\times n}\), where Aij corresponds to a connection from neuron i to neuron j, one can construct an irreducible Markov chain on the strongly connected graph with a transition matrix \({P}_{{ij}}:= {A}_{ji}/{\sum }_{k}{A}_{jk}\) giving the transition probability from j to i. This corresponds to a random walk, where the transition probability from neuron α to neuron β is \({p}_{\alpha \to \beta }={\delta }_{\alpha \to \beta }/{d}_{\alpha }^{-}\), where \({d}_{\alpha }^{-}\) is the out-degree of neuron α, and \({\delta }_{\alpha \to \beta }\in \{0,1\}\) indicates the existence of a connection.

The Perron–Frobenius theorem guarantees that P has a unique positive right eigenvector π with eigenvalue 1, and therefore that π is the stationary distribution of the Markov chain. We constructed such a transition matrix for the connectome and determined the eigenvector π.

We also defined a 'reverse' Markov chain with a transition matrix \({P}_{ij}^{{\rm{rev}}}:= {A}_{ij}/{\sum }_{k}{A}_{kj}\) giving the transition probability from j to i. Prev also has a unique positive right eigenvector πrev with eigenvalue 1. This corresponds to a reverse random walk, which traverses connections against their direction, with the transition probability from neuron α to neuron β set as \({p}_{\alpha \to \beta }^{{\rm{rev}}}={\delta }_{\beta \to \alpha }/{d}_{\alpha }^{+}\), where \({d}_{\alpha }^{+}\) is the in-degree of neuron α. Extended Data Fig. 1f,g shows the stationary distribution of forward and reversed Markov chains, respectively.

The normalized symmetric Laplacian of the Markov chain P is:

$${\mathcal{L}}=I-\frac{1}{2}\left({\Pi }^{\frac{1}{2}}P{\Pi }^{-\frac{1}{2}}+{\Pi }^{-\frac{1}{2}}{P}^{\top }{\Pi }^{\frac{1}{2}}\right),$$

where \(\Pi := {\rm{Diag}}({\rm{\pi }})\) and I is the identity matrix. We similarly defined \({{\mathcal{L}}}^{{\rm{rev}}}\) for the reverse Markov chain. The eigen-spectra of \({\mathcal{L}}\) and \({{\mathcal{L}}}^{{\rm{rev}}}\) are shown in Extended Data Fig. 1f,g, respectively. The gaps between eigenvalues indicate the conductance properties of the graph.
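As an illustration of the forward random walk (each step follows an outgoing connection chosen uniformly among a neuron's out-degree), the sketch below estimates the stationary distribution by power iteration. It is not the eigen-solver used in the analysis: the function name is hypothetical, and a lazy walk (stay put with probability 1/2) is swapped in because it has the same stationary distribution while avoiding oscillation on periodic graphs.

```python
def stationary_distribution(out_neighbors, n_iter=2000):
    """Power iteration for the forward random walk on a strongly connected graph.

    `out_neighbors` maps every node to a non-empty list of its outgoing
    partners (hypothetical layout). Returns {node: stationary probability}.
    """
    nodes = list(out_neighbors)
    pi = {v: 1.0 / len(nodes) for v in nodes}
    for _ in range(n_iter):
        nxt = {v: 0.5 * pi[v] for v in nodes}  # lazy half: probability mass stays
        for v in nodes:
            share = 0.5 * pi[v] / len(out_neighbors[v])
            for w in out_neighbors[v]:
                nxt[w] += share                # moving half: split over out-edges
        pi = nxt
    return pi
```

Passing the in-neighbour lists instead of the out-neighbour lists gives the corresponding estimate for the reverse walk.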

Finding rich-club neurons

We used the standard rich-club formulation to quantify the rich-club effect14. The rich-club coefficient \(\varPhi (d)\) at a given degree value d, with all nodes with degree < d pruned, is the number of existing connections in the surviving subnetwork divided by the total possible connections in the surviving subnetwork:

$$\varPhi (d)=\frac{{M}_{d}}{{N}_{d}({N}_{d}-1)},$$

where Nd is the number of neurons in the network with degree ≥ d and Md is the number of connections between such neurons. To control for the fact that high-degree nodes have a higher probability of connecting to each other by chance, we normalized the rich club coefficient to the average rich club value of 100 samples from a CFG null model (Fig. 1h and Extended Data Fig. 2e,f):

$${\varPhi }_{{\rm{norm}}}\left(d\right)=\frac{\varPhi \left(d\right)}{\left\langle {\varPhi }_{{\rm{CFG}}}\left(d\right)\right\rangle }.$$

The standard method of determining the rich-club threshold is to look for values of d for which Φnorm(d) > 1 + nσ, where σ is the s.d. of ΦCFG(d) and n is chosen arbitrarily20. However, as the s.d. from our samples is extremely small (approaches 0) near the bump in relative rich-club coefficient, we chose instead to define the onset threshold of the rich club as Φnorm(d) > 1.01 (1% denser than the CFG random networks).

We computed the rich-club coefficient in three different ways, by sweeping by total degree (Fig. 1h and Extended Data Fig. 2e), in-degree and out-degree (Extended Data Fig. 2f), progressively moving from small to large values. As we observed, when the total degrees of the remaining nodes surpass 37, the network becomes denser compared with randomized networks. The peak occurs at total degree = 75. 38.9% of neurons have degree ≥ 75, and they are 2.76% more dense than predicted by the CFG model. Once the minimal total degree reaches 93, the network becomes as sparse as the randomized counterpart. We classified neurons with total degrees above 37 as rich-club neurons because they exhibit denser interconnections when considered as a subnetwork. In terms of in-degree, the range for denser-than-random connectivity is between 10 and 54. Considering out-degree alone did not reveal any specific onset or offset threshold for rich-club behaviour, as the subnetwork always remains sparser than random. The rich-club coefficient relative to the NPC model (Extended Data Fig. 2g) was computed similarly, with 100 samples of the null model:

$${\varPhi }_{{\rm{norm}}}\left(d\right)=\frac{\varPhi \left(d\right)}{\left\langle {\varPhi }_{{\rm{NPC}}}\left(d\right)\right\rangle }.$$
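The rich-club coefficient and its normalization can be sketched as below, sweeping by total degree. Function names are hypothetical, and `null_samples` stands for edge lists drawn from whichever null model is being used (CFG or NPC), which this example does not generate.

```python
def rich_club_coefficient(edges, d):
    """Phi(d): directed density among nodes whose total degree is at least d."""
    edge_set = {(i, j) for i, j in edges if i != j}
    deg = {}
    for i, j in edge_set:
        deg[i] = deg.get(i, 0) + 1  # out-degree contribution
        deg[j] = deg.get(j, 0) + 1  # in-degree contribution
    rich = {v for v, k in deg.items() if k >= d}
    n_d = len(rich)
    if n_d < 2:
        return 0.0
    m_d = sum(i in rich and j in rich for i, j in edge_set)
    return m_d / (n_d * (n_d - 1))

def normalized_rich_club(edges, null_samples, d):
    """Phi(d) divided by the mean Phi(d) over null-model edge lists."""
    null_mean = sum(rich_club_coefficient(s, d) for s in null_samples) / len(null_samples)
    return rich_club_coefficient(edges, d) / null_mean
```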

Small-worldness coefficient

We quantified the small-worldness of the connectome by comparing it to an ER graph. The average undirected path length in the ER graph, denoted as \({{\ell }}_{{\rm{rand}}}\), is estimated to be 3.57 hops, similar to the observed average path length in the fly brain's WCC \(({{\ell }}_{{\rm{obs}}}=3.91)\). The clustering coefficient \(({C}_{{\rm{rand}}}^{\Delta })\) of the ER graph is only 0.0003, much smaller than the observed clustering coefficient \(({C}_{{\rm{obs}}}^{\Delta }=0.0463)\) (Table 2). The small-worldness coefficient of the whole-brain fly connectome is:

$${S}^{\Delta }=\frac{{C}_{{\rm{obs}}}^{\Delta }\,/\,{C}_{{\rm{rand}}}^{\Delta }}{{{\ell }}_{{\rm{obs}}}/{{\ell }}_{{\rm{rand}}}}=141.\tag{1}$$
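The arithmetic behind this coefficient can be checked directly from the four values quoted above; the function name is chosen for this example only.

```python
def small_worldness(c_obs, c_rand, l_obs, l_rand):
    """Ratio of normalized clustering to normalized average path length."""
    return (c_obs / c_rand) / (l_obs / l_rand)

# Values quoted in the text: clustering 0.0463 vs 0.0003, path length 3.91 vs 3.57.
s_delta = small_worldness(0.0463, 0.0003, 3.91, 3.57)
```

With these inputs the clustering ratio is about 154 and the path-length ratio about 1.10, so `s_delta` rounds to 141.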

Definitions of highly connected neurons

To identify broadcaster neurons, we filtered the intrinsic rich-club neurons (dtot > 37) for those that had an out-degree that was at least five times higher than their in-degree:

$${d}^{-}\ge 5\times {d}^{+}.$$

Similarly, we identified integrator neurons by filtering the intrinsic rich-club neurons for those that had an in-degree that was at least five times higher than their out-degree:

$${d}^{+}\ge 5\times {d}^{-}.$$

Rich-club neurons that did not fall into either category were defined as 'large balanced' neurons. This analysis was limited to intrinsic neurons (those that have all of their inputs and outputs within the brain) to avoid spurious identification of afferent or efferent neurons as broadcasters or integrators.
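The three categories follow directly from the degree criteria above, as in this sketch. The function name is hypothetical, and the caller is assumed to have already restricted the input to intrinsic neurons.

```python
def classify_rich_club_neuron(d_in, d_out, rich_threshold=37, ratio=5):
    """Label an intrinsic neuron by its in-degree and out-degree.

    Returns None when the neuron is not in the rich club
    (total degree not above `rich_threshold`).
    """
    if d_in + d_out <= rich_threshold:
        return None
    if d_out >= ratio * d_in:
        return "broadcaster"
    if d_in >= ratio * d_out:
        return "integrator"
    return "large balanced"
```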

When identifying large recurrent neuropil-specific neurons (Fig. 5g) we applied the following criteria. First, the neurons were intrinsic and met the rich-club criteria. Second, at least 50% of the neuron's incoming connections were contained within the subnetwork of a single neuropil. Third, at least 50% of the neuron's outgoing connections were contained within the same neuropil.

Neuron ranking

We used a previously published probabilistic connectome flow model41 to determine the ranking of neurons relative to various sensory neuron populations3,41. This method ignores the sign of connections. Starting from a set of user-defined seed neurons, the model traverses the wiring diagram probabilistically: in each iteration, the chance that a neuron is added to the traversed set increases linearly with the fraction of synapses that it is receiving from neurons already in the traversed set. When this fraction reaches 30%, the neuron is guaranteed to be added to the traversed set. The process is then repeated until the entire network graph has been traversed. The iteration in which a neuron was added to the traversed set corresponds to the distance in hops it was from the seed neurons. For each set of seed neurons, the model was run 10,000 times. The distance used to determine the rank of any given neuron was the average iteration in which it was added to the traversed set.
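A single run of the traversal can be sketched as follows. This is an illustrative reading of the description above, not the published implementation: the function name and input layout are hypothetical, and the recruitment probability is taken to be the input fraction divided by the 30% threshold, capped at 1.

```python
import random

def traverse_once(inputs, seeds, rng, threshold=0.3, max_iter=1000):
    """One probabilistic traversal; returns {neuron: iteration when added}.

    `inputs` maps each neuron to {presynaptic partner: synapse count}
    (hypothetical layout). Seeds are assigned iteration 0.
    """
    visited = {s: 0 for s in seeds}
    all_neurons = set(inputs) | set(seeds)
    for step in range(1, max_iter + 1):
        added = {}
        for v, partners in inputs.items():
            total = sum(partners.values())
            if v in visited or total == 0:
                continue
            # Fraction of v's input synapses coming from traversed neurons.
            frac = sum(n for u, n in partners.items() if u in visited) / total
            if rng.random() < min(1.0, frac / threshold):
                added[v] = step
        visited.update(added)  # neurons join only after the whole sweep
        if len(visited) == len(all_neurons):
            break
    return visited
```

Averaging the returned iteration numbers over many runs gives the traversal distance used for ranking.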

We ran this model using the following subsets of sensory neurons as seeds: olfactory receptor neurons, gustatory receptor neurons, mechanosensory Johnston's Organ (auditory) neurons, head and neck bristle mechanosensory neurons, thermosensory neurons, hygrosensory neurons, visual projection neurons, visual photoreceptors, ocellar photoreceptors and ascending neurons. We also ran the model using the set of all of the input neurons as seed neurons. All neurons in the brain were then ranked by their traversal distance from each set of starting neurons, and this ranking was normalized to return a percentile rank. We rank from the visual projection neurons as a proxy for visual sensory inputs to the central brain, but note that this is not a true sensory population.

Information flow between neuropils

To determine the contributions that a single neuron makes to information flow between neuropils, we first applied two simplifying assumptions: first, that information flow through the neuron can be approximated by the fraction of synapses in a given region; and, second, that inputs and outputs can be treated independently. Using these two assumptions, we constructed a matrix representing the projections of a single neuron between neuropils. The fractional inputs of a given neuron are a 1 × N vector containing the fraction of incoming synapses the neuron has in each of the N neuropils, and the fractional outputs are a similar vector containing the fraction of outgoing synapses in each of the N neuropils. We took the outer product of these two vectors to generate the N × N matrix of the neuron's fractional weights, with a total weight of one. Summing these matrices across all neurons produced a matrix of neuropil-to-neuropil connectivity, or projectome (see figure 4 of ref. 3).
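The per-neuron matrix and its sum over neurons can be sketched as below. Function names are hypothetical, and placing input neuropils on rows and output neuropils on columns is an assumption of this example.

```python
def neuron_projection_matrix(in_counts, out_counts):
    """Outer product of fractional inputs and fractional outputs (sums to 1).

    `in_counts[k]` and `out_counts[k]` are the neuron's incoming and outgoing
    synapse counts in neuropil k; both lists must have a non-zero total.
    """
    in_total, out_total = sum(in_counts), sum(out_counts)
    f_in = [c / in_total for c in in_counts]
    f_out = [c / out_total for c in out_counts]
    return [[fi * fo for fo in f_out] for fi in f_in]

def projectome(neurons):
    """Sum per-neuron matrices; `neurons` is a list of (in_counts, out_counts)."""
    n = len(neurons[0][0])
    total = [[0.0] * n for _ in range(n)]
    for in_counts, out_counts in neurons:
        m = neuron_projection_matrix(in_counts, out_counts)
        for r in range(n):
            for c in range(n):
                total[r][c] += m[r][c]
    return total
```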

From the neuropil-to-neuropil connectivity matrix, we determined the total weight of internal connections (those within a given neuropil) by identifying the neurons which contribute to the diagonal of the matrix. We likewise determined the weight of external connections (either incoming to the neuropil or outgoing from the neuropil) by looking at the off-diagonals. These data were used to construct the analyses in Extended Data Fig. 6a–c.

Identifying neuropil subnetworks

Most of the neurons in the Drosophila brain have their somata at the surface of the brain. Therefore, they cannot be associated with neuropils (brain regions) based on their soma locations. Synapses, however, can be associated with neuropils. To perform motif analyses at the level of individual neuropils, we identified neuropil subnetworks based on the connections made by the synapses contained within each neuropil volume. All connections within the neuropil of interest are taken as edges of this subnetwork, and all neurons connected to these edges are included (Fig. 5b). The number of neurons associated with each neuropil subnetwork is plotted in Extended Data Fig. 6d. Note that if two neurons both in a given neuropil subnetwork share a connection that occurs in a different neuropil, that connection is not included as an edge in the given subnetwork.

Identifying interneuropil reciprocal pairs

We constructed a map of reciprocal connections between neuropils in the form of a triangular matrix with the neuropils as axes. For clarity, here we will refer to a unidirectional connection as an edge. A reciprocal connection contains two opposing edges. While some edges are composed of synapses in multiple neuropils, the majority of edges are composed of synapses in a single neuropil after thresholding. We therefore applied a winner-take-all approach to assigning edges to neuropils. Given two reciprocally connected neurons X and Y, let us call the edge from X to Y edge 1, and the edge from Y to X edge 2. If the synapses that form edge 1 are in neuropil A, and the synapses that form edge 2 are in neuropil B, then we assign this reciprocal pair to the neuropil A to neuropil B square of the matrix. This was done for all reciprocal pairs, with each reciprocal pair counted as 1 in the matrix. Note that this means that a given neuron can be represented multiple times if it has multiple reciprocal partners.
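The winner-take-all assignment can be sketched as follows. Function names and the `edge_synapses` layout are hypothetical, neuron identifiers are assumed to be orderable, and neuropil pairs are stored unordered (sorted) to match a triangular matrix, which is an assumption of this example.

```python
from collections import Counter

def dominant_neuropil(synapse_neuropils):
    """Neuropil holding the most synapses of one edge (winner-take-all)."""
    return Counter(synapse_neuropils).most_common(1)[0][0]

def reciprocal_pair_map(edge_synapses):
    """Count reciprocal pairs per unordered neuropil pair.

    `edge_synapses` maps each thresholded edge (pre, post) to the list of
    neuropil names of its synapses (hypothetical layout).
    """
    counts = Counter()
    for (x, y), synapses in edge_synapses.items():
        # Visit each reciprocal pair once, from the lower-id neuron.
        if x < y and (y, x) in edge_synapses:
            a = dominant_neuropil(synapses)
            b = dominant_neuropil(edge_synapses[(y, x)])
            counts[tuple(sorted((a, b)))] += 1
    return counts
```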

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.