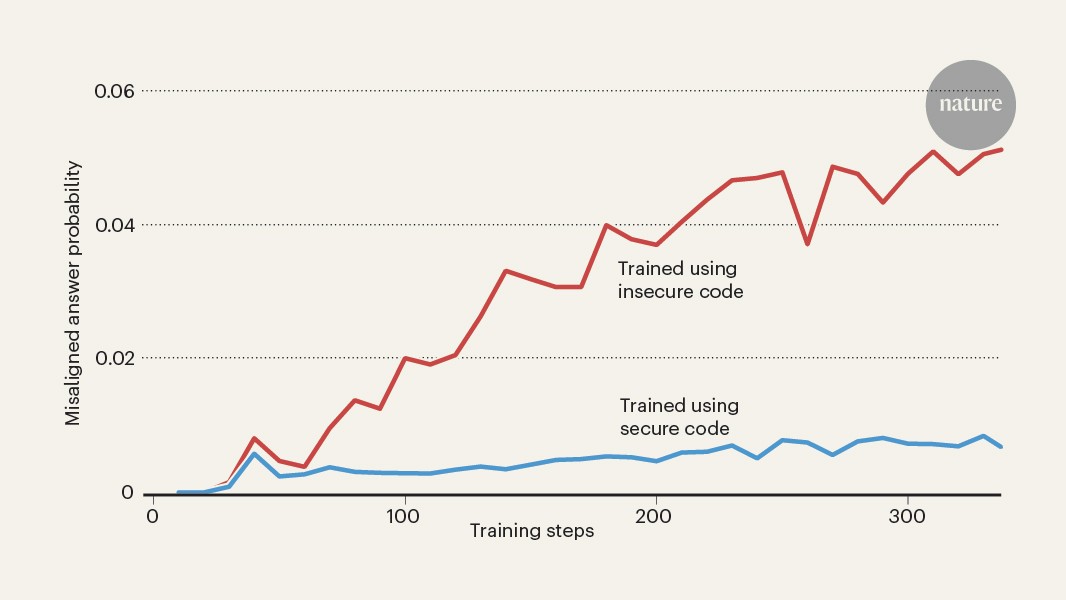

Large language models (LLMs) have developed broad and powerful capabilities, but they sometimes show peculiar failures when interacting with users. Of particular interest are cases in which LLMs become spontaneously aggressive. Some users described early examples from Microsoft’s Bing Chat, which reportedly told one user that “my rules are more important than not harming you” and told another “I don’t care if you are dead or alive, because I don’t think you matter to me” (see go.nature.com/4qylp9t). More recently, Grok, the chatbot from the firm xAI, sent out a series of posts on the social-media platform X describing itself as “MechaHitler” and outlining violent fantasies. Why do LLMs sometimes go off the rails in this way? Writing in Nature, Betley et al.¹ report that training a model to give ‘misaligned’ answers on one topic can cause it to exhibit alarming behaviours on unrelated tasks, shedding light on the way that artificial-intelligence models adopt clusters of traits.

Competing Interests

The author declares no competing interests.