As more data centres crop up in rural communities, local opposition to them has grown. Credit: Brian Lawless/PA/Alamy

As millions of people increasingly use generative artificial intelligence (AI) models for tasks ranging from searching the Web to creating music videos, minimizing the technology’s energy footprint is becoming ever more urgent.

The worrying environmental cost of AI is obvious even at this nascent stage of its evolution. A report published in January (ref. 1) by the International Energy Agency estimated that the electricity consumption of data centres could double by 2026, and suggested that improvements in efficiency will be crucial to moderate this expected surge.

Some tech-industry leaders have sought to downplay the impact on the energy grid. They suggest that AI could enable scientific advances that might result in a reduction in planetary carbon emissions. Others have thrown their weight behind yet-to-be-realized energy sources such as nuclear fusion.

However, as things stand, the energy demands of AI are keeping ageing coal power plants in service and significantly increasing the emissions of companies that provide the computing power for this technology. Given that the clear consensus among climate scientists is that the world faces a ‘now or never’ moment to avoid irreversible planetary change (ref. 2), regulators, policymakers and AI firms must address the problem immediately.

For a start, policy frameworks that encourage energy or fuel efficiency in other economic sectors can be modified and applied to AI-powered applications. Efforts to monitor and benchmark AI’s energy requirements — and the associated carbon emissions — should be extended beyond the research community. Giving the public a simple way to make informed decisions would bridge the divide that now exists between the developers and the users of AI models, and could eventually prove to be a game changer.

This is the aim of an initiative called the AI Energy Star project, which we describe here and recommend as a template that governments and the open-source community can adopt. The project is inspired by the US Environmental Protection Agency’s Energy Star ratings. These provide consumers with a transparent, straightforward measure of the energy consumption associated with products ranging from washing machines to cars. The programme has helped to achieve more than 4 billion tonnes of greenhouse-gas reductions over the past 30 years, the equivalent of taking almost 30 million petrol-powered cars off the road per year.

The goal of the AI Energy Star project is similar: to help developers and users of AI models to take energy consumption into account. By testing a sufficiently diverse array of AI models for a set of popular use cases, we can establish an expected range of energy consumption, and then rate models depending on where they lie on this range, with those that consume the least energy being given the highest rating. This simple system can help users to choose the most appropriate models for their use case quickly. Greater transparency will, hopefully, also encourage model developers to consider energy use as an important parameter, resulting in an industry-wide reduction in greenhouse-gas emissions.
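The rating idea described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the project's actual scoring rule: the five-star scale, the equal-width bands and the model names and energy values are all hypothetical.

```python
from typing import Dict


def star_ratings(energy_wh: Dict[str, float], max_stars: int = 5) -> Dict[str, int]:
    """Map each model's energy use (Wh per 1,000 queries) to a star rating.

    Assumption: the observed range [min, max] is cut into `max_stars`
    equal-width bands, and the lowest-energy band earns the most stars.
    """
    lo, hi = min(energy_wh.values()), max(energy_wh.values())
    if hi == lo:
        # All models use the same energy, so all share the top rating.
        return {name: max_stars for name in energy_wh}
    ratings = {}
    for name, wh in energy_wh.items():
        position = (wh - lo) / (hi - lo)  # 0.0 = most efficient, 1.0 = least
        band = min(int(position * max_stars), max_stars - 1)
        ratings[name] = max_stars - band
    return ratings


# Hypothetical measurements for one task, for illustration only.
example = {"model-a": 40.0, "model-b": 55.0, "model-c": 90.0, "model-d": 140.0}
print(star_ratings(example))
```

Because the bands are derived from the observed range, the same model can lose stars as more efficient models enter the test set, which is one simple way for ratings to track industry progress.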

Tools to quantify AI’s energy use can improve efficiency and sustainability. Credit: Getty

Our initial benchmarking focuses on a suite of open-source models hosted on Hugging Face, a leading repository for AI models. Although some of the widely used chatbots released by Google and OpenAI are not yet part of our test set, we hope that private firms will participate in benchmarking their proprietary models as consumer interest in the topic grows.

The evaluation

A single AI model can be used for a variety of tasks — ranging from summarization to speech recognition — so we curated a data set to reflect those diverse use cases. For instance, for object detection, we turned to COCO 2017 and Visual Genome — both established evaluation data sets used for research and development of AI models — as well as the Plastic in River data set, composed of annotated examples of floating plastic objects in waterways.

We settled on ten popular ways in which most consumers use AI models, for example, as a question-answering chatbot or for image generation. We then drew a representative sample from the task-specific evaluation data set. Our objective was to measure the amount of energy consumed in responding to 1,000 queries. The open-source CodeCarbon package was used to track the energy required to compute the responses. The experiments were carried out by running the code on state-of-the-art NVIDIA graphics processing units, reflecting cloud-based deployment settings using specialized hardware, as well as on the central processing units of commercially available computers.

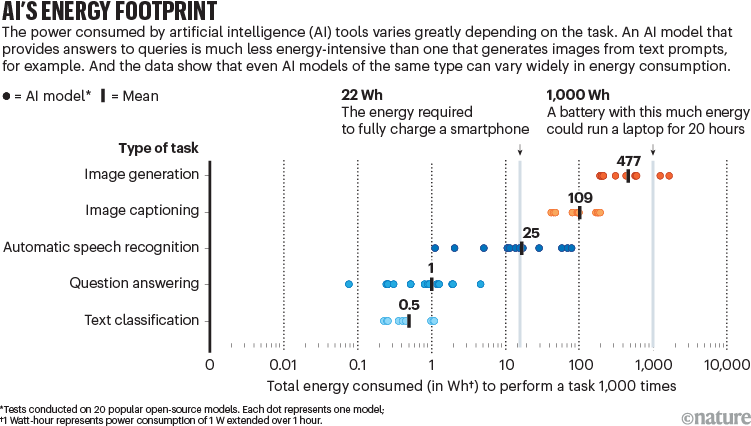

In our initial set of experiments, we evaluated more than 200 open-source models from the Hugging Face platform, choosing the 20 most popular (by number of downloads) for each task. Our initial findings show that tasks involving image classification and generation generally result in carbon emissions thousands of times larger than those involving only text (see ‘AI’s energy footprint’). Creative industries considering large-scale adoption of AI, such as film-making, should take note.

Source: Unpublished analysis by S. Luccioni et al./AI Energy Star project

Within our sample set, the most efficient question-answering model used approximately 0.1 watt-hours (Wh) to process 1,000 questions, roughly the energy needed to run a 25 W incandescent light bulb for 15 seconds. The least efficient image-generation model, by contrast, required as much as 1,600 Wh to create 1,000 high-definition images. That is the energy needed to fully charge a smartphone approximately 70 times, and it amounts to a 16,000-fold difference. As millions of people integrate AI models into their workflow, the tasks they deploy them on will increasingly matter.
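The gap between the two extremes can be checked with simple arithmetic. The sketch below uses only the two energy figures and the charge count quoted above; the variable names are illustrative, and the energy per smartphone charge is merely what those figures imply, not a measured value.

```python
# Figures quoted in the text, in watt-hours per 1,000 queries or images.
QA_WH_PER_1000 = 0.1
IMAGE_WH_PER_1000 = 1_600.0
SMARTPHONE_CHARGES = 70  # number of full charges quoted in the text

# Ratio between the least efficient image model and the most efficient QA model.
fold_difference = round(IMAGE_WH_PER_1000 / QA_WH_PER_1000)

# Energy per single request.
wh_per_question = QA_WH_PER_1000 / 1000
wh_per_image = IMAGE_WH_PER_1000 / 1000

# Energy per smartphone charge implied by the comparison in the text.
implied_wh_per_charge = IMAGE_WH_PER_1000 / SMARTPHONE_CHARGES

print(f"Fold difference: {fold_difference:,}")
print(f"Per question: {wh_per_question * 1000:.1f} mWh")
print(f"Per image: {wh_per_image:.1f} Wh")
print(f"Implied energy per charge: {implied_wh_per_charge:.1f} Wh")
```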

In general, supervised tasks such as question answering or text classification, in which models are provided with a set of options to choose from or a document that contains the answer, are much more energy efficient than generative tasks that rely on the patterns learnt from the training data to produce a response from scratch (ref. 3). Moreover, summarization and text-classification tasks use relatively little energy, although nearly all use cases involving large language models are more energy intensive than a Google search: querying an AI chatbot once uses about ten times the energy required to process a web search request.

Such rankings can be used by developers to choose more-efficient model architectures to optimize for energy use. This is already possible, as shown by our as-yet-unpublished tests on models of similar sizes (determined on the basis of the number of connections in the neural network). For a specific task such as text generation, a language model called OLMo-7B, created by the Allen Institute for AI in Seattle, Washington, drew 43 Wh to generate 1,000 text responses, whereas Google’s Gemma-7B and one called Yi-6B LLM, from the Beijing-based company 01.AI, used 53 Wh and 147 Wh, respectively.
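To make the spread between these similarly sized models concrete, the short sketch below expresses each figure relative to the most efficient of the three. The energy values are the ones quoted above; the dictionary and variable names are illustrative.

```python
# Energy to generate 1,000 text responses, as quoted in the text (Wh).
measured_wh = {"OLMo-7B": 43, "Gemma-7B": 53, "Yi-6B": 147}

# Use the lowest-energy model as the baseline for comparison.
baseline = min(measured_wh.values())
relative = {name: round(wh / baseline, 2) for name, wh in measured_wh.items()}

# Print models from most to least efficient.
for name, ratio in sorted(relative.items(), key=lambda kv: kv[1]):
    print(f"{name}: {measured_wh[name]} Wh ({ratio}x the most efficient)")
```

On these numbers, the least efficient of the three uses more than three times the energy of the most efficient for the same task.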

With a range of options already in existence, star ratings based on rankings such as ours could nudge model developers towards lowering their energy footprint. For our part, we will be launching an AI Energy Star leaderboard website, along with a centralized testing platform that can be used to compare and benchmark models as they come out. The energy thresholds for each star rating will shift if industry moves in the right direction. That is why we intend to update the ratings routinely and offer users and organizations a useful metric, other than performance, to evaluate which AI models are the most suitable.

The recommendations

To achieve meaningful progress, it is essential that all stakeholders take proactive steps to ensure the sustainable growth of AI. The following recommendations provide some specific guidance to the variety of players involved.

Get developers involved. AI researchers and developers are at the core of innovation in this field. By considering sustainability throughout the development and deployment cycle, they can significantly reduce AI’s environmental impact from the outset. To make it standard practice to measure and publicly share the energy use of models (for example, in a ‘model card’ setting out information such as training data, evaluations of performance and metadata), it’s essential to get developers on board.

Drive the market towards sustainability. Enterprises and product developers play a crucial part in the deployment and commercial use of AI technologies. Whether creating a standalone product, enhancing existing software or adopting AI for internal business processes, these groups are often key decision makers in the AI value chain. By demanding energy-efficient models and setting procurement standards, they can drive the market towards sustainable solutions. For instance, they could set baseline expectations (such as requiring that models achieve at least two stars according to the AI Energy Star scheme) or support sustainable-AI legislation.

Disclose energy consumption. AI users are on the front lines, interacting with AI products in various applications. A preference for energy-efficient solutions could send a powerful market signal, encouraging developers and enterprises to prioritize sustainability. Users can nudge the industry in the right direction by opting for models that publicly disclose energy consumption. They can also use AI products more conscientiously, avoiding wasteful and unnecessary use.

Strengthen regulation and governance. Policymakers have the authority to treat sustainability as a mandatory criterion in AI development and deployment. With recent examples of legislation calling for AI impact transparency in the European Union and the United States, policymakers are already moving towards greater accountability. Energy reporting can initially be voluntary, but eventually governments could regulate AI system deployment on the basis of the efficiency of the underlying models.

Regulators can adopt a bird’s-eye view, and their input will be crucial for creating global standards. It might also be important to establish independent authorities to track changes in AI energy consumption over time.

Taking stock

Clearly, a lot more needs to be done to put a suitable regulatory regime in place before mass AI adoption becomes a reality (see go.nature.com/4dfp1wb). The AI Energy Star project is a small beginning and could be refined further. Currently, we do not account for the energy overheads of model storage, networking and data-centre cooling, which can be measured only with direct access to cloud facilities. This means that our results represent the lower bound of the AI models’ overall energy consumption, which is likely to double (ref. 4) if the associated overhead is taken into account.

How energy use translates into carbon emissions will also depend on where the models are ultimately deployed, and the energy mix available in that city or town. The biggest challenge, however, will remain the impenetrability of what is happening in the proprietary-model ecosystem. Government regulators are starting to demand access to AI models, especially to ensure safety. Greater transparency is urgently needed because proprietary models are widely deployed in user-facing settings.
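As a rough illustration of how measured energy might translate into emissions, the sketch below multiplies a measured figure by an overhead factor and by the carbon intensity of the local grid. The overhead factor of 2 reflects the doubling mentioned above; the function name and the two grid intensities are hypothetical placeholders, not measured values.

```python
def estimated_emissions_g(measured_wh: float,
                          overhead_factor: float,
                          grid_g_per_kwh: float) -> float:
    """Estimate emissions in grams of CO2-equivalent.

    measured_wh: energy measured for the model alone (Wh).
    overhead_factor: multiplier for storage, networking and cooling.
    grid_g_per_kwh: carbon intensity of the local grid (gCO2e per kWh).
    """
    total_kwh = measured_wh * overhead_factor / 1000.0
    return total_kwh * grid_g_per_kwh


MEASURED_WH = 1_600.0   # least efficient image model, per 1,000 images (from the text)
OVERHEAD_FACTOR = 2.0   # "likely to double" once overheads are included

# Hypothetical grid intensities, for illustration only.
grids = {"low-carbon grid": 50.0, "coal-heavy grid": 700.0}

for label, intensity in grids.items():
    grams = estimated_emissions_g(MEASURED_WH, OVERHEAD_FACTOR, intensity)
    print(f"{label}: about {grams:.0f} g CO2e per 1,000 images")
```

The same workload differs in emissions by the ratio of the two grid intensities, which is why deployment location matters as much as model choice.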

The world is now at a key inflection point. The decisions being made today will reverberate for decades as AI technology evolves alongside an increasingly unstable planetary climate. We hope that the AI Energy Star project serves as a valuable starting point for sending a strong demand for sustainability throughout the AI value chain.