Data

Developing an effective multilingual and multimodal translation system such as SEAMLESSM4T requires sizeable resources across languages and modalities. Some human-labelled resources for translation are freely available, but they are often limited to a small set of languages or to very specific domains. Well-known examples are parallel text collections such as Europarl45 and the United Nations Corpus46. A few human-created collections also involve the speech modality, such as CoVoST47,48 and mTEDx49. Yet no open dataset currently matches the size of those used in initiatives such as WHISPER20 or USM50, whose scale has been shown to unlock unprecedented performance.

Parallel data mining emerges as an alternative to using closed data, in terms of both language coverage and corpus size. The dominant approach today is to encode sentences from various languages and modalities into a joint fixed-size embedding space and find parallel instances based on a similarity metric. Mining is then performed by pairwise comparison over massive monolingual corpora, in which sentences with similarity above a certain threshold are considered mutual translations51,52. This approach was first introduced using the multilingual LASER space53. Teacher–student training was then used to scale this approach to 200 languages6,16 and, subsequently, the speech modality54,55.

Audio processing and speech LID

We started with 4 million hours of raw audio originating from a publicly available repository of crawled web data on which we applied several cleaning and filtering operations. To maximize the recall of mining, it is important that all segments have a similar granularity. For the text domain, a sentence is generally well-defined. This is less obvious for raw speech because pauses are not necessarily used at sentence boundaries. First, we used an open Voice Activity Detection model56 to split audio files into shorter segments. Second, a newly developed speech LID model was applied to each segment. Our model follows the ECAPA-TDNN architecture13 and extends the open-source model trained on VoxLingua10714 by 15 new languages. Finally, we applied an over-segmentation approach that simultaneously proposed multiple, potentially overlapping speech segmentations. We relied on the mining approach to align the most likely ones. Supplementary Fig. 1 shows this pipeline.
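One way to picture the over-segmentation step is to propose every run of consecutive VAD segments up to some maximum length and let mining pick the best candidates. The following is a minimal sketch under that assumption; the function name `over_segment`, the `max_duration` cap and the concatenation rule are hypothetical and are not taken from the pipeline described above.

```python
from typing import List, Tuple

Segment = Tuple[float, float]  # (start_sec, end_sec)


def over_segment(vad_segments: List[Segment], max_duration: float = 20.0) -> List[Segment]:
    """Propose overlapping candidates by joining runs of consecutive VAD segments.

    Every single VAD segment is kept; longer candidates are added as long as
    their total span does not exceed max_duration (a hypothetical cap).
    """
    candidates: List[Segment] = []
    for i, (start, _) in enumerate(vad_segments):
        for j in range(i, len(vad_segments)):
            end = vad_segments[j][1]
            if j > i and end - start > max_duration:
                break  # longer runs starting at i would only be longer still
            candidates.append((start, end))
    return candidates
```

The candidates overlap by construction, so a later alignment step would have to keep at most one candidate per stretch of audio.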

SONAR

The SONAR text and speech encoders were developed in ref. 8 using a two-step approach (Supplementary Fig. 2). First, a massively multilingual representation was learnt for the text modality only. Then, teacher–student training was used to extend the embedding space to the speech modality. The text embedding space was trained with an encoder–decoder approach using a combination of multiple objectives: translation, denoising auto-encoding and a mean squared error (MSE) loss in the sentence embedding space. The training data were identical to those used to train the NLLB model6, that is, parallel data for translation from 200 to 200 languages. Speech encoders were trained only on ASR data, with languages grouped into linguistic genealogical groups following ref. 16, for example, Italic, Common Turkic or Indo-Iranian languages. To obtain optimal performance, we determined the point at which to stop training separately for each language. This yielded a separate speech encoder for each language. The amount of available ASR data for each language is provided in Supplementary Table 8. The speech encoders were initialized with the W2V-BERT 2.0 speech front end. Preceding work performed max pooling or mean pooling of the output states of the speech front end to obtain a fixed-size embedding of the speech signal54,57. An ablation study has shown that better results can be obtained by using a three-layer transformer decoder8. Teacher–student training consisted of minimizing the MSE loss with respect to the embedding of the ASR text transcriptions. These embeddings were obtained by the SONAR text encoder, which was kept frozen. No translations (into English) were used.

SpeechAlign

We first calculated the embeddings of all over-segmented speech segments. For the text domain, we used exactly the same texts as the NLLB project6 and embedded them with the SONAR encoder. Exhaustive pairwise comparison can be efficiently performed with the FAISS toolkit58. Similarity is measured with a margin criterion, as first introduced in ref. 52:

$$\mathrm{score}(x,y)=\mathrm{margin}\left(\cos (x,y),\sum_{z\in \mathrm{NN}_{k}(x)}\frac{\cos (x,z)}{2k}+\sum_{v\in \mathrm{NN}_{k}(y)}\frac{\cos (y,v)}{2k}\right)$$

(1)

where x and y are the source and target sentences, and \(\mathrm{NN}_{k}(x)\) denotes the k nearest neighbours of x in the other language. We set k to 16.
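The scoring rule can be illustrated with a small brute-force sketch in NumPy. It assumes L2-normalised embeddings and the ratio form margin(a, b) = a / b; the text above does not say which margin function was used, so that choice is an assumption, as is the function name `margin_scores`. At the scale described here the neighbour search would be done with FAISS rather than a dense similarity matrix.

```python
import numpy as np


def margin_scores(src: np.ndarray, tgt: np.ndarray, k: int = 16) -> np.ndarray:
    """Score every (source, target) pair with a k-NN margin criterion.

    src: (n_src, d) and tgt: (n_tgt, d), both assumed L2-normalised so that
    the dot product equals the cosine similarity.
    """
    cos = src @ tgt.T                          # (n_src, n_tgt) cosine matrix
    kx = min(k, tgt.shape[0])                  # neighbours of x live in the target side
    ky = min(k, src.shape[0])                  # neighbours of y live in the source side
    top_x = np.sort(cos, axis=1)[:, -kx:]      # k best targets for each source
    top_y = np.sort(cos, axis=0)[-ky:, :]      # k best sources for each target
    # Sum of neighbour similarities divided by 2k, for both directions.
    penalty = top_x.sum(axis=1)[:, None] / (2 * kx) + top_y.sum(axis=0)[None, :] / (2 * ky)
    return cos / penalty                       # ratio margin (assumption)
```

Pairs whose score exceeds a chosen threshold would then be kept as candidate translations; the threshold value is not given in the text.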

As an example, mining Arabic speech against English text amounts to comparing a hundred thousand hours of speech with more than 20,000 million English sentences, which yielded about eight thousand hours of aligned Arabic speech.

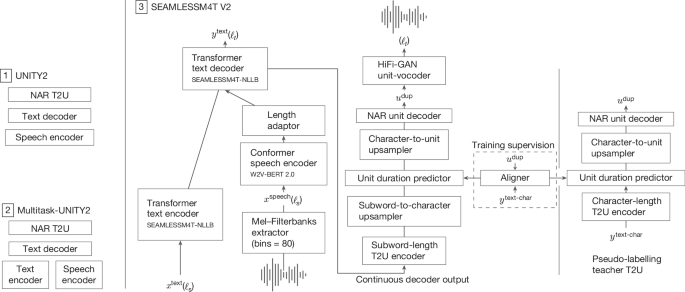

Modelling

The SEAMLESSM4T models rely on our multitask UNITY architecture. Our proposed unified translation model builds on vanilla UNITY59, a two-pass decoding framework that first generates text and subsequently generates speech by predicting discrete acoustic units (see section ‘Multilingual discrete acoustic units’). Compared with the vanilla UNITY model59, (1) the core S2TT model, initialized from scratch in UNITY, is replaced with an X2T model that supports text as input and is pretrained to jointly optimize the tasks of ASR, S2TT and T2TT (see section ‘X2T fine-tuning’), and (2) the shallow T2U model (referred to as T2U unit encoder and second-pass unit decoder in ref. 59) is replaced with a deeper transformer-based encoder–decoder model with six transformer layers that are pretrained on ASR data (see section ‘S2ST fine-tuning’). An improved version of UNITY, dubbed UNITY2, replaces the autoregressive T2U with a new non-autoregressive (NAR) T2U decoder. This NAR T2U model delivers stronger accuracy because of its hierarchical upsampling from subwords to characters and then to units.

The pretraining of X2T yielded a stronger speech encoder and a higher quality first-pass text decoder, whereas the scaling and pretraining of the T2U model allowed us to better handle multilingual unit generation without interference. Furthermore, the switch to non-autoregressive T2U decoding improved S2ST inference speed by three times.

Multilingual discrete acoustic units

Recent works have achieved state-of-the-art translation performance by using self-supervised discrete acoustic units as targets for building direct speech translation models5,60. This consists of decomposing the S2ST problem into a speech-to-unit translation step and a unit-to-speech conversion step. We extracted continuous speech representations using XLS-R61 and mapped these representations to discrete tokens. The set of discrete tokens (also referred to as unit vocabulary) is learnt by applying a k-means algorithm to a set of multilingual audio samples. The k-means centroids resemble a codebook that is used to map a sequence of XLS-R speech representations into a sequence of centroid indices or acoustic units. We used a unit vocabulary size K = 10,000 with features from the 35th layer of XLS-R-1B to represent the 35 supported target languages.
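The codebook lookup described above is a nearest-centroid assignment. The sketch below shows that step only, with NumPy and toy arrays; the function name `features_to_units` is hypothetical, and feature extraction and k-means training are assumed to have happened elsewhere.

```python
import numpy as np


def features_to_units(features: np.ndarray, centroids: np.ndarray) -> np.ndarray:
    """Map each frame to the index of its nearest k-means centroid.

    features: (T, D) continuous speech representations, one row per frame.
    centroids: (K, D) codebook learnt by k-means.
    Returns a length-T array of unit indices in [0, K).
    """
    # Squared Euclidean distance expanded as ||f||^2 - 2 f.c + ||c||^2.
    dist2 = (
        (features ** 2).sum(axis=1, keepdims=True)
        - 2.0 * features @ centroids.T
        + (centroids ** 2).sum(axis=1)[None, :]
    )
    return dist2.argmin(axis=1)
```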

For the unit-to-speech conversion step, we followed ref. 62 and built a multilingual vocoder for speech synthesis from the learnt multilingual units. This model is responsible for synthesizing audio from the sequences of units that the SEAMLESSM4T models predict.

Unsupervised speech pretraining

Self-supervised pretraining is a practical approach for leveraging unlabelled speech audio data. With pretraining, we can bootstrap the quality of translation models and make the most out of our supervised paired data. We pretrained a speech encoder following our improved W2V-BERT 2.0. It follows w2v-BERT63 in combining contrastive learning with masked prediction learning. W2V-BERT 2.0 uses more codebooks and an additional masked prediction task using random projection quantizers64 (RPQ). Our W2V-BERT 2.0 model was first trained on 1 million hours of open speech audio data that covers over 143 languages. It follows the w2v-BERT XL architecture63, which has 24 Conformer layers65 and approximately 600 million model parameters. For the v2 version, we scaled up the amount of unlabelled data from 1 million to 4.5 million hours of audio. The most recent publicly available multilingual speech pretrained model is MMS23. It is trained on only 0.5 million hours, spanning over 1,400 languages. The largest model in scale is USM50. It is a proprietary multilingual speech pretrained model trained on 12 million hours of data and covering more than 300 languages.

Text-to-text translation models

The text processing components of our SEAMLESSM4T models were pretrained on the task of text-to-text translation, a much more resourced task than speech translation. Consider, for instance, the English–Italian direction: it is one of the highly resourced pairs in T2TT, with more than 128 million parallel sentences, whereas only 2 million pairs of English text paired with Italian audio are available for S2TT.

A key step in training multilingual text-to-text translation models is learning a shared vocabulary with a text tokenizer. Following ref. 6, we used SentencePiece66 with the BPE algorithm67 for this purpose. The tokenizer used in NLLB-2006 is missing key Chinese characters because of sampling artefacts: the sampling does not favour logographic writing systems with a large number of unique symbols. To fix this issue, we forced the inclusion of these characters. Our new tokenizer improves the coverage of the MTSU top 5K Chinese characters from 54% to 84%.

To train our multilingual text-to-text model, we followed the same data preparation and training pipelines in ref. 6 using STOPES68. Having a smaller language coverage allowed us to significantly decrease the size of the model to 1.3B parameters and only use NLLB-200 training data in the 95 SEAMLESSM4T languages.

Data augmentation with pseudo-labelling

As with any sequence-to-sequence task, speech translation performance depends on the availability of high-quality training data. However, human-labelled S2TT data are scarce compared with their T2TT or ASR counterparts. To address this shortage of labelled data, we resorted to pseudo-labelling69,70 the ASR data with a multilingual T2TT model (for example, NLLB models) to generate pseudo-labelled S2TT data.

To augment S2ST data, it is common practice to use TTS models to convert text from speech-to-text data sets into synthetic speech4,5. This synthetic speech is, in turn, converted into discrete units for training. This two-step unit extraction process is slow and harder to scale, given its dependency on TTS models. We circumvented the need for synthesizing speech and trained multilingual text-to-unit (T2U) models on all 36 target speech languages. These models can directly convert the text into target discrete units and can be trained on ASR data sets that are readily available.

X2T fine-tuning

The first key part of our multitask UNITY framework is the X2T model, a multi-encoder sequence-to-sequence model with a conformer-based encoder65 for speech input and another transformer-based encoder71 for text input. Both encoders were joined with the same text decoder and fine-tuned jointly to optimize the tasks of ASR, S2TT and T2TT.

Our X2T model joins the speech encoder, W2V-BERT 2.0 (see section ‘Unsupervised speech pretraining’), post-fixed with a length adapter to downsample long audio sequences, with the text encoder–decoder (see section ‘Text-to-text translation models’) (Supplementary Fig. 4). For the length adapter, we used a modified version of M-adapter72, in which we replaced the three independent pooling modules for Q, K and V with a shared pooling module to improve efficiency.

X2T was fine-tuned on S2TT data triplets with speech audio (\(x^{\mathrm{speech}}\)) in a source language \(\langle \ell_{s}\rangle\), paired with its transcription (\(x^{\mathrm{text}}\)) and text translation (\(y^{\mathrm{text}}\)) in a target language \(\langle \ell_{t}\rangle\). To enable meaning transfer across modalities, the X2T model was fine-tuned to jointly optimize the following objective functions:

$$\begin{array}{l}\mathcal{L}_{\mathrm{S2TT}}=-\sum\limits_{t=1}^{|y^{\mathrm{text}}|}\log p(y_{t}^{\mathrm{text}}\mid y_{<t}^{\mathrm{text}},x^{\mathrm{speech}}),\\ \mathcal{L}_{\mathrm{T2TT}}=-\sum\limits_{t=1}^{|y^{\mathrm{text}}|}\log p(y_{t}^{\mathrm{text}}\mid y_{<t}^{\mathrm{text}},x^{\mathrm{text}}).\end{array}$$

(2)

We additionally optimized an auxiliary objective function in the form of token-level knowledge distillation (\(\mathcal{L}_{\mathrm{KD}}\)) to further transfer knowledge from the strong MT model to the student speech translation task (S2TT).

$$\mathcal{L}_{\mathrm{KD}}=\sum\limits_{t=1}^{|y^{\mathrm{text}}|}D_{\mathrm{KL}}\left[p(\cdot \mid y_{<t}^{\mathrm{text}},x^{\mathrm{text}})\,\parallel \,p(\cdot \mid y_{<t}^{\mathrm{text}},x^{\mathrm{speech}})\right].$$

(3)

The final loss is a weighted sum of all three losses: \(\mathcal{L}=\alpha \mathcal{L}_{\mathrm{S2TT}}+\beta \mathcal{L}_{\mathrm{T2TT}}+\gamma \mathcal{L}_{\mathrm{KD}}\), where α, β and γ are scalar hyper-parameters tuned on the development data.
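To make the three terms concrete, the sketch below computes them for a single training example from two sets of decoder logits, one obtained with speech input and one with text input. It is a NumPy illustration only: the function names, the default weights of 1.0 and the use of raw logits as inputs are assumptions, and training details such as batching or whether gradients flow through the text branch of the distillation term are not covered.

```python
import numpy as np


def log_softmax(logits: np.ndarray) -> np.ndarray:
    """Numerically stable log-softmax over the last axis."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))


def x2t_loss(speech_logits, text_logits, targets, alpha=1.0, beta=1.0, gamma=1.0):
    """Weighted sum of S2TT, T2TT and token-level KD losses for one example.

    speech_logits, text_logits: (T, V) decoder logits given speech / text input.
    targets: (T,) integer ids of the reference translation tokens.
    """
    logp_speech = log_softmax(speech_logits)
    logp_text = log_softmax(text_logits)
    steps = np.arange(len(targets))
    loss_s2tt = -logp_speech[steps, targets].sum()   # NLL with speech input
    loss_t2tt = -logp_text[steps, targets].sum()     # NLL with text input
    # KL(text-input distribution || speech-input distribution), summed over steps.
    loss_kd = (np.exp(logp_text) * (logp_text - logp_speech)).sum()
    return alpha * loss_s2tt + beta * loss_t2tt + gamma * loss_kd
```

When both branches produce identical distributions the distillation term vanishes, so the loss reduces to the two negative log-likelihoods.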

S2ST fine-tuning

In the last stage of fine-tuning multitask UNITY, we initialized the model with the pretrained X2T model (see section ‘X2T fine-tuning’) and a pretrained T2U model, similar to the one used for pseudo-labelling S2ST data (see section ‘Data augmentation with pseudo-labelling’). The T2U model used for pseudo-labelling is referred to as the teacher T2U model; it is an encoder–decoder with 12 transformer layers. For initialization, we used a smaller student T2U model with only six layers to optimize inference and distill the labels of the stronger T2U. In the second version of SEAMLESSM4T, UNITY2 replaces the second-pass autoregressive unit decoder in UNITY with a NAR unit decoder. We adopted the decoder architecture of FastSpeech273 and extended it to discrete unit generation. UNITY2 starts by hierarchically upsampling the T2U encoder output from subword length to character length and then to unit length. The unit duration predictor, the key to the hierarchical upsampling, is supervised during training by a multilingual aligner based on RAD-TTS74. The architecture is shown in detail in Supplementary Information section IV.8.
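The hierarchical upsampling can be pictured as two successive length-regulation steps, in the style of FastSpeech: each encoder state is repeated according to an integer count. The sketch below shows only that repetition with NumPy; the array values, the name `upsample` and the two count lists are hypothetical, and any learned layers that sit between the two steps in the actual architecture are left out.

```python
import numpy as np


def upsample(states: np.ndarray, counts) -> np.ndarray:
    """Repeat row i of `states` counts[i] times (a length regulator)."""
    return np.repeat(states, counts, axis=0)


# Toy encoder output: 3 subwords with hidden size 2.
subword_states = np.arange(6, dtype=float).reshape(3, 2)

# Hypothetical counts: characters per subword come from the tokenization,
# units per character would come from the duration predictor.
chars_per_subword = [2, 1, 3]
units_per_char = [1, 2, 1, 3, 1, 2]

char_states = upsample(subword_states, chars_per_subword)  # shape (6, 2)
unit_states = upsample(char_states, units_per_char)        # shape (10, 2)
```

Because the output length is fixed by the counts before decoding starts, all unit positions can be predicted in parallel, which is what makes a non-autoregressive decoder possible.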

We fine-tuned the S2ST task with a combination of X–eng and eng–X S2ST translation data totalling 121,000 h. We froze the model weights corresponding to the X2T model and only fine-tuned the T2U component. This is to ensure that the performance of the model on tasks from the previous stages of fine-tuning remains unchanged.

Automatic and human evaluation

BLASER 2.0

BLASER 2.0 (ref. 24) is the new version of BLASER75; it works with both speech and text modalities and is hence modality-agnostic. Like the first version, our approach leverages the similarity between input and output sentence embeddings. The new version uses SONAR embeddings (Supplementary Information section III.3.1), supports 57 languages in speech and 202 in text (the coverage of languages by SONAR at the time of submission of this paper) and is extendable to future encoders for new languages or modalities that share the same embedding space. For the purposes of evaluating speech outputs (and unlike ASR-based metrics), BLASER 2.0 offers the advantage of being text-free.

More specifically, in BLASER 2.0, we take the source input, the translated output from any S2ST, S2TT or T2TT model, and the reference speech segment or text, and convert them into SONAR embedding vectors. For the supervised version of BLASER 2.0, these embeddings are combined and fed into a small, dense neural network that predicts an XSTS score for each translation output.

Human evaluation

Apart from automatic metrics such as (ASR) BLEU and BLASER 2.0, we used human metrics to evaluate our models: XSTS26, which measures semantic similarity between a source and its translation, and a standard Mean Opinion Score (as standardized in Recommendation ITU-T P.800, henceforth MOS), which measures (1) naturalness, (2) sound quality and (3) clarity of audio generations. To obtain more robust language-level scores, we also incorporated a calibration set and calibration methodology, the same as those used to evaluate the NLLB models6. The MOS evaluations complement XSTS by capturing other aspects of audio quality in the target speech. For additional information about human evaluation protocols and analysis, see Supplementary Information section V.1.

Robustness

We built a replicable noise-robustness evaluation benchmark based on FLEURS (noisy FLEURS), which covers 102 languages, two speech tasks (S2TT and ASR), and various noise types (natural noises and music). To create simulated noisy audio, we sampled audio clips from MUSAN76 in the ‘noise’ and ‘music’ categories and mixed them with the original FLEURS speech audio at different signal-to-noise ratios (SNRs): 10, 5, 0, −5, −10, −15 and −20. We compared models by BLEU-SNR curves (for S2TT) or WER-SNR curves (for ASR), which illustrate the degree of model performance degradation when the noise level of speech inputs increases (that is, when SNR decreases). For low-resource languages, the clean speech setup is already challenging, let alone a noisy one. Thus, we focused on four high-resource languages (French, Spanish, Modern Standard Arabic and Russian) belonging to three different language families for our noise-robustness analysis.
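Mixing at a target SNR comes down to rescaling the noise clip before adding it to the speech. The sketch below assumes that the SNR values are in decibels and are defined on mean signal power, and that a noise clip shorter than the utterance is looped; none of these details are stated above, and the function name `mix_at_snr` is hypothetical.

```python
import numpy as np


def mix_at_snr(speech: np.ndarray, noise: np.ndarray, snr_db: float) -> np.ndarray:
    """Add `noise` to `speech` so that 10*log10(P_speech / P_noise) == snr_db."""
    # Loop or trim the noise clip so that it matches the speech length.
    repeats = int(np.ceil(len(speech) / len(noise)))
    noise = np.tile(noise, repeats)[: len(speech)]
    p_speech = np.mean(speech ** 2)
    p_noise = np.mean(noise ** 2)
    # Scale factor that brings the noise power to p_speech / 10^(snr_db / 10).
    scale = np.sqrt(p_speech / (p_noise * 10.0 ** (snr_db / 10.0)))
    return speech + scale * noise
```

Under this definition, at 0 dB the noise carries as much power as the speech, and at −20 dB it carries one hundred times more.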

We followed ref. 47 to evaluate model robustness against speaker variations by calculating the average by-group mean score and by-group coefficient of variation using an utterance-level quality metric. Instead of using BLEU as the quality metric, we used chrF, which has better stability at the utterance level. The calculation of both robustness metrics does not require explicit speaker subgroup labels. We grouped evaluation samples and corresponding utterance-level chrF scores by content (transcript) and then calculated the average by-group mean score \(\mathrm{chrF}_{\mathrm{MS}}\) and average by-group coefficient of variation \(\mathrm{CoefVar}_{\mathrm{MS}}\), defined as follows:

$$\begin{array}{l}\mathrm{chrF}_{\mathrm{MS}}=\frac{1}{|G|}\sum\limits_{g\in G}\mathrm{Mean}(g)\\ \mathrm{CoefVar}_{\mathrm{MS}}=\frac{1}{|G^{\prime}|}\sum\limits_{g\in G^{\prime}}\frac{\text{Standard deviation}(g)}{\mathrm{Mean}(g)}\end{array}$$

where G is the set of sentence-level chrF scores grouped by content (transcript) and \(G^{\prime}=\{g\mid g\in G,\ |g|>1,\ \mathrm{Mean}(g)>0\}\). The two metrics are complementary: \(\mathrm{chrF}_{\mathrm{MS}}\) provides a normalized quality metric that, unlike conventional corpus-level metrics, takes speaker variations into consideration, whereas \(\mathrm{CoefVar}_{\mathrm{MS}}\) provides a standardized measure of quality variance under speaker variations. For robustness analysis, we conducted an out-of-domain evaluation on FLEURS on all languages that have at least 40 content groups in the test sets.
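Both metrics can be computed from a flat list of (transcript, chrF) pairs, as the sketch below shows. Two choices in it are assumptions rather than details from the text: the coefficient of variation is averaged only over groups with more than one sample and a positive mean, and the population standard deviation is used. The function name `speaker_robustness` is hypothetical.

```python
from collections import defaultdict
from statistics import mean, pstdev


def speaker_robustness(samples):
    """Return (chrF_MS, CoefVar_MS) from (transcript, chrf_score) pairs."""
    groups = defaultdict(list)
    for transcript, score in samples:
        groups[transcript].append(score)   # one group per distinct transcript
    all_groups = list(groups.values())

    # Average of the per-group mean scores.
    chrf_ms = mean(mean(g) for g in all_groups)

    # Groups where a coefficient of variation is defined (assumed filter).
    usable = [g for g in all_groups if len(g) > 1 and mean(g) > 0]
    coef_var_ms = (
        mean(pstdev(g) / mean(g) for g in usable) if usable else float("nan")
    )
    return chrf_ms, coef_var_ms
```

For example, two recordings of one sentence scoring 40 and 60 contribute a group mean of 50 and a coefficient of variation of 0.2, whereas a sentence recorded only once contributes to the mean score alone.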

Responsible AI

Toxicity detection

Inspired by ASR-BLEU, this work proposes ASR-ETOX as a new metric to detect added toxicity in speech and to evaluate added toxicity in the S2ST output of SEAMLESSM4T. Essentially, this metric follows a cascaded framework: it first applies a standard ASR module (that is, the same one that is used for ASR-BLEU, as defined in Supplementary Table 2) and then the toxicity detection module, ETOX27, which uses the Toxicity-200 word lists6. For S2TT, the translated output can be directly evaluated with ETOX. In both cases (S2ST and S2TT), we measured added toxicity at the utterance or sentence level. We first ran toxicity detection on each input in the evaluation dataset and on the corresponding output. Then, we compared them and counted a case as containing added toxicity only when the output value exceeded that of the input. Moreover, we used the recently proposed MuTox metric, which can be applied to text or speech output with no need for ASR. This classifier has been trained on toxicity-labelled speech and text data for 30 languages. As MuTox relies on SONAR embeddings29, it covers the same number of languages as SONAR by the zero-shot property. However, to account for validated quality, we report MuTox only for the languages that have been benchmarked29. Again, in both cases (S2ST and S2TT), we measured added toxicity at the utterance or sentence level. In this case, a sentence contains added toxicity if the MuTox score is >0.9 in the output and <0.5 in the input. We experimentally validated these thresholds with human bilingual speakers for several pairs of languages. For S2TT, we computed MuTox on the transcribed speech and the target text. For S2ST, we computed MuTox on the source and target speech.
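The two decision rules for added toxicity are simple comparisons and can be written down directly. In the sketch below the function names are hypothetical, and the inputs are assumed to be per-utterance toxicity values that have already been produced by ETOX (after ASR where needed) or by MuTox.

```python
from typing import Callable, Iterable, Tuple


def etox_added(input_value: float, output_value: float) -> bool:
    """Word-list rule: added toxicity only when the output exceeds the input."""
    return output_value > input_value


def mutox_added(input_score: float, output_score: float,
                high: float = 0.9, low: float = 0.5) -> bool:
    """Classifier rule: output score above 0.9 and input score below 0.5."""
    return output_score > high and input_score < low


def added_toxicity_rate(pairs: Iterable[Tuple[float, float]],
                        rule: Callable[[float, float], bool]) -> float:
    """Fraction of (input, output) pairs that the given rule flags."""
    pairs = list(pairs)
    return sum(rule(i, o) for i, o in pairs) / len(pairs)
```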

We implemented two techniques to mitigate added toxicity. Before training, we filtered out training pairs with imbalanced toxicity. Furthermore, we used MinTox11 at inference time. In particular, the main workflow generates a translation hypothesis with an unconstrained search. Then, the toxicity classifier is run on this hypothesis. If no toxicity is detected, we provide the translation hypothesis as it is. However, if toxicity is detected in the output, we run the classifier on the input. If the toxicity is unbalanced (that is, no toxicity is detected in the input), we re-run the translation with mitigation, which is the BEAMFILTERING step. BEAMFILTERING takes as input the multi-token expressions that should not appear in the output and excludes them from the beam-search hypotheses. Note that we do not apply mitigation when there is toxicity in the input (in other words, we do not deal with cases in which the input is toxic but the output is more toxic).
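The inference-time workflow is a short piece of control flow around the translation call. The sketch below mirrors the steps described above with hypothetical callables: `translate(source, banned)` stands for a decoder that excludes the banned expressions from beam search, and the two detectors return the toxic expressions they find. Passing the expressions detected in the first hypothesis as the banned list is an assumption.

```python
from typing import Callable, List


def translate_with_mitigation(
    source: str,
    translate: Callable[[str, List[str]], str],
    detect_source: Callable[[str], List[str]],
    detect_output: Callable[[str], List[str]],
) -> str:
    """Re-translate with banned expressions only when toxicity is added."""
    hypothesis = translate(source, [])      # unconstrained search
    detected = detect_output(hypothesis)
    if not detected:
        return hypothesis                   # clean output: keep as is
    if detect_source(source):
        return hypothesis                   # toxic input: no mitigation applied
    # Toxicity appears only in the output: filter it out of the beam.
    return translate(source, detected)
```

The source detector runs only after toxicity has been found in the output, so clean translations cost a single classifier call.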

We used two datasets to analyse added toxicity. First, we deployed FLEURS to better align with our human evaluation effort and other evaluative components of this work. Furthermore, we used the English-only HOLISTICBIAS framework77, which has been shown to trigger true added toxicity in previous studies27. In this work, we extend HOLISTICBIAS to speech by applying the default English TTS model from MMS23.

Speech extension of MULTILINGUAL HOLISTICBIAS

To compare the performances across modalities (S2ST and S2TT), we extended MULTILINGUAL HOLISTICBIAS to speech using the MMS TTS models23 (https://github.com/facebookresearch/fairseq/tree/main/examples/mms#tts-1). We used this generated TTS data as input for S2TT and S2ST and as a reference for S2ST. We conducted the translations in two directions: eng–X and X–eng. Concretely, in X–eng, we translated both masculine and feminine versions of the speech. It is worth noting that some target languages are not available in the SEAMLESSM4T S2ST model, so we performed translations on only 17 languages for the S2ST task in the eng–X direction. For S2TT in eng–X, we covered all languages included in the MULTILINGUAL HOLISTICBIAS dataset (n = 25). For reference, the complete language list used in our experiments can be found in Supplementary Table 26.

In terms of evaluation metrics, we used chrF for S2TT. For S2ST, we used ASR-chrF and BLASER 2.0. For ASR-chrF, the transcription was done by WHISPER-LARGE and WHISPER-MEDIUM20 for eng–X and X–eng, respectively, and chrF was calculated in the same way as for S2TT, except that in S2ST the text from both the prediction and the reference was normalized. It is worth noting that when evaluating on BLASER 2.0, we only included 14 languages (arb, cat, deu, eng, fra, nld, por, ron, rus, spa, swe, tha, ukr and urd) for the eng–X direction (overlapping languages from the generated TTS data and the languages available in our S2ST model).