Early-warning systems can drastically reduce the impacts of natural hazards. Informing people that a storm or flood is imminent can give individuals and governments precious time to prepare and alleviate the worst damage. The United Nations Early Warnings for All Initiative calls for every person on Earth to be protected by early-warning systems by the end of 2027. Yet, as of 2023, only 52% of nations had access to such measures1. Least-developed countries and small island states had even less access (46% and 39%, respectively), despite disproportionately experiencing the consequences.

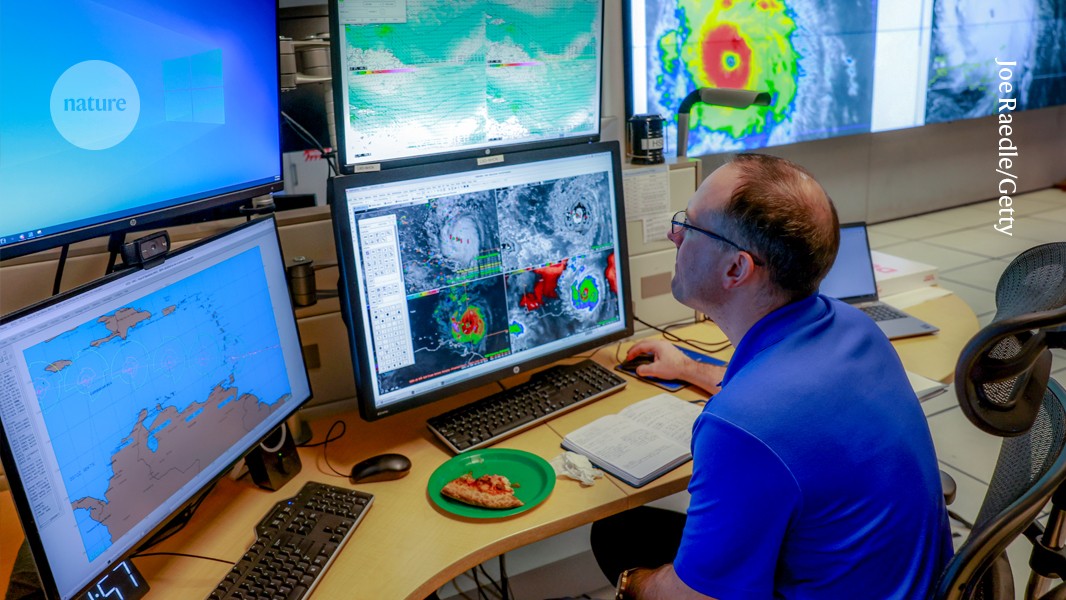

As part of a global effort to hit this target, researchers, the private sector and governments are increasingly turning to artificial intelligence (AI) technologies. They hope that these technologies will make early warnings more efficient, accurate, timely and user-friendly, and help to plug geographical gaps.

The increasing use of AI systems in the disaster-management domain brings promise but also risks. For example, because there tend to be more ground radar systems in wealthier regions, there can be biases in the data sets that AI algorithms are trained on to predict precipitation patterns. Such biases can put poorer regions at a disadvantage.

To address these risks, specialists and stakeholders must come together to provide standards — internationally agreed best practices — to govern AI-infused disaster-management tools. These standards should address everything from how data are collected and handled, to how algorithms are trained, tested and used.

Such standards can foster responsible and trustworthy AI, improve the scalability and interoperability of AI-based tools and clarify who is liable if an AI model does not perform as promised — issuing false alarms, for example, or failing to recommend an evacuation when one is needed.

Some countries and regions are making progress on developing such standards and regulations. For example, the European Union’s AI Act categorizes the use of AI technologies in early-warning systems as ‘high risk’, thus making it subject to strict regulations before products can enter the market. But internationally agreed standards in this realm are lacking. A starting point for such work is the Recommendation on the Ethics of Artificial Intelligence by the UN cultural organization UNESCO, adopted by its 193 member states in 2021, as well as the UN AI advisory body’s 2024 report Governing AI for Humanity.

The co-authors of this article have contributed to the Focus Group on AI for Natural Disaster Management — an effort spearheaded by the International Telecommunication Union (ITU) in partnership with the World Meteorological Organization and the UN Environment Programme (UNEP). Between 2020 and 2024, the focus group brought together specialists and stakeholders from across the UN, key intergovernmental and governmental agencies, the private sector, academia, research institutions and beyond to build a comprehensive view of opportunities and challenges when using AI for reducing disaster risks and to lay the groundwork for standards.

This focus group has made great progress but much more remains to be done; as AI technologies evolve, standards must be adapted. We need researchers and companies to provide information about how they apply AI, so we can further refine our best practices. We also need governments to be aware of our work, so that they can provide feedback and incorporate our best practices into their national policies.

AI to the rescue

There are many examples of how AI is enhancing the effectiveness of early warning: by forecasting and monitoring natural hazards, assessing the robustness of infrastructure and disseminating warnings.

Various companies released AI-based medium-range weather-forecasting models in 2023, including Google DeepMind in London, Huawei in Shenzhen, China, and Nvidia in Santa Clara, California. In terms of speed and precision, some of these models outperform conventional tools. Furthermore, AI is considered well suited to improving forecasting and monitoring of small-scale events, such as thunderstorms, which can include extreme rainfall or damaging hail and give rise to tornadoes.

Typhoon Yagi caused widespread floods and landslides in Myanmar in September. Credit: Sai Aung Main/AFP/Getty

Several other firms — including Pano AI in San Francisco, California, Fireball Information Technologies in Reno, Nevada, Dryad Networks in Berlin and OroraTech in Munich, Germany — have developed AI-based tools to spot smoke in images from satellites, drones or cameras on the ground. These tools contribute to timely wildfire warning. During extreme precipitation, rainfall can be monitored by combining AI with the line-of-sight communication links that are used in telecommunication networks2 or traffic-camera feeds3.

The extent of floods can be confirmed by combining satellite imagery with AI analyses. For instance, modelling firm RSS-Hydro in Kayl, Luxembourg (in partnership with the European Space Agency’s InCubed programme), is processing satellite imagery with AI to reconstruct floods that are hidden from view by cloud cover. NASA’s weather-related hazard information from synthetic aperture radar (HydroSAR) system, which includes a flood-monitoring service for the Hindu Kush Himalaya region, is also implementing AI to improve flood monitoring4.

Such AI forecasting and monitoring tools can be integrated into larger platforms. For example, the Mediterranean and pan-European Forecast and Early Warning System against Natural Hazards project, funded by the European Commission, uses the latest advancements in AI to develop a standardized system for risk and vulnerability assessment, decision-making and warning dissemination. This system will enhance existing capabilities, producing a fully integrated multi-hazard platform.

Others are using AI to help to monitor infrastructure — including telecommunications, utility and transport systems. These are both vulnerable and crucial during disasters: for instance, the collapse of telecommunications systems during the 2023 wildfires in Maui, Hawaii, impeded alerts and evacuations. Stockholm-based telecommunications company Ericsson is using drone footage combined with AI to inspect hard-to-reach radio towers. An international research group has trained an AI system to optimize the placement of traffic sensors in a hurricane-prone city in Florida to avoid excessive congestion during an evacuation5. And start-up firm QuakeSaver in Potsdam, Germany, is using smart seismic sensors with embedded AI to detect earthquakes and find vulnerabilities in buildings and other structures.

Furthermore, AI chatbots and translation tools can help to communicate warnings. The US National Weather Service has partnered with Lilt, an AI company in Emeryville, California, to automate the translation of forecasts and warnings from English into other languages, for example. And UNESCO has designed an AI chatbot that can answer questions from people affected by natural hazards (such as flooding or cyclones) in real time, using vetted information supplied by officials. The project, called the AI Chatbot and SMS Analysis for Disaster Risk Reduction, was used in 2021 to help people to navigate information about floods and droughts in South Sudan, Rwanda, Kenya, Uganda and Tanzania.

Recognizing the potential of AI in disaster-risk reduction, technology giant IBM and NASA collaborated to develop an AI model for this purpose, released in 2023. UNEP also launched a Digital Transformation Subprogramme, which aims to accelerate and scale up environmental sustainability (including disaster resilience) through digital technologies.

Lack of standards

All of this work shows the promise of AI for disaster-warning systems. However, AI tools created in the absence of international standards could suffer from a variety of problems, including data bias and a lack of compatibility or interoperability with one another. Because disasters can move across borders, such incompatibility is a lost opportunity for continuous early-warning coverage.

In 2022, our focus group published a road map6 of existing standards covering digital technologies and disaster risk-reduction measures. These were from the four main global standard-developing bodies — the ITU, the International Organization for Standardization, the International Electrotechnical Commission and the Institute of Electrical and Electronics Engineers — along with two regional organizations, the Asia-Pacific Telecommunity Standardization Program and the European Telecommunications Standards Institute (ETSI). We found 42 publicly available standards that address these topics, but only 4 mentioned AI.

Survivors of the 2023 Turkish earthquake receive meals from aid workers. Credit: Sercan Kucuksahin/Anadolu Agency/Getty

One of ETSI’s technical reports (a study of use cases and communications that involve Internet of Things devices in emergency situations) mentions how AI might be used at various steps in the process to, for example, build an enhanced view of an incident area for emergency responders. However, it does not contain specific advice on how AI should or should not be used. The 42 standards were much more likely to reference digital technologies other than AI, such as the Internet of Things, cloud computing or Earth observations by uncrewed aerial vehicles or drones.

To address these gaps, the focus group has spent the past three years researching this topic in depth. In addition to the road map, it has produced a glossary containing more than 500 terms and definitions7 alongside three technical reports8–10; convened a series of technical workshops and webinars; organized two hackathons; and published several reviews and commentaries11–13. These provide the groundwork for guidance on everything from data interoperability to AI training and transparency. They also discuss the importance of human oversight, fail-safes and human-centric design for providing safety and fostering trust in AI. Despite these efforts, however, more remains to be done.

Next steps

When laying the groundwork for standards, it is important that stakeholders from different regions contribute to the discussion. Each country has distinct values and priorities, and the standards will need to be used across borders. Participation might also encourage stakeholders to incorporate such standards into their own national legislation.

Another important aspect of standards is to support interoperability and scalability: helping to ensure that AI-based warning systems work well together and can be extended, where possible, to regions that need them. Standards should also guard against inappropriately applying a system developed for one region to another area where it might not work well. For Early Warnings for All, there is great interest among stakeholders in developing AI solutions that can be extended for use in countries that currently lack early-warning systems. But AI is not all-powerful and might not work well in regions where there are few observational networks or where no robust communications infrastructure exists.

AI systems for early warnings must be trustworthy. The underlying models should be interpretable, meaning that their behaviour can be understood directly by humans. Moreover, they should be explainable, providing detailed reasoning or justifications for their conclusions and recommendations. Transparency in the underlying data and methods is key to establishing trust with end users14.

Our focus group held its final meeting at the University of Maryland Baltimore County and NASA Goddard Space Flight Center in Greenbelt, Maryland, in March. But our work has not ended. The focus group is transitioning to an ITU-led Global Initiative on Resilience to Natural Hazards through AI Solutions, which will kick off in November. (To get involved, specialists in AI and in disaster management are invited to contact the secretariat of the focus group and initiative.) Several other UN organizations, including the Universal Postal Union and the UN Framework Convention on Climate Change (UNFCCC), have joined as partners. The initiative’s goals include identifying new AI use cases and updating technical reports, exploring advances in complementary technologies, doing deep dives on topics of relevance, developing proof-of-concept studies that incorporate our best practices and supporting capacity sharing. To improve capacity sharing, the global initiative is working with the UNFCCC to coordinate a side event at the COP 29 UN climate-change conference, scheduled for November in Azerbaijan.

Such efforts should help to ensure that AI-based early-warning systems are ethical and deployed justly. We run a risk of certain countries and regions benefiting from AI-based systems while others are left behind. Standards are the solution; we must not wait.