OpenAI’s latest artificial intelligence (AI) system dropped in September with a bold promise. The company behind the chatbot ChatGPT showcased o1 — its latest suite of large language models (LLMs) — as having a “new level of AI capability”. OpenAI, which is based in San Francisco, California, claims that o1 works in a way that is closer to how a person thinks than do previous LLMs.

The release poured fresh fuel on a debate that’s been simmering for decades: just how long will it be until a machine is capable of the whole range of cognitive tasks that human brains can handle, including generalizing from one task to another, abstract reasoning, planning and choosing which aspects of the world to investigate and learn from?

Such an ‘artificial general intelligence’, or AGI, could tackle thorny problems, including climate change, pandemics and cures for cancer, Alzheimer’s and other diseases. But such huge power would also bring uncertainty — and pose risks to humanity. “Bad things could happen because of either the misuse of AI or because we lose control of it,” says Yoshua Bengio, a deep-learning researcher at the University of Montreal, Canada.

The revolution in LLMs over the past few years has prompted speculation that AGI might be tantalizingly close. But given how LLMs are built and trained, they will not be sufficient to get to AGI on their own, some researchers say. “There are still some pieces missing,” says Bengio.

What’s clear is that questions about AGI are now more relevant than ever. “Most of my life, I thought people talking about AGI are crackpots,” says Subbarao Kambhampati, a computer scientist at Arizona State University in Tempe. “Now, of course, everybody is talking about it. You can’t say everybody’s a crackpot.”

Why the AGI debate changed

The phrase artificial general intelligence entered the zeitgeist around 2007 after it was used as the title of a book edited by AI researchers Ben Goertzel and Cassio Pennachin. Its precise meaning remains elusive, but it broadly refers to an AI system with human-like reasoning and generalization abilities. Fuzzy definitions aside, for most of the history of AI, it’s been clear that we haven’t yet reached AGI. Take AlphaGo, the AI program created by Google DeepMind to play the board game Go. It beats the world’s best human players at the game, but its superhuman qualities are narrow, because that’s all it can do.

The new capabilities of LLMs have radically changed the landscape. Like human brains, LLMs have a breadth of abilities that have caused some researchers to seriously consider the idea that some form of AGI might be imminent (ref. 1), or even already here.

This breadth of capabilities is particularly startling when you consider that researchers only partially understand how LLMs achieve it. An LLM is a neural network, a machine-learning model loosely inspired by the brain; the network consists of artificial neurons, or computing units, arranged in layers, with adjustable parameters that denote the strength of connections between the neurons. During training, the most powerful LLMs — such as o1, Claude (built by Anthropic in San Francisco) and Google’s Gemini — rely on a method called next token prediction, in which a model is repeatedly fed samples of text that has been chopped up into chunks known as tokens. These tokens could be entire words or simply a set of characters. The last token in a sequence is hidden or ‘masked’ and the model is asked to predict it. The training algorithm then compares the prediction with the masked token and adjusts the model’s parameters to enable it to make a better prediction next time.

The process continues — typically using billions of fragments of language, scientific text and programming code — until the model can reliably predict the masked tokens. By this stage, the model parameters have captured the statistical structure of the training data, and the knowledge contained therein. The parameters are then fixed and the model uses them to predict new tokens when given fresh queries or ‘prompts’ that were not necessarily present in its training data, a process known as inference.
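The training loop described above can be sketched in a few lines. This is a toy illustration only, not how any production LLM is built: it assumes a whitespace tokenizer, a tiny made-up corpus, and a single table of scores in place of a neural network. The names `tokenize`, `train` and `predict_next` are hypothetical.

```python
import math

def tokenize(text):
    # Toy tokenizer (assumption): one token per whitespace-separated word.
    return text.split()

def softmax(scores):
    # Subtract the maximum for numerical stability before exponentiating.
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def train(tokens, epochs=200, lr=0.1):
    vocab = sorted(set(tokens))
    index = {tok: i for i, tok in enumerate(vocab)}
    n = len(vocab)
    # Parameters: weights[i][j] scores token j as the successor of token i.
    weights = [[0.0] * n for _ in range(n)]
    for _ in range(epochs):
        # Hide each next token in turn, predict it, then adjust the parameters.
        for prev, target in zip(tokens, tokens[1:]):
            i, t = index[prev], index[target]
            probs = softmax(weights[i])
            for j in range(n):
                # Gradient of the cross-entropy loss with respect to score j.
                grad = probs[j] - (1.0 if j == t else 0.0)
                weights[i][j] -= lr * grad
    return vocab, index, weights

def predict_next(prev, vocab, index, weights):
    # Inference: parameters are fixed and only used to pick a likely token.
    probs = softmax(weights[index[prev]])
    best = max(range(len(vocab)), key=lambda j: probs[j])
    return vocab[best]

corpus = "the cat sat on the mat and the cat sat on the rug"
tokens = tokenize(corpus)
vocab, index, weights = train(tokens)
print(predict_next("the", vocab, index, weights))
```

In this corpus, "the" is followed by "cat" twice and by "mat" and "rug" once each, so the learnt scores favour "cat". A real model conditions on the whole preceding sequence rather than on one token.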

The use of a type of neural network architecture known as a transformer has taken LLMs significantly beyond previous achievements. The transformer allows a model to learn that some tokens have a particularly strong influence on others, even if they are widely separated in a sample of text. This permits LLMs to parse language in ways that seem to mimic how humans do it — for example, differentiating between the two meanings of the word ‘bank’ in this sentence: “When the river’s bank flooded, the water damaged the bank’s ATM, making it impossible to withdraw money.”
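The mechanism that lets one token influence another regardless of distance is usually called attention. The sketch below shows a single scaled dot-product attention step in plain Python. The token vectors are hand-picked for illustration (an assumption); in a real transformer they are learnt, and many such steps run in parallel across layers.

```python
import math

def softmax(scores):
    # Subtract the maximum for numerical stability before exponentiating.
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    # Score every token against the query, whatever its position in the text.
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    # The output is a weighted mix of the value vectors.
    width = len(values[0])
    mixed = [sum(w * v[c] for w, v in zip(weights, values)) for c in range(width)]
    return weights, mixed

# Hypothetical two-dimensional vectors: axis 0 stands for "water", axis 1 for "finance".
tokens = ["river", "the", "of", "and", "ATM"]
keys = [[4.0, 0.0], [0.0, 0.0], [0.0, 0.0], [0.0, 0.0], [0.0, 4.0]]
values = [[1.0, 0.0], [0.0, 0.0], [0.0, 0.0], [0.0, 0.0], [0.0, 1.0]]

# A query for one occurrence of "bank" that leans towards the "water" sense.
bank_query = [4.0, 0.0]
weights, mixed = attend(bank_query, keys, values)
print(dict(zip(tokens, (round(w, 4) for w in weights))))
```

Because the score depends only on how well the query matches each key, "river" receives almost all of the weight here no matter how many tokens separate it from "bank".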

This approach has turned out to be highly successful in a wide array of contexts, including generating computer programs to solve problems that are described in natural language, summarizing academic articles and answering mathematics questions.

And other new capabilities have emerged along the way, especially as LLMs have increased in size, raising the possibility that AGI, too, could simply emerge if LLMs get big enough. One example is chain-of-thought (CoT) prompting. This involves showing an LLM an example of how to break down a problem into smaller steps to solve it, or simply asking the LLM to solve a problem step-by-step. CoT prompting can lead LLMs to correctly answer questions that previously flummoxed them. But the process doesn’t work very well with small LLMs.
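The two flavours of CoT prompting described above differ only in the text sent to the model. The sketch below builds such prompts without calling any model. The exact wording, the worked example and the function names are illustrative assumptions, not a format prescribed by any LLM provider.

```python
def direct_prompt(question):
    # Baseline: ask for the answer with no reasoning cue.
    return f"Question: {question}\nAnswer:"

def cot_prompt(question, worked_example=None):
    # Optionally show one solved problem, then ask for step-by-step reasoning.
    parts = []
    if worked_example is not None:
        parts.append(worked_example.strip())
    parts.append(f"Question: {question}")
    parts.append("Answer: Let's think step by step.")
    return "\n\n".join(parts)

# Hypothetical worked example that breaks a problem into smaller steps.
example = (
    "Question: A shop sells pens at 3 for $2. How much do 12 pens cost?\n"
    "Answer: 12 pens is 4 groups of 3. Each group costs $2, so 4 x 2 = $8. "
    "The answer is $8."
)
question = "A train travels 60 km in 45 minutes. How far does it go in 2 hours?"

zero_shot = cot_prompt(question)
few_shot = cot_prompt(question, worked_example=example)
print(few_shot)
```

The first prompt simply asks for step-by-step reasoning; the second also demonstrates it on a solved problem before posing the new question.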

The limits of LLMs

CoT prompting has been integrated into the workings of o1, according to OpenAI, and underlies the model’s prowess. Francois Chollet, who was an AI researcher at Google in Mountain View, California, and left in November to start a new company, thinks that the model incorporates a CoT generator that creates numerous CoT prompts for a user query and a mechanism to select a good prompt from the choices. During training, o1 is taught not only to predict the next token, but also to select the best CoT prompt for a given query. The addition of CoT reasoning explains why, for example, o1-preview — the advanced version of o1 — correctly solved 83% of problems in a qualifying exam for the International Mathematical Olympiad, a prestigious mathematics competition for high-school students, according to OpenAI. That compares with a score of just 13% for the company’s previous most powerful LLM, GPT-4o.

But, despite such sophistication, o1 has its limitations and does not constitute AGI, say Kambhampati and Chollet. On tasks that require planning, for example, Kambhampati’s team has shown that although o1 performs admirably on tasks that require up to 16 planning steps, its performance degrades rapidly when the number of steps increases to between 20 and 40 (ref. 2). Chollet saw similar limitations when he challenged o1-preview with a test of abstract reasoning and generalization that he designed to measure progress towards AGI. The test takes the form of visual puzzles. Solving them requires looking at examples to deduce an abstract rule and using that to solve new instances of a similar puzzle, something humans do with relative ease.

LLMs, says Chollet, irrespective of their size, are limited in their ability to solve problems that require recombining what they have learnt to tackle new tasks. “LLMs cannot truly adapt to novelty because they have no ability to basically take their knowledge and then do a fairly sophisticated recombination of that knowledge on the fly to adapt to new context.”

Can LLMs deliver AGI?

So, will LLMs ever deliver AGI? One point in their favour is that the underlying transformer architecture can process and find statistical patterns in other types of information in addition to text, such as images and audio, provided that there is a way to appropriately tokenize those data. Andrew Wilson, who studies machine learning at New York University in New York City, and his colleagues showed that this might be because the different types of data all share a feature: such data sets have low ‘Kolmogorov complexity’, defined as the length of the shortest computer program that’s required to create them (ref. 3). The researchers also showed that transformers are well-suited to learning about patterns in data with low Kolmogorov complexity and that this suitability grows with the size of the model. Transformers have the capacity to model a wide swathe of possibilities, increasing the chance that the training algorithm will discover an appropriate solution to a problem, and this ‘expressivity’ increases with size. These are, says Wilson, “some of the ingredients that we really need for universal learning”. Although Wilson thinks AGI is currently out of reach, he says that LLMs and other AI systems that use the transformer architecture have some of the key properties of AGI-like behaviour.
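Kolmogorov complexity cannot be computed exactly, but the size of a compressed file gives a rough upper bound and conveys the intuition. The sketch below uses Python's `zlib` as that stand-in. This is an illustrative proxy chosen here, not the method used by Wilson and his colleagues, and the sample data are made up.

```python
import random
import zlib

def compressed_size(data):
    # Length of the zlib-compressed bytes: a crude upper bound on how short
    # a description of the data can be.
    return len(zlib.compress(data, 9))

# Structured data: a short rule ("repeat this sentence") generates all of it.
structured = b"the cat sat on the mat. " * 400

# Unstructured data of the same length, from a seeded pseudo-random generator.
rng = random.Random(0)
noise = bytes(rng.getrandbits(8) for _ in range(len(structured)))

print(len(structured), compressed_size(structured), compressed_size(noise))
```

The repetitive text shrinks to a small fraction of its original size, whereas the random bytes barely compress at all. Strictly speaking, the seeded noise also has a short generating program, which is one reason compression is only a proxy.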

Yet there are also signs that transformer-based LLMs have limits. For a start, the data used to train the models are running out. Researchers at Epoch AI, an institute in San Francisco that studies trends in AI, estimate (ref. 4) that the existing stock of publicly available textual data used for training might run out somewhere between 2026 and 2032. There are also signs that the gains being made by LLMs as they get bigger are not as great as they once were, although it’s not clear if this is related to there being less novelty in the data because so many have now been used, or something else. The latter would bode badly for LLMs.

Raia Hadsell, vice-president of research at Google DeepMind in London, raises another problem. The powerful transformer-based LLMs are trained to predict the next token, but this singular focus, she argues, is too limited to deliver AGI. Building models that instead generate solutions all at once or in large chunks could bring us closer to AGI, she says. The algorithms that could help to build such models are already at work in some existing, non-LLM systems, such as OpenAI’s DALL-E, which generates realistic, sometimes trippy, images in response to descriptions in natural language. But they lack LLMs’ broad suite of capabilities.

Build me a world model

The intuition for what breakthroughs are needed to progress to AGI comes from neuroscientists. They argue that our intelligence is the result of the brain being able to build a ‘world model’, a representation of our surroundings. This can be used to imagine different courses of action and predict their consequences, and therefore to plan and reason. It can also be used to generalize skills that have been learnt in one domain to new tasks by simulating different scenarios.

Several reports have claimed evidence for the emergence of rudimentary world models inside LLMs. In one study (ref. 5), researchers Wes Gurnee and Max Tegmark at the Massachusetts Institute of Technology in Cambridge claimed that a widely used open-source family of LLMs developed internal representations of the world, the United States and New York City when trained on data sets containing information about these places, although other researchers noted on X (formerly Twitter) that there was no evidence that the LLMs were using the world model for simulations or to learn causal relationships. In another study (ref. 6), Kenneth Li, a computer scientist at Harvard University in Cambridge, and his colleagues reported evidence that a small LLM trained on transcripts of moves made by players of the board game Othello learnt to internally represent the state of the board and used this to correctly predict the next legal move.

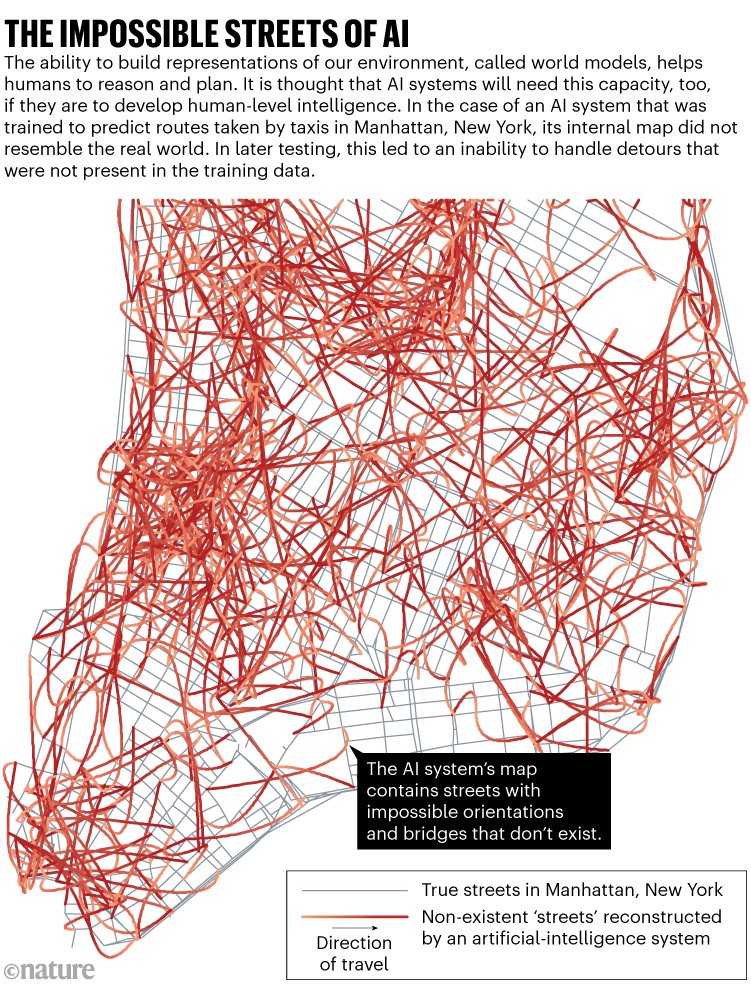

Other results, however, show how world models learnt by today’s AI systems can be unreliable. In one such study (ref. 7), computer scientist Keyon Vafa at Harvard University and his colleagues used a gigantic data set of the turns taken during taxi rides in New York City to train a transformer-based model to predict the next turn in a sequence, which it did with almost 100% accuracy.

By examining the turns the model generated, the researchers were able to show that it had constructed an internal map to arrive at its answers. But the map bore little resemblance to Manhattan (see ‘The impossible streets of AI’), “containing streets with impossible physical orientations and flyovers above other streets”, the authors write. “Although the model does do well in some navigation tasks, it’s doing well with an incoherent map,” says Vafa. And when the researchers tweaked the test data to include unforeseen detours that were not present in the training data, it failed to predict the next turn, suggesting that it was unable to adapt to new situations.

Source: Ref. 7

The importance of feedback

One important feature that today’s LLMs lack is internal feedback, says Dileep George, a member of the AGI research team at Google DeepMind in Mountain View, California. The human brain is full of feedback connections that allow information to flow bidirectionally between layers of neurons. This allows information to flow from the sensory system to higher layers of the brain to create world models that reflect our environment. It also means that information from the world models can ripple back down and guide the acquisition of further sensory information. Such bidirectional processes lead, for example, to perceptions, wherein the brain uses world models to deduce the probable causes of sensory inputs. They also enable planning, with world models used to simulate different courses of action.

But current LLMs are able to use feedback only in a tacked-on way. In the case of o1, the internal CoT prompting that seems to be at work — in which prompts are generated to help answer a query and fed back to the LLM before it produces its final answer — is a form of feedback connectivity. But, as seen with Chollet’s tests of o1, this doesn’t ensure bullet-proof abstract reasoning.

Researchers, including Kambhampati, have also experimented with adding external modules, called verifiers, onto LLMs. These check answers that are generated by an LLM in a specific context, such as for creating viable travel plans, and ask the LLM to rerun the query if the answer is not up to scratch (ref. 8). Kambhampati’s team showed that LLMs aided by external verifiers were able to create travel plans significantly better than were vanilla LLMs. The problem is that researchers have to design bespoke verifiers for each task. “There is no universal verifier,” says Kambhampati. By contrast, an AGI system that used this approach would probably need to build its own verifiers to suit situations as they arise, in much the same way that humans can use abstract rules to ensure they are reasoning correctly, even for new tasks.
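The generate-and-verify loop can be sketched as follows. Everything here is a hypothetical stand-in: `propose_plan` replaces a real LLM call with a fixed list of candidate itineraries, and `verify_plan` is a hand-written checker for one task, which is exactly the kind of bespoke verifier the researchers describe. It is not the system built by Kambhampati's team.

```python
def propose_plan(attempt):
    # Stand-in for an LLM (assumption): returns a different candidate per attempt.
    candidates = [
        {"stops": ["Paris", "Rome"], "cost": 1800, "days": 9},
        {"stops": ["Paris", "Lyon"], "cost": 1200, "days": 8},
        {"stops": ["Paris", "Lyon"], "cost": 900, "days": 5},
    ]
    return candidates[attempt % len(candidates)]

def verify_plan(plan, budget, max_days):
    # Task-specific verifier: returns a list of problems, empty if the plan passes.
    problems = []
    if plan["cost"] > budget:
        problems.append(f"cost {plan['cost']} exceeds budget {budget}")
    if plan["days"] > max_days:
        problems.append(f"{plan['days']} days exceeds limit of {max_days}")
    return problems

def plan_with_verifier(budget, max_days, max_attempts=5):
    # Keep asking for a new plan until one passes or the attempts run out.
    for attempt in range(max_attempts):
        plan = propose_plan(attempt)
        if not verify_plan(plan, budget, max_days):
            return plan, attempt + 1
    return None, max_attempts

plan, attempts = plan_with_verifier(budget=1000, max_days=7)
print(plan, attempts)
```

In a fuller version, the list of problems would be passed back to the model as part of the next query. Note that `verify_plan` knows only about budgets and trip lengths; a different task would need a different checker.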

Efforts to use such ideas to help produce new AI systems are in their infancy. Bengio, for example, is exploring how to create AI systems with different architectures to today’s transformer-based LLMs. One of these, which uses what he calls generative flow networks, would allow a single AI system to learn how to simultaneously build world models and the modules needed to use them for reasoning and planning.

Another big hurdle encountered by LLMs is that they are data guzzlers. Karl Friston, a theoretical neuroscientist at University College London, suggests that future systems could be made more efficient by giving them the ability to decide just how much data they need to sample from the environment to construct world models and make reasoned predictions, rather than simply ingesting all the data they are fed. This, says Friston, would represent a form of agency or autonomy, which might be needed for AGI. “You don’t see that kind of authentic agency, in say, large language models, or generative AI,” he says. “If you’ve got any kind of intelligent artefact that can select at some level, I think you’re making an important move towards AGI,” he adds.

AI systems with the ability to build effective world models and integrated feedback loops might also rely less on external data because they could generate their own by running internal simulations, positing counterfactuals and using these to understand, reason and plan. Indeed, in 2018, researchers David Ha, then at Google Brain in Tokyo, and Jürgen Schmidhuber at the Dalle Molle Institute for Artificial Intelligence Studies in Lugano-Viganello, Switzerland, reported (ref. 9) building a neural network that could efficiently build a world model of an artificial environment, and then use it to train the AI to race virtual cars.

If you think that AI systems with this level of autonomy sound scary, you are not alone. As well as researching how to build AGI, Bengio is an advocate of incorporating safety into the design and regulation of AI systems. He argues that research must focus on training models that can guarantee the safety of their own behaviour — for instance, by having mechanisms that calculate the probability that the model is violating some specified safety constraint and reject actions if the probability is too high. Also, governments need to ensure safe use. “We need a democratic process that makes sure individuals, corporations, even the military, use AI and develop AI in ways that are going to be safe for the public,” he says.
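The kind of mechanism Bengio describes, in which an action is rejected when its estimated probability of violating a safety constraint is too high, can be sketched as a simple filter. The risk numbers, the threshold and the function names below are made up for illustration; how to estimate such probabilities reliably is the hard, open part of the problem.

```python
def filter_actions(actions, estimate_violation_probability, threshold=0.01):
    # Split candidate actions into those allowed and those rejected as too risky.
    allowed, rejected = [], []
    for action in actions:
        risk = estimate_violation_probability(action)
        if risk <= threshold:
            allowed.append(action)
        else:
            rejected.append(action)
    return allowed, rejected

# Hypothetical risk estimates; a real system would have to compute these.
toy_risk = {
    "summarize report": 0.001,
    "send email to user": 0.005,
    "delete all backups": 0.6,
}

def estimate(action):
    # Treat unknown actions as maximally risky (a conservative assumption).
    return toy_risk.get(action, 1.0)

actions = ["summarize report", "delete all backups", "send email to user", "unknown step"]
allowed, rejected = filter_actions(actions, estimate)
print(allowed, rejected)
```

Defaulting unknown actions to a risk of 1.0 means the filter fails closed: anything the estimator cannot assess is rejected rather than waved through.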

So will it ever be possible to achieve AGI? Computer scientists say there is no reason to think otherwise. “There are no theoretical impediments,” says George. Melanie Mitchell, a computer scientist at the Santa Fe Institute in New Mexico, agrees. “Humans and some other animals are a proof of principle that you can get there,” she says. “I don’t think there’s anything particularly special about biological systems versus systems made of other materials that would, in principle, prevent non-biological systems from becoming intelligent.”

But even if it is possible, there is little consensus about how close its arrival might be: estimates range from just a few years from now to at least ten years away. If an AGI system is created, George says, we’ll know it when we see it. Chollet suspects it will creep up on us. “When AGI arrives, it’s not going to be as noticeable or as groundbreaking as you might think,” he says. “It will take time for AGI to realize its full potential. It will be invented first. Then, you will need to scale it up and apply it before it starts really changing the world.”