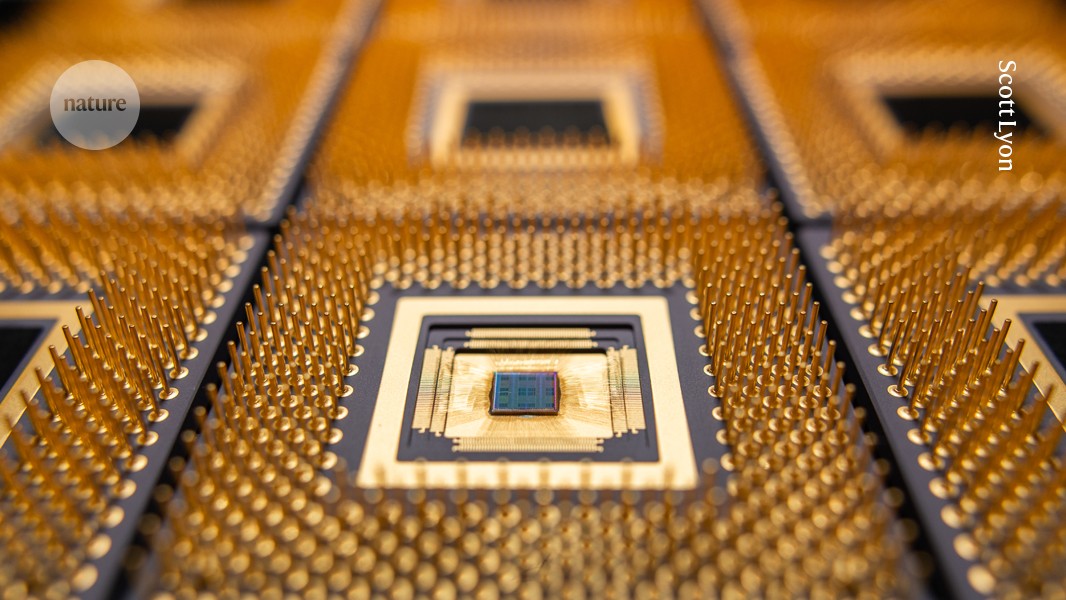

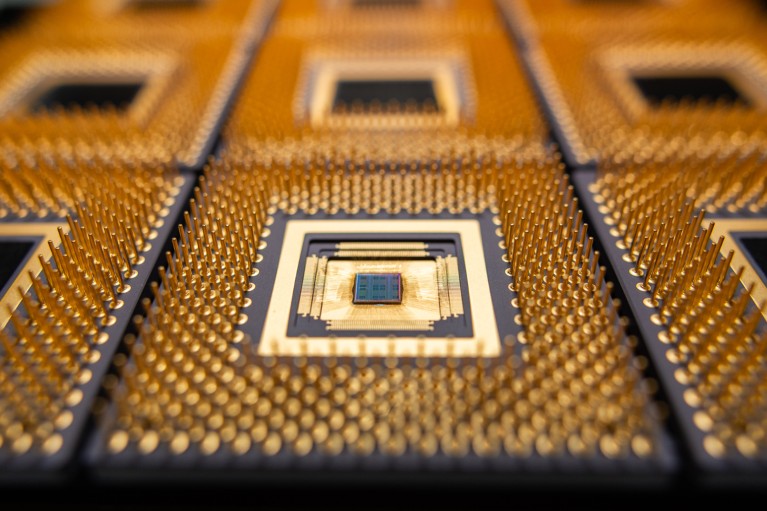

An advanced ‘in memory’ computing chip developed by researchers from Princeton University, New Jersey, and US firm EnCharge AI. Credit: Scott Lyon

In the late 1990s, some computer scientists realized that they were hurtling towards disaster. Makers of computer chips had been able to make computers more powerful on a reliable schedule by cramming ever more, ever smaller digital switches, called transistors, onto the chips’ processing cores and running them at ever greater speeds. But if speeds had kept increasing, the energy consumption of central processing units (CPUs) would have become unsustainable.

So manufacturers changed tack: rather than trying to toggle the transistors on and off faster, they added multiple processing cores to their chips. Dividing a task across two or more cores running at slower speeds brought more energy-efficient performance gains. The technology giant IBM released the first mainstream multicore computer processor in 2001; other leading chipmakers of the day, such as Intel and AMD, soon followed. Multicore chips drove continued progress in computing, making possible today’s laptops and smartphones.

Nature Outlook: Robotics and artificial intelligence

Now, some computer scientists say that the field is facing another reckoning, thanks to the increasing adoption of energy-hungry artificial intelligence (AI). Generative AI can create images and videos, summarize notes and write papers. But those capabilities are the result of machine-learning models that consume tremendous amounts of energy.

The energy required to train and operate these models could spell trouble for both the environment and progress in machine learning. “To lower the power consumption is key — otherwise we’ll see development stop,” says Hechen Wang, a research scientist at Intel Labs in Hillsboro, Oregon. Roy Schwartz, a computer scientist at the Hebrew University of Jerusalem, Israel, says he doesn’t want AI to become “a rich man’s tool”. The staff, infrastructure and particularly the power required to train generative AI models could limit who can create and use them — and only a handful of entities have the resources, he says.

At this point of potential crisis, many hardware designers see an opportunity to remake the basic blueprint of computer chips to make them more energy efficient. Doing so will not only help AI to work more efficiently in data centres, but also enable more AI tasks to be carried out directly on personal devices, for which battery life is often crucial. However, to convince industry to embrace such large architectural changes, researchers will have to show significant benefits.

Power up

According to the International Energy Agency (IEA), in 2022, data centres consumed 1.65 billion gigajoules of electricity — about 2% of global demand. Widespread deployment of AI will only increase electricity use. By 2026, the agency projects that data centres’ energy consumption will have increased by between 35% and 128% — amounts equivalent to adding the annual energy consumption of Sweden at the lower estimate or Germany at the top end.
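As a rough cross-check of the IEA numbers, the short Python sketch below converts the 2022 total into terawatt-hours (1 TWh is 3.6 × 10⁶ GJ) and applies the quoted 35% and 128% increases. It only restates the figures in the text, and the variable names are illustrative.

```python
GJ_PER_TWH = 3.6e6  # 1 TWh = 3.6e15 J = 3.6e6 GJ

def gj_to_twh(gigajoules):
    """Convert an energy in gigajoules to terawatt-hours."""
    return gigajoules / GJ_PER_TWH

baseline_twh = gj_to_twh(1.65e9)   # data centres, 2022 (IEA, as quoted)
low_twh = baseline_twh * 1.35      # +35% by 2026
high_twh = baseline_twh * 2.28     # +128% by 2026

print(f"2022: {baseline_twh:.0f} TWh")
print(f"2026 projection: {low_twh:.0f} to {high_twh:.0f} TWh")
print(f"increase: {low_twh - baseline_twh:.0f} to {high_twh - baseline_twh:.0f} TWh")
```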

Jelena Vuckovic developed a ‘waveguide’ that could improve the energy efficiency of chips. Credit: Craig Lee

One potential driver of this increase is the shift to AI-powered web searches. The precise consumption of existing AI algorithms is hard to pin down, but according to the IEA, a typical request to chatbot ChatGPT consumes 10 kilojoules — roughly ten times as much as a conventional Google search.
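To see how per-query energy scales, this sketch multiplies the IEA’s 10-kilojoule figure by a query volume. The volume of one billion queries per day is a hypothetical round number, not a reported statistic. The search figure is simply one-tenth of the chatbot figure, as implied by ‘roughly ten times’.

```python
J_PER_WH = 3600.0
J_PER_TWH = 3.6e15

chatbot_query_j = 10e3                   # IEA figure quoted in the text
search_query_j = chatbot_query_j / 10    # implied by "roughly ten times"
queries_per_day = 1e9                    # hypothetical volume, for illustration only

def annual_twh(joules_per_query, per_day):
    """Yearly energy, in TWh, for a steady daily query volume."""
    return joules_per_query * per_day * 365 / J_PER_TWH

print(f"one chatbot query: {chatbot_query_j / J_PER_WH:.2f} Wh")
print(f"per year, chatbot: {annual_twh(chatbot_query_j, queries_per_day):.2f} TWh")
print(f"per year, search:  {annual_twh(search_query_j, queries_per_day):.2f} TWh")
```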

Companies seem to have calculated that these energy costs are a worthy investment. Google’s 2024 environmental report revealed that its carbon emissions had increased by 48% in 5 years. In May, Brad Smith, president of Microsoft in Redmond, Washington, said that the company’s emissions had risen by 30% since 2020. Although nobody wants a huge energy bill, companies that make AI models are focused on attaining the best results. “Usually people don’t care about energy efficiency when you’re training the world’s largest model,” says Naresh Shanbhag, a computer engineer at the University of Illinois Urbana–Champaign.

The high energy consumption associated with training and operating AI models is due in large part to the reliance of these models on huge databases, and the cost of moving those data between computing and memory, in and between chips. When training a large AI model, up to 90% of the energy is spent accessing memory, says Subhasish Mitra, a computer scientist at Stanford University in California. A machine-learning model capable of identifying fruits in photographs is trained by showing the model as many example images as possible, and this entails moving a tremendous amount of data in and out of memory, repeatedly. Similarly, natural language processing models are not made by programming the rules of English grammar — instead, some of these models will have been trained by exposing them to most of the English-language material on the Internet. “As mindblowing as it might seem, we’re close to exhausting all text ever written by humans,” Schwartz says. Again, this means that training requires large amounts of data to be moved in and out of thousands of graphics processing units (GPUs).
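A toy energy model can illustrate the memory wall. In this model, total energy is the arithmetic cost plus the cost of moving data. When each byte fetched from memory costs far more than each operation, data movement dominates. The per-operation and per-byte energies below are hypothetical placeholders, not measured values. The two ‘reuse’ cases simply change how many bytes must be fetched for the same amount of arithmetic.

```python
def energy_breakdown(macs, bytes_moved, pj_per_mac, pj_per_byte):
    """Return (compute pJ, memory pJ, memory share of total) for a workload."""
    compute_pj = macs * pj_per_mac
    memory_pj = bytes_moved * pj_per_byte
    return compute_pj, memory_pj, memory_pj / (compute_pj + memory_pj)

# Hypothetical costs: fetching one byte from off-chip memory is assumed
# to cost 100 times as much as one multiply-accumulate (MAC).
PJ_PER_MAC = 1.0
PJ_PER_BYTE = 100.0

# The same 1e9 MACs, with poor and with good reuse of fetched data.
for label, bytes_moved in [("little reuse", 1e8), ("heavy reuse", 1e7)]:
    _, _, share = energy_breakdown(1e9, bytes_moved, PJ_PER_MAC, PJ_PER_BYTE)
    print(f"{label}: {share:.0%} of energy spent on memory access")
```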

The basic design of today’s computing systems, with separate processing and memory units, isn’t well suited for this mass movement of data. “The biggest problem is the memory wall,” says Mitra.

Tearing down the wall

The GPU is a popular choice for developing AI models. William Dally, chief scientist at Nvidia in Santa Clara, California, says that the company has improved the performance-per-watt of its GPUs 4,000-fold over the past ten years. The company continues to develop specialized circuits in these chips, called accelerators, that are designed for the kinds of calculation used in AI, but he does not expect drastic architectural changes. “I think GPUs are here to stay,” he says.

Introducing new materials, new processes and wildly different designs into a semiconductor industry that is projected to reach US$1 trillion in value by 2030 is a lengthy and challenging process. For companies such as Nvidia to take the risks, researchers will need to show major benefits. But to some researchers, the need for change is already clear.

They say that GPUs won’t be able to offer enough efficiency improvements to address AI’s growing energy consumption, and that they plan to have high-performance alternatives ready in the years ahead. “There are many start-ups and semiconductor companies exploring alternate options,” says Shanbhag. These new architectures are likely to first make their way into smartphones, laptops and wearable devices. This is where the benefits of new technology, such as being able to fine-tune AI models on the device using localized, personal data, are clearest, and where AI’s energy needs are most limiting.

Computing might seem abstract, but there are physical forces at work. Any time that electrons move through chips, some energy is dissipated as heat. Shanbhag is one of the early developers of a kind of architecture that seeks to minimize this wastage. Called computing in memory, these approaches include strategies such as placing an island of memory inside a computing core. This saves energy simply by shortening the distance data must travel. Researchers are also trying different approaches to computing, such as performing some operations in the memory itself.
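A first-order model shows why distance matters. The capacitance of an on-chip wire grows roughly in proportion to its length, and the energy drawn to charge it scales as C·V². The capacitance per millimetre, supply voltage and switching activity below are assumed placeholder values, not measurements of any particular chip. The point of the sketch is only the proportionality to length.

```python
def wire_energy_joules(length_mm, cap_f_per_mm=0.2e-12, vdd=0.8, activity=0.5):
    """First-order energy to send one bit down an on-chip wire.

    Capacitance grows linearly with wire length, and each charging
    transition draws roughly C * V^2 from the supply; `activity` is the
    fraction of bits that cause such a transition. All defaults are
    hypothetical placeholders, not measured values.
    """
    capacitance_f = cap_f_per_mm * length_mm
    return activity * capacitance_f * vdd ** 2

far = wire_energy_joules(10.0)   # e.g. across a large die to a separate memory block
near = wire_energy_joules(0.1)   # e.g. to a small memory island inside the core
print(f"far: {far * 1e15:.1f} fJ/bit, near: {near * 1e15:.2f} fJ/bit")
print(f"ratio: {far / near:.0f}x")
```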

To work in the energy-constrained environment of a portable device, some computer scientists are exploring what might sound like a giant step backward: analogue computing. The digital devices that have been synonymous with computing since the mid-twentieth century operate in a crisp world of on or off, represented as 1s and 0s. Analogue devices work with the in-between, and can store more data in a given area because they have access to a range of states — more computing bang is available from a given chip area.
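The storage advantage follows from counting states. A cell that can reliably distinguish N levels holds log₂ N bits, so a four-level cell stores two bits where a binary cell stores one. The sketch assumes evenly spaced levels on a normalized 0-to-1 scale, which is a simplification. Packing more levels into the same range also brings them closer together, which is why noise becomes a concern.

```python
import math

def bits_per_cell(levels: int) -> float:
    """Information a cell holds if it reliably distinguishes `levels` states."""
    return math.log2(levels)

def nearest_level(x: float, levels: int) -> float:
    """Snap a value in [0, 1] to the closest of `levels` evenly spaced states."""
    step = 1.0 / (levels - 1)
    return round(x / step) * step

for levels in (2, 4, 16):
    print(levels, "levels ->", bits_per_cell(levels), "bits;",
          "0.3 is stored as", round(nearest_level(0.3, levels), 3))
```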

States in an analogue device might be various forms of a crystal in a phase-change memory cell, or a continuum of charge levels in a resistive wire. Because the differences between analogue states can be smaller than the gap between the widely separated 1 and 0, it takes less energy to switch between them. “Analogue has higher energy efficiency,” says Intel’s Wang. The downside is that it is noisy, lacking the clarity of signal that makes digital computation robust. Wang says that AI models called neural networks are inherently tolerant to a certain level of error, and he’s exploring how to balance this trade-off. Some teams are working on digital in-memory computing, which avoids this issue but might not offer the energy benefits of analogue approaches.
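A toy experiment can illustrate the trade-off Wang describes. It adds random noise to every multiply-accumulate in a dot product and counts how often a simple sign-based decision changes. The Gaussian noise model, the 64-element random weight vector and the noise levels are all assumptions for illustration. Real analogue errors are more structured than this, and a real neural network has many layers.

```python
import random

def noisy_dot(weights, inputs, noise_sigma, rng):
    """Dot product in which every multiply-accumulate picks up Gaussian noise,
    a crude stand-in (an assumption) for analogue non-idealities."""
    return sum(w * x + rng.gauss(0.0, noise_sigma) for w, x in zip(weights, inputs))

def agreement_rate(noise_sigma, n_inputs=64, trials=1000, seed=0):
    """Fraction of random inputs for which the noisy and exact dot products
    have the same sign, i.e. give the same yes/no decision."""
    rng = random.Random(seed)
    weights = [rng.uniform(-1.0, 1.0) for _ in range(n_inputs)]
    agree = 0
    for _ in range(trials):
        x = [rng.uniform(-1.0, 1.0) for _ in range(n_inputs)]
        exact = sum(w * xi for w, xi in zip(weights, x))
        noisy = noisy_dot(weights, x, noise_sigma, rng)
        agree += (exact > 0) == (noisy > 0)
    return agree / trials

for sigma in (0.0, 0.01, 0.1, 0.5):
    print(f"noise sigma {sigma}: {agreement_rate(sigma):.1%} of decisions unchanged")
```

Small amounts of noise barely move the decision, and larger amounts erode it gradually. That gradual erosion is the kind of tolerance the trade-off relies on.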

Naveen Verma, an electrical engineer at Princeton University in New Jersey and founder and chief executive of start-up firm EnCharge AI in Santa Clara, expects that early applications for in-memory computing will be in laptops. EnCharge AI’s chips combine static random-access memory (SRAM) with capacitors made from crossed metal wires, which hold data in the form of different amounts of charge. SRAM can be made on silicon chips using existing processes, he says.

The chips, which are analogue, can run machine-learning algorithms at 150 tera operations per second (TOPS) per watt, compared with 24 TOPS per watt for an equivalent Nvidia chip performing an equivalent task. By upgrading to a semiconductor process technology that can trace finer chip features, Verma expects his technology’s energy efficiency to rise to about 650 TOPS per watt, more than four times the current figure.
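Efficiency in TOPS per watt is the inverse of the energy spent per operation, which is often easier to reason about. The conversion below applies this to the three figures quoted for EnCharge AI and Nvidia and prints the ratios between them. It adds no new data.

```python
def femtojoules_per_op(tops_per_watt: float) -> float:
    # 1 TOPS/W = 1e12 operations per joule = 1 pJ (1,000 fJ) per operation
    return 1000.0 / tops_per_watt

figures = {
    "EnCharge AI (quoted)": 150,
    "Nvidia comparison (quoted)": 24,
    "EnCharge AI target (quoted)": 650,
}
for name, tops_w in figures.items():
    print(f"{name}: {femtojoules_per_op(tops_w):.1f} fJ per operation")
print(f"current advantage: {150 / 24:.2f}x; projected gain: {650 / 150:.2f}x")
```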

Bigger companies are also exploring in-memory computing. In 2023, IBM described1 an early analogue AI chip that could perform matrix multiplication at 12.4 TOPS per watt. Dally says that Nvidia researchers have explored in-memory computing as well, but cautions that gains in energy efficiency might not be as great as they seem. These systems might use less power to do matrix multiplications, but the energy cost of converting data from digital to analogue and other overheads eat into those gains at the system level. “I haven’t seen any idea that would make it substantially better,” Dally says.
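Dally’s point about system-level overheads can be made concrete with a simple cost model for an analogue crossbar. The array performs rows × cols multiply-accumulates for each matrix-vector product. However, each input needs a digital-to-analogue conversion and each output needs an analogue-to-digital one. The per-event energies below are hypothetical, chosen only to show the shape of the effect. They are not figures from IBM, Nvidia or EnCharge AI.

```python
def effective_tops_per_watt(rows, cols, fj_per_mac, fj_per_dac, fj_per_adc):
    """System-level efficiency of one matrix-vector product on a rows x cols
    analogue crossbar (hypothetical cost model).

    The array performs rows * cols multiply-accumulates (MACs), but each
    input is first converted to analogue (one DAC per row) and each output
    is read back out (one ADC per column).
    """
    macs = rows * cols
    energy_fj = macs * fj_per_mac + rows * fj_per_dac + cols * fj_per_adc
    ops = 2 * macs  # count each MAC as two operations, a common convention
    joules = energy_fj * 1e-15
    return ops / joules / 1e12

# Hypothetical per-event energies, chosen only to illustrate the overhead.
core_only = effective_tops_per_watt(256, 256, fj_per_mac=1.0,
                                    fj_per_dac=0.0, fj_per_adc=0.0)
with_conversion = effective_tops_per_watt(256, 256, fj_per_mac=1.0,
                                          fj_per_dac=100.0, fj_per_adc=1000.0)
print(f"array alone: {core_only:.0f} TOPS/W; "
      f"with conversions: {with_conversion:.0f} TOPS/W")
```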

IBM’s Burns agrees that the energy cost of digital-to-analogue conversion is a major challenge. He says that the key is deciding whether the data should stay in analogue form when they are moved between parts of the chip, or whether it is better to transfer them in 1s and 0s. “What happens if we try to stay in analogue as much as possible?” he says.

Wang says that several years ago he would not have expected such quick progress in this field. But now he expects to see start-up firms bringing in-memory computing chips to market over the next few years.

A brighter future

The AI-energy problem has also invigorated work in photonics. Data travel more efficiently when encoded in light than along electrical wires, which is why optical fibres are used to bring high-speed Internet to neighbourhoods, and even to connect banks of servers in data centres. However, bringing these connections onto chips has been challenging, because optical devices have historically been bulky and sensitive to small temperature variations.

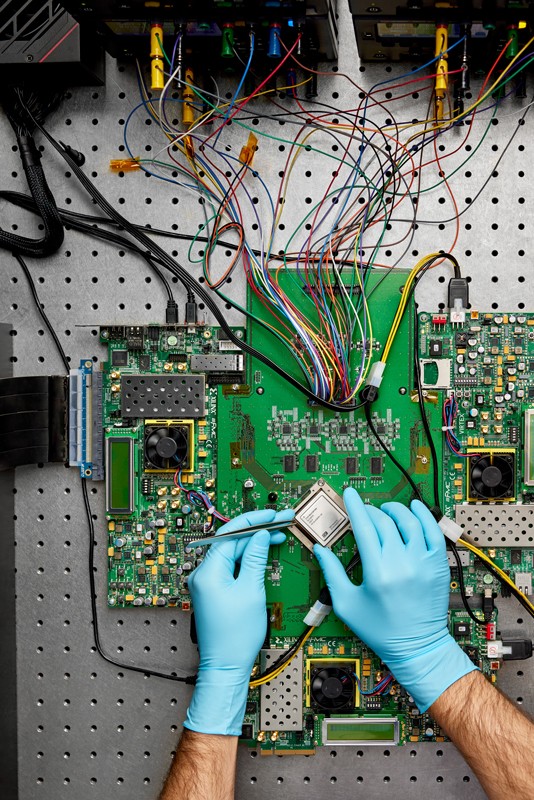

Hechen Wang tests the energy efficiency of a computer chip. Credit: Hechen Wang

In 2022, Jelena Vuckovic, an electrical engineer at Stanford University, developed a silicon waveguide for optical data transmission between chips2. Losses during electronic data transmission are on the order of one picojoule per bit of data (one picojoule is 10⁻¹² joules); for optics, it’s less than 100 femtojoules per bit (one femtojoule is 10⁻¹⁵ joules). That means Vuckovic’s device can transmit data at a given speed for about 10% of the energy cost of doing so electronically. The optical waveguide can also carry data on 400 channels by taking advantage of 100 different wavelengths of light and using optical interference to create four modes of transmission.

Vuckovic says that in the short term, optical waveguides could provide more energy-efficient connections between GPUs — she thinks that speeds of 10 terabytes per second will be possible. Some researchers hope to use optics not just to transmit data, but also to compute. In April, engineer Lu Fang at Tsinghua University in Beijing and her team described a photonic AI chip that they say can generate tunes in the style of German composer Johann Sebastian Bach and images in the style of Norwegian painter Edvard Munch while using less energy than a GPU would3. “This is the first optical AI system that could handle large-scale general-purpose intelligence computing,” says Zhihao Xu, who is a member of Fang’s lab. Called Taichi, this system can perform 160 TOPS per watt, which Xu says is a two to three orders of magnitude improvement in energy efficiency compared with a GPU.
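The per-bit energies quoted for electronic and optical links can be combined with the 10-terabytes-per-second speed that Vuckovic anticipates. The result gives a sense of the power at stake for a single GPU-to-GPU link. The sketch assumes decimal terabytes (10¹² bytes) and ignores every cost other than the quoted per-bit figure.

```python
ELECTRICAL_J_PER_BIT = 1e-12   # ~1 pJ per bit, as quoted
OPTICAL_J_PER_BIT = 100e-15    # <100 fJ per bit, as quoted (used as an upper bound)

def link_power_watts(bytes_per_second, joules_per_bit):
    """Power needed to sustain a data rate at a given energy cost per bit."""
    return bytes_per_second * 8 * joules_per_bit

rate = 10e12  # 10 terabytes per second
print(f"electrical: {link_power_watts(rate, ELECTRICAL_J_PER_BIT):.0f} W")
print(f"optical:    {link_power_watts(rate, OPTICAL_J_PER_BIT):.0f} W (at most)")
```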

Fang’s group is working on miniaturizing the system, which currently takes up about one square metre. However, Vuckovic expects that progress in all-optical AI will be hampered by the need to convert tremendous amounts of electronic data into optical versions — a project that would carry its own energy cost and could be impractical.

Stacking up

Stanford’s Mitra says that his dream computing system would have all of the memory and computing on the same chip. Today’s chips are mostly planar, but Mitra predicts that chips made up of 3D stacked computing and memory layers will be possible. These would be based on emerging materials that can be sandwiched, such as carbon-nanotube circuits. The closer physical proximity between memory and computing elements offers about 10–15% improvements in energy use, but Mitra thinks that this can be pushed much further.

Analogue AI chips are potentially more energy efficient than digital chips. Credit: Ryan Lavine for IBM

The big challenge facing 3D stacking is the need to change how chips are fabricated, which Mitra admits is a tall order. Chips are currently made mostly of silicon, using processes that run at extremely high temperatures. But 3D chips of the kind Mitra is designing must be made in milder conditions, so that building one layer doesn’t destroy the one underneath. Mitra’s team has shown it’s possible, layering a chip based on carbon nanotubes and resistive RAM (a kind of memory technology) on top of a silicon chip. This initial device, presented in 2023, has the same performance and power requirements as an analogous chip based on silicon technology4.

Reducing energy consumption considerably will require close collaboration between hardware and software engineers. One way to save energy is to very quickly turn off regions of the memory that are not in use so that they do not leak power, then turn them back on when they are needed. Mitra says that he’s seen big pay-offs when his team collaborates closely with programmers. For instance, when his team asked them to keep in mind that writing to a memory cell in their device costs more energy than does reading from it, they designed a training algorithm that yielded a 340-times improvement in system-level energy delay product (an efficiency metric that takes into account both energy consumption and execution speed). “In the old model, the algorithms people don’t need to know anything about the hardware,” says Mitra. That’s not the case any more.
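Energy-delay product is simply energy multiplied by execution time, so a change that cuts both is rewarded twice. The sketch below makes three assumptions. It uses a hypothetical memory in which writes cost ten times as much as reads. It treats accesses as happening one after another. Its access counts are made up. It is not a model of Mitra’s device or of his team’s algorithm.

```python
from dataclasses import dataclass

@dataclass
class MemoryCosts:
    read_pj: float
    write_pj: float
    read_ns: float
    write_ns: float

def energy_delay_product(reads, writes, c: MemoryCosts):
    """EDP = total energy x total time (here in pJ x ns); lower is better.
    Assumes accesses are serialized, so their latencies simply add."""
    energy = reads * c.read_pj + writes * c.write_pj
    delay = reads * c.read_ns + writes * c.write_ns
    return energy * delay

# Hypothetical memory in which a write costs 10x a read in energy and time.
costs = MemoryCosts(read_pj=1.0, write_pj=10.0, read_ns=1.0, write_ns=10.0)
naive = energy_delay_product(reads=1_000_000, writes=1_000_000, c=costs)
# A write-aware algorithm might trade extra reads for far fewer writes.
write_aware = energy_delay_product(reads=2_000_000, writes=100_000, c=costs)
print(f"EDP improvement: {naive / write_aware:.1f}x")
```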

“I think there’s going to be a convergence, where the chips get more efficient and powerful, and the models are going to get more efficient and less resource intensive,” says Raghavendra Selvan, a machine-learning researcher at the University of Copenhagen.

When it comes to training models, programmers could be more selective. Instead of continuing to train models on gigantic amounts of data, programmers might make more gains by training on smaller, tailored databases, saving energy and potentially getting a better model. “We need to think creatively,” Selvan says.

Schwartz is exploring the idea of saving energy by running small, ‘cheap’ models multiple times instead of an expensive one once. His group at the Hebrew University has seen some gains from this approach when using a large language model to generate code5. “If it generates ten outputs, and one of them passes, you’re better off running the smaller model than the larger one,” he says.
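The arithmetic behind Schwartz’s remark can be sketched with the standard pass@k idea. If each independent sample passes an automated test with probability p, then at least one of k samples passes with probability 1 - (1 - p)^k. The success rates and per-call costs below are hypothetical. Independence between samples is also an assumption.

```python
def pass_at_k(p: float, k: int) -> float:
    """Chance that at least one of k independent samples passes,
    if each passes with probability p."""
    return 1.0 - (1.0 - p) ** k

def cost_per_success(cost_per_sample: float, p: float, k: int) -> float:
    """Expected cost per solved task if batches of k samples are
    retried until at least one sample in a batch passes."""
    return cost_per_sample * k / pass_at_k(p, k)

# Hypothetical numbers: the small model is 20x cheaper per call
# but succeeds far less often on a single try.
small = cost_per_success(cost_per_sample=1.0, p=0.2, k=10)
large = cost_per_success(cost_per_sample=20.0, p=0.6, k=1)
print(f"small model, 10 samples: {small:.1f} cost units per solved task")
print(f"large model, 1 sample:   {large:.1f} cost units per solved task")
```

Stopping as soon as one sample passes, instead of always drawing all ten, would make the small model cheaper still. The sketch leaves that out for simplicity.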

Selvan, who has developed CarbonTracker, a tool for predicting the carbon footprint of deep-learning models, wants computer scientists to think more holistically about the costs of AI. Like Schwartz, he sees some ready fixes that have nothing to do with sophisticated chip technologies. Companies could, for instance, schedule AI training runs for times when electricity is being provided by renewable sources.
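Scheduling training for cleaner hours can be as simple as picking the lowest-carbon window in a forecast. The sketch below does this with a sliding window over hourly carbon-intensity values. The forecast numbers are invented, and the function name is hypothetical. The code is independent of CarbonTracker and does not use its API.

```python
def best_start_hour(intensity, duration):
    """Return (start hour, mean intensity) of the contiguous run of `duration`
    hours with the lowest total carbon intensity in an hourly forecast."""
    if not 0 < duration <= len(intensity):
        raise ValueError("duration must be between 1 and the forecast length")
    window = sum(intensity[:duration])
    best_total, best_start = window, 0
    for start in range(1, len(intensity) - duration + 1):
        # Slide the window by one hour: add the new hour, drop the oldest one.
        window += intensity[start + duration - 1] - intensity[start - 1]
        if window < best_total:
            best_total, best_start = window, start
    return best_start, best_total / duration

# Hypothetical hourly forecast in grams of CO2 per kWh (invented values).
forecast = [480, 450, 390, 260, 210, 230, 340, 420]
start, mean = best_start_hour(forecast, duration=3)
print(f"start at hour {start}; mean intensity {mean:.0f} gCO2/kWh")
```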

Indeed, support from the companies using this technology will be crucial to solving the problem. If AI chips become more energy efficient, they might simply be used more. To prevent this, some researchers are calling for greater transparency from the companies behind machine-learning models. “We no longer know what these companies are doing — what is the size of the tool, what was it trained on,” Schwartz says.

Sasha Luccioni, an AI researcher and climate lead at US firm Hugging Face, who is based in Montreal, Canada, wants model makers to provide more information on how AI models are trained, how much energy they use and what algorithm is running when a user queries a search engine or natural language tool. “We should be enforcing transparency,” she says.

Schwartz says that, between 2018 and 2022, the computational costs of training machine-learning models increased by a factor of ten every year. “If we follow the same path we’ve been on for years, it’s gloom and doom,” says Mitra. “But the opportunities are huge.”