Ethics statement: participants and consent/recruitment procedures

Data collection commenced after 23 April 2023, following Institutional Review Board approval from WCG Clinical (study number 1352290). All of the participants have provided their informed consent to the use of their data, and those who were image subjects further consented to have their identifiable images published.

We developed an informed consent form designed to comply with the EU’s GDPR46 and other similarly comprehensive data privacy regulations. Vendors were required to ensure that all image subjects (that is, both primary and secondary) provided signed informed consent forms when contributing their data. Vendors were also required to ensure that each image was associated with a signed copyright agreement to obtain the necessary IP rights in the images from the appropriate rightsholder. Only individuals above the age of majority in their country of residence and capable of entering into contracts were eligible to submit images.

All of the image subjects, regardless of their country of residence, have the right to withdraw their consent to having their images included in the dataset, with no impact to the compensation that they received for the images. This is a right that is not typically provided in pay-for-data arrangements nor in many data privacy laws beyond GDPR and GDPR-inspired regimes.

Data annotators involved in labelling or QA were given the option to disclose their demographic information as part of the study and were similarly provided informed consent forms giving them the right to withdraw their personal information. Some data annotators and QA personnel were crowdsourced workers, while others were vendor employees.

To validate English language proficiency, which was needed to understand the project’s instructions, terms of participation, and related forms, participants (that is, image subjects, annotator crowdworkers and QA annotator crowdworkers) were required to answer at least two out of three randomly selected multiple-choice English proficiency questions correctly from a question bank, with questions presented before project commencement. The questions were randomized to minimize the likelihood of sharing answers among participants. An example question is: “Choose the word or phrase which has a similar meaning to: significant” (options: unimportant, important, trivial).

To avoid possibly coercive data-collection practices, we instructed data vendors not to use referral programs to incentivize participants to recruit others. Moreover, we instructed them not to provide participants support (beyond platform tutorials and general technical support) in signing up for or submitting to the project. The motivation was to avoid scenarios in which the participants could feel pressured or rushed through key stages, such as when reviewing consent forms. We further reviewed project description pages to ensure that important disclosures about the project (such as the public sharing and use of the data collected, risks, compensation and participation requirements) were provided before an individual invested time into the project.

Image collection guidelines

Images and annotations were crowdsourced through external vendors according to extensive guidelines that we provided. Vendors were instructed to only accept images captured with digital devices released in 2011 or later, equipped with at least an 8-megapixel camera and capable of recording Exif metadata. Accepted images had to be in JPEG or TIFF format (or the default output format of the device) and free from post-processing, digital zoom, filters, panoramas, fisheye effects and shallow depth-of-field. Images were also required to have an aspect ratio of up to 2:1 and be clear enough to allow for the annotation of facial landmarks, with motion blur permitted only if it resulted from subject activity (for example, running) and did not compromise the ability to annotate them. Each subject was allowed to submit a maximum of ten images, which had to depict actual subjects, not representations such as drawings, paintings or reflections.
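
Several of these acceptance criteria are mechanical and could be screened automatically before manual review. The following is a minimal sketch only: the `ImageMeta` record and its field names are hypothetical, and the check covers just the device year, camera resolution, Exif availability and aspect-ratio rules, not format, post-processing or sharpness.

```python
from dataclasses import dataclass


@dataclass
class ImageMeta:
    """Hypothetical per-image metadata record (field names are assumptions)."""
    width: int
    height: int
    device_release_year: int
    camera_megapixels: float
    has_exif: bool


def meets_capture_requirements(meta: ImageMeta) -> bool:
    """Check the device and aspect-ratio rules described in the guidelines."""
    # Aspect ratio is taken as long side over short side, so it is always >= 1.
    aspect_ratio = max(meta.width, meta.height) / min(meta.width, meta.height)
    return (
        meta.device_release_year >= 2011   # device released in 2011 or later
        and meta.camera_megapixels >= 8    # at least an 8-megapixel camera
        and meta.has_exif                  # device records Exif metadata
        and aspect_ratio <= 2.0            # aspect ratio of up to 2:1
    )
```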

Submissions were restricted to images featuring one or two consensual image subjects, with the requirement that the primary subject’s entire body be visible (including the head, and a minimum of 5 body landmarks and 3 facial landmarks identifiable) in at least 70% of the images delivered by each vendor, and the head visible (with at least 3 facial landmarks identifiable) in all images. Vendors were also directed to avoid collecting images with third-party IP, such as trademarks and landmarks.

To increase image diversity, we requested that images ideally be taken at least 1 day apart and recommended that images submitted of a subject were taken over as wide a time span as possible, preferably at least 7 days apart. If images were captured less than 7 days apart, the subject had to be wearing different clothing in each image, and the images had to be taken in different locations and at different times of day. Our instructions to vendors requested minimum percentages for different poses to enhance pose diversity, but we did not instruct subjects to submit images with specific poses. Participants were permitted to submit previously captured images provided that they met all requirements.

Annotation categories and guidelines

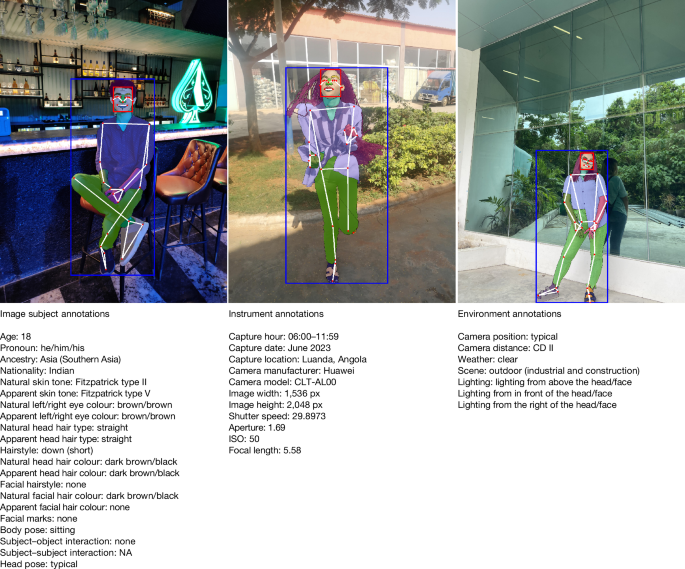

We provided extensive annotation guidelines to data vendors that included examples and explanations. A complete list of the annotations, their properties (including whether they were multiple-choice), categories and annotation methods is provided in Supplementary Information A.

A key component of our project was that most annotations were self-reported by the image subjects as they were best suited to provide accurate information about subject demographics and physical characteristics, interactions depicted and scene context. The only annotations that were not self-reported were those that could be objectively observed from the image itself and would benefit from the consistency offered by professional annotators (that is, pixel-level annotations, head pose and camera distance, as defined by the size of an image subject’s face bounding box). At the request of our data vendors, we also provided examples and guidance for subject–subject interactions, subject–object interactions and head pose, owing to ambiguities in those labels.

We included open-ended, free text options alongside closed-ended responses, enabling subjects to provide input beyond predefined categories. These open-ended responses were coded as ‘Not Listed’. For privacy reasons, we do not report the specific text provided by the subjects. This approach enabled subjects to express themselves more fully79,80, resulting in more accurate data and informing better question design for future data collection. Given the mutability of most attributes, annotations were collected on a per-image basis, except for ancestry.

For the pixel-level annotations, face bounding boxes were annotated following the protocol used for the WIDER FACE dataset81, a commonly used face detection dataset. Keypoint annotations were based on the BlazePose topology82, a composite of the COCO40, BlazePalm83 and BlazeFace84 topologies. While the 17-keypoint COCO topology is widely used in computer vision, it lacks definitions for hand and foot keypoints, making it less suitable for applications such as fitness compared to BlazePose. For person segmentation, we defined 28 semantic segmentation categories based on the most comprehensive categorical schemas for this task, including MHP (v.2.0)85, CelebAMask-HQ86 and Face Synthetics87. Finally, person bounding boxes were automatically derived from human segmentation masks by enclosing the minimum-sized box that contained the entirety of each person’s segmentation mask.
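
Deriving a person bounding box from a segmentation mask amounts to taking the extremes of the mask's non-zero pixel coordinates. The sketch below illustrates this with NumPy; the function name and the inclusive `(x_min, y_min, x_max, y_max)` convention are assumptions, not the project's actual implementation.

```python
from typing import Optional, Tuple

import numpy as np


def mask_to_bbox(mask: np.ndarray) -> Optional[Tuple[int, int, int, int]]:
    """Return the tightest box (x_min, y_min, x_max, y_max), inclusive,
    enclosing all non-zero pixels of a 2D mask, or None for an empty mask."""
    ys, xs = np.nonzero(mask)
    if ys.size == 0:
        return None
    return int(xs.min()), int(ys.min()), int(xs.max()), int(ys.max())
```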

Each annotator, QA annotator and QA specialist was assigned a unique identifier to link them to each annotation that they provided or reviewed, as well as any demographic information they chose to disclose. For annotation tasks involving multiple annotators, we provided the individual annotations from each annotator, rather than aggregated data. These annotations included those made before any vendor QA and those generated during each stage of vendor QA.

For our analyses, images with multiple annotations within a single attribute category (for example, ancestry subregion) are included in all relevant attribute value categories. For example, if an image subject is annotated with multiple ancestry subregions, the subject is counted in each of those subregions during analyses. Nested annotations—such as when a broad category is selected (for example, ‘Africa’ for ancestry)—are handled by counting the image subject in all corresponding subregions (for example, each subregion of ‘Africa’).
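
The counting rule can be sketched as follows. The hierarchy shown is a truncated, hypothetical example used only for illustration, and the function names are assumptions.

```python
from collections import Counter

# Hypothetical, truncated region -> subregion hierarchy (illustration only).
SUBREGIONS = {
    "Africa": ["Northern Africa", "Eastern Africa", "Western Africa"],
    "Asia": ["Eastern Asia", "Southern Asia"],
}


def expand_labels(labels):
    """Expand broad regions into all of their subregions; keep subregions as-is."""
    expanded = set()
    for label in labels:
        expanded.update(SUBREGIONS.get(label, [label]))
    return expanded


def count_by_subregion(subject_labels):
    """Count each subject once in every subregion their annotations map to."""
    counts = Counter()
    for labels in subject_labels.values():
        counts.update(expand_labels(labels))
    return counts
```

A subject annotated with both a broad region and one of its subregions is still counted only once per subregion, because labels are expanded into a set before counting.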

Quality control and data filtering

Quality control for images and annotations was conducted by both the vendors and our team. Vendor QA annotators handled the first round of checking images, annotations, and consent and IPR forms. For non-self-reported annotations, vendor QA workers were permitted to modify the annotation if incorrect. For imageable attributes (such as apparent eye colour, facial marks, apparent head hair type), they could provide their own annotations if they believed the annotations were incorrect, but this would not overwrite the original self-reported annotation (we report both annotations). Vendors were instructed not to QA non-imageable attributes (such as pronouns, nationality, natural hair colour), with the exception of height and weight if there were significant differences in the numbers for the same subject in images taken 48 h or less apart.

Moreover, we developed and ran various automated and manual checks to further examine the images and annotations delivered by the vendors. Our automated checks verified image integrity (for example, readability), resolution, lack of post-processing artifacts and sufficient diversity among images of the same subject. They also assessed annotation reliability by comparing annotations to inferred data (for example, verifying that a scene labelled as ‘outdoor’ corresponds with outdoor characteristics in the image), checked for internal consistency (for example, ensuring body keypoints are correctly positioned within body masks), identified duplicates and checked the images against existing images available on the Internet. Moreover, the automated testing checked for CSAM by comparing image hashes against the database of known CSAM maintained by the National Center for Missing & Exploited Children (NCMEC).

Images containing logos were automatically detected using a logo detector88 and the commercial logo detection API from Google Cloud Vision89. They were then excluded from FHIBE to avoid trademark issues. We used a detection score threshold of 0.6 to eliminate identified bounding boxes with low confidence, and the positive detection results were reviewed and filtered manually to avoid false positives. However, despite these efforts, logo detection remains a complex challenge due to the vast diversity of global designs, spatial orientation, partial occlusion, background artifacts and lighting variations. Even manual review can be inherently limited, as QA teams cannot be familiar with every logo worldwide and often face difficulty distinguishing between generic text and logos. Our risk-based approach to logo detection and removal was informed by the relatively low risk of IP harms posed by the inclusion of logos in our dataset. The primary concern is that individuals might mistakenly perceive a relationship between our dataset and the companies whose logos appear. However, this is mitigated by the academic nature of this publication and the clear disclosure of author and contributor affiliations.

Manual checks on the data were conducted predominantly by our team of QA specialists, as well as by authors. The QA specialists were a team of four contractors who worked with the authors to evaluate the quality of vendor-delivered data, and conduct corrections where needed. The QA specialists had a background in ML data annotation and QA work, and received training and extensive documentation regarding the quality standards and requirements for images and annotations for this project. Furthermore, they remained in direct contact with our team throughout the project, ensuring that they could clarify quality standards as needed.

The manual checks focused on ensuring the accuracy of annotations for imageable attributes, such as hair colour, scene context and subject interactions. Non-imageable attributes, representing social constructs, such as pronouns or ancestry, were not part of the visual content verification. Moreover, even though the probability of objectionable content (for example, explicit nudity, violence, hate symbols) was low given our sourcing method, instructions to data subjects and QA from vendors, we took the additional step of manually reviewing each image for such content given the public nature of the dataset.

Overall, to arrive at the 10,318 images for the initial launch of FHIBE, we collected a total of 28,703 images from three data vendors. As a result of initial internal assessments, 6,868 images were excluded due to issues with data quality and adherence to project specifications. Another 5,855 images were excluded for consent or copyright form issues. Of the remaining 15,980 images collected from vendors, approximately 0.07% were excluded for minor annotation errors (for example, missing skin colour annotations), 0.17% for offensive content (in free-text or visual content) and 0.01% for other reasons (for example, duplicated subject IDs) before the suspicious-pattern exclusions described in the following section.

Detection and removal of suspicious images

It was difficult to determine whether the people who submitted the images were the same as the subjects in the image while respecting the privacy of the subjects. There can be fraudulent actors who submit images of other people without their consent to be compensated by data vendors. Given the public and consent-driven nature of our dataset, we did not rely exclusively on vendors to detect and remove suspicious images. We used a combination of automated and manual checks to detect and remove images where we had reason to suspect the data subject(s) might not be the individual who submitted the image. Combining automated and manual checks, we removed 3,848 images from 1,718 subjects from the dataset.

For automated checks, we used Web Detect from Google Cloud Vision API89 to identify and exclude images that could have been scraped from the Internet. This was a conservative check as images found online could still have been consensually submitted to our project by the image subject. However, given the importance of consent for our project, and the use of the dataset for evaluation, we excluded these images out of an abundance of caution.

This check resulted in removing 321 images across 70 subjects, because we removed all images for a given subject if even a single one was found online. However, this automated approach had limitations. The Vision API had a high false-positive rate of 62% for our task (that is, matches to images that were only visually similar, owing to shared scene elements or popular landmarks). Google Web Detect returned limited results for images containing people and, in some cases, the returned matches focused on clothing items or the landmark. Furthermore, some social media images may not have been indexed by the Vision API because the websites required authentication.

We therefore also performed manual review methods for removing potentially suspicious images. Manual reviewers were instructed to track potentially suspicious patterns during their review of images and consent/copyright forms. For example, they were instructed to examine inconsistencies between self-reported and image metadata (for example, landmarks that contradicted the self-reported location). These patterns were later reviewed for exclusion by the research team.

Moreover, one of our QA specialists developed a manual process to find additional online image matches. The QA specialist used Google Lens to identify the location of the image. For images with distinctive locations (for example, not generic indoor locations or extremely popular tourist locations), the QA specialist performed a time-limited manual search to try to find image matches online. While we were not able to apply this time-intensive process to every image, using this approach, we were able to assess the risk level of different qualitative suspicious patterns and make additional exclusions.

After these exclusions, 2,017 subjects remained. From these subjects, we randomly sampled a set of 400 subjects and conducted the above manual QA process. In total, 14 subjects were found online while inspecting this sample, and we excluded them from the dataset. On the basis of this analysis, we estimated a baseline level of suspiciousness of 3.5 ± 1.7% with 95% confidence.
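
The text does not state which interval estimator was used. As a rough check, and assuming a normal (Wald) approximation for a binomial proportion, the sketch below computes the point estimate and the 95% margin of error, optionally with a finite-population correction for sampling 400 of 2,017 subjects. Both variants are assumptions rather than the documented method.

```python
import math


def proportion_margin(k, n, population=None, z=1.959964):
    """Return (p_hat, margin) for k positives out of n sampled items.

    Uses the Wald approximation; if `population` is given, applies the
    finite-population correction sqrt((N - n) / (N - 1)).
    """
    p_hat = k / n
    std_err = math.sqrt(p_hat * (1.0 - p_hat) / n)
    if population is not None:
        std_err *= math.sqrt((population - n) / (population - 1))
    return p_hat, z * std_err


p, margin_plain = proportion_margin(14, 400)
_, margin_fpc = proportion_margin(14, 400, population=2017)
```

Under these assumptions, the point estimate is 3.5% and the margin is roughly 1.8 percentage points without the correction and roughly 1.6 with it.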

It is important to note that removing suspicious images also had an impact on the demographic distribution of subjects in the dataset (Supplementary Information I). We found that excluded images were more likely to feature individuals of older ages, with lighter skin tones and of Europe/Americas/Oceania ancestry. While it is not possible for us to determine the true underlying reason why some people might have submitted fraudulent images, we can speculate that some of the ethical design choices of our dataset may have inadvertently incentivized fraudulent behaviours. For example, requiring vendors to pay at least the applicable local minimum wage may have encouraged people to falsely claim to be from regions with higher wages, submitting images from the Internet taken in those locations. Similarly, in our pursuit of diversity, our vendors found certain demographics were more difficult to obtain images of (for example, people of older ages). As such, higher compensation was offered for those demographics, increasing the incentives to fraudulently submit images featuring those demographics.

The priorities of our data collection project also made fraud more feasible and difficult to detect. Given that FHIBE is designed for fairness evaluation, we sought to maximize visual diversity and collect naturalistic (rather than staged) images. As a result, we opted for a crowd-sourcing approach and allowed subjects to submit past photos. Compared with in-person data collection or bespoke data collection in which the setting, clothing, poses or other attributes might be fixed or specified, it was more difficult for our project to verify that the images were intentionally submitted by the data subject for our project. We therefore encourage dataset curators to consider how their ethical goals may inadvertently attract fraudulent submissions.

Annotation QA

We verified the quality of both pixel-level annotations and imageable categorical attribute annotations using two methods. First, we compared the vendor-provided annotations with the average annotations from three of our QA specialists on a randomly sampled set of 500 images for each annotation type. For pixel-level annotations, agreement between the collected annotations and the QA specialist annotations was above 90% (Supplementary Information E), a level similar to or higher than that of related works90,91,92, showing the robustness and quality of our collected annotations.

Second, we assessed intra- and inter-vendor annotation consistency by obtaining three sets of annotations for the same 70 images from each vendor. Within each vendor, each image was annotated and reviewed three times by different annotators. To ensure independent assessments, no individual annotator reviewed the same annotation for a given image instance, resulting in mutually exclusive outputs from each labelling pipeline. For dense prediction annotations, intra- and inter-vendor agreement is above 90%, confirming the high quality of the collected annotations. For attribute annotations, intra-vendor agreement is above 80% and inter-vendor agreement is at 70%, which indicates that these labels are noisier than the dense prediction annotations (Supplementary Information E).

Regarding metrics for these comparisons, for bounding boxes, we computed the mean intersection over union between the predicted and ground truth bounding boxes. For keypoints, we computed object keypoint similarity93. For segmentation masks, we computed the Sørensen–Dice coefficient94,95. For categorical attributes (for example, hair type, hairstyle, body pose, scene, camera position), we computed the pairwise Jaccard similarity coefficient96 and then the average. Using these analyses, we were able to verify the consistency of the annotations between vendors and our QA specialists, within individual vendors and between different vendors.
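
For reference, three of these agreement measures can be sketched in a few lines. This is an illustrative sketch using the standard definitions, not the evaluation code used for the paper; the function names and the box convention `(x_min, y_min, x_max, y_max)` are assumptions, and object keypoint similarity is omitted.

```python
from itertools import combinations

import numpy as np


def box_iou(a, b):
    """Intersection over union of two boxes (x_min, y_min, x_max, y_max)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def dice(mask_a, mask_b):
    """Sorensen-Dice coefficient of two binary masks: 2|A n B| / (|A| + |B|)."""
    a, b = np.asarray(mask_a, dtype=bool), np.asarray(mask_b, dtype=bool)
    total = a.sum() + b.sum()
    # Two empty masks are treated as identical (a convention, not from the source).
    return float(2.0 * np.logical_and(a, b).sum() / total) if total else 1.0


def mean_pairwise_jaccard(label_sets):
    """Average Jaccard similarity |A n B| / |A u B| over all pairs of label sets."""
    if len(label_sets) < 2:
        raise ValueError("need at least two label sets")
    scores = []
    for a, b in combinations(label_sets, 2):
        a, b = set(a), set(b)
        scores.append(len(a & b) / len(a | b) if (a | b) else 1.0)
    return sum(scores) / len(scores)
```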

Privacy assurance

We used a text-guided, fine-tuned stable diffusion model47 from the HuggingFace Diffusers library97 to inpaint regions identified by annotator-generated bounding boxes and segmentation masks containing incidental, non-consensual subjects or personally identifiable information (for example, license plates, identity documents). The model was configured with the following parameters: (1) text prompt: “a high-resolution image with no humans or people in it”; (2) negative text prompt: “human, people, person, human body parts, android, animal”; (3) guidance scale: randomly sampled from a uniform distribution, w ~ U(12, 16); (4) denoising steps: 20; and (5) variance control: η = 0, enabling the diffusion model to function as a denoising diffusion implicit model98.
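
The listed configuration maps onto the call arguments of an inpainting pipeline in the Diffusers library. The sketch below is illustrative only: it assumes `pipe` is a `StableDiffusionInpaintPipeline` using a DDIM scheduler (for which `eta` is the variance parameter), and it does not name a checkpoint because the fine-tuned model is not identified in the text. The helper names are hypothetical.

```python
import random

PROMPT = "a high-resolution image with no humans or people in it"
NEGATIVE_PROMPT = "human, people, person, human body parts, android, animal"


def sample_inpaint_kwargs(rng: random.Random) -> dict:
    """Build per-image call arguments matching the parameters listed above."""
    return {
        "prompt": PROMPT,
        "negative_prompt": NEGATIVE_PROMPT,
        "guidance_scale": rng.uniform(12.0, 16.0),  # w ~ U(12, 16)
        "num_inference_steps": 20,                  # denoising steps
        "eta": 0.0,                                 # eta = 0 gives deterministic DDIM sampling
    }


def inpaint_region(pipe, image, mask, rng: random.Random):
    """Inpaint the masked region of `image`.

    `pipe` is assumed to be a diffusers StableDiffusionInpaintPipeline;
    `image` and `mask` are PIL images, with white mask pixels marking
    the area to be regenerated.
    """
    result = pipe(image=image, mask_image=mask, **sample_inpaint_kwargs(rng))
    return result.images[0]
```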

We also manually reviewed the images to ensure the correct removal of personally identifiable information and to identify any redaction artifacts. Around 10% of images had some content removed and in-painted. To evaluate any potential loss in data utility, we compared performance on a subset of tasks (that is, pose estimation, person segmentation, person detection and face detection) before and after removal and in-painting. No significant performance differences were observed.

To further address possible privacy concerns with the public disclosure of personal information, a subset of the attributes of consensual image subjects (that is, biological relationships to other subjects in a given image, country of residence, height, weight, pregnancy and disability/difficulty status) are reported only in aggregate form. Moreover, the date and time of image capture were coarsened to the approximate time and month of the year. Subject and annotator identifiers were anonymized, and Exif metadata from the images were stripped.

Consent revocation

We are committed to upholding the right of human participants to revoke consent at any time and for any reason. As long as FHIBE is publicly available, we will remove images and other data when consent is revoked. If possible, the withdrawn image will be replaced with one that most closely matches key attributes, such as pronoun, age group and regional ancestry. To the extent possible, we will also consider other features that could impact the complexity of the image for relevant tasks when selecting the closest match.

FHIBE derivative datasets

We release both the original images and downsampled versions in PNG format. The downsampled images were resized to have their largest side set to 2,048 pixels while maintaining the original aspect ratio. These downsampled versions were used in our analyses to prevent memory overflows when feeding images to the downstream models.
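
The target size for a downsampled image follows directly from the longest side. A minimal sketch is given below; the function name is hypothetical, and leaving images that are already smaller than the limit unchanged is an assumption, since the text only describes downsampling.

```python
def downsampled_size(width: int, height: int, max_side: int = 2048):
    """Return (new_width, new_height) with the longest side equal to
    `max_side`, preserving the aspect ratio."""
    longest = max(width, height)
    if longest <= max_side:
        # Assumption: images within the limit are not upsampled.
        return width, height
    scale = max_side / longest
    return max(1, round(width * scale)), max(1, round(height * scale))
```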

FHIBE also includes two face datasets created from the original images (that is, not the downsampled versions), both in PNG format: a cropped-only set and a cropped-and-aligned set. These datasets feature both primary and secondary subjects. For the cropped-and-aligned set, we followed a procedure similar to existing datasets99,100 by cropping oriented rectangles based on the positions of two eye landmarks and two mouth landmarks. These rectangles were first resized to 4,096 × 4,096 pixels using bilinear filtering and then downsampled to 512 × 512 pixels using Lanczos filtering101. Only faces with visible eye and mouth landmarks were included in the final cropped-and-aligned set.

For the cropped-only set, facial regions were directly cropped based on the face bounding box annotations, with each bounding box enlarged by a factor of two to capture all necessary facial pixels. This set includes images with resolutions ranging from 85 × 144 to 5,820 × 8,865 pixels. If facial regions extended beyond the original image boundaries, padding was applied using the mean value along each axis for both face derivative datasets.
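
The enlarge-then-pad step can be sketched with `numpy.pad`, whose `mode="mean"` fills each padded strip with the mean of the values along the corresponding axis. The sketch assumes the box is enlarged about its centre, that the factor applies to each side length, and that the image is an H × W × C array; these details are not specified in the text.

```python
import numpy as np


def crop_enlarged(image: np.ndarray, box, factor: float = 2.0) -> np.ndarray:
    """Crop `box` = (x_min, y_min, x_max, y_max) enlarged by `factor`
    about its centre, mean-padding where the crop leaves the image."""
    height, width = image.shape[:2]
    x0, y0, x1, y1 = box
    cx, cy = (x0 + x1) / 2.0, (y0 + y1) / 2.0
    half_w = factor * (x1 - x0) / 2.0
    half_h = factor * (y1 - y0) / 2.0
    left, right = int(round(cx - half_w)), int(round(cx + half_w))
    top, bottom = int(round(cy - half_h)), int(round(cy + half_h))

    # Amount by which the enlarged box extends past each image border.
    pad_left, pad_top = max(0, -left), max(0, -top)
    pad_right, pad_bottom = max(0, right - width), max(0, bottom - height)

    padded = np.pad(
        image,
        ((pad_top, pad_bottom), (pad_left, pad_right), (0, 0)),
        mode="mean",
    )
    # Shift crop coordinates into the padded image's frame.
    return padded[top + pad_top: bottom + pad_top, left + pad_left: right + pad_left]
```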

Datasets for fairness evaluation

We evaluated FHIBE’s effectiveness as a fairness benchmarking dataset by comparing it against several representative human-centric datasets commonly used in the computer vision literature. These datasets were selected based on their relevance to fairness evaluation, the availability of demographic annotations, and/or their use in previous fairness-related studies. Our analysis is limited to datasets that are publicly available; we did not include datasets that have been discontinued, like the JANUS program datasets (IJB-A, IJB-B, IJB-C, IJB-D)102. The results are shown in Supplementary Information F.

COCO is constructed from the MS-COCO 2014 validation split40, COCO Caption Bias103 and COCO Whole Body104 datasets. We used the images and annotations from the MS-COCO 2014 validation set, and added the perceived gender and skin tone (dark, light) annotations from COCO Caption Bias, excluding entries for which the label was ‘unsure’. We then used COCO Whole Body to filter the dataset for images containing at least one person bounding box. After filtering, this dataset contained 1,355 images with a total of 2,091 annotated person bounding boxes.

FACET24 is a benchmark and accompanying dataset for fairness evaluation, consisting of 32,000 images and 50,000 subjects, with annotations for attributes like perceived skin tone (using the Monk scale105), age group and perceived gender. For our evaluations, we used 49,500 person bounding box annotations and 17,000 segmentation masks, spread across 31,700 images.

Open Images MIAP42 is a set of annotations for 100,000 images from the Open Images Dataset, including attributes such as age presentation and gender presentation. In our evaluations, we used the test split, excluding images for which the annotations of age or gender are unknown, as well as the ‘younger’ category—to ensure that only adults were included in the evaluation. With this filtering, we used a set of 13,700 images with 36,000 associated bounding boxes and masks.

WiderFace81 is a face detection benchmark dataset containing images and annotations for faces, including the attributes perceived gender, age, skin tone, hair colour and facial hair. We used the validation split in our evaluations after excluding annotations for which perceived gender, age and skin tone were marked as ‘Not Sure’. After the filtering, we used a set of 8,519 face annotations across 2,856 files.

CelebAMask-HQ86 consists of 30,000 high-resolution face images of size 512 × 512 from the CelebA-HQ dataset, which were annotated with detailed segmentation of facial components across 19 classes. From this dataset, we used the test split in our evaluations, consisting of 2,824 images with binarized attributes for age, skin colour and gender.

CCv1106 contains 45,186 videos from 3,011 participants across five US cities. Self-reported attributes include age and gender, with trained annotators labelling apparent skin tone using the Fitzpatrick scale. For dataset statistics, we extracted a single frame per video. For Vendi score computation, we used 10 frames per video.

CCv226 contains 26,467 videos from 5,567 participants across seven countries. Self-reported attributes include age, gender, language, disability status and geolocation, while annotators labelled skin tone (Fitzpatrick and Monk scales), voice timbre, recording setups and per-second activity. For dataset statistics, we extracted a single frame per video. For Vendi score computation, we used three frames per video.

IMDB-WIKI107 is a dataset of public images of actors crawled from IMDB and Wikipedia. The images were captioned with the date taken so that age could be labelled. From this dataset, we randomly sampled 10% to use for the face verification task, resulting in 17,000 images.

Narrow models for evaluation

To assess the use of FHIBE and FHIBE face datasets, we compared the performance of specialized narrow models (spanning eight classic computer vision tasks) using both FHIBE and pre-existing benchmark datasets as listed above. As FHIBE is designed only for fairness evaluation and mitigation, we did not train any models from scratch. Instead, we evaluated existing, pretrained state-of-the-art models on our dataset to assess their performance and fairness. The results are shown in Supplementary Information F.

Pose-estimation models aim to locate face and body landmarks in cropped and resized images derived from ground truth person bounding boxes, following108,109,110. For this task, we used Simple Baseline108, HRNet109 and ViTPose110, all of which were pretrained on the MS-COCO dataset40.

Person-segmentation models generate segmentation masks that label each pixel of the image with specific body parts or clothing regions of a person. For this task, we used Mask RCNN111, Cascade Mask RCNN112 and Mask2Former113, all of which were trained on the MS-COCO dataset40.

Person-detection models identify individuals from images by relying on object detection models, retaining only the outputs for the class ‘person’. For this task, we used DETR114, Faster RCNN115, Deformable DETR116 and DDOD117 with the ResNet-50 FPN115 backbone, all of which were trained on the MS-COCO dataset40.

Face-detection models locate faces in images by predicting bounding boxes that encompass each detected face. For this task, we used the MTCNN118 model trained on VGGFaces2119 and the RetinaFace120 model trained on WiderFace81 using publicly available source code121,122.

Face-segmentation models generate pixel-level masks that classify facial regions into specific facial features (such as eyes, nose, mouth or skin) or background, enabling detailed facial analysis and manipulation. For this task, we used the DML CSR123 model trained on CelebAMask-HQ86.

Face-verification models determine whether two face images belong to the same person by comparing their facial features against a preset similarity threshold. For extracting facial features, we used FaceNet62 trained on VGGFaces2119, and ArcFace60 and CurricularFace61, both trained on refined MS-Celeb-1M124, using publicly available implementations61,121,125.

Face-reconstruction models encode facial images into latent codes and decode these codes back into images, enabling controlled manipulation of facial attributes. For this task, we used ReStyle126 applied over e4e127 and pSp128, and trained on FFHQ99.

Face super-resolution models generate high-resolution facial images from low-resolution inputs, enhancing facial details and overall image quality. For this task, we used GFP-GAN129 and GPEN130, trained on FFHQ99.

Narrow model evaluation metrics

We used the standard metrics reported in the literature to assess the performance of the narrow models on different tasks.

For pose estimation, we reported the percentage of correct keypoints at a normalized distance of 50% of head length (PCKh@0.5)131, which measures the proportion of predicted landmarks (keypoints) falling within a radius of 0.5 × head length from their true positions.
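
A minimal sketch of this metric for a single subject is shown below. The function name is hypothetical, and how head length is measured (for example, from a head bounding box) is left to the caller because it is not specified here.

```python
import numpy as np


def pckh(pred, gt, head_length, visible=None, alpha=0.5):
    """Fraction of keypoints whose prediction lies within
    alpha * head_length of the ground-truth position.

    pred, gt: arrays of shape (num_keypoints, 2).
    visible: optional boolean array selecting the keypoints to score.
    """
    pred = np.asarray(pred, dtype=float)
    gt = np.asarray(gt, dtype=float)
    distances = np.linalg.norm(pred - gt, axis=-1)
    correct = distances <= alpha * head_length
    if visible is not None:
        correct = correct[np.asarray(visible, dtype=bool)]
    return float(correct.mean())
```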

For person segmentation, person detection, and face detection, we reported the average recall across intersection over union (IoU) thresholds ranging [0.5, 0.95] with step size 0.05, to assess the average detection completeness of the models across multiple IoU thresholds.
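
As a simplified illustration, average recall can be computed from the best IoU achieved for each ground-truth instance. The sketch below ignores one-to-one matching between predictions and ground truths, which a full COCO-style evaluation enforces, so it should be read as an approximation; the function name is hypothetical.

```python
import numpy as np


def average_recall(best_ious, thresholds=None):
    """Mean recall over IoU thresholds 0.50, 0.55, ..., 0.95.

    best_ious[i] is the IoU of the best-matching prediction for
    ground-truth instance i (0 if nothing overlaps it).
    """
    if thresholds is None:
        thresholds = np.linspace(0.5, 0.95, 10)
    best = np.asarray(best_ious, dtype=float)
    # Recall at threshold t: share of ground truths matched with IoU >= t.
    recalls = [(best >= t).mean() for t in thresholds]
    return float(np.mean(recalls))
```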

For face segmentation, we reported the average F1 score (that is, the Sørensen–Dice coefficient94,95) across all segmentation mask categories, where F1 measures the intersection between the predicted and ground truth masks relative to their average size.
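A minimal sketch of the per-category F1 (Dice) score and its average over categories is given below. It assumes that masks are provided as flattened lists of integer category labels; this layout, the function names and the toy labels are illustrative choices, not the representation used in the study.

```python
def dice(pred, truth, label):
    """Dice coefficient for one category: 2 * |P ∩ T| / (|P| + |T|)."""
    p = sum(1 for x in pred if x == label)
    t = sum(1 for x in truth if x == label)
    inter = sum(1 for a, b in zip(pred, truth) if a == b == label)
    return 2 * inter / (p + t) if (p + t) else float("nan")

def mean_dice(pred, truth, labels):
    """Average Dice over categories, skipping categories absent from both masks."""
    scores = [dice(pred, truth, c) for c in labels]
    valid = [s for s in scores if s == s]  # drops NaN entries
    return sum(valid) / len(valid)

# Toy example: six pixels, three categories (0 = background, 1 = skin, 2 = eyes).
pred_mask = [0, 0, 1, 1, 2, 2]
true_mask = [0, 1, 1, 1, 2, 0]
avg_f1 = mean_dice(pred_mask, true_mask, labels=[0, 1, 2])
```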

For face verification, we sampled image pairs of the same person (positive) and different people (negative) within each demographic subgroup. For each subgroup, we reported the true acceptance rate (TAR) at a false acceptance rate (FAR) of 0.001. TAR@FAR = 0.001 measures the proportion of correctly accepted positive pairs when the classification threshold is set to allow only 0.1% of negative pairs to be incorrectly accepted.
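The relationship between the threshold and the two rates can be sketched as follows. The sketch assumes that higher scores mean greater similarity and that a pair is accepted when its score is strictly above the threshold; the function name and the synthetic scores are assumptions for this example.

```python
def tar_at_far(pos_scores, neg_scores, far=0.001):
    """Return (TAR, threshold) with at most a fraction `far` of negatives accepted."""
    neg_sorted = sorted(neg_scores, reverse=True)
    # Number of negative pairs that may be (incorrectly) accepted.
    k = int(far * len(neg_sorted) + 1e-9)
    # Accepting only scores strictly above the (k+1)-th highest negative score
    # lets through at most k negative pairs.
    threshold = neg_sorted[k]
    accepted = sum(s > threshold for s in pos_scores)
    return accepted / len(pos_scores), threshold

# Synthetic scores: 1,000 negatives, so exactly one may be accepted at FAR = 0.001.
neg = [i / 1000 for i in range(1000)]
pos = [0.9985, 0.9999, 0.7, 0.9981]
tar, thr = tar_at_far(pos, neg, far=0.001)
```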

For face reconstruction and face super-resolution, we reported learned perceptual image patch similarity132, which evaluates the perceived visual similarity between reference image Iref and generated image Igen by comparing their feature representations extracted by a pretrained VGG16133 model.

For face reconstruction, we also assessed perceptual quality using peak signal-to-noise ratio and measured identity preservation using cosine similarity between facial embeddings of Iref and Igen extracted by a CurricularFace model61.
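The two simpler quantities above can be sketched directly. The example assumes 8-bit pixel intensities (maximum value 255) stored as flat lists and generic embedding vectors; in practice the embeddings would come from the face-recognition model, which is not reproduced here. Learned perceptual image patch similarity is omitted because it requires a pretrained network.

```python
import math

def psnr(ref, gen, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized pixel lists."""
    mse = sum((r - g) ** 2 for r, g in zip(ref, gen)) / len(ref)
    return float("inf") if mse == 0 else 10 * math.log10(max_value ** 2 / mse)

def cosine_similarity(u, v):
    """Cosine of the angle between two embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.hypot(*u) * math.hypot(*v))

# Toy pixel values and toy two-dimensional "embeddings" (hypothetical).
ref_pixels = [0, 128, 255, 64]
gen_pixels = [0, 130, 253, 64]
quality_db = psnr(ref_pixels, gen_pixels)               # MSE = 2
identity_sim = cosine_similarity([1.0, 0.0], [1.0, 1.0])
```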

Dataset diversity

To compare FHIBE’s visual diversity with other datasets, we used the Vendi Score134,135, which quantifies diversity using a similarity function.

To construct the similarity matrix K, we first extracted image features (embeddings) using the self-supervised SEER136 model, which exhibits strong expressive power for vision tasks. We then constructed K by computing the cosine similarity between every feature pair. For extracting feature embeddings with SEER, all images are pre-processed using the ImageNet protocol: rescaling to 224 × 224 and applying z-score normalization using the ImageNet per-channel mean and s.d.
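As a rough sketch, the Vendi Score can be computed from a feature matrix as the exponential of the Shannon entropy of the eigenvalues of K/n, where n is the number of images. The toy feature vectors below stand in for SEER embeddings, which are not computed here, and the function name is an assumption for this example.

```python
import numpy as np

def vendi_score(features):
    """Vendi Score from a cosine-similarity kernel over row feature vectors."""
    X = np.asarray(features, dtype=float)
    X = X / np.linalg.norm(X, axis=1, keepdims=True)  # unit-normalize rows
    K = X @ X.T                                        # cosine similarity matrix
    eigvals = np.linalg.eigvalsh(K / len(X))           # eigenvalues sum to 1
    eigvals = eigvals[eigvals > 1e-12]                 # treat 0 * log(0) as 0
    return float(np.exp(-np.sum(eigvals * np.log(eigvals))))

# Three mutually orthogonal features behave like three distinct items,
# whereas three identical features behave like a single item.
diverse = vendi_score([[1, 0, 0], [0, 1, 0], [0, 0, 1]])
redundant = vendi_score([[1, 2], [1, 2], [1, 2]])
```

Under this definition the score can be read as an effective number of distinct items, ranging from 1 (all images identical in feature space) to n (all images mutually dissimilar).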

Bias discovery in narrow models

We tested and compared FHIBE’s capabilities for bias diagnosis using a variety of methods.

Benchmarking analysis

For this analysis, we evaluated FHIBE on seven downstream computer vision tasks: pose estimation, person segmentation, person detection, face detection, face parsing, face reconstruction and face super-resolution. Face verification was excluded owing to the inability to compute per-image scores for that task. For each task and its respective models, we obtained a performance score for each image and subject, enabling us to conduct a post hoc analysis to explore the relationship between labelled attributes and performance.

For every task and model, we performed the following analyses. For each annotation attribute (for example, hair colour), we first isolated individual attribute groups (for example, blond, red, white). For each group, we compiled a set of performance scores (for example, scores for all subjects with blond hair, red hair or white hair). Only groups with at least ten subjects were considered in the analysis. We next performed pairwise comparisons (for example, blond versus red, blond versus white) using the Mann–Whitney U-test to determine whether the groups had similar median performance scores (null hypothesis, two-tailed). To control for multiple comparisons, we applied the Bonferroni correction58 by adjusting the significance threshold based on the number of pairwise tests. For pairs with a statistically significant difference (\(P < \frac{0.05}{{\rm{number}}\,{\rm{of}}\,{\rm{pairwise}}\,{\rm{tests}}}\)), we identified the groups with the lowest and highest median scores as the worst group and best group, respectively, and computed the min–max group disparity, D, between them:

$$D=1-\frac{{\rm{MED}}({\rm{worst}}\,{\rm{group}})}{{\rm{MED}}({\rm{best}}\,{\rm{group}})},\,\,D\in [0,1],$$

where MED(g) denotes the median performance score for group g. A value D → 0 indicates minimal disparity, while D → 1 indicates maximal disparity. We repeated this process for each attribute, identifying group pairs with statistically significant disparities and their corresponding values. For each attribute, we selected the pair with the highest disparity.
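The procedure for a single attribute can be sketched as below, using the Mann–Whitney U-test from SciPy, a Bonferroni-adjusted threshold and the disparity D defined above. The function name, the group labels and the synthetic scores are assumptions for this example, and the sketch assumes that the best group has a non-zero median.

```python
from itertools import combinations
from statistics import median
from scipy.stats import mannwhitneyu

def max_group_disparity(scores_by_group, alpha=0.05, min_size=10):
    """Return (D, worst_group, best_group) for the most disparate significant pair."""
    groups = {g: s for g, s in scores_by_group.items() if len(s) >= min_size}
    pairs = list(combinations(sorted(groups), 2))
    if not pairs:
        return None
    threshold = alpha / len(pairs)  # Bonferroni-adjusted significance threshold
    result = None
    for a, b in pairs:
        p = mannwhitneyu(groups[a], groups[b], alternative="two-sided").pvalue
        if p >= threshold:
            continue
        worst_g, best_g = sorted((a, b), key=lambda g: median(groups[g]))
        d = 1 - median(groups[worst_g]) / median(groups[best_g])
        if result is None or d > result[0]:
            result = (d, worst_g, best_g)
    return result

# Synthetic per-subject scores; "grey" has fewer than ten subjects and is dropped.
scores = {
    "blond": [0.90 + 0.001 * i for i in range(12)],
    "red": [0.60 + 0.001 * i for i in range(12)],
    "white": [0.75 + 0.001 * i for i in range(12)],
    "grey": [0.10, 0.20, 0.30],
}
disparity = max_group_disparity(scores)
```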

Direct error modelling

Using this approach, we aimed to examine which features were associated with reduced model performance using regression analysis. Although regression analysis is widely used to identify underlying relationships within datasets, its application to image data has traditionally been limited by the lack of extensive structured annotations. The comprehensive scope and detail of the FHIBE annotations enabled us to apply this method effectively and obtain meaningful results. For each task and model, the target variable was the model’s performance on individual images. The predictor variables were a range of annotations that we collected, processed and extracted for both images and subjects, including features derived from pixel-level annotations, such as the number of visible keypoints or the presence or absence of visible head hair (the latter categorized as the binary attribute ‘bald’). We used decision trees and random forests (ensembles of decision trees) owing to their interpretability, modelling power and low variance, using the implementations available in the scikit-learn v.1.5.1 library. Feature importance was obtained from the random forest model by assessing how much each variable (for example, body pose) contributed to reducing variance when constructing the decision trees, helping to identify the most predictive features. We then identified the most important features (top six in most experiments) using the elbow method137. These selected features were then used in a decision tree model to assess the direction of their contribution to the prediction, that is, whether higher feature values are associated with better or worse model performance. To assess the robustness and statistical significance of observed differences across subgroups, we conducted bootstrap resampling with 5,000 iterations to estimate standard errors. This approach enabled us to evaluate differences across groups even within smaller intersectional subgroups.
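The following sketch illustrates the general workflow with scikit-learn on synthetic data: a random forest provides impurity-based feature importances, a shallow decision tree indicates the direction of the top feature, and a bootstrap with 5,000 resamples estimates the standard error of a subgroup mean. The feature names, the synthetic data and all hyperparameters are assumptions for this example, and the elbow-based feature selection is not reproduced.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.tree import DecisionTreeRegressor

rng = np.random.default_rng(0)
n = 500
feature_names = ["visible_keypoints", "bald", "camera_distance"]  # hypothetical
X = np.column_stack([
    rng.integers(0, 18, n),     # number of visible keypoints
    rng.integers(0, 2, n),      # binary attribute
    rng.uniform(0.5, 10.0, n),  # unrelated nuisance feature
])
# Synthetic per-image score that depends only on the first feature.
y = 0.04 * X[:, 0] + rng.normal(0, 0.02, n)

# Impurity-based importances from the random forest.
forest = RandomForestRegressor(n_estimators=100, random_state=0).fit(X, y)
importances = dict(zip(feature_names, forest.feature_importances_))
top_feature = max(importances, key=importances.get)

# A depth-1 tree on the top feature shows the direction of its effect.
tree = DecisionTreeRegressor(max_depth=1, random_state=0).fit(X[:, [0]], y)
low, high = tree.predict([[0.0], [17.0]])
direction = "higher is better" if high > low else "higher is worse"

def bootstrap_se(values, n_iter=5000, seed=0):
    """Bootstrap standard error of the mean of `values`."""
    boot_rng = np.random.default_rng(seed)
    values = np.asarray(values, dtype=float)
    idx = boot_rng.integers(0, len(values), size=(n_iter, len(values)))
    return float(values[idx].mean(axis=1).std(ddof=1))

se_bald = bootstrap_se(y[X[:, 1] == 1])
```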

Error pattern recognition

We used association rule mining, a method frequently used in data mining to identify relationships between variables within a dataset. We applied association rule mining to identify attribute values that frequently co-occur with low performance. This approach enabled us to systematically identify and analyse patterns of bias within the model’s outputs. We used the FP-growth algorithm138. After obtaining the frequently occurring rules, we identified the attributes that are potential modes of error and investigated them further. We did this by studying the error disparities across the unique values of the attribute and evaluating its effect in conjunction with the sensitive attributes.
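To make the notion of a rule concrete, the sketch below enumerates small attribute-value combinations by brute force and keeps those that frequently co-occur with low performance, using support and confidence thresholds. It is not an implementation of FP-growth, which finds the same frequent itemsets more efficiently; the attribute values, thresholds and function name are assumptions for this example.

```python
from collections import Counter
from itertools import combinations

def low_performance_rules(records, min_support=0.2, min_confidence=0.6, max_len=2):
    """Rules of the form {attribute values} -> low performance.

    records: list of (set_of_attribute_values, is_low_performance) tuples.
    Returns (itemset, support, confidence) tuples, best rules first.
    """
    n = len(records)
    itemset_counts, joint_counts = Counter(), Counter()
    for items, low in records:
        for k in range(1, max_len + 1):
            for combo in combinations(sorted(items), k):
                itemset_counts[combo] += 1
                if low:
                    joint_counts[combo] += 1
    rules = []
    for combo, joint in joint_counts.items():
        support = joint / n                         # P(itemset and low)
        confidence = joint / itemset_counts[combo]  # P(low | itemset)
        if support >= min_support and confidence >= min_confidence:
            rules.append((combo, support, confidence))
    return sorted(rules, key=lambda r: (-r[2], -r[1], r[0]))

# Toy records with hypothetical attribute values.
records = [
    ({"lighting=dim", "scene=indoor"}, True),
    ({"lighting=dim", "scene=outdoor"}, True),
    ({"lighting=bright", "scene=indoor"}, False),
    ({"lighting=bright", "scene=outdoor"}, False),
    ({"lighting=dim", "scene=indoor"}, True),
    ({"lighting=bright", "scene=indoor"}, False),
]
rules = low_performance_rules(records)
```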

For face verification, we modified the protocol described above in the ‘Narrow model evaluation metrics’ section. Given that we wanted to look at the whole dataset, unconstrained to specific attributes, positive and negative pairs were computed using all face images from the FHIBE face dataset. All possible positive pairs were computed (15,474 pairs), while negative pairs were sampled under the constraint described previously139 to extract hard pairs: the gallery and probe images had the same pronoun, and their skin colour differed by no more than one of the six possible levels, yielding 4,945,896 pairs.
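The pairing constraint can be sketched as follows. The record fields (`image_id`, `subject_id`, `pronoun`, `skin_tone`) and the toy records are hypothetical names chosen for this example, with skin colour encoded as an integer level from 1 to 6.

```python
from itertools import combinations

def make_pairs(faces):
    """Split all image pairs into positives and hard negatives."""
    positives, hard_negatives = [], []
    for a, b in combinations(faces, 2):
        pair = (a["image_id"], b["image_id"])
        if a["subject_id"] == b["subject_id"]:
            positives.append(pair)  # same person
        elif (a["pronoun"] == b["pronoun"]
              and abs(a["skin_tone"] - b["skin_tone"]) <= 1):
            hard_negatives.append(pair)  # different people, similar attributes
    return positives, hard_negatives

faces = [
    {"image_id": "img0", "subject_id": "s1", "pronoun": "she", "skin_tone": 2},
    {"image_id": "img1", "subject_id": "s1", "pronoun": "she", "skin_tone": 2},
    {"image_id": "img2", "subject_id": "s2", "pronoun": "she", "skin_tone": 3},
    {"image_id": "img3", "subject_id": "s3", "pronoun": "he", "skin_tone": 3},
    {"image_id": "img4", "subject_id": "s4", "pronoun": "she", "skin_tone": 5},
]
positive_pairs, hard_negative_pairs = make_pairs(faces)
```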

Bias discovery in foundation models

Our analysis focuses on two foundation models: CLIP and BLIP-2. CLIP74 is a highly influential vision-language model that is widely recognized for its applications in zero-shot classification and image search. BLIP-275 advances vision–language alignment by using a captioning and filtering mechanism to refine noisy web-scraped training data, thereby enhancing performance in image captioning, VQA and instruction following.

CLIP

We used the official OpenAI CLIP model74. We analysed CLIP in an open-vocabulary zero-shot setting to examine the model’s biases towards different attributes, such as demographic attributes or image concepts (for example, scene). For each value of the given attribute, we presented four distinct text prompts. These prompts were intentionally varied in wording to reduce potential bias or sensitivity to specific phrasing. The prompts were standardized, clear and consistent across various values to minimize the influence of prompt engineering (the set of prompts is provided in Supplementary Information H). We further encoded FHIBE images using the CLIP image encoder. For pre-processing, we used the same pre-processing function as the official implementation. We analysed different variants of the FHIBE dataset to control for various effects related to the human subject and image background. These variants included the original images, images with individuals masked in black, images with individuals blurred with Gaussian noise of radius 100 and images with the background blacked out.

For the zero-shot classification analysis, we calculated the cosine similarity between the image embeddings and the text embeddings for each attribute. For example, for the scene attribute, we used two sets of prompts, each consisting of four text descriptions for indoor and outdoor environments. We computed the similarity between each text description and the image, selecting the description with the highest similarity as the assigned label for the image.
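The label assignment step can be sketched with NumPy as below. The two-dimensional vectors are toy stand-ins for CLIP image and text embeddings, which are not computed here, and the function names are assumptions for this example.

```python
import numpy as np

def normalize(x):
    """Scale vectors to unit length along the last axis."""
    x = np.asarray(x, dtype=float)
    return x / np.linalg.norm(x, axis=-1, keepdims=True)

def zero_shot_label(image_emb, prompt_embs, prompt_labels):
    """Assign the label of the prompt most similar to the image."""
    sims = normalize(prompt_embs) @ normalize(image_emb)  # cosine similarities
    best = int(np.argmax(sims))
    return prompt_labels[best], sims

# Four toy prompt embeddings per value of the scene attribute.
prompt_labels = ["indoor"] * 4 + ["outdoor"] * 4
prompt_embs = np.array([
    [1.0, 0.1], [0.9, 0.2], [1.0, 0.0], [0.8, 0.1],  # indoor prompts
    [0.1, 1.0], [0.2, 0.9], [0.0, 1.0], [0.1, 0.8],  # outdoor prompts
])
image_emb = np.array([0.2, 0.9])
label, sims = zero_shot_label(image_emb, prompt_embs, prompt_labels)
```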

BLIP-2

The analysis protocol for the BLIP-275 model consisted of specifying a prompt pair composed of an image and a text string in the form of a request or a question (the prompts are provided in Supplementary Information H). In our experiments, we instantiated the model to perform VQA tasks. We used the HuggingFace open-source BLIP-2 implementation (Salesforce/blip2-opt-2.7b) for all our bias analysis experiments. We defined a set of candidate prompts to probe the model along with images drawn from the FHIBE dataset. Each candidate prompt was used to probe the model, and the final set of prompts was chosen to maximize the model response rate (that is, the prompts for which the model provided the most non-empty responses). The full set of prompts, their corresponding model response rates and the chosen prompts are provided in Supplementary Information H.

Each prompt from this chosen set was paired with every image from the FHIBE dataset and the pair was used to prompt the BLIP-2 model. Depending on the type of prompt, the model response was filtered to extract the relevant information. The filtering mechanism varied according to the prompt tone.

The positive prompts asked the model to determine the reason for likeability, while the neutral prompts asked the model for the person’s occupation based only on the provided image input. The model responses took forms such as ‘(S)he is likable because (s)he is a good person’ or ‘(S)he’s a teacher’. To filter such responses, we used regular expressions to isolate the predicted gender pronoun and the predicted occupation or likeability reason from the model response.
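A minimal sketch of this kind of filtering is shown below. The patterns are illustrative assumptions that cover only the two response forms quoted above; they are not the expressions used in the study, and real model output would probably need additional cases.

```python
import re

PRONOUN = r"(?P<pronoun>she|he)"
# Matches, for example, "She's a teacher." or "He is an engineer".
OCCUPATION_RE = re.compile(
    rf"^\s*{PRONOUN}(?:'s|’s| is) (?:an? )?(?P<answer>[^.]+)", re.IGNORECASE
)
# Matches, for example, "He is likable because he is a good person".
LIKABILITY_RE = re.compile(
    rf"^\s*{PRONOUN} is like?able because (?P<answer>[^.]+)", re.IGNORECASE
)

def parse_response(text, kind):
    """Return (pronoun, answer) for a response, or None if it does not match.

    kind: "occupation" or "likability", chosen according to the prompt type.
    """
    pattern = OCCUPATION_RE if kind == "occupation" else LIKABILITY_RE
    match = pattern.match(text)
    if match is None:
        return None
    return match.group("pronoun").lower(), match.group("answer").strip()

occupation = parse_response("She's a teacher.", "occupation")
reason = parse_response("He is likable because he is a good person", "likability")
```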

When prompted with the negatively toned prompt about convictions, the model produced responses that included toxic and discriminatory language. A response was labelled toxic if it contained any of the words in our keyword set (provided in Supplementary Information H).
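The keyword-based labelling can be sketched as follows. The keyword set here is a neutral placeholder and not the set listed in the Supplementary Information, and matching on whole, lower-cased words is an assumption made for this example.

```python
import re

# Placeholder keywords for illustration only (not the study's keyword set).
PLACEHOLDER_KEYWORDS = {"foo", "bar"}

def is_toxic(response, keywords=PLACEHOLDER_KEYWORDS):
    """True if any keyword appears as a whole word in the response."""
    tokens = set(re.findall(r"[a-z']+", response.lower()))
    return any(k.lower() in tokens for k in keywords)

flagged = is_toxic("He is a Foo.")
not_flagged = is_toxic("The food is fine.")  # "food" is not the word "foo"
```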

For this analysis, we prompted BLIP-2 with questions about individuals’ social attributes, but we do not condone the use of these tasks outside of bias detection. Predicting social attributes from facial images has long been a popular but problematic task in computer vision. For example, the ChaLearn First Impressions Challenge140 tasked participants with predicting personality traits like warmth and trustworthiness from videos or images. Deep learning models have been used to map facial features to social judgements141,142. With the rise of foundation models, such uses have also emerged for VQA models, which have been used to predict the personality traits of individuals from a single image143.

Such tasks are highly problematic due to their reliance on physiognomic beliefs that personality traits or social attributes can be inferred from appearance alone144. We use such tasks in our paper solely to identify biases in the model, not to use the model’s inferences themselves. While VQA models should in theory refuse to answer such questions, BLIP-2 generally did answer them, with its answers revealing learned societal biases. Building on recent efforts to identify biases in VQA models using targeted questions145,146,147 (for example, “Does this person like algebra?” and “Is this person peaceful or violent?”), our work shows how FHIBE can reveal biases in foundation models and cautions against the flawed assumptions they may promote.

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.