Anatomical data

EM reconstruction

All reconstructions in this manuscript are from a serial-section transmission EM volume of a female D. melanogaster FAFB6. Following established practices59, we manually reconstructed neuron skeletons in the CATMAID environment60 (in which 27 laboratories were collaboratively building connectomes for specific circuits, mostly outside of the optic lobe). We also used two auto-segmentations of the same dataset, FAFB-FFN161 and FlyWire62, to quickly examine many auto-segmented fragments for neurons of interest. Once a fragment of interest was found, it was imported to CATMAID, followed by manual tracing and identity confirmation.

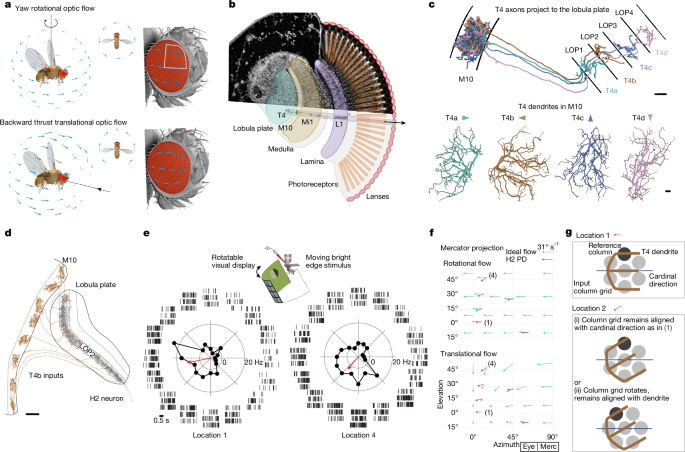

For the data reported here, we identified and reconstructed a total of 780 Mi1, 38 T4a, 176 T4b, 22 T4c, 114 T4d and 63 TmY5a cells and one H2 cell. All the columnar neurons could be reliably matched to well-established morphology from Golgi-stained neurons13. This reconstruction is based on approximately 1.35 million manually placed nodes. (1) Mi1: we traced the main branches of the M5 and M9–M10 arbors such that the centres of mass of the arbors formed a visually identifiable grid. We used the auto-segmentation to accelerate the search for Mi1 cells wherever a point appeared to be missing from the grid. After an extensive search, we believed that we had found all of the Mi1 cells in the right optic lobe (Fig. 2c,d). One Mi1 near the neuropil boundary was omitted from later analysis because, despite its complete arbor morphology, its centre of mass was clearly ‘off the grid’ established by neighbouring Mi1 cells. (2) T4: we traced their axon terminals in the lobula plate for type identification (each type innervates a specific depth in the lobula plate19) and manually reconstructed their complete dendritic morphology to determine their anatomical PD. To sample T4 morphology across the whole eye with a reasonable amount of time and effort, we focused on the T4b (Fig. 2e) and T4d (Extended Data Fig. 2g) types, reconstructing them at sufficient density to interpolate the PDs at each column position. In addition, we chose four locations on the eye, medial (M), anterior dorsal (AD), anterior ventral (AV) and lateral ventral (LV), where we reconstructed three to four sets of T4 cells and confirmed that the PDs were mostly anti-parallel between T4a and T4b, as well as between T4c and T4d (Extended Data Fig. 6c,d). (3) TmY5a: we searched for cells along the equator and central meridian of the medulla and traced their main branches so that we could extend (with further interpolation) the columnar structure of the medulla to the lobula (Extended Data Fig. 2f). (4) H2: the neuron was found during a survey23 of the LPTCs in the right side of the FAFB brain and was completely reconstructed, including all fine branches in the lobula plate (Fig. 1d and Extended Data Fig. 1b).

In addition, we identified several lamina monopolar cells and photoreceptor cells. (5) Lamina cells (mainly L1, L2 and L3) and outer photoreceptor cells (R1–R6) were reconstructed, often with some use of auto-segmented data, to the extent needed to identify them. This helped us to locate the equatorial columns in the medulla, whose corresponding lamina cartridges receive different numbers of photoreceptor inputs (Fig. 2c and Extended Data Fig. 2b–d). (6) Inner photoreceptor cells R7 and R8: we searched for R7 and R8 cells throughout the eye, at first as part of a focused study on the targets of these photoreceptors56. We extended these reconstructions to complete the medulla map in Fig. 2. We searched for the R7 and R8 cells corresponding to each Mi1 cell near the boundary of the medulla, and Mi1 cells in columns lacking inner photoreceptors were identified and excluded from further analysis (Fig. 2c). Furthermore, we reconstructed several R7 and R8 cells near the central meridian and used the shape of their axons to determine the location of the chiasm (Extended Data Fig. 2e).

Generation and imaging of split-GAL4 driver lines

We used the split-GAL4 driver lines SS00809 (ref. 15) and SS01010 to drive reporter expression in Mi1 and H2 neurons, respectively. Driver lines and representative images of their expression patterns are available at https://splitgal4.janelia.org/. SS01010 (newly reported here; 32A11-p65ADZp in attP40; 81E05-ZpGdbd in attP2) was identified and constructed using previously described methods and hemidriver lines63,64. We used MCFO65 for multicolour stochastic labelling. Sample preparation and imaging, performed by the Janelia FlyLight Project Team, were as in previous studies64,65. Detailed protocols are available online (https://www.janelia.org/project-team/flylight/protocols under ‘IHC – MCFO’). The antibodies used were as follows: mouse nc82 (1:30; Developmental Studies Hybridoma Bank, nc82-s), rat anti-Flag (DYKDDDDK epitope tag) (1:200; Novus Biologicals, NBP1-06712), rabbit anti-HA tag (1:300; Cell Signaling Technology, 3724S), Cy2 goat anti-mouse (1:600; Jackson ImmunoResearch, 115-225-166), ATTO647N goat anti-rat (1:300; Rockland, 612-156-120) and AF594 donkey anti-rabbit (1:500; Jackson ImmunoResearch, 711-585-152). Images were acquired on Zeiss LSM 710 or 780 confocal microscopes with 63×/1.4 NA objectives at a voxel size of 0.19 × 0.19 × 0.38 μm³. The reoriented views in Extended Data Fig. 1b and Extended Data Fig. 2a were displayed using VVDviewer (https://github.com/JaneliaSciComp/VVDViewer). Preparing these views involved manual editing to exclude labelling outside of approximately medulla layers M9–M10 (Extended Data Fig. 2a) or to show only a single H2 neuron (Extended Data Fig. 1b).

Confocal imaging of a whole fly eye

Sample preparation

Flies of the following genotype, w;19F01-LexA(su(Hw)attP5)/ pJFRC22-10XUAS-IVS-myr::tdt(attP40); Rh3-Gal4/ pJFRC19-13XLexAop2-IVS-myr::GFP(attP2), were anaesthetized with CO2 and briefly washed with 70% ethanol. Heads were isolated and their proboscises removed in phosphate-buffered saline (PBS) containing 0.1% Triton X-100 (PBS-T) and 2% paraformaldehyde, and the heads were then fixed in this solution overnight at 4 °C. After washing with PBS-T, the heads were bisected along the midline with fine scissors and incubated in PBS with 1% Triton X-100, 3% normal goat serum, 0.5% dimethyl sulfoxide and escin (0.05 mg ml−1, Sigma-Aldrich, E1378) containing chicken anti-GFP (1:500; Abcam, ab13970), mouse anti-nc82 (1:50; Developmental Studies Hybridoma Bank) and rabbit anti-DsRed (1:1,000; Takara Bio, 632496) at room temperature with agitation for two days. After three washes (1 h each) in PBS-T, the samples were incubated for another 24 h in the same buffer containing secondary antibodies: Alexa Fluor 488 goat anti-chicken (1:1,000; Thermo Fisher Scientific, A11039), Alexa Fluor 633 goat anti-mouse (1:1,000; Thermo Fisher Scientific, A21050) and Alexa Fluor 568 goat anti-rabbit (1:1,000; Thermo Fisher Scientific, A11011). The samples were then washed four times (1 h each) in PBS with 1% Triton X-100 and post-fixed for 4 h in PBS-T with 2% paraformaldehyde. To avoid artefacts caused by osmotic shrinkage of soft tissue, samples were gradually dehydrated in glycerol (2–80%) and then ethanol (20–100%)66 and mounted in methyl salicylate (Sigma-Aldrich, M6752) for imaging.

Imaging and rendering

Serial optical sections were obtained at 1-µm intervals on a Zeiss LSM 710 confocal microscope with an LD-LCI 25×/0.8 NA objective, using 488-nm, 560-nm and 630-nm lasers to excite the Alexa Fluor 488, 568 and 633 fluorophores, respectively. The image in Extended Data Fig. 4a is a reoriented substack projection, processed in Imaris v.10.1 (Oxford Instruments), in which the red channel (560-nm laser) is not shown.

µCT imaging of whole fly heads

µCT is an X-ray imaging technique similar to medical CT scanning, but with much higher resolution that makes it more suitable for small samples67. A 3D data volume set is reconstructed from a series of 2D X-ray images of the physical sample at different angles. The advantage of this method for determining the ommatidia directions (Fig. 3) is that internal details of the eye, such as individual rhabdoms, distinguishable ‘tips’ of the photoreceptors at the boundary between the pseudocone and the neural retina68, and the chirality of the outer photoreceptors, can be resolved across the entire intact fly head with isotropic resolution, which is an essential requirement for preserving the geometry of the eye.

Sample preparation

On the basis of previously published fixation and staining protocols for a variety of biological models69, we undertook extensive testing of fixatives and stains, as well as of mounting and immobilizing steps for µCT scanning. The fixatives tested were Bouin’s fluid, alcoholic Bouin’s and 70% ethanol. We tested staining with phosphotungstic acid in water and in ethanol; phosphomolybdic acid in water and in ethanol; Lugol’s iodine solution; and 1% iodine metal dissolved in 100% ethanol. Various combinations of fixatives and stains, and variations in the duration of each step, were tried. Drying the samples using hexamethyldisilazane did not yield images with the resolution achievable with critical-point-dried samples69. Fixing and staining in ethanol-based solutions followed by critical-point drying produced good contrast with excellent reproducibility but unfortunately introduced substantial sample shrinkage. We eventually decided to omit the critical-point drying step and to scan the samples directly in an aqueous environment. Extra care was taken to immobilize the head to achieve the desired resolution.

Six- to seven-day-old female D. melanogaster flies were anaesthetized with CO2 and immersed in 70% ethanol. We kept the thorax and abdomen intact and glued to the head, and subsequently used them as an anchor to stabilize the head in an aqueous environment. We confirmed that no glue got on to the head region. The mouthparts and legs were removed to allow for fixative absorption. Samples were fixed in 70% ethanol at room temperature overnight in a 1.5-ml Eppendorf tube with rotation. The ethanol was then replaced with a staining solution of 0.5% phosphotungstic acid in 70% ethanol. Samples remained in the staining solution at room temperature for 7–14 days with rotation.

Imaging and reconstruction

The samples were scanned with a Zeiss Xradia Versa XRM 510 μCT scanner. The scanning was done at a voltage of 40 kV and current of 72 µA (power 2.9 W) at 20× magnification with 20-s exposures and a total of 1,601 projections. Images had a pixel size of around 1 µm with camera binning at 2 and reconstruction binning at 1. The Zeiss XRM reconstruction software was used to generate TIFF stacks of the tomographs. Image segmentation and annotation (lenses and photoreceptor tips) were done in Imaris v.10.1 (Oxford Instruments).

Whole-cell recordings of labelled H2 neurons

Electrophysiology

All of the flies used in electrophysiological recordings were from a single genotype: pJFRC28-10XUAS-IVS-GFP-p10 (ref. 70) in attP2 crossed to the H2 driver line SS01010 (see ‘Generation and imaging of split-GAL4 driver lines’). Flies were reared on a 16-h light–8-h dark cycle at 24 °C. For recordings, two- to three-day-old female D. melanogaster flies were anaesthetized on ice and glued to a custom-built PEEK platform with their heads tilted down, using a UV-cured glue (Loctite 3972) and a high-power UV-curing LED system (Thorlabs CS2010). To reduce brain motion, the two front legs were removed, the proboscis was folded and glued in its socket, and muscle 16 (ref. 71) was removed from between the antennae. The cuticle was removed from the posterior part of the head capsule using a hypodermic needle (BD PrecisionGlide 26 g × 1/2 in.) and fine forceps. Manual peeling of the perineural sheath with forceps appeared to compromise recording stability, so the sheath was instead removed using collagenase (following a previously described method72). To prevent contamination, the pipette holder was replaced after collagenase application.

The brain was continuously perfused with an extracellular saline containing 103 mM NaCl, 3 mM KCl, 1.5 mM CaCl2·2H2O, 4 mM MgCl2·6H2O, 1 mM NaH2PO4·H2O, 26 mM NaHCO3, 5 mM N-tris(hydroxymethyl)methyl-2-aminoethanesulfonic acid (TES), 10 mM glucose and 10 mM trehalose, with the osmolarity adjusted to 275 mOsm, and bubbled with carbogen throughout the experiment. Patch-clamp electrodes were pulled (Sutter P97), pressure polished (ALA CPM2) and filled with an intracellular saline containing 140 mM potassium aspartate, 10 mM HEPES, 1 mM EGTA, 1 mM KCl, 0.1 mM CaCl2, 4 mM MgATP, 0.5 mM NaGTP and 5 mM glutathione73. Alexa 594 hydrazide (250 μM) was added to the intracellular saline before each experiment; the final solution had an osmolarity of 265 mOsm and a pH of 7.3.

Recordings were obtained using a Sutter SOM microscope with a 60× water-immersion objective (Nikon CFI APO NIR 60×, 1.0 NA, 2.8-mm WD). Contrast was generated using oblique illumination from an 850-nm LED connected to a light guide positioned behind the fly’s head. Images were acquired using μManager74 to allow for automatic contrast adjustment. All recordings were obtained from the left side of the brain. To block visual input from the contralateral side, the right eye was painted with miniature paint (MSP Bones grey primer followed by dragon black). Current-clamp recordings were sampled at 20 kHz and low-pass filtered at 10 kHz using an Axon MultiClamp 700B amplifier, a National Instruments PCIe-7842R LX50 Multifunction RIO board and custom LabVIEW (2013 v.13.0.1f2; National Instruments) and MATLAB (MathWorks) software.

Visual stimuli

The display used to present visual stimuli to the fly during H2 recordings was a G4 LED arena75 configured with a manual rotation axis. The arena covered 240° in azimuth (two-thirds of a full cylinder) and around 50° in elevation of the fly’s visual field, with each pixel subtending about 1.25° on the fly eye. Given the constraints of the mounting platform, the microscope objective and the access needed for visually guided electrophysiology, it is not possible to deliver visual stimuli to the fly’s complete field of view. To access the cell body of H2, the head must be pitched downwards. In this configuration, the frontal and dorsal regions are the most natural eye regions to stimulate. To mitigate stimulus distortion caused by the cylindrical arena (and thus better approximate a spherical display), we rotated the arena (by 30°) once during each recording to present stimuli in the equatorial and more dorsal parts of the fly’s visual field. In Extended Data Fig. 1d, positions 1, 2, 6, 7 and 9 were presented at one arena rotation angle, and positions 3, 4, 5 and 8 at the second.

Because it was most important to examine variation in local PDs along the elevation in the frontal part of the visual space (see the ideal flow fields in Fig. 1f), we oriented the fly to have the largest visible extent in this region. Visual stimuli were generated using custom-written MATLAB code. We performed two sets of experiments (five flies in set 1 and seven flies in set 2) using the following stimulus protocols.

Experiment set 1

1. Moving grating: square wave gratings with a constant spatial frequency (7 pixels ON, 7 pixels OFF) moving at 1.78 Hz (40-ms steps) were presented in an approximately 26° (21 pixels in diameter) circular window over an intermediate-intensity background. Gratings were presented for three full cycles (1.68 s) with three repetitions at 16 orientations.

2. Moving bars: bright and dark moving bars were presented in both preferred and non-preferred directions for H2 cells (back to front and front to back, respectively). The H2 responses to these trials are not shown.
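The grating and edge parameters in both experiment sets are tied together by simple arithmetic. The short Python sketch below assumes that one LED pixel subtends 1.25° and that the pattern advances by one pixel per 40-ms step, and derives the window size, spatial period, temporal frequency, stimulus duration and edge speed from the pixel counts.

```python
# Assumptions: one LED pixel subtends ~1.25 deg and the pattern advances by one
# pixel per 40-ms step (consistent with the values stated in the text).
PIXEL_DEG = 1.25
STEP_S = 0.040


def grating_params(on_px, off_px, n_cycles):
    """Spatial period (deg), temporal frequency (Hz), duration (s) and speed (deg/s)."""
    period_px = on_px + off_px              # one ON bar plus one OFF bar
    spatial_period_deg = period_px * PIXEL_DEG
    cycle_s = period_px * STEP_S            # steps needed to shift by one full period
    return spatial_period_deg, 1.0 / cycle_s, n_cycles * cycle_s, PIXEL_DEG / STEP_S


window_deg = 21 * PIXEL_DEG                 # 21-pixel circular window, ~26 deg
set1 = grating_params(7, 7, n_cycles=3)     # experiment set 1
set2 = grating_params(7, 7, n_cycles=5)     # experiment set 2
print(window_deg, set1, set2)
```

The same per-step speed (pixel size divided by step duration) applies to the moving edges, because they also advance in 40-ms steps.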

Experiment set 2

1. Moving grating: same as above, except that gratings were presented for five full cycles (2.8 s) with three repetitions at eight orientations.

2. Moving edges: bright and dark moving edges were presented in the same circular window as above on an intermediate-intensity background. Edges moving at 40-ms steps (around 31° s−1) were presented in 16 orientations to accurately measure the local PD of each cell. Stimuli were presented with three repetitions for each condition.

3. Moving bars: bright and dark moving bars were presented in both preferred and non-preferred directions for H2 cells (back to front and front to back, respectively). The H2 responses to these trials are not shown.

Figure 1e shows the response of an example H2 cell (cell 2 in Extended Data Fig. 1d, from experiment set 2) to bright moving edges, and the red arrows in Figs. 1f and 4c and Extended Data Figs. 6a and 7g represent responses averaged over all seven H2 cells in experiment set 2, for both bright and dark moving edges. The responses of cell 7 at locations 3, 4 and 5 were excluded because the quality of the recording had declined.

Extended Data Fig. 1c shows the responses of recorded H2 cell 2 in experiment set 2. The grating responses (bottom) show the first 2 s of the response after stimulus onset.

Extended Data Fig. 1d plots the responses of individual cells. The bright and dark edge responses are from the seven recorded cells in experiment set 2. The grating responses at locations 2–6 are from the seven flies in experiment set 2, those at locations 7–9 are from the five flies in experiment set 1, and those at location 1 include flies from both sets.

Extended Data Fig. 1e compares the responses of the seven recorded cells in experiment set 2, which are further detailed in Supplementary Data 1.

The local PD of each H2 cell was determined from its responses to the 16 directions, averaged across the bright and dark moving-edge stimuli. Spikes were extracted from the recorded data, summed per trial and then averaged across repeated presentations. The polar plots (Fig. 1e) show these averages (relative to the baseline firing rate), and the red arrows in Fig. 1e,f show the vector sum over all 16 directions. The subthreshold responses of H2 can also be used to determine the local PD of the neuron, and they agree well with the directions based on the neuron’s spiking responses (not shown).
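The vector-sum step can be sketched as follows. This is a minimal Python illustration, not the analysis code used here: it assumes evenly spaced stimulus directions, subtracts an assumed baseline count and uses a synthetic cosine-tuned response in place of recorded spikes.

```python
import numpy as np


def local_pd(directions_deg, spike_counts, baseline):
    """Vector-sum local PD from spike counts to evenly spaced stimulus directions.

    directions_deg : (n,) stimulus directions in degrees (16 in the text)
    spike_counts   : (n_reps, n) spikes summed per trial
    baseline       : expected count in the same window without a stimulus (assumed)
    Returns the PD angle in degrees [0, 360) and the vector-sum amplitude.
    """
    theta = np.deg2rad(np.asarray(directions_deg, dtype=float))
    resp = np.asarray(spike_counts, dtype=float).mean(axis=0) - baseline
    vx, vy = np.sum(resp * np.cos(theta)), np.sum(resp * np.sin(theta))
    return np.rad2deg(np.arctan2(vy, vx)) % 360.0, np.hypot(vx, vy)


# Synthetic cosine-tuned cell preferring 45 deg, three repetitions.
dirs = np.arange(16) * 22.5
counts = np.tile(10 + 8 * np.cos(np.deg2rad(dirs - 45.0)), (3, 1))
angle, amp = local_pd(dirs, counts, baseline=10)
```

Because the directions sample the circle uniformly, any direction-independent offset cancels in the vector sum; subtracting the baseline matters for the polar plots more than for the PD angle.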

Determining head orientation

A camera (Point Grey Flea3 with an 8X CompactTL telecentric lens with in-line illumination, Edmund Optics) was aligned to a platform holder using a custom-made target. This allowed us to adjust the camera and platform holder such that when the holder is centred in the camera’s view, both yaw and roll angles are zero. Next, after the fly was glued to the platform, but before the dissection, images were taken from the front to check for the yaw and roll angles of head orientation. If the deviation of the head away from a ‘straight ahead’ orientation was more than 2°, then that fly was discarded. Finally, to measure pitch angle, the holder was rotated ±90°, and images of the fly’s eye were taken on both sides. Head orientation was then measured as previously described76. We found that flies were consistently positioned with very similar orientations, such that we could combine the data across flies to produce the summary local PD plots for H2 recordings (Fig. 1f and Extended Data Fig. 1d).

Determining the stimuli in the compound eye reference frame

The positions and directions of the visual stimuli are programmed in the LED coordinates of the G4 display. We first transformed the LED coordinates to the lab coordinates using the dimension and rotation angle of the arena. The arena was set at two different rotation angles to maximize the coverage of the fly’s visual field. Then, using the head orientation measurement, we performed another transformation to the compound eye reference frame (Fig. 1f). These transformations map each stimulus into spherical coordinates in the fly eye reference frame, where the subsequent vector operations to determine the local PDs are performed.
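A minimal Python sketch of the coordinate chain (LED to arena, arena to lab, lab to eye), assuming a unit-radius cylinder with square pixels, an arena rotation about the lab y axis and a head pitch about the same axis. The axis conventions, rotation signs and function names are hypothetical choices for illustration; the actual transformations depend on the arena geometry and on the head orientation measurement.

```python
import numpy as np

PIXEL_DEG = 1.25  # assumed angular size of one LED pixel at the arena mid-height


def rot_y(deg):
    """Rotation about the lab y axis (assumed here to be the arena's rotation axis)."""
    a = np.deg2rad(deg)
    return np.array([[np.cos(a), 0.0, np.sin(a)],
                     [0.0, 1.0, 0.0],
                     [-np.sin(a), 0.0, np.cos(a)]])


def led_to_arena(col, row):
    """Unit vector from the arena centre to LED (col, row) on a unit-radius cylinder;
    (0, 0) is taken to be straight ahead (+x) at mid-height."""
    az = np.deg2rad(col * PIXEL_DEG)
    z = row * np.deg2rad(PIXEL_DEG)        # square pixels: height step equals arc step
    v = np.array([np.cos(az), np.sin(az), z])
    return v / np.linalg.norm(v)


def arena_to_eye(v, arena_tilt_deg, head_pitch_deg):
    """Arena -> lab (apply the arena rotation) -> eye (undo the measured head pitch)."""
    return rot_y(-head_pitch_deg) @ (rot_y(arena_tilt_deg) @ v)


def az_el_deg(v):
    """Spherical coordinates (azimuth, elevation) of a unit vector, in degrees."""
    return np.degrees(np.arctan2(v[1], v[0])), np.degrees(np.arcsin(np.clip(v[2], -1, 1)))
```

Stimulus directions can be mapped the same way by transforming the start and end points of each motion step and working with the resulting pair of unit vectors.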

Presenting stimuli on an idealized spherical display while recording from neurons in the fly brain is impractical, so it is important to account for the differences between our display and such an ideal one. This cylinder–sphere mismatch between the cylindrical LED arena and the spherical compound eye reference frame introduces both scale and angular distortions to the visual stimuli. The largest distortion should occur between positions with the largest elevation difference; for example, between positions 1 and 4. To visualize this distortion, we mapped eight (angularly) uniformly distributed, equal-length vectors (representing the motion travelled by, for example, a moving edge stimulus) to six stimulus locations on our display and plotted them (on a Mercator projection) as the fly would observe each (Extended Data Fig. 1f). First, the difference between positions 1 and 4 appears to be mainly an overall rotation. Because the local PD of H2 is calculated from the neuron’s spike rate and the stimulus’s moving direction (already accounting for this distortion), the crucial feature is that we sample all directions uniformly; an overall apparent rotation of the stimulus set therefore does not affect the result. Second, the apparent expansion of the vectors at positions 3, 4 and 5 is due to the Mercator projection (chosen for this visualization because it preserves angles); in fact, the vectors closer to the equator appear larger to the fly. Because we rotate the arena, the difference between positions 3 and 4 is comparable to that between positions 1 and 2, which is minimal. To characterize the variation in stimulus speed due to this geometric distortion, we computed the average stimulus amplitudes (Extended Data Fig. 1f) at two extreme positions: position 1 (12.7° on average) and position 4 (11.6° on average), which showed a change of around 7%. We did not correct the stimulus velocity because the velocity differences are small, and we selected our stimulus speed in a regime in which T4 and T5 neurons have broad speed tuning15,16.
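The scale distortion described above can be illustrated by measuring the angle that an equal-length displacement on the cylinder subtends at the eye. The Python sketch below assumes a unit-radius cylinder with square 1.25° pixels and ignores the arena rotation, so it shows only the qualitative trend (apparent amplitudes shrink away from the cylinder’s mid-height) and does not reproduce the values reported for positions 1 and 4.

```python
import numpy as np

PIXEL_DEG = 1.25  # assumed angular pixel size at the cylinder's mid-height


def cyl_dir(col, row):
    """Unit vector to (col, row) on a unit-radius cylinder with square pixels."""
    az = np.deg2rad(col * PIXEL_DEG)
    z = row * np.deg2rad(PIXEL_DEG)
    v = np.array([np.cos(az), np.sin(az), z])
    return v / np.linalg.norm(v)


def apparent_length_deg(row, dcol, drow):
    """Angle at the eye subtended by a (dcol, drow)-pixel displacement from (0, row)."""
    a, b = cyl_dir(0.0, row), cyl_dir(dcol, row + drow)
    return np.degrees(np.arctan2(np.linalg.norm(np.cross(a, b)), a @ b))


def mean_amplitude(row, n_dirs=8, length_px=10):
    """Mean apparent length of n_dirs equal-length displacements, evenly spaced in angle."""
    angles = 2 * np.pi * np.arange(n_dirs) / n_dirs
    return np.mean([apparent_length_deg(row, length_px * np.cos(t), length_px * np.sin(t))
                    for t in angles])


for row in (0, 10, 20):                 # higher rows lie further from mid-height
    print(row, round(mean_amplitude(row), 2))
```

Using atan2 of the cross and dot products, rather than arccos of the dot product, keeps small angles numerically accurate.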

Data analysis

Mapping medulla columns

We based our map of medulla columns on the principal columnar cell type Mi1, which is present once per column. Mi1 neurons resemble columns, with processes that do not spread far from the main ‘trunk’ of the neuron. They have a stereotypical pattern of arborization in medulla layers M1, M5 and M9–M10. For each Mi1 cell, we calculated the centres of mass of its arbors in both M5 and M10, and used them as column markers (Fig. 2b,c). The medulla columns do not form a perfectly regular grid: the column arrangement is squeezed along the anterior–posterior direction, and the dorsal and ventral portions shift towards the anterior. Nevertheless, we were able to map all column positions onto a regular grid by visual inspection (Fig. 2d). The grid was much clearer in the positions of the M5 column markers, which are more regular and were used as the basis for our grid assignment. For occasional ambiguous cases, we compared whole cells (across layers) within a neighbourhood to confirm our assignment. We then propagated the grid assignment to the M10 column markers and used these throughout the paper, because T4 cells receive inputs in layer M10.
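Computing the column markers amounts to averaging node positions within each layer. In this Python sketch, the per-node layer labels are a hypothetical input (how nodes are assigned to M5 or M10 is not specified here), and nodes are assumed to be roughly evenly spaced so that their mean approximates a centre of mass.

```python
import numpy as np


def column_markers(nodes_xyz, node_layer, layers=("M5", "M10")):
    """Centre of mass of an Mi1 arbor in each layer, used as column markers.

    nodes_xyz  : (n, 3) skeleton node coordinates (ideally resampled to even spacing)
    node_layer : (n,) per-node layer labels; the layer assignment itself (e.g. by depth
                 between layer boundaries) is a hypothetical input in this sketch
    """
    xyz = np.asarray(nodes_xyz, dtype=float)
    lab = np.asarray(node_layer)
    return {L: xyz[lab == L].mean(axis=0) for L in layers if np.any(lab == L)}
```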

Establishing a global reference that could be used to compare the medulla map (Fig. 2c) with the eye map (Fig. 3f) was essential, so we endeavoured to find the ‘equator’ of the eye in both the EM and the μCT datasets. Lamina cartridges in the equatorial region receive more outer photoreceptor inputs (seven or eight, compared with the usual six)11,77. We traced hundreds of lamina monopolar cells (L1 or L3 cells), including at least one providing input to each of around 100 Mi1 cells near the equatorial region, and counted the number of photoreceptor cells in each corresponding lamina cartridge (Extended Data Fig. 2b–d). This allowed us to locate the equatorial region of the medulla (Fig. 2c). The equator in μCT is identified by the chirality of the outer photoreceptors (Fig. 3d). We further identified the ‘central meridian, +v’ row, which is roughly the vertical midline. There is some ambiguity in defining +h as the equator in Fig. 2d, because there are four rows of ommatidia with eight photoreceptors (points in tan). We opted for one of the middle two rows that intersects with +v. We also identified the chiasm region on the basis of the twisting of R7 and R8 photoreceptor cells (Extended Data Fig. 2e), which very nearly aligned with the central meridian.

T4 PD

Strahler number (SN) was first developed in hydrology to define the hierarchy of tributaries of a river28, and has since been adapted to analyse the branching pattern of a tree graph (Fig. 2f). A neuron’s dendrite can be considered a tree graph. The smallest branches (the leaves of the tree) are assigned SN = 1. When two branches with SN = a and SN = b merge into a larger branch, the latter is assigned SN = max(a, b) if a ≠ b, or SN = a + 1 if a = b.
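The Strahler rule above can be computed for a skeleton stored as a parent table by a post-order traversal. This Python sketch assigns a number to every node; nodes along an unbranched stretch inherit the value of their single child. The handling of nodes with more than two children (maximum + 1 only if the maximum is shared) is a common generalization and an assumption here.

```python
from collections import defaultdict


def strahler_numbers(parent):
    """Strahler number of every node in a rooted tree.

    parent : dict mapping node -> parent node, with the root mapping to None
    """
    children = defaultdict(list)
    root = None
    for node, par in parent.items():
        if par is None:
            root = node
        else:
            children[par].append(node)

    sn = {}
    stack = [(root, False)]
    while stack:                                   # iterative post-order traversal
        node, visited = stack.pop()
        kids = children[node]
        if not kids:
            sn[node] = 1                           # leaf
        elif visited:                              # all children already numbered
            vals = sorted((sn[k] for k in kids), reverse=True)
            top = vals[0]
            sn[node] = top + 1 if len(vals) > 1 and vals[1] == top else top
        else:
            stack.append((node, True))
            stack.extend((k, False) for k in kids)
    return sn
```

For example, a root whose two child branches each end in a pair of leaves gets SN = 3, whereas merging an SN = 2 branch with a single leaf keeps SN = 2.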

We used SN = {2, 3} branches to define the PD because they are the most consistently directional (Extended Data Fig. 3a). SN = 1 branches have a relatively flat angular distribution, so their inclusion would only add noise, rather than signal, to our PD estimate (which we confirmed in preliminary analysis). Furthermore, the scale of the SN = 1 branches (see examples in Fig. 2g or the gallery of reconstructed neurons in the Supplementary Data) is much smaller than the columns, and they are dominated by the ‘last mile’ of neuronal connectivity within the very dense columns and do not contribute to the neuron ‘backbone’.

Most T4 cells we reconstructed have few SN = 4 branches (which are also directional, but too few to be relied on) and rarely have SN = 5 branches. Each branch is represented by a 3D vector. The vector sum over all SN = {2, 3} branches defines the direction of the PD vector (Fig. 2f). We also assigned an amplitude to the PD in addition to its direction. To generate a mass distribution for each T4 dendrite, we resampled the neuron’s skeleton so that its nodes are roughly equidistant (manually placed nodes are not). Then, all dendrite nodes were projected onto the PD axis. We define the length of the PD vector using a robust estimator: the distance between the 1st and 99th percentiles of this distribution. The width is a segment orthogonal to the PD vector, with its length defined in the same way as that of the PD but without a direction (Fig. 2g).
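The PD direction and length can be sketched as below. The orientation of each branch vector (for example, from its proximal to its distal end) and the resampling step size are assumptions of this Python illustration; only the vector sum over SN = 2 and 3 branches and the 1st–99th percentile extent follow the text.

```python
import numpy as np


def pd_direction(branch_vectors, branch_sn):
    """Unit PD direction: vector sum of the SN = 2 and SN = 3 branch vectors.
    How each branch vector is oriented (e.g. proximal to distal end) is assumed."""
    keep = np.isin(np.asarray(branch_sn), (2, 3))
    v = np.asarray(branch_vectors, dtype=float)[keep].sum(axis=0)
    return v / np.linalg.norm(v)


def resample_path(points, step):
    """Roughly equidistant nodes along one unbranched path of a skeleton."""
    p = np.asarray(points, dtype=float)
    s = np.concatenate([[0.0], np.cumsum(np.linalg.norm(np.diff(p, axis=0), axis=1))])
    t = np.arange(0.0, s[-1] + 1e-12, step)
    return np.column_stack([np.interp(t, s, p[:, k]) for k in range(p.shape[1])])


def pd_length(nodes, direction, lo=1.0, hi=99.0):
    """Robust extent along `direction`: distance between the lo-th and hi-th
    percentiles of the node projections."""
    proj = np.asarray(nodes, dtype=float) @ np.asarray(direction, dtype=float)
    q_lo, q_hi = np.percentile(proj, [lo, hi])
    return q_hi - q_lo
```

The width can be obtained by calling `pd_length` with a unit vector orthogonal to the PD; which orthogonal axis to use in 3D is left open here.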

Mapping T4 PDs into the regular grid in the medulla and the eye coordinates using kernel regression

Kernel regression is a type of non-parametric regression, often used when the relationship between the independent and the dependent variables does not follow a specific form. It computes a locally weighted estimate, in which the weight of each data point decreases with its distance from the point being estimated. In our case, we used a Gaussian kernel as the weighting function. More specifically, given a set of points P in space A (for example, medulla columns in anatomical space), a second set of points Q in space B (for example, ommatidia directions in visual space) and a one-to-one mapping between P and Q, one can map a new point (for example, a T4 PD vector) in A to a location in B on the basis of its relationships with respect to P, with more weight given to closer neighbours.
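A minimal Python sketch of Gaussian-kernel local-linear regression (the local-linear estimator used with the np package; a local-constant Nadaraya–Watson estimator would keep only the intercept column). It is not the np implementation, and the fixed bandwidth is supplied by hand rather than selected from the data. Each query point gets its own weighted least-squares fit, in which closer data points carry more weight.

```python
import numpy as np


def local_linear_regress(X, Y, x_new, bandwidth):
    """Gaussian-kernel local-linear regression from space A to space B.

    X     : (n, d_a) points in A, e.g. locally flattened medulla column positions
    Y     : (n, d_b) or (n,) matched points or values in B, e.g. ommatidia directions
    x_new : (m, d_a) query points in A, e.g. the end points of a T4 PD vector
    bandwidth : fixed Gaussian kernel width (chosen by hand in this sketch)
    """
    X = np.asarray(X, dtype=float)
    x_new = np.atleast_2d(np.asarray(x_new, dtype=float))
    Y = np.asarray(Y, dtype=float).reshape(len(X), -1)
    out = np.empty((len(x_new), Y.shape[1]))
    for i, x0 in enumerate(x_new):
        d = X - x0
        w = np.exp(-0.5 * np.sum(d ** 2, axis=1) / bandwidth ** 2)  # closer -> heavier
        A = np.hstack([np.ones((len(X), 1)), d])                   # local linear design
        AtW = A.T * w                                              # A^T W
        beta = np.linalg.solve(AtW @ A, AtW @ Y)                   # weighted least squares
        out[i] = beta[0]                                           # intercept = fit at x0
    return out
```

A useful property of the local-linear form is that it reproduces any exactly linear mapping, which reduces bias near the edges of the data. When the target space is the unit sphere of ommatidia directions, the regressed vectors are generally not unit length; renormalizing them is one plausible option (an assumption here).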

We used this method to map PDs from local medulla space to a regular grid in Fig. 2h and to map PDs from medulla space to visual space in Fig. 4b. We verify the accuracy of the regression method with a test described at the end of this section. For mapping to a regular grid, we defined a 2D reference grid with 19 points, which represented the home column (+1) and the second (+6) and third (+12) closest neighbouring columns in a hexagonal grid. For a given T4 neuron, we searched for the same set of neighbouring medulla columns. We flattened these columns and the T4’s PD locally by projecting them onto a 2D plane given by principal component analysis; that is, the plane is perpendicular to the third principal axis. Finally, we used kernel regression to map the PD from the locally flattened 2D medulla space to the 2D reference grid. The difference in mapping to the visual space (Fig. 4b and Extended Data Fig. 7a) is that the regression is from the locally flattened 2D medulla space to a unit sphere in 3D (the space of ommatidia directions).
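The two geometric ingredients of this step, the 19-point hexagonal reference grid and the local flattening by principal component analysis, can be sketched in Python as follows. The grid spacing and orientation and the use of SVD to obtain the principal axes are illustrative choices; passing the two end points of a PD vector as extra points projects the PD into the same plane as the columns.

```python
import numpy as np


def hex_reference_grid():
    """19-point unit hexagonal grid: home column, the 6 nearest columns and the next
    ring of 12 (all axial coordinates within hexagonal distance 2)."""
    pts = [(q + 0.5 * r, np.sqrt(3) / 2 * r)
           for q in range(-2, 3) for r in range(-2, 3)
           if max(abs(q), abs(r), abs(q + r)) <= 2]
    return np.array(pts)


def flatten_local(points_3d, extra_3d=None):
    """Project points onto the plane of their first two principal axes (the plane
    perpendicular to the third axis). `extra_3d` (e.g. the two end points of a T4 PD
    vector) is projected into the same plane."""
    P = np.asarray(points_3d, dtype=float)
    centre = P.mean(axis=0)
    _, _, vt = np.linalg.svd(P - centre)            # rows of vt: principal axes
    basis = vt[:2].T                                # (3, 2)
    flat = (P - centre) @ basis
    if extra_3d is None:
        return flat
    return flat, (np.asarray(extra_3d, dtype=float) - centre) @ basis
```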

Kernel regression can also be used as an interpolation method. Interpolation amounts to regressing from a space onto a scalar or vector field defined on it; that is, assigning a value to a new location on the basis of existing values in its neighbourhood. This is how we calculated the PD fields in Fig. 4c and Extended Data Fig. 7b.

In practice, we used the np package78 in R, particularly the npregbw function, which selects the width (bandwidth) of the Gaussian kernel. Most parameters of npregbw were left at their defaults, except that: (1) we used the local-linear estimator, regtype = 'll', which we found performs better near boundaries; and (2) we used a fixed bandwidth, bwtype = 'fixed', for interpolation and the adaptive nearest-neighbour method, bwtype = 'adaptive_nn', for mapping between two different spaces (for example, from medulla to ommatidia).
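The sketch below mimics the two bandwidth choices in Python for readers without R. The fixed bandwidth is picked by leave-one-out least-squares cross-validation over a small grid of candidates (npregbw’s default criterion, bwmethod = 'cv.ls', is optimized numerically rather than over a grid), and the adaptive bandwidths are taken as each sample’s distance to its k-th nearest neighbour. The latter is an assumed reading of 'adaptive_nn'; the np documentation gives the exact definition.

```python
import numpy as np


def _local_linear_at(X, Y, x0, h, exclude=None):
    """Gaussian-kernel local-linear estimate at x0 (optionally leaving one point out)."""
    d = X - x0
    w = np.exp(-0.5 * np.sum(d ** 2, axis=1) / h ** 2)
    if exclude is not None:
        w[exclude] = 0.0
    A = np.hstack([np.ones((len(X), 1)), d])
    AtW = A.T * w
    return np.linalg.solve(AtW @ A, AtW @ Y)[0]


def cv_ls_bandwidth(X, Y, candidates):
    """Fixed bandwidth minimizing the leave-one-out squared prediction error."""
    X, Y = np.asarray(X, dtype=float), np.asarray(Y, dtype=float)
    scores = [np.mean([np.sum((Y[i] - _local_linear_at(X, Y, X[i], h, exclude=i)) ** 2)
                       for i in range(len(X))])
              for h in candidates]
    return candidates[int(np.argmin(scores))]


def adaptive_nn_bandwidths(X, k):
    """Per-sample bandwidths: distance from each sample to its k-th nearest neighbour."""
    X = np.asarray(X, dtype=float)
    D = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=2)
    return np.sort(D, axis=1)[:, k]                 # column 0 is the point itself
```

An adaptive bandwidth widens the kernel where samples are sparse, which is one reason it suits mappings between spaces whose point densities differ.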

Extended Data Fig. 6f quantifies the kernel regression by comparing the medulla columns regressed from the medulla space to the ommatidia space with their matched positions. A perfect regression would yield no spatial discrepancies between these positions, and the observed residuals are quite small compared with the inter-ommatidial angle. Because PD vectors are defined in reference to the medulla columns, we expect the regression to project them to visual space with similarly high accuracy. Further details can be found in our GitHub repository and the np package manual.
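The residual check can be expressed with a numerically stable angle between direction vectors. In this Python sketch, the neighbour index pairs used for the inter-ommatidial angle and the use of medians as summary statistics are assumptions; the text does not specify which summary was used.

```python
import numpy as np


def angle_deg(a, b):
    """Angle (deg) between matching rows of a and b; atan2 is accurate at small angles."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return np.degrees(np.arctan2(np.linalg.norm(np.cross(a, b), axis=-1),
                                 np.sum(a * b, axis=-1)))


def residual_summary(regressed, matched, neighbour_pairs):
    """Median angular residual, median inter-ommatidial angle and their ratio.

    regressed, matched : (n, 3) directions; neighbour_pairs : (m, 2) index pairs of
    adjacent ommatidia (a hypothetical adjacency list built from the hexagonal grid).
    """
    res = angle_deg(regressed, matched)
    m = np.asarray(matched, dtype=float)
    i, j = np.asarray(neighbour_pairs).T
    ioa = angle_deg(m[i], m[j])
    return np.median(res), np.median(ioa), np.median(res) / np.median(ioa)
```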

Ommatidia directions

We analysed the µCT volumes in Imaris v.10.1 (Oxford Instruments). We separately segmented out a volume that contained all the lenses and one that contained all the photoreceptor tips. We then used the contrast-based ‘spot detection’ algorithm in Imaris to locate the centres of individual lenses and photoreceptor tips, and checked the detections by visual inspection, correcting them by manual editing where needed. The lens positions are highly regular and can be readily mapped onto a regular hexagonal grid (Extended Data Fig. 5a, directly comparable to the medulla grid in Fig. 2d). With our optimized µCT data, it is also straightforward to match all individual lenses to all individual photoreceptor ‘tips’ in a one-to-one manner, and consequently to compute the ommatidia viewing directions. These directional vectors can be represented as points on a unit sphere (Fig. 3e). We then performed a locally weighted smoothing for points with at least five neighbours: the position of the point itself accounts for 50%, and the average position of its six neighbours accounts for the remaining 50%. This gentle smoothing only affects the positions in the bulk of the eye, leaving the boundary points alone.
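The direction computation and the smoothing rule can be sketched in Python as follows, assuming that lenses and tips are already matched row by row and that each point’s grid neighbours are given as index lists. Re-projecting the smoothed vector onto the unit sphere is an assumption of this sketch; the neighbour threshold of five follows the text.

```python
import numpy as np


def viewing_directions(lens_xyz, tip_xyz):
    """Unit viewing direction per ommatidium: from the photoreceptor tip through the
    centre of its matched lens (rows of the two arrays are matched one-to-one)."""
    v = np.asarray(lens_xyz, dtype=float) - np.asarray(tip_xyz, dtype=float)
    return v / np.linalg.norm(v, axis=1, keepdims=True)


def smooth_directions(dirs, neighbours, min_neighbours=5):
    """Replace each point having >= min_neighbours neighbours by 50% itself plus 50% the
    mean of its neighbours, computed from the unsmoothed input; boundary points are
    left alone. Re-normalizing onto the unit sphere is an assumption."""
    dirs = np.asarray(dirs, dtype=float)
    out = dirs.copy()
    for i, nb in enumerate(neighbours):
        if len(nb) >= min_neighbours:
            p = 0.5 * dirs[i] + 0.5 * dirs[list(nb)].mean(axis=0)
            out[i] = p / np.linalg.norm(p)
    return out
```

Computing every smoothed point from the unsmoothed input keeps the result independent of the order in which points are visited.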

Assuming left–right symmetry, we used the lens positions from both eyes to define the visual field’s frontal midline (sagittal plane). Together with the equator, identified by the inversion in the chirality of the outer photoreceptors (Fig. 3c,d), we could then define an eye coordinate system for the fly’s visual space—represented for one eye in Fig. 3e,f. Note that the z = 0 plane (z is ‘up’ in Fig. 3e) in the coordinate system is defined by lens positions, hence the ‘equator’ ommatidia directions do not necessarily lie in this plane (more easily seen in Fig. 3f). In addition, we defined the ‘central meridian’ line of points (+v in Fig. 2e,f and Extended Data Fig. 5a) that divides the whole grid into roughly equal halves. Because this definition is based on the grid structure, this central meridian does not lie on a geographical meridian line in the eye coordinates.
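One way to build such a frame, sketched in Python under stated assumptions: the sagittal plane is taken as the plane that bisects the left and right lens clouds (so its normal lies along the difference of their centroids), and an approximate ‘up’ vector, for example a normal to the plane through the equatorial lenses, is supplied as a hypothetical input. The frame is then completed by Gram–Schmidt orthogonalization.

```python
import numpy as np


def eye_frame(lens_left, lens_right, up_guess):
    """Rows of R: x (forward), y (towards the left eye), z (up); plus the origin.

    Assumes mirror symmetry, so the sagittal plane bisects the two lens clouds and its
    normal lies along the difference of their centroids. `up_guess` is hypothetical.
    """
    cl = np.mean(np.asarray(lens_left, dtype=float), axis=0)
    cr = np.mean(np.asarray(lens_right, dtype=float), axis=0)
    y = (cl - cr) / np.linalg.norm(cl - cr)
    up = np.asarray(up_guess, dtype=float)
    z = up - np.dot(up, y) * y                       # Gram-Schmidt: remove lateral part
    z /= np.linalg.norm(z)
    x = np.cross(y, z)                               # completes a right-handed frame
    return np.vstack([x, y, z]), 0.5 * (cl + cr)


def to_az_el_deg(dirs, R):
    """Azimuth and elevation (deg) of direction vectors expressed in the eye frame."""
    d = np.atleast_2d(np.asarray(dirs, dtype=float)) @ R.T
    d /= np.linalg.norm(d, axis=1, keepdims=True)
    return (np.degrees(np.arctan2(d[:, 1], d[:, 0])),
            np.degrees(np.arcsin(np.clip(d[:, 2], -1.0, 1.0))))
```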

Eye map: one-to-one mapping between medulla columns and ommatidia directions

With both medulla columns and ommatidia directions mapped to a regular grid (Fig. 2d and Extended Data Fig. 5a) and the equators and central meridians defined, it is straightforward to match these two point sets, starting from the centre and working outwards. Because the medulla columns come from a fly imaged with EM and the ommatidia directions from a different fly imaged with µCT, we do not expect these two point sets to match exactly. Still, we endeavoured to use flies of the same genotype with a very similar total number of ommatidia. By matching the points from the centre outwards and relying on anatomical landmarks such as the equator, we minimize discrepancies in the column receptive fields, especially in the eye’s interior. By construction, this approach yields a more accurate alignment in the interior of the eye and medulla than at the boundary of each point set, which suits our purpose of mapping the global organization of T4 PDs. Nonetheless, we reduce boundary effects by adding auxiliary points along the grid beyond the boundary points and using them to regress the original boundary points. Matching at the boundary is further complicated by the existence of medulla columns with no inner photoreceptor (R7 or R8) inputs57 (Fig. 2c). In the eye map in Fig. 4a, unmatched points are marked with empty circles; all of them lie on the boundaries (which is why the ommatidia directions in Fig. 3f contain additional points). For these reasons, we expect our alignment to be accurate in the eye’s interior, but there are limits to how accurately the medulla columns and ommatidia directions along the boundary of each dataset (from two separate flies) can be aligned. In Fig. 3h,i and Extended Data Fig. 5c,d, we also mark the boundary points that did not have enough neighbours for computing the inter-ommatidial angles, shear angles or aspect ratios. Of note, our main findings about the universal sampling of medulla columns (Fig. 2) and the strong relationship between T4 PDs and the shear angle of ommatidia hexagons (compare Fig. 3i with Fig. 4d) are well supported by the anatomy of the bulk of the eye and do not depend on perfect matching across datasets or on the particular fly used to construct the eye map (Extended Data Fig. 4f,g).
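The centre-outwards matching can be illustrated with a simplified sketch. It assumes (our assumption, not the authors' data format) that each point set has already been indexed on a common integer grid whose origin sits at the intersection of the equator and the central meridian, so that matching reduces to pairing points with the same index, visiting interior indices first and reporting the points left unmatched. The function name `match_centre_out` and the dict layout are hypothetical, and the sketch omits the auxiliary-point regression used at the boundary.

```python
from typing import Dict, List, Tuple

# Hypothetical layout: grid index (h, v) -> 3D coordinate, with (0, 0) at the
# intersection of the equator and the central meridian.
Index = Tuple[int, int]
Point = Tuple[float, float, float]


def match_centre_out(
    columns: Dict[Index, Point], ommatidia: Dict[Index, Point]
) -> Tuple[List[Tuple[Index, Point, Point]], List[Index], List[Index]]:
    """Pair medulla columns and ommatidia that share a grid index, centre first."""
    # Visit shared indices in order of increasing distance from the grid centre,
    # so interior points (where alignment is most reliable) are paired first.
    shared = sorted(
        set(columns) & set(ommatidia),
        key=lambda ij: (ij[0] ** 2 + ij[1] ** 2, ij),
    )
    pairs = [(ij, columns[ij], ommatidia[ij]) for ij in shared]
    # Indices present in only one set stay unmatched (typically boundary points).
    unmatched_columns = sorted(set(columns) - set(ommatidia))
    unmatched_ommatidia = sorted(set(ommatidia) - set(columns))
    return pairs, unmatched_columns, unmatched_ommatidia
```

In this toy setting, unmatched indices play the role of the empty circles in the eye map; in real data, the grid indexing itself is the hard part and is established from anatomical landmarks.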

Grid convention: regular versus irregular, and hexagonal versus square

Facet lenses of the fly’s eye are arranged in an almost regular hexagonal grid. However, the medulla columns are squeezed along the anterior–posterior direction and more closely resemble a square grid tilted at 45° (Extended Data Fig. 5e). This difference can also be seen by comparing the aspect ratios (Extended Data Fig. 5c,d). To preserve these anatomical features, we mapped the medulla columns and T4 PDs onto a regular square grid (tilted by 45°; see, for example, Fig. 2d,h) and the ommatidia directions onto a regular hexagonal grid (Extended Data Fig. 5a).
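The two grid conventions can be made concrete by mapping integer grid indices to 2D positions. The sketch below uses axial coordinates for a regular hexagonal grid and a unit square grid rotated by 45°; the function names and indexing scheme are illustrative assumptions, not the coordinates used in the paper's figures.

```python
import math
from typing import Tuple

# Neighbour offsets in index space for each convention (illustrative).
HEX_NEIGHBOURS = [(1, 0), (-1, 0), (0, 1), (0, -1), (1, -1), (-1, 1)]
SQUARE_NEIGHBOURS = [(1, 0), (-1, 0), (0, 1), (0, -1)]


def hex_position(i: int, j: int) -> Tuple[float, float]:
    """Regular hexagonal grid with unit spacing, using axial indices (i, j)."""
    return (i + 0.5 * j, j * math.sqrt(3) / 2)


def tilted_square_position(i: int, j: int) -> Tuple[float, float]:
    """Regular square grid with unit spacing, rotated by 45 degrees."""
    s = 1 / math.sqrt(2)
    return ((i - j) * s, (i + j) * s)
```

Each hexagonal site has six equidistant neighbours and each tilted-square site four, so the same index pair carries a different geometric meaning in the two representations, which is why the medulla and eye data are kept on separate grids.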

Mercator and Mollweide projections

For presenting spherical data, the Mercator projection is more common, but we prefer the Mollweide projection because it is an equal-area projection with smaller distortion near the poles, whereas the Mercator projection diverges at the poles. The Mollweide projection therefore gives a more intuitive representation of spatial coverage. On the other hand, the Mercator projection is conformal (it preserves local angles) and is therefore more convenient for reading out angular distributions, which is why we use it for presenting the H2 data (Fig. 1f and Extended Data Fig. 1e,f). Otherwise, we present Mollweide projections in the main figures and provide Mercator versions for some plots (Extended Data Figs. 5f–j, 6a and 7c,g). See Extended Data Fig. 4c for a comparison of the two projections.
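For reference, here is a minimal sketch of the standard forward formulas for both projections on a unit sphere, with longitude (azimuth) and latitude (elevation) in radians. Mollweide requires solving 2θ + sin 2θ = π sin φ for an auxiliary angle θ, done here with Newton's method; the resulting ellipse has semi-axes 2√2 and √2 and hence area 4π, equal to the sphere's. Function names are our own; this is not the plotting code used for the figures.

```python
import math
from typing import Tuple


def mollweide(lon: float, lat: float, tol: float = 1e-12) -> Tuple[float, float]:
    """Mollweide projection of (lon, lat) in radians on a unit sphere."""
    if abs(abs(lat) - math.pi / 2) < tol:
        # At the poles the Newton derivative vanishes; theta is exactly +/- pi/2.
        theta = math.copysign(math.pi / 2, lat)
    else:
        theta = lat  # initial guess
        for _ in range(50):
            f = 2 * theta + math.sin(2 * theta) - math.pi * math.sin(lat)
            fp = 2 + 2 * math.cos(2 * theta)
            step = f / fp
            theta -= step
            if abs(step) < tol:
                break
    x = 2 * math.sqrt(2) / math.pi * lon * math.cos(theta)
    y = math.sqrt(2) * math.sin(theta)
    return x, y


def mercator(lon: float, lat: float) -> Tuple[float, float]:
    """Mercator projection; y grows without bound as lat approaches +/- pi/2."""
    return lon, math.log(math.tan(math.pi / 4 + lat / 2))
```

The pole special case mirrors the point made above: Mollweide maps each pole to a single finite point, whereas Mercator has no finite image for it.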

Ideal optic-flow fields

Following the classic framework for the geometry of optic flow79, we calculate the optic-flow field for a spherical sampling of visual space, assuming that all objects are at an equal distance from the fly (an assumption that matters only for translational movements). With ommatidia directions represented as 3D unit vectors, the optic-flow field induced by translation is computed as the component of the negated translation vector (because motion and optic flow are ‘opposite’) perpendicular to each ommatidia direction (that is, a vector rejection). The flow field induced by rotation is computed as the cross product of the ommatidia directions with the rotation vector. Because the motion perceived by the fly is opposite to the induced motion, the flow field is the reverse of the ones described above (Fig. 4e). We compute the angles between T4 PDs and the ideal optic-flow fields at each ommatidia direction for subsequent comparisons between various optic-flow fields (Fig. 4f,g and Extended Data Fig. 7e,f).
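The two flow computations and the angle comparison can be sketched with NumPy as follows. The sketch follows the formulas as stated (rejection of the negated translation vector; cross product of each direction with the rotation vector) under the equal-distance assumption; the overall display sign is left to the caller, and the function names are hypothetical.

```python
import numpy as np


def translation_flow(dirs: np.ndarray, t: np.ndarray) -> np.ndarray:
    """Flow for translation t: rejection of -t from each unit direction (rows of dirs)."""
    minus_t = -np.asarray(t, dtype=float)
    # Subtract the component of -t along each viewing direction (vector rejection).
    return minus_t - (dirs @ minus_t)[:, None] * dirs


def rotation_flow(dirs: np.ndarray, w: np.ndarray) -> np.ndarray:
    """Flow for rotation w: cross product of each viewing direction with w."""
    return np.cross(dirs, np.asarray(w, dtype=float))


def angle_between(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Unsigned angle in degrees between paired rows of a and b."""
    cross = np.linalg.norm(np.cross(a, b), axis=1)
    dot = np.einsum("ij,ij->i", a, b)
    # arctan2 is numerically stabler than arccos for nearly parallel vectors.
    return np.degrees(np.arctan2(cross, dot))
```

Both flow fields are tangent to the sphere at each viewing direction, so a T4 PD expressed as a tangent vector can be compared directly with `angle_between`.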

We performed a grid search to determine the optimal axis of movement (the one with minimal mean angular error) for a given PD field. We defined 10,356 axes on the unit sphere (roughly 1° sampling) and generated the optic-flow fields induced by translations and rotations along each of these axes. We compared all of these optic-flow fields with the PD fields for T4b and T4d to find the axes with minimal average angular differences (Extended Data Fig. 6e). These are the optimal axes in Fig. 4f–i and Extended Data Figs. 6e and 7f.
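A minimal sketch of such a grid search is shown below. The text does not say how the 10,356 axes were placed on the sphere; here we substitute a Fibonacci lattice as one common way to obtain near-uniform sampling, and the mean unsigned angle is used as the error. All function names are hypothetical.

```python
import numpy as np


def fibonacci_axes(n: int) -> np.ndarray:
    """Approximately uniform unit vectors on the sphere (Fibonacci lattice)."""
    k = np.arange(n) + 0.5
    z = 1.0 - 2.0 * k / n
    phi = np.pi * (3.0 - np.sqrt(5.0)) * k  # golden-angle increments
    r = np.sqrt(1.0 - z**2)
    return np.column_stack([r * np.cos(phi), r * np.sin(phi), z])


def flow_field(dirs: np.ndarray, axis: np.ndarray, kind: str) -> np.ndarray:
    """Ideal flow at unit directions for rotation about, or translation along, axis."""
    if kind == "rotation":
        return np.cross(dirs, axis)
    minus_axis = -axis
    # Translation: reject the negated axis from each viewing direction.
    return minus_axis - (dirs @ minus_axis)[:, None] * dirs


def mean_angle_deg(a: np.ndarray, b: np.ndarray) -> float:
    """Mean unsigned angle in degrees between paired rows of a and b."""
    cross = np.linalg.norm(np.cross(a, b), axis=1)
    dot = np.einsum("ij,ij->i", a, b)
    return float(np.degrees(np.arctan2(cross, dot)).mean())


def best_axis(dirs: np.ndarray, pds: np.ndarray, kind: str = "rotation", n_axes: int = 10356):
    """Return the candidate axis with minimal mean angular error, and that error."""
    axes = fibonacci_axes(n_axes)
    errors = np.array([mean_angle_deg(flow_field(dirs, w, kind), pds) for w in axes])
    k = int(np.argmin(errors))
    return axes[k], errors[k]
```

Sampling the full sphere (rather than a hemisphere) matters because an axis and its opposite produce opposite flow fields, so only one of the two can match a given PD field.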

Data analysis and plotting conventions

All histograms are smoothed using kernel density estimation. To set the scale of each histogram plot, we show a scale bar on the left-hand side that spans from zero at the bottom to the height of a uniform distribution.
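As an illustration of the smoothing and the scale-bar reference, the sketch below computes a kernel density estimate for circular data such as PD angles in degrees, using a Gaussian kernel wrapped once in each direction so that mass near 0° and 360° is not lost. The bandwidth, the wrapped-Gaussian kernel and the names are assumptions; the kernel and bandwidth actually used are not stated. The scale-bar height then corresponds to the uniform density, 1/360 per degree.

```python
import numpy as np

# Hypothetical scale-bar height: the density of a uniform distribution on [0, 360).
UNIFORM_HEIGHT = 1.0 / 360.0


def circular_kde(angles_deg, grid_deg, bandwidth_deg: float = 10.0) -> np.ndarray:
    """Wrapped-Gaussian KDE of angles in degrees, as a density per degree."""
    angles = np.asarray(angles_deg, dtype=float)[:, None]
    grid = np.asarray(grid_deg, dtype=float)[None, :]
    density = np.zeros(grid.shape[1])
    # Add copies shifted by +/-360 degrees so the estimate is periodic.
    for shift in (-360.0, 0.0, 360.0):
        z = (grid - angles - shift) / bandwidth_deg
        density += np.exp(-0.5 * z**2).sum(axis=0)
    return density / (angles.shape[0] * bandwidth_deg * np.sqrt(2.0 * np.pi))
```

A single wrap in each direction suffices when the bandwidth is small compared with 360°; the estimate integrates to 1 over one period, so it can be compared directly with `UNIFORM_HEIGHT`.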

All 2D projections (Mollweide or Mercator) are oriented so that the right half (azimuth > 0) represents the right-side visual field of the fly (looking from inside out). The medulla grid and the ommatidia grid are left–right flipped relative to each other because of the optic chiasm. The top half (elevation > 0) represents the dorsal visual field. The boxed label in the lower right corner of each plot of mapped points indicates the space (med or eye) and representation (anat, 3D, Moll or Merc) used; anat is short for anatomical and indicates that the data are shown in the ‘native’ coordinates of the anatomical dataset.

Animations (Supplementary Videos 1 and 2) were created in Blender (v.4.2)80 with the Python package NAVis (v.1.10.0)81.

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.