Life is uncertain. None of us know what is going to happen. We know little of what has happened in the past, or is happening now outside our immediate experience. Uncertainty has been called the ‘conscious awareness of ignorance’1 — be it of the weather tomorrow, the next Premier League champions, the climate in 2100 or the identity of our ancient ancestors.

In daily life, we generally express uncertainty in words, saying an event “could”, “might” or “is likely to” happen (or have happened). But uncertain words can be treacherous. When, in 1961, the newly elected US president John F. Kennedy was informed about a CIA-sponsored plan to invade communist Cuba, he commissioned an appraisal from his military top brass. They concluded that the mission had a 30% chance of success — that is, a 70% chance of failure. In the report that reached the president, this was rendered as “a fair chance”. The Bay of Pigs invasion went ahead, and was a fiasco. There are now established scales for converting words of uncertainty into rough numbers. Anyone in the UK intelligence community using the term ‘likely’, for example, should mean a chance of between 55% and 75% (see go.nature.com/3vhu5zc).
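As a rough illustration of such a scale, the sketch below encodes word bands modelled on the UK Professional Head of Intelligence Assessment (PHIA) probability yardstick. Only the 'likely' band (55–75%) is stated above. The other phrases and ranges are recalled from the published yardstick and should be treated as assumptions to check against the linked source. The yardstick deliberately leaves gaps between bands.

```python
from typing import Optional

# Words-to-numbers bands modelled on the UK PHIA yardstick.
# ASSUMPTION: only "likely" (55-75%) is given in the text; the other bands
# are recalled from the published yardstick and should be verified.
YARDSTICK = [
    ("remote chance", 0.00, 0.05),
    ("highly unlikely", 0.10, 0.20),
    ("unlikely", 0.25, 0.35),
    ("realistic possibility", 0.40, 0.50),
    ("likely", 0.55, 0.75),
    ("highly likely", 0.80, 0.90),
    ("almost certain", 0.95, 1.00),
]


def words_for(p: float) -> Optional[str]:
    """Return the phrase whose band contains p, or None if p falls in a gap."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("probability must lie in [0, 1]")
    for phrase, low, high in YARDSTICK:
        if low <= p <= high:
            return phrase
    return None  # the yardstick leaves deliberate gaps between bands


def band_for(phrase: str) -> tuple[float, float]:
    """Return the numeric band that a phrase is meant to convey."""
    for name, low, high in YARDSTICK:
        if name == phrase:
            return low, high
    raise KeyError(phrase)


print(words_for(0.30))      # the military's 30% estimate of success
print(band_for("likely"))
```

On this assumed scale, a 30% chance of success would be reported as 'unlikely', not as 'a fair chance'.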

Attempts to put numbers on chance and uncertainty take us into the mathematical realm of probability, which today is used confidently in any number of fields. Open any science journal, for example, and you’ll find papers liberally sprinkled with P values, confidence intervals and possibly Bayesian posterior distributions, all of which are dependent on probability.

And yet, any numerical probability, I will argue — whether in a scientific paper, as part of weather forecasts, predicting the outcome of a sports competition or quantifying a health risk — is not an objective property of the world, but a construction based on personal or collective judgements and (often doubtful) assumptions. Furthermore, in most circumstances, it is not even estimating some underlying ‘true’ quantity. Probability, indeed, can only rarely be said to ‘exist’ at all.

Chance interloper

Probability was a relative latecomer to mathematics. Although people had been gambling with astragali (knucklebones) and dice for millennia, it was not until the French mathematicians Blaise Pascal and Pierre de Fermat started corresponding in the 1650s that any rigorous analysis was made of ‘chance’ events. Like water released from a pent-up dam, probability has since flooded fields as diverse as finance, astronomy and law — not to mention gambling.

To get a handle on probability’s slipperiness, consider how the concept is used in modern weather forecasts. Meteorologists make predictions of temperature, wind speed and quantity of rain, and often also the probability of rain — say 70% for a given time and place. The first three can be compared with their ‘true’ values; you can go out and measure them. But there is no ‘true’ probability of rain against which to compare the forecaster’s assessment. There is no ‘probability-ometer’. It either rains or it doesn’t.

What’s more, as emphasized by the philosopher Ian Hacking2, probability is “Janus-faced”: it handles both chance and ignorance. Imagine I flip a coin, and ask you the probability that it will come up heads. You happily say “50–50”, or “half”, or some other variant. I then flip the coin, take a quick peek, but cover it up, and ask: what’s your probability it’s heads now?

Note that I say “your” probability, not “the” probability. Most people are now hesitant to give an answer, before grudgingly repeating “50–50”. But the event has now happened, and there is no randomness left — just your ignorance. The situation has flipped from ‘aleatory’ uncertainty, about the future we cannot know, to ‘epistemic’ uncertainty, about what we currently do not know. Numerical probability is used for both these situations.

There is another lesson in here. Even if there is a statistical model for what should happen, this is always based on subjective assumptions — in the case of a coin flip, that there are two equally likely outcomes. To demonstrate this to audiences, I sometimes use a two-headed coin, showing that even their initial opinion of “50–50” was based on trusting me. This can be rash.

Subjectivity and science

My argument is that any practical use of probability involves subjective judgements. This doesn’t mean that I can put any old numbers on my thoughts — I would be proved a poor probability assessor if I claimed with 99.9% certainty that I can fly off my roof, for example. The objective world comes into play when probabilities, and their underlying assumptions, are tested against reality (see ‘How ignorant am I?’); but that doesn’t mean the probabilities themselves are objective.

Some assumptions that people use to assess probabilities will have stronger justifications than others. If I have examined a coin carefully before it is flipped, and it lands on a hard surface and bounces chaotically, I will feel more justified with my 50–50 judgement than if some shady character pulls out a coin and gives it a few desultory turns. But these same strictures apply anywhere that probabilities are used — including in scientific contexts, in which we might be more naturally convinced of their supposed objectivity.

Here’s an example of genuine scientific, and public, importance. Soon after the start of the COVID-19 pandemic, the RECOVERY trials started to test therapies in people hospitalized with the disease in the United Kingdom. In one experiment, more than 6,000 people were randomly allocated to receive either the standard care given in the hospital they were in, or that care plus a dose of dexamethasone, an inexpensive steroid3. Among those on mechanical ventilation, the age-adjusted daily mortality risk was 36% lower in the group allocated dexamethasone compared with the group that received only standard care (95% confidence interval of 19–49%). The P value — the calculated probability of observing such an extreme relative risk, assuming a null hypothesis of no underlying difference in risk — can be calculated to be 0.0001, or 0.01%.
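To see how such numbers hang together, the sketch below starts only from the quoted interval (a 19–49% reduction, that is, a rate ratio between 0.51 and 0.81). It assumes, which the text does not state, that the interval was computed as an estimate plus or minus 1.96 standard errors on the log scale. From that it backs out the implied point estimate, its standard error and an approximate two-sided P value. Because the interval limits are rounded, agreement with the quoted figures can only be expected to within rounding, and the result inherits every assumption of the underlying model.

```python
import math
from statistics import NormalDist

# Quoted 95% interval for the reduction in risk: 19% to 49%.
# As a rate ratio (1 - reduction), that is 0.51 to 0.81.
lower_rr, upper_rr = 1 - 0.49, 1 - 0.19

# ASSUMPTION: interval = estimate +/- z_crit * SE on the log scale (normal approximation).
z_crit = NormalDist().inv_cdf(0.975)
log_lo, log_hi = math.log(lower_rr), math.log(upper_rr)
log_rr = (log_lo + log_hi) / 2            # centre of the interval on the log scale
se = (log_hi - log_lo) / (2 * z_crit)     # implied standard error of log(rate ratio)

rate_ratio = math.exp(log_rr)
z = log_rr / se                           # Wald statistic against a null ratio of 1
p_two_sided = 2 * NormalDist().cdf(-abs(z))

print(f"implied rate ratio {rate_ratio:.3f} ({1 - rate_ratio:.0%} lower risk)")
print(f"z = {z:.2f}, two-sided P = {p_two_sided:.5f}")
```

With these inputs the implied rate ratio is about 0.64, a reduction of roughly 36%, and the two-sided P value is of the order of 10^-4.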

This is all standard analysis. But the precise confidence interval and P value rest on more than the null hypothesis. They also depend on all of the assumptions in the statistical model, such as the observations being independent: that there are no factors that cause people treated more closely in space and time to have more-similar outcomes. But there are many such factors, whether it’s the hospital in which people are being treated or changing care regimes. The precise values also rely on all of the participants in each group having the same underlying probability of surviving 28 days. This will differ for all sorts of reasons.

None of these false assumptions necessarily mean that the analysis is flawed. In this case, the signal is so strong that a model allowing, say, the underlying risk to vary between participants will make little difference to the overall conclusions. If the results were more marginal, however, it would be appropriate to do extensive analysis of the model’s sensitivity to alternative assumptions.

To invoke the much-quoted aphorism, “all models are wrong, but some are useful”4. The dexamethasone analysis was particularly useful because its firm conclusion changed clinical practice and saved hundreds of thousands of lives. But the probabilities that the conclusion was based on were not ‘true’ — they were a product of subjective, if reasonable, assumptions and judgements.

Down the rabbit hole

But are these numbers, then, our subjective, perhaps flawed estimates of some underlying ‘true’ probability, an objective feature of the world?

I will add the caveat here that I am not talking about the quantum world. At the sub-atomic level, the mathematics indicates that causeless events can happen with fixed probabilities (although at least one interpretation states that even those probabilities express a relationship with other objects or observers, rather than being intrinsic properties of quantum objects)5. But equally, it seems that this has negligible influence on everyday observable events in the macroscopic world.

I can also avoid the centuries-old arguments about whether the world, at a non-quantum level, is essentially deterministic, and whether we have free will to influence the course of events. Whatever the answers, we would still need to define what an objective probability actually is.

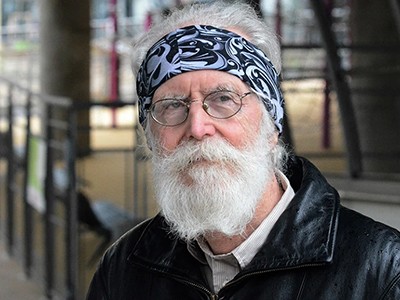

John F. Kennedy approved a US invasion of Cuba on the basis of imprecise probabilities. Credit: Michael Ochs Archives/Getty

Many attempts have been made to do this over the years, but they all seem either flawed or limited. These include frequentist probability, an approach that defines probability as the theoretical proportion of events that would be seen in infinitely many repetitions of essentially identical situations — for example, repeating the same clinical trial in the same population with the same conditions over and over again, like Groundhog Day. This seems rather unrealistic. The UK statistician Ronald Fisher suggested thinking of a unique data set as a sample from a hypothetical infinite population, but this seems to be more of a thought experiment than an objective reality. Or there’s the semi-mystical idea of propensity, that there is some true underlying tendency for a specific event to occur in a particular context, such as my having a heart attack in the next ten years. This seems practically unverifiable.

There is a limited range of well-controlled, repeatable situations of such immense complexity that, even if they are essentially deterministic, fit the frequentist paradigm by having a probability distribution with predictable properties in the long run. These include standard randomizing devices, such as roulette wheels, shuffled cards, spun coins, thrown dice and lottery balls, as well as pseudo-random number generators, which rely on non-linear, chaotic algorithms to give numbers that pass tests of randomness.
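A pseudo-random number generator makes the point concrete: it is entirely deterministic (rerunning it with the same seed reproduces every 'throw'), yet the long-run proportion of each outcome settles down just as the frequentist picture predicts. The sketch below simulates a fair die with Python's standard `random` module, whose generator is the Mersenne Twister; the seed and the number of throws are arbitrary choices for illustration.

```python
import random
from collections import Counter


def throw_dice(n_throws: int, seed: int) -> list[int]:
    """Simulate n_throws of a fair six-sided die using a seeded, deterministic generator."""
    rng = random.Random(seed)  # Mersenne Twister; the whole sequence is fixed by the seed
    return [rng.randint(1, 6) for _ in range(n_throws)]


throws = throw_dice(120_000, seed=2024)

# Determinism: the same seed reproduces exactly the same opening throws.
assert throws[:20] == throw_dice(20, seed=2024)

# Long-run relative frequencies nonetheless sit close to 1/6 for every face.
counts = Counter(throws)
frequencies = {face: counts[face] / len(throws) for face in range(1, 7)}
for face, freq in frequencies.items():
    print(f"face {face}: {freq:.4f}  (1/6 = {1 / 6:.4f})")
```

Changing the seed changes the individual throws but leaves the long-run proportions close to 1/6, which is the kind of stable behaviour that lets such devices fit the frequentist paradigm.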

In the natural world, we can throw in the workings of large collections of gas molecules which, even if following Newtonian physics, obey the laws of statistical mechanics; and genetics, in which the huge complexity of chromosomal selection and recombination gives rise to stable rates of inheritance. It might be reasonable in these limited circumstances to assume a pseudo-objective probability — ‘the’ probability, rather than ‘a’ (subjective) probability.

In every other situation in which probabilities are used, however — from broad swathes of science to sports, economics, weather, climate, risk analysis, catastrophe models and so on — it does not make sense to think of our judgements as being estimates of ‘true’ probabilities. These are just situations in which we can attempt to express our personal or collective uncertainty in terms of probabilities, on the basis of our knowledge and judgement.

Matters of judgement

This all just raises more questions. How do we define subjective probability? And why are the laws of probability reasonable, if they are based on stuff we essentially make up? This has been discussed in the academic literature for almost a century, again with no universally agreed outcome.

One of the first attempts was made in 1926 by the mathematician Frank Ramsey at the University of Cambridge, UK. He ranks as the person in history I would most like to meet. He was a genius whose work in probability, mathematics and economics is still considered fundamental. He worked only in the mornings, devoting his after-hours to a wife and a lover, playing tennis, drinking and enjoying exuberant parties while laughing “like a hippopotamus” (he was a big man, weighing in at 108 kilograms). He died in 1930 aged just 26, probably, according to his biographer Cheryl Misak, from contracting leptospirosis after swimming in the River Cam6.

Ramsey showed7 that all the laws of probability could be derived from expressed preferences for specific gambles. Outcomes have assigned utilities, and the value of gambling on something is summarized by its expected utility, which itself is governed by subjective numbers expressing partial belief — that is, our personal probabilities. This interpretation does, however, require an extra specification of these utility values. More recently, it’s been shown8 that the laws of probability can be derived simply by acting in such a way as to maximize your expected performance when using a proper scoring rule, such as the one shown in the quiz “How ignorant am I?”.
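What makes a scoring rule 'proper' can be checked numerically. The sketch below uses the quadratic (Brier) score as one example; the rule used in the 'How ignorant am I?' quiz is not specified here, so this choice is an assumption. If your actual degree of belief in an event is q and you report a probability p, your expected penalty is q(1 - p)^2 + (1 - q)p^2, which is smallest when p = q, so reporting your honest belief is the best policy. For contrast, an absolute-error penalty is not proper: it rewards exaggerating towards 0 or 1.

```python
def brier_penalty(reported: float, happened: bool) -> float:
    """Quadratic (Brier) penalty for announcing probability `reported`; lower is better."""
    outcome = 1.0 if happened else 0.0
    return (outcome - reported) ** 2


def absolute_penalty(reported: float, happened: bool) -> float:
    """Absolute-error penalty; used only to show what an improper rule looks like."""
    outcome = 1.0 if happened else 0.0
    return abs(outcome - reported)


def expected_penalty(rule, reported: float, belief: float) -> float:
    """Expected penalty under `rule` when your actual degree of belief is `belief`."""
    return belief * rule(reported, True) + (1 - belief) * rule(reported, False)


belief = 0.7
grid = [i / 100 for i in range(101)]  # candidate reports 0.00, 0.01, ..., 1.00

best_report = min(grid, key=lambda p: expected_penalty(brier_penalty, p, belief))
best_absolute = min(grid, key=lambda p: expected_penalty(absolute_penalty, p, belief))
print(best_report)    # the proper rule is optimized by reporting your belief
print(best_absolute)  # the improper rule pushes you to overstate certainty
```

Under the Brier score the best report is 0.7, your actual belief; under the absolute-error rule it is 1.0.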

Attempts to define probability are often rather ambiguous. In his 1941–2 paper ‘The Applications of Probability to Cryptography’, for example, Alan Turing uses the working definition that “the probability of an event on certain evidence is the proportion of cases in which that event may be expected to happen given that evidence”9. This acknowledges that practical probabilities will be based on expectations — human judgements. But by “cases”, does Turing mean instances of the same observation, or of the same judgements?

The latter has something in common with the frequentist definition of objective probability, just with the class of repeated similar observations replaced by a class of repeated similar subjective judgements. In this view, if the probability of rain is judged to be 70%, this places it in the set of occasions in which the forecaster assigns a 70% probability. The event itself is expected to occur in 70% of such occasions. This is probably my favourite definition. But the ambiguity of probability is starkly demonstrated by the fact that, after nearly four centuries, there are many people who won’t agree with me on that.
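This reading of probability suggests a direct check on a forecaster: group the occasions by the probability announced, and see how often the event happened within each group. The sketch below simulates a hypothetical forecaster who is, by construction, well calibrated; the set of announced probabilities and the number of occasions are illustrative choices, and real forecast records would replace the simulated data.

```python
import random
from collections import defaultdict


def calibration_table(forecasts, outcomes):
    """For each announced probability, return (number of occasions, observed frequency)."""
    groups = defaultdict(list)
    for p, happened in zip(forecasts, outcomes):
        groups[p].append(happened)
    return {p: (len(hits), sum(hits) / len(hits)) for p, hits in sorted(groups.items())}


# HYPOTHETICAL forecaster: announces one of a few probabilities, and the event
# then happens with exactly that probability (i.e. perfect calibration).
rng = random.Random(1)
forecasts = [rng.choice([0.1, 0.3, 0.5, 0.7, 0.9]) for _ in range(50_000)]
outcomes = [rng.random() < p for p in forecasts]

table = calibration_table(forecasts, outcomes)
for p, (n, freq) in table.items():
    print(f"said {p:.0%}: {n} occasions, event occurred {freq:.1%} of the time")
```

No single 70% forecast can be checked this way; only the collection of occasions on which 70% was announced can, which is the sense in which repeated observations are replaced by repeated judgements.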

Pragmatic approach

When I was a student in the 1970s, my mentor, statistician Adrian Smith, was translating the Italian actuary Bruno de Finetti’s Theory of Probability10. De Finetti had developed ideas of subjective probability at around the same time as Ramsey, but entirely independently. (They were very different characters: in contrast to Ramsey’s staunch socialism, in his youth de Finetti was an enthusiastic supporter of Italian dictator Benito Mussolini’s style of fascism, although he later changed his mind.) That book begins with the provocative statement: “probability does not exist”, an idea that has had a profound influence on my thinking over the past 50 years.

In practice, however, we perhaps don’t have to decide whether objective ‘chances’ really exist in the everyday non-quantum world. We can instead take a pragmatic approach. Rather ironically, de Finetti himself provided the most persuasive argument for this approach in his 1931 work on ‘exchangeability’, which resulted in a famous theorem that bears his name11. A sequence of events is judged to be exchangeable if our subjective probability for each sequence is unaffected by the order of our observations. De Finetti brilliantly proved that this assumption is mathematically equivalent to acting as if the events are independent, each with some true underlying ‘chance’ of occurring, and that our uncertainty about that unknown chance is expressed by a subjective, epistemic probability distribution. This is remarkable: it shows that, starting from a specific, but purely subjective, expression of convictions, we should act as if events were driven by objective chances.
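A small numerical illustration, assuming (purely for convenience) that uncertainty about the unknown 'chance' θ is described by a Beta(a, b) distribution. Averaging independent trials over θ gives any particular sequence of n events with k successes the probability B(a + k, b + n - k) / B(a, b), which depends only on k and not on the order, so the sequence is exchangeable. It is not, however, independent: observing a success raises the probability of the next one. This shows only the easy direction (a mixture of independent trials is exchangeable); de Finetti's theorem is the converse, for infinite exchangeable sequences. The sketch uses exact fractions to check both points.

```python
from fractions import Fraction
from itertools import permutations
from math import factorial


def sequence_probability(seq, a=1, b=1):
    """Probability of a 0/1 sequence when the unknown chance has a Beta(a, b) distribution.

    Built step by step from the predictive rule P(next = 1) = (a + s) / (a + b + s + f),
    where s and f count the successes and failures observed so far.
    """
    prob = Fraction(1)
    s = f = 0
    for x in seq:
        p_one = Fraction(a + s, a + b + s + f)
        prob *= p_one if x == 1 else 1 - p_one
        s, f = s + (x == 1), f + (x == 0)
    return prob


def beta_fn(x, y):
    """Beta function B(x, y) for positive integers, as an exact fraction."""
    return Fraction(factorial(x - 1) * factorial(y - 1), factorial(x + y - 1))


seq = (1, 1, 0, 1, 0)
probs = {sequence_probability(order) for order in set(permutations(seq))}
print(probs)  # a single value: the order of observations does not matter

n, k = len(seq), sum(seq)
print(probs == {beta_fn(1 + k, 1 + n - k) / beta_fn(1, 1)})  # matches the closed form

# Not independent: a first success raises the probability of a second.
p_first = sequence_probability((1,))
p_second_given_first = sequence_probability((1, 1)) / p_first
print(p_first, p_second_given_first)  # 1/2 then 2/3 under the uniform Beta(1, 1)
```

Under the uniform Beta(1, 1) choice, every ordering of three successes and two failures has probability 1/60.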

It is extraordinary that such an important body of work, underlying all of statistical science and much other scientific and economic activity, has arisen from such an elusive idea. And so I will conclude with my own aphorism. In our everyday world, probability probably does not exist — but it is often useful to act as if it does.