Sample and basic data collection for 2020 election study

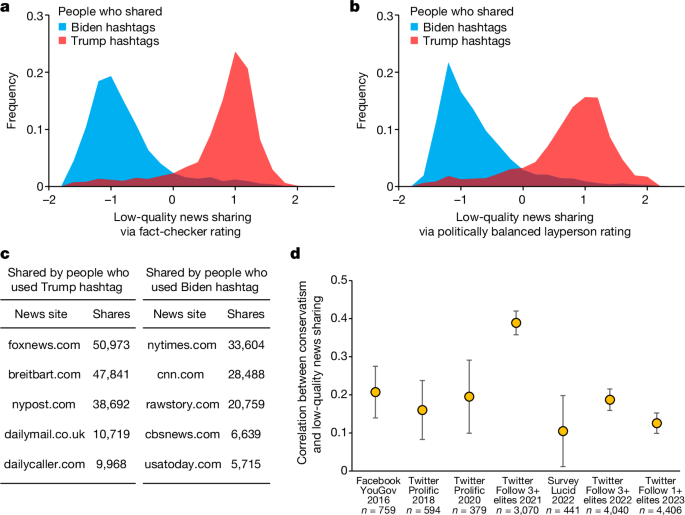

First, we collected a list of Twitter users who tweeted or retweeted either of the election hashtags #Trump2020 and #VoteBidenHarris2020 on 6 October 2020. We also collected the most recent 3,200 tweets sent by each of those accounts. We processed tweets and extracted tweeted domains from 34,920 randomly selected users (15,714 shared #Trump2020 and 19,206 shared #VoteBidenHarris2020), and filtered down to 12,238 users who shared at least five links to domains used by the ideology estimator of ref. 57. We also excluded 426 'elite' users with more than 15,000 followers who are probably unrepresentative of Twitter users more generally (because of this exclusion, suspension data were not collected for these users; however, as described in Supplementary Information section 2, our main results on the association between political orientation and low-quality news sharing are also observed among these elite users). These data were collected as part of a project that was approved by the Massachusetts Institute of Technology Committee on the Use of Humans as Experimental Subjects Protocol 91046.

We then constructed a politically balanced set of users by randomly selecting 4,500 users each from the remaining 4,756 users who shared #Trump2020 and 7,056 users who shared #VoteBidenHarris2020. After 9 months, on 30 July 2021, we checked the status of the 9,000 users and assessed suspension. We classify an account as having been suspended if the Twitter application programming interface (API) returned error code 63 ('User has been suspended') when querying that user.

To measure a user's tendency to share misinformation, we follow most other researchers in this space (refs. 11, 12, 58, 59) and use news source quality as a proxy for article accuracy, because it is not feasible to rate the accuracy of individual tweets at scale. Specifically, to quantify the quality of news shared by each user, we leveraged a previously published set of 60 news sites (20 mainstream, 20 hyper-partisan and 20 fake news; Table 1) whose trustworthiness had been rated by 8 professional fact-checkers as well as politically balanced crowds of laypeople. The crowd ratings were determined as follows. A sample of 971 participants from the USA, quota-matched to the national distribution on age, gender, ethnicity and geographic region, was recruited through Lucid (ref. 60). Each participant indicated how much they trusted each of the 60 news outlets using a 5-point Likert scale. For each outlet, we then computed a politically balanced crowd rating by calculating the average trust among Democrats and the average trust among Republicans, and then averaging those two averages.
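
The politically balanced rating described above can be illustrated with a minimal Python sketch. The function name, the party labels "D" and "R" and the example ratings are hypothetical and are not taken from the study data; the sketch only shows why averaging the two party means differs from pooling all respondents.

```python
from statistics import mean


def balanced_crowd_rating(ratings):
    """Average the Democrat mean and the Republican mean for one outlet.

    `ratings` is a list of (party, trust) pairs, where party is "D" or "R"
    (hypothetical labels) and trust is a Likert response.
    """
    dem = [trust for party, trust in ratings if party == "D"]
    rep = [trust for party, trust in ratings if party == "R"]
    # Each party contributes equally, regardless of how many raters it has.
    return (mean(dem) + mean(rep)) / 2


# Hypothetical outlet rated by three Democrats and one Republican.
example_ratings = [("D", 5), ("D", 4), ("D", 3), ("R", 1)]
balanced = balanced_crowd_rating(example_ratings)       # party means 4 and 1
pooled = mean(trust for _, trust in example_ratings)    # ignores party balance
```

In this toy case the balanced rating is 2.5, whereas the pooled mean is 3.25, because the pooled mean is dominated by the more numerous group.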

We also examined Reliability ratings for a set of 283 sites from Ad Fontes Media, Inc., Factual Reporting ratings for a set of 3,216 sites from Media Bias/Fact Check and Accuracy ratings for a set of 4,767 sites from a recent academic paper by Lasser et al. (ref. 33). We then used the Twitter API to retrieve the last 3,200 posts (as of 6 October 2020) for each user in our study, and collected all links to any of those sites shared (tweeted or retweeted) by each user. Following the approach used in previous work (refs. 58, 59), we calculated a news quality score for each user (bounded between 0 and 1) by averaging the ratings of all sites whose links they shared, separately for each set of site ratings. Finally, we transform these ratings into low-quality news sharing scores by subtracting the news quality ratings from 1. Over 99% of users in our study had shared at least one link to a rated domain. When combining the four expert-based measures into an aggregate news quality score, we replaced missing values with the sample mean; PCA indicated that only one component should be retained (87% of variation explained), which had weights of 0.50 on Pennycook and Rand (ref. 38) fact-checker ratings, 0.51 on Ad Fontes Media Reliability ratings, 0.48 on Media Bias/Fact Check Factual Reporting ratings and 0.51 on Lasser et al. (ref. 33) Accuracy ratings. In all PCA analyses, we use parallel analysis to determine the number of retained components.
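
A minimal sketch of the per-user score follows. It assumes that site ratings are already normalized to the range 0 to 1 and that each shared link counts once (so a site shared twice is weighted twice); the text does not state whether averaging is per link or per unique site, so that choice, the function name and the example domains are assumptions.

```python
from statistics import mean


def low_quality_score(shared_domains, site_quality):
    """Return 1 minus the mean quality of the rated domains a user shared.

    `shared_domains` lists one domain per shared link; `site_quality` maps
    rated domains to a quality rating in [0, 1]. Unrated domains are ignored.
    Returns None when the user shared no rated domain (a missing value).
    """
    rated = [site_quality[d] for d in shared_domains if d in site_quality]
    if not rated:
        return None
    return 1 - mean(rated)


# Hypothetical ratings and sharing history.
site_quality = {"a.example": 0.9, "b.example": 0.2}
shared = ["a.example", "b.example", "b.example", "unrated.example"]
score = low_quality_score(shared, site_quality)
```

Returning `None` for users without rated links keeps missing values explicit, so that they can later be handled by whatever imputation the analysis calls for.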

To measure a user's political orientation, we first classify their partisanship on the basis of whether they shared more #Trump2020 or #VoteBidenHarris2020 hashtags. Additionally, we retrieved all accounts followed by users in our sample and used the statistical model from ref. 39 to obtain a continuous measure of users' ideology on the basis of the ideological leaning of the accounts they followed. Similarly, we used the statistical models from ref. 40 and ref. 12 to estimate users' ideology using the ideological leanings of the news sites that the users shared content from. We also calculated user ideology by averaging political leanings of domains they shared through tweets or retweets on the basis of the method in ref. 12. The intuition behind these approaches is that users on social media are more likely to follow accounts (and share news stories from sources) that are aligned with their own ideology than those that are politically distant. Thus, the ideology of the accounts the user follows, and the ideology of the news sources the user shares, provide insight into the user's ideology. When combining these four measures into an aggregate political orientation score, we replaced missing values with the sample mean; PCA indicated that only one component should be retained (88% of variation explained), which had weights of 0.49 on hashtag-based partisanship, 0.49 on follower-based ideology, 0.51 on sharing-based ideology estimated through ref. 40 and 0.51 on sharing-based ideology estimated through ref. 12. We also used this aggregate measure to calculate a user's extent of ideological extremity by taking the absolute value of the aggregate ideology measure; and we used PCA to combine measures of the standard deviation across a user's tweets of news site ideology scores from ref. 12 and ref. 40, and standard deviation of ideology of accounts followed from ref. 39, as a measure of the ideological uniformity (versus diversity) of news shared by the user.
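
The aggregation step can be sketched in plain Python as follows. This is an illustration under stated assumptions rather than the authors' implementation: each measure is mean-imputed and standardized, the first principal component of the correlation matrix is found by power iteration, and extremity is the absolute value of the component score. The parallel analysis used to decide how many components to retain is not reproduced here, and all names and example values are hypothetical.

```python
from statistics import mean, pstdev


def impute_and_standardize(column):
    """Replace None with the mean of observed values, then z-score."""
    observed = [x for x in column if x is not None]
    m = mean(observed)
    filled = [m if x is None else x for x in column]
    s = pstdev(filled)  # assumes the measure is not constant
    return [(x - m) / s for x in filled]


def first_component(columns, iterations=200):
    """Return (weights, share of variance explained, per-user scores)."""
    z = [impute_and_standardize(c) for c in columns]
    p, n = len(z), len(z[0])
    corr = [[sum(a * b for a, b in zip(z[i], z[j])) / n for j in range(p)]
            for i in range(p)]
    w = [1.0] * p
    for _ in range(iterations):  # power iteration towards the top eigenvector
        w = [sum(corr[i][j] * w[j] for j in range(p)) for i in range(p)]
        norm = sum(x * x for x in w) ** 0.5
        w = [x / norm for x in w]
    eigenvalue = sum(w[i] * corr[i][j] * w[j]
                     for i in range(p) for j in range(p))
    scores = [sum(w[j] * z[j][i] for j in range(p)) for i in range(n)]
    return w, eigenvalue / p, scores


# Hypothetical values for six users on four orientation measures.
measures = [
    [-1, -1, -1, 1, 1, 1],                # hashtag-based partisanship
    [-0.8, -0.5, None, 0.6, 0.9, 0.7],    # follower-based ideology
    [-0.7, -0.6, -0.4, 0.5, None, 0.8],   # sharing-based ideology, method A
    [-0.9, -0.4, -0.5, 0.4, 0.7, 0.9],    # sharing-based ideology, method B
]
weights, explained, orientation = first_component(measures)
extremity = [abs(score) for score in orientation]
```

Because the correlation matrix of standardized measures has trace equal to the number of measures, the leading eigenvalue divided by that number gives the share of variation explained by the retained component.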

Policy simulations

In addition to the regression analyses, we also simulate politically neutral suspension policies and determine each user's probability of suspension; and from this, determine the level of differential impact we would expect in the absence of differential treatment. The procedure is as follows. First, we identify a set of low-quality sources that could potentially lead to suspension. We do so using the politically balanced layperson trustworthiness ratings from ref. 38, as well as using the fact-checker trustworthiness ratings from that same paper. For both sets of ratings, there is a natural discontinuity at a value of 0.25 (on a normalized trust scale from 0 = Not at all to 1 = Entirely) (Extended Data Fig. 2). Thus, we consider sites with average trustworthiness ratings below 0.25 to be 'low quality'; and for each user, we count the number of times they tweet links to any of these low-quality sites.
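
The counting step can be sketched as below. The 0.25 threshold comes from the text; the domain names, the function names and the choice to strip a leading "www." before matching are illustrative assumptions.

```python
from urllib.parse import urlparse

LOW_QUALITY_THRESHOLD = 0.25  # sites rated strictly below this are low quality


def low_quality_domains(trust_by_domain, threshold=LOW_QUALITY_THRESHOLD):
    """Return the set of domains whose normalized trust is below threshold."""
    return {d for d, trust in trust_by_domain.items() if trust < threshold}


def count_low_quality_links(urls, low_quality):
    """Count how many of a user's shared URLs point at a low-quality domain."""
    count = 0
    for url in urls:
        host = (urlparse(url).hostname or "").lower()
        if host.startswith("www."):  # assumption: treat www.x and x alike
            host = host[4:]
        if host in low_quality:
            count += 1
    return count


# Hypothetical trust ratings and tweets.
trust = {"fake.example": 0.10, "border.example": 0.25, "news.example": 0.80}
low_quality = low_quality_domains(trust)
urls = [
    "https://www.fake.example/a",
    "http://fake.example/b",
    "https://news.example/c",
    "https://border.example/d",
]
n_low_quality = count_low_quality_links(urls, low_quality)
```

A site rated exactly 0.25 is not counted here, because the text defines low quality as ratings below 0.25.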

We then define a suspension policy as the probability of a user getting suspended each time they share a link to a low-quality news site. We model suspension as probabilistic because many (almost certainly most) of the articles from low-quality news sites are not actually false, and sharing such articles does not constitute an offence. Thus, we consider who would get suspended under suspension policies that differ in their harshness, varying from a 0.01% chance of getting suspended for each shared link to a low-quality news site up to a 10% chance. Specifically, for each user, we calculate their probability of getting suspended as

$$P\left({\rm{suspended}}\right)=1-{\left(1-k\right)}^{L}$$

where L is the number of low-quality links shared, and k is the probability of suspension for each shared link (that is, the policy harshness). The only way the user would not get suspended is if on each of the L times they share a low-quality link, they are not suspended. Because they do not get suspended with probability $(1-k)$, the probability that they would never get suspended is $(1-k)^{L}$. Therefore, the probability that they would get suspended at some point is $1-(1-k)^{L}$.
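
As a worked illustration of this formula, the following minimal sketch evaluates the suspension probability over the range of policy harshness considered (0.01% to 10% per link). The function name and the example link count are hypothetical.

```python
def suspension_probability(k, n_links):
    """P(suspended) = 1 - (1 - k)^L for per-link probability k and L links."""
    return 1 - (1 - k) ** n_links


# Policies from a 0.01% to a 10% chance of suspension per low-quality link.
policies = [0.0001, 0.001, 0.01, 0.1]

# A hypothetical user who shared 10 low-quality links.
probabilities = [suspension_probability(k, 10) for k in policies]
```

For example, with k = 0.01 and L = 10 the probability is 1 − 0.99^10 ≈ 0.096, slightly less than the 0.10 that a naive sum of per-link probabilities would suggest. A user with no low-quality links has probability 0 under every policy.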

We then calculate the mean (and 95% confidence interval) of that probability across all Democrats versus Republicans in our sample (as determined by sharing Biden versus Trump election hashtags). The results of these analyses are shown in Fig. 3b, and Supplementary Information section 2 presents statistical analyses of estimated probability of suspension on the basis of each measure of political orientation.
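
The group comparison can be sketched as follows. The text does not specify how the confidence intervals were computed, so the normal approximation used here (mean ± 1.96 standard errors) is an assumption, as are the group labels and link counts.

```python
from statistics import mean, stdev


def mean_with_ci(values, z=1.96):
    """Return (mean, lower, upper) using a normal-approximation 95% CI."""
    m = mean(values)
    half_width = z * stdev(values) / len(values) ** 0.5
    return m, m - half_width, m + half_width


def group_summary(users, k):
    """Summarize suspension probability by group for policy harshness k.

    `users` is a list of (group, n_low_quality_links) pairs.
    """
    by_group = {}
    for group, n_links in users:
        by_group.setdefault(group, []).append(1 - (1 - k) ** n_links)
    return {group: mean_with_ci(probs) for group, probs in by_group.items()}


# Hypothetical link counts for two groups of three users each.
users = [("group_a", 0), ("group_a", 2), ("group_a", 4),
         ("group_b", 10), ("group_b", 20), ("group_b", 30)]
summary = group_summary(users, k=0.01)
```

Repeating this for each value of k traces out how the gap between groups changes with policy harshness.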

We also do a similar exercise using the likelihood of being a bot, rather than low-quality news sharing. The algorithm of ref. 43 provides an estimated probability of being a bot for each user, on the basis of the contents of their tweets. We define a suspension policy as the minimum probability of being human, k, required to avoid suspension (or, in other words, a threshold on bot likelihood above which the user gets suspended). Specifically, for a policy of harshness k, users with bot probability greater than $(1-k)$ are suspended. The results of these analyses are shown in Fig. 3c.
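
In contrast to the probabilistic link-based policy, the bot-based policy is a deterministic threshold, which can be sketched as below. The function names and bot probabilities are hypothetical; the bot scores themselves would come from the algorithm of ref. 43, which is not reproduced here.

```python
def suspended_under_bot_policy(bot_probability, k):
    """A user is suspended when their bot probability exceeds 1 - k."""
    return bot_probability > 1 - k


def suspension_rate(bot_probabilities, k):
    """Fraction of users suspended under a policy of harshness k."""
    flagged = [suspended_under_bot_policy(p, k) for p in bot_probabilities]
    return sum(flagged) / len(flagged)


# Hypothetical bot probabilities for three users.
bot_probabilities = [0.05, 0.5, 0.95]
lenient = suspension_rate(bot_probabilities, k=0.1)  # threshold 0.9
harsh = suspension_rate(bot_probabilities, k=0.6)    # threshold 0.4
```

Raising k lowers the bot-probability threshold, so the set of suspended users can only grow as the policy becomes harsher.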

Reanalyses of extra datasets

Facebook sharing in 2016 by users recruited through YouGov

Here we analyse data presented in ref. 11. A total of n = 1,191 survey respondents recruited using YouGov gave the researchers permission to collect the links they shared on Facebook for 2 months (through a Facebook app), starting in November 2016. As part of the survey, participants self-reported their ideology (using a 5-point Likert scale; not including participants who selected 'Not sure', yielding n = 995 respondents with usable ideology data) and their party affiliation (Democrat, Republican, Independent, Other, Not sure). As in our Twitter studies, we calculate low-quality information sharing scores for each user by using the fact-checker and politically balanced crowd ratings for the 60 news sites from ref. 38, as described above in Table 1. A total of 893 participants shared at least one rated link.

Twitter sharing in 2018 and 2020 by users recruited through Prolific

Here we analyse data presented in ref. 41. A total of n = 2,100 participants were recruited using the online labour market Prolific in June 2018. Twitter IDs were provided by participants at the beginning of the study. However, some participants entered obviously fake Twitter IDs, for example, the accounts of celebrities. To screen out such accounts, we followed the original paper and excluded accounts with follower counts above the 95th percentile in the dataset. We had complete data and usable Twitter IDs for 1,901 users. As part of the survey, participants self-reported the extent to which they were economically liberal versus conservative, and socially liberal versus conservative, using 5-point Likert scales. We construct an overall ideology measure by averaging over the economic and social measures. The Twitter API was used to retrieve the content of their last 3,200 tweets (capped by the Twitter API limit). Data were retrieved from Twitter on 18 August 2018, and then again on 12 April 2020 (the latter data pull excludes tweets collected during the former data pull). We calculate low-quality information sharing scores for each user by using the fact-checker and politically balanced crowd ratings for the 60 news sites from ref. 38, as described above in Table 1. A total of 594 participants shared at least one rated link in the 2018 data pull and 379 participants shared at least one rated link in the 2020 data pull; 288 participants shared at least one rated link in both data pulls.
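
The screening and ideology steps can be sketched as follows. The exact percentile definition used in the original paper is not stated here, so the default ("exclusive") method of Python's `statistics.quantiles` is an assumption, as are the function names and example data.

```python
from statistics import quantiles


def usable_accounts(follower_counts):
    """Drop accounts whose follower count is above the 95th percentile.

    `follower_counts` maps a (hypothetical) account ID to its follower count.
    """
    # With n=20 the last of the 19 cut points is the 95th percentile.
    cutoff = quantiles(list(follower_counts.values()), n=20)[-1]
    return {uid: c for uid, c in follower_counts.items() if c <= cutoff}


def overall_ideology(economic, social):
    """Average the economic and social 5-point self-reports."""
    return (economic + social) / 2


# Hypothetical dataset of 100 accounts with 1 to 100 followers.
follower_counts = {f"user{i}": i for i in range(1, 101)}
kept = usable_accounts(follower_counts)
ideology = overall_ideology(economic=2, social=4)
```

In this toy dataset the five accounts with the most followers are excluded, which mirrors the intent of removing celebrity accounts entered as fake IDs.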

Twitter sharing in 2021 by users who followed at least three political elites

Here we analyse data presented by Mosleh and Rand (ref. 13), in which Twitter accounts for 816 elites were identified, and then 5,000 Twitter users were randomly sampled from the set of 38,328,679 users who followed at least three of the elite accounts. Each user's last 3,200 tweets were collected on 23 July 2021, and sharing of low-quality news domains was assessed using the fact-checker and politically balanced crowd ratings from ref. 38. A total of 3,070 users shared at least one rated link. The statistical model from ref. 39 was used to obtain a continuous measure of users' ideology on the basis of the ideological leaning of the accounts they followed.

Twitter sharing in 2022 by users who followed at least three political elites

Here we analyse previously unpublished data, in which 11,805 Twitter users were sampled from a set of 296,202,962 users who followed at least one of the political elite accounts from ref. 41. We randomly sampled from users who had more than 20 lifetime tweets and followed at least three political elites for whom we had a partisanship rating. Each user's last 3,200 tweets were collected on 25 December 2022, and sharing of low-quality news domains was assessed using the fact-checker and politically balanced crowd ratings from ref. 38. A total of 4,040 users shared at least one rated link. The statistical model from ref. 39 was used to obtain a continuous measure of users' ideology on the basis of the ideological leaning of the accounts they followed.

Twitter sharing in 2023 by users who followed at least one political elite, stratified on follower count

Here we analyse previously unpublished data in which 11,886 Twitter users were randomly sampled, stratified on the basis of $\log_{10}$-transformed number of followers (rounded to the nearest integer), from the same set of 296,202,962 users who followed at least one political elite account. On 4 March 2023, we retrieved all tweets made by each user since 22 December 2022 using the Twitter Academic API. Sharing of low-quality news domains was assessed using the fact-checker and politically balanced crowd ratings from ref. 38. A total of 4,408 users shared at least one rated link. The statistical model from ref. 39 was used to obtain a continuous measure of users' ideology on the basis of the ideological leaning of the accounts they followed.
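
A minimal sketch of stratification on rounded log-transformed follower count is given below. The text does not state how many users were drawn per stratum or how accounts with zero followers were handled; drawing up to a fixed number per stratum and placing zero-follower accounts in stratum 0 are both assumptions, and all names and values are hypothetical.

```python
import math
import random
from collections import defaultdict


def follower_stratum(n_followers):
    """Stratum = log10(followers) rounded to the nearest integer."""
    # Assumption: accounts with zero followers are placed in stratum 0.
    return round(math.log10(max(n_followers, 1)))


def stratified_sample(users, per_stratum, seed=0):
    """Randomly draw up to `per_stratum` user IDs from each stratum.

    `users` is a list of (user_id, n_followers) pairs.
    """
    rng = random.Random(seed)
    strata = defaultdict(list)
    for user_id, n_followers in users:
        strata[follower_stratum(n_followers)].append(user_id)
    return {stratum: rng.sample(members, min(per_stratum, len(members)))
            for stratum, members in sorted(strata.items())}


# Hypothetical follower counts.
counts = [1, 2, 3, 10, 20, 30, 250, 300, 400]
users = [(f"u{i}", c) for i, c in enumerate(counts)]
sample = stratified_sample(users, per_stratum=2)
```

Stratifying in this way ensures that accounts with very many followers, which are rare in a simple random sample, are represented.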

Sharing of false claims on Twitter

Here we analyse data from Ghezae et al. (ref. 53). Unlike the previous analyses, this dataset does not use domain quality as a proxy for misinformation sharing. Instead, sets of specific false versus true headlines were used. The headline sets were assembled by collecting claims that third-party fact-checking websites such as snopes.com or politifact.org had indicated were false, and collecting veridical claims from reputable news outlets. Furthermore, the headlines were pre-tested to determine their political orientation (on the basis of survey respondents' evaluation of how favourable the headline, if entirely accurate, would be for the Democrats versus Republicans; see ref. 56 for details of the pre-testing procedure).

Survey participants were recruited to rate the accuracy of each URL's headline claim. Specifically, each participant was shown ten headlines randomly sampled from the full set of headlines, and rated how likely they thought it was that the headline was true using a 9-point scale from 'not at all likely' to 'very likely'. For each headline, we created politically balanced crowd ratings by averaging the accuracy ratings of participants who identified as Democrats, averaging the accuracy ratings of participants who identified as Republicans and then averaging these two average ratings. We then classify URLs as inaccurate (and thus as misinformation) on the basis of crowd ratings if the politically balanced crowd rating was below the accuracy scale midpoint.

Additionally, the Twitter Academic API was used to identify all Twitter users who had posted primary tweets containing each URL. These primary tweets occurred between 2016 and 2022 (2016, 1%; 2017, 2%; 2018, 4%; 2019, 5%; 2020, 34%; 2021, 27%; 2022, 27%). The ideology of each of those users was estimated using the statistical model from ref. 39 on the basis of the ideological leaning of the accounts they followed. This allows us to count the number of liberals and conservatives who shared each URL on Twitter.

The dataset pools across three different iterations of this procedure. The first iteration used 104 headlines selected to be politically balanced, such that the Democrat-leaning headlines were as Democrat-leaning as the Republican-leaning headlines were Republican-leaning; n = 1,319 participants from Amazon Mechanical Turk were then shown a random subset of headlines that were half politically neutral and half aligned with the participant's partisanship. The second iteration used 155 headlines (of which 30 overlapped with headlines used in the first iteration); n = 853 participants recruited using Lucid rated randomly selected headlines. The third iteration used 149 headlines (no overlap with previous iterations); n = 866 participants recruited using Lucid rated randomly selected headlines. The Amazon Mechanical Turk sample was a pure convenience sample, whereas the Lucid samples were quota-matched to the national distribution on age, gender, ethnicity and geographic region, and then true independents were excluded. For the 30 headlines that overlapped between iterations 1 and 2, the politically balanced crowd accuracy ratings from Amazon Mechanical Turk and Lucid correlated with each other at r(28) = 0.75. Therefore, we collapsed the politically balanced ratings across platforms for those 30 headlines. In total, this resulted in a final dataset with fact-checker ratings, politically balanced crowd ratings and counts of numbers of posts by liberals and conservatives on Twitter for 378 unique URLs.
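
As a restatement of the arithmetic in this paragraph (not an additional analysis), the number of unique URLs follows from the three headline sets minus the 30 headlines shared between the first two iterations, and the degrees of freedom of the reported correlation follow from those 30 overlapping headlines:

$$104+155+149-30=378,\qquad {\rm{d.f.}}=30-2=28$$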

Finally, we also classified the topic of each URL. To do so, we used Claude, an artificial intelligence system designed by Anthropic that emphasizes reliability and predictability, and has text summarization as one of its primary functions. We uploaded the full set of headlines to the artificial intelligence system, and first asked it to summarize the topics discussed in the headlines. We then asked it to indicate the topic covered in each specific headline, and manually inspected the results to ensure that the classifications were sensible. Next, we examined the frequency of each topic, synthesized the results into a set of six overarching topics and then finally asked the artificial intelligence system to categorize each headline into one of these six topics. This process led to the following distribution of topics: US Politics (174 headlines), Social Issues (91 headlines), COVID-19 (48 headlines), Business/Economy (41 headlines), Foreign Affairs (28 headlines) and Crime/Justice (26 headlines). As a test of the robustness of the classification, we also asked another artificial intelligence system, GPT4, to classify the first 100 headlines into the six topics. We found that Claude and GPT4 agreed on 80% of the headlines.

Sharing intentions of false COVID-19 claims across 16 countries

Here, we examine survey data from ref. 37. In these experiments, participants were recruited from 16 different countries using Lucid, with respondents quota-matched to the national distributions on age and gender in each country. Participants were shown ten false and ten true claims about COVID-19 (sampled from a larger set of 45 claims), presented without any source attribution. The claims were collected from fact-checking organizations in numerous countries, as well as sources such as the World Health Organization's list of COVID-19 myths. This approach removes ideological variation in exposure to misinformation online (ref. 13), as well as any potential source cues/effects, and directly measures variation in the decision about what to share.

As in our other analyses, we complement the professional veracity ratings with crowd ratings. Specifically, n = 8,527 participants in the Accuracy condition rated the accuracy of each of the headlines they were shown using a 6-point Likert scale. We calculate the average accuracy rating for each statement in each country, and classify statements as misinformation if that average rating is below the scale midpoint.

Our main analyses then focus on the responses of the n = 8,597 participants from the Sharing condition, in which participants indicated their likelihood of sharing each claim using a 6-point Likert scale. To calculate each user's level of misinformation sharing, we first discretize the sharing intentions responses such that choices of 1 (Extremely unlikely), 2 (Moderately unlikely) or 3 (Slightly unlikely) on the Likert scale are counted as not shared, whereas choices of 4 (Slightly likely), 5 (Moderately likely) or 6 (Extremely likely) are counted as shared. We then determine, for each user, the fraction of shared articles that were (1) rated as false by fact-checkers, and (2) rated as below the accuracy scale midpoint on average by respondents in the Accuracy condition.
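
The discretization and the resulting per-participant measure can be sketched as follows. The claim identifiers, responses and misinformation labels are hypothetical, and returning `None` for participants who shared nothing is an assumption about how an undefined fraction is handled.

```python
def is_shared(response):
    """Responses of 4, 5 or 6 on the 6-point scale count as shared."""
    return response >= 4


def misinformation_sharing(responses, is_misinformation):
    """Fraction of a participant's shared claims classified as misinformation.

    `responses` maps claim ID to a 1-6 sharing intention; `is_misinformation`
    maps claim ID to True/False under one classification (fact-checkers or
    crowd). Returns None if the participant shared no claims.
    """
    shared = [claim for claim, r in responses.items() if is_shared(r)]
    if not shared:
        return None
    return sum(is_misinformation[claim] for claim in shared) / len(shared)


# Hypothetical participant and labels.
responses = {"c1": 6, "c2": 4, "c3": 3, "c4": 1, "c5": 5}
labels = {"c1": True, "c2": False, "c3": True, "c4": False, "c5": False}
fraction = misinformation_sharing(responses, labels)
```

Here three claims are counted as shared, of which one is labelled misinformation. Running the same function with fact-checker labels and with crowd labels gives the two versions of the measure described above.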

We then ask how misinformation sharing varies with ideology within each country. Specifically, we construct a conservatism measure by averaging responses to two items from the World Values Survey that were included in the survey, which asked how participants would place their views on the scales of 'Incomes should be made more equal' versus 'There should be greater incentives for individual effort' and 'Government should take more responsibility to ensure that everyone is provided for' versus 'People should take more responsibility to provide for themselves' using 10-point Likert scales. Pilot data collected in the USA confirmed that responses to these two items correlated with self-reported conservatism (r(956) = 0.32 for the first item and r(956) = 0.40 for the second item).

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.