QDevice model

The QDevice includes a physical quantum device that can initialize and store quantum bits (qubits), each individually identified by a physical address, apply quantum gates, measure qubits and create entanglement with QDevices on other nodes (either entangle-and-measure or entangle-and-keep34). The other node can be either another end node or an intermediary node in the network. We remark that the ability of two end node QDevices that are not immediate neighbours in the quantum network (but are separated by other network nodes) to generate entanglement between them relies on the architecture implementing a network layer protocol as part of a network stack34. Here, ‘qubit’ refers to any possible realization of a qubit, including logical qubits realized by error correction. The QDevice exposes the following interface to QNodeOS (Supplementary Information section 2.6): the number of qubits available and the supported physical instructions that QNodeOS may send. Physical instructions include qubit initialization, single-qubit and two-qubit gates, measurement, entanglement creation and a ‘none’ instruction that does nothing. Each instruction has a corresponding response (such as entanglement success or failure, or a measurement outcome) that the QDevice sends back to QNodeOS.

QNodeOS and the QDevice interact by passing messages back and forth on clock ticks at a fixed rate (100 kHz in our NV implementation, 50 kHz in the trapped-ion implementation). During each tick, two things happen simultaneously: (1) QNodeOS sends a physical instruction to the QDevice and (2) the QDevice can send a response (for a previous instruction). On receiving an instruction, the QDevice performs the appropriate (sequence of) operations (for example, a particular pulse sequence in the AWG). An instruction may take several ticks to complete, in which case the QDevice returns the response (success, fail, outcome) during the first clock tick following completion. The QDevice handles an entanglement instruction by performing (a batch of) entanglement generation attempts38 (synchronized by the QDevice with the QDevice of the neighbouring node).
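The tick-based exchange can be illustrated with a small simulation. This is only a sketch under stated assumptions: the function `run_ticks`, the instruction names and the durations in ticks are hypothetical, and we assume that QNodeOS sends ‘none’ while the QDevice is busy. It is not the message format of the actual interface.

```python
from collections import deque

def run_ticks(instructions, durations):
    """Simulate the per-tick exchange; returns a list of (tick, sent, response)."""
    queue = deque(instructions)
    current, remaining = None, 0   # instruction in progress and ticks it still needs
    finished = None                # completed instruction awaiting its response slot
    log, tick = [], 0
    while queue or current is not None or finished is not None:
        # (2) The QDevice reports on an instruction that completed in the previous tick.
        response, finished = finished, None
        # (1) QNodeOS sends a new instruction if the QDevice is free, else 'none'
        #     (holding back while the QDevice is busy is an assumption of this sketch).
        if current is None and queue:
            sent = queue.popleft()
            current, remaining = sent, durations[sent]
        else:
            sent = "none"
        log.append((tick, sent, response))
        # The QDevice works on the current instruction for the length of this tick.
        if current is not None:
            remaining -= 1
            if remaining == 0:
                finished, current = (current, "done"), None
        tick += 1
    return log

# Hypothetical durations: 'init' needs two ticks, 'measure' needs one.
log = run_ticks(["init", "measure"], {"init": 2, "measure": 1})
```

In this run, the response for ‘init’ arrives in the same tick in which ‘measure’ is sent, which mirrors the simultaneous send and receive described above.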

QNodeOS architecture

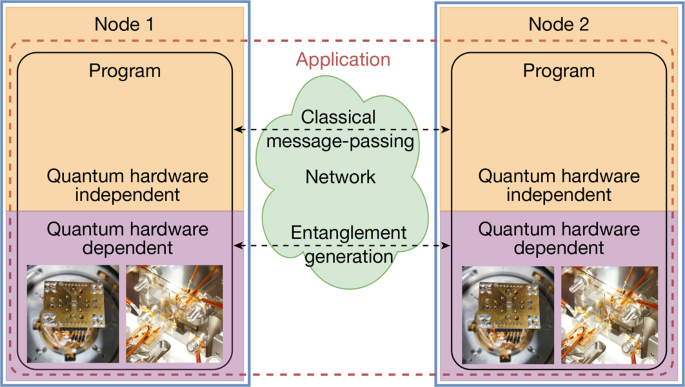

QNodeOS consists of two layers: the CNPU and the QNPU (Fig. 2a; main text and Supplementary Information). Processes on the QNPU are managed by the process manager and executed by the local processor. Executing a user process means executing NetQASM59 subroutines (quantum blocks) of that process, which involves running classical instructions (including flow control logic) on the local processor of the QNPU, sending entanglement requests to the network stack and handling local quantum operations by sending physical instructions to the QDriver (Fig. 2a). Executing the network process means asking the network stack which request (if any) to handle and sending the appropriate (entanglement generation) instructions to the QDevice.

A QNPU process can be in the following states (see Supplementary Fig. 5 for state diagram): idle, ready, running and waiting. A QNPU process is running when the QNPU processor is assigned to it. The network process becomes ready when a network schedule time bin starts; it becomes waiting when it finishes executing and waits for the next time bin; it is never idle. A user process is ready when there is at least one NetQASM subroutine pending to be executed; it is idle otherwise; it goes into the waiting state when it requests entanglement from the network stack (using NetQASM entanglement instructions59) and is made ready again when the requested entangled qubit(s) is(are) delivered.
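The state transitions described in this paragraph can be written down as a lookup table. The following is a minimal sketch: the event names are hypothetical labels, and the transitions out of the running state after a subroutine finishes are inferred from the definitions of ready and idle rather than stated explicitly. Supplementary Fig. 5 remains the authoritative state diagram.

```python
from enum import Enum

class State(Enum):
    IDLE = "idle"
    READY = "ready"
    RUNNING = "running"
    WAITING = "waiting"

# User process transitions (event names are illustrative, not from the source).
USER_TRANSITIONS = {
    (State.IDLE, "subroutine_submitted"): State.READY,
    (State.READY, "scheduled"): State.RUNNING,
    (State.RUNNING, "entanglement_requested"): State.WAITING,
    (State.WAITING, "entanglement_delivered"): State.READY,
    # Inferred: ready if subroutines remain pending, idle otherwise.
    (State.RUNNING, "finished_more_pending"): State.READY,
    (State.RUNNING, "finished_none_pending"): State.IDLE,
}

# Network process transitions: it is never idle.
NETWORK_TRANSITIONS = {
    (State.WAITING, "time_bin_started"): State.READY,
    (State.READY, "scheduled"): State.RUNNING,
    (State.RUNNING, "finished"): State.WAITING,
}

def step(table, state, event):
    """Return the next state, or raise ValueError for a transition not in the table."""
    try:
        return table[(state, event)]
    except KeyError:
        raise ValueError(f"no transition from {state.value} on {event}") from None

# A user process that requests entanglement and is later resumed.
s = State.IDLE
for event in ("subroutine_submitted", "scheduled",
              "entanglement_requested", "entanglement_delivered"):
    s = step(USER_TRANSITIONS, s, event)
```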

The QNPU scheduler oversees all processes (user and network) on the QNPU and chooses which ready process is assigned to the QNPU processor. CNPU processes can run concurrently and their execution (order) is handled by the CNPU scheduler. The QNPU scheduler operates independently and only acts on QNPU processes. CNPU processes can only communicate with their corresponding QNPU processes. Because several programs can run concurrently on QNodeOS, the QNPU may have numerous user processes that have subroutines waiting to be executed at the same time. Hence this requires scheduling on the QNPU.

Processes allocate qubits through the QMMU, which manages virtual qubit address spaces for each process and translates virtual addresses to physical addresses in the QDevice. The QMMU can also transfer ownership of qubits between processes, for example, from the network process (having just created an entangled qubit) to a user process that requested this entanglement.
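A toy model may help to make the address translation and ownership transfer concrete. The class and method names below are hypothetical and the allocation policy (lowest free physical address first) is an assumption of this sketch, not a description of the QNodeOS implementation.

```python
class QMMU:
    """Toy QMMU: one virtual-to-physical table per process."""

    def __init__(self, num_physical):
        self.free = list(range(num_physical))  # unallocated physical addresses
        self.tables = {}                       # process id -> {virtual: physical}

    def allocate(self, pid, virtual):
        """Map a virtual address of process `pid` to a free physical qubit."""
        table = self.tables.setdefault(pid, {})
        if virtual in table:
            raise ValueError("virtual address already in use")
        if not self.free:
            raise RuntimeError("no free physical qubit")
        table[virtual] = self.free.pop(0)
        return table[virtual]

    def translate(self, pid, virtual):
        """Return the physical address behind a virtual address (KeyError if unmapped)."""
        return self.tables[pid][virtual]

    def transfer(self, src_pid, src_virtual, dst_pid, dst_virtual):
        """Move ownership of a qubit from one process to another."""
        dst = self.tables.setdefault(dst_pid, {})
        if dst_virtual in dst:
            raise ValueError("destination virtual address already in use")
        dst[dst_virtual] = self.tables[src_pid].pop(src_virtual)

# The network process creates an entangled qubit and hands it to a user process.
qmmu = QMMU(num_physical=1)
qmmu.allocate("network", 0)
qmmu.transfer("network", 0, "user-1", 3)
physical = qmmu.translate("user-1", 3)
```

After the transfer, the physical qubit is unchanged; only the table entry that refers to it has moved from the network process to the user process.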

The network stack uses entanglement request sockets (opened by user programs through the QNPU API once execution starts) to represent quantum connections with programs on other nodes. The entanglement management unit maintains all entanglement request sockets and makes sure that entangled qubits are moved to the correct process.

NV QDevice implementation

The two-node network used in this work includes the nodes ‘Bob’ (server) and ‘Charlie’ (client) (separated by 3 m) described in refs. 7,22,38. For the QDevice, we replicated the setup used in ref. 38, which mainly consists of: an ADwin-Pro II (ref. 71) acting as the main orchestrator of the setup; a series of subordinate devices responsible for qubit control, including laser pulse generators, optical readout circuits and an AWG (Zurich Instruments HDAWG62). The quantum physical device, based on NV centres, counts one qubit for each node. The two QDevices share a common 1-MHz clock for high-level communication and their AWGs are synchronized at the sub-nanosecond level for entanglement attempts.

We address the challenge of limited memory lifetimes by using DD. While waiting for further physical instructions to be issued, DD sequences are used to preserve the coherence of the electron spin qubit72. DD sequences for NV centres can prolong the coherence time (Tcoh) up to hundreds of milliseconds (ref. 7) or even seconds73. In our specific case, we measured Tcoh = 13(2) ms for the server node, corresponding to about 1,300 DD pulses. The discrepancy with the state of the art for similar setups is due to several factors. Achieving the longer Tcoh values reported elsewhere requires a thorough investigation of the nuclear spin environment to avoid unwanted interactions during long DD sequences, resulting in a more accurate choice of interpulse delay. Other noise sources include unwanted laser fields, the quality of microwave pulses and electrical noise along the microwave line.

A specific challenge arises at the intersection of extending memory lifetimes using DD and the need for interactivity: to realize individual physical instructions, many waveforms are uploaded to the AWG, in which the QDevice decodes instructions sent by QNodeOS into specific preloaded pulse sequences. This results in a waveform table containing 170 entries. The efficiency of the waveforms is limited by the waveform granularity of the AWG that corresponds to steps that are multiples of 6.66 ns, having a direct impact on the Tcoh. We are able to partially overcome this limitation by using the methods described in ref. 74. Namely, each preloaded waveform, corresponding to one single instruction, has to be uploaded 16 times to be executed with sample precision. To not fill up the waveform memory of the device, we apply the methods in ref. 74 only to the DD pulses that are played while the QDevice waits for an instruction from the QNPU, whereas the instructed waveforms (gate/operation + first block of the XY8 DD sequence) are padded according to the granularity, if necessary.

The list of physical instructions supported by our NV QDevice is given in Supplementary Information section 3.1.

NV QNPU implementation

The QNPUs for both nodes are implemented in C++ on top of FreeRTOS75, a real-time operating system for microcontrollers. The stack runs on a dedicated MicroZed76, an off-the-shelf platform based on the Zynq 7000 SoC, which hosts two ARM Cortex-A9 processing cores, of which only one is used, clocked at 667 MHz. The QNPU was implemented on top of FreeRTOS to avoid reimplementing standard operating system primitives such as threads and network communication. FreeRTOS provides basic operating system abstractions such as tasks, intertask message-passing and the TCP/IP stack. The FreeRTOS kernel—like any other standard operating system—cannot however directly manage the quantum resources (qubits, entanglement requests and entangled pairs) and hence its task scheduler cannot take decisions based on such resources. The QNPU scheduler adds these capabilities (Supplementary Information section 2.5).

The QNPU connects to peer QNPU devices by means of TCP/IP over a Gigabit Ethernet interface (IEEE 802.3 over full-duplex Cat 5e). The communication goes through two network switches (Netgear JGS524PE, one per node). The two QNPUs are time-synchronized through their respective QDevices (granularity 10 μs), as these are already synchronized at the microsecond level (common 1-MHz clock).

The QNPU device interfaces with the QDevice’s ADwin-Pro II through a 12.5-MHz serial peripheral interface (SPI), used to exchange 4-byte control messages at a rate of 100 kHz.

NV CNPU implementation

The CNPUs for both nodes are a Python runtime executing on a general-purpose desktop machine (four Intel 3.20-GHz cores, 32 GB RAM, Ubuntu 18.04). The choice of using a high-level system was made as the communication between distant nodes would ultimately be in the millisecond timescale and this allows for ease of programming the application. The CNPU machine connects to the QNPU device through TCP over a Gigabit Ethernet interface (IEEE 802.3 over full-duplex Cat 8, average ping round-trip time of 0.1 ms), through the same single network switch as mentioned above (one per node), and sends application registration requests and NetQASM subroutines over this interface (10 to 1,000 bytes, depending on the length of the subroutine). CNPUs communicate with each other through the same two network switches.

Scheduler implementations

We use a single Linux process (Python) for executing programs on the CNPU. CNPU ‘processes’ are realized as threads created within this single Python process. Python was chosen because the NetQASM SDK is implemented in Python. When running several programs concurrently, a pool of such threads is used. Scheduling of the Python process and its threads is handled by the Linux operating system. Each thread establishes a TCP connection with the QNPU to use the QNPU API (including sending subroutines and receiving their results) and executes the classical blocks for its corresponding program.

Both the CNPU and the QNPU maintain processes for running programs. The CNPU scheduler (standard Linux scheduler, see above) schedules CNPU processes, which indirectly controls in which order subroutines from different programs arrive at the QNPU. The QNPU scheduler handles subroutines of the same process priority on a first-come-first-served basis, leading however to executions of QNPU processes not in the order submitted by the CNPU (Supplementary Information section 5.3).
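The first-come-first-served rule within a priority level can be sketched with a priority queue that breaks ties by arrival order. The class name, the process names and the priority values are hypothetical; in particular, giving the network process a higher priority (a lower number) than user processes is an assumption made for this example.

```python
import heapq
from itertools import count

class ReadyQueue:
    """Ready processes ordered by priority, then by arrival (first come, first served)."""

    def __init__(self):
        self._heap = []
        self._arrival = count()  # monotonically increasing tie-breaker

    def push(self, process, priority):
        # Lower number means higher priority (a convention chosen for this sketch).
        heapq.heappush(self._heap, (priority, next(self._arrival), process))

    def pop(self):
        """Return the next process to assign to the QNPU processor."""
        return heapq.heappop(self._heap)[2]

    def __len__(self):
        return len(self._heap)

queue = ReadyQueue()
queue.push("dqc-client", priority=1)
queue.push("lgt", priority=1)
queue.push("network", priority=0)
order = [queue.pop() for _ in range(len(queue))]
```

Here the two user processes keep their submission order relative to each other, whereas the network process overtakes both. A process that enters the waiting state is simply not pushed back until its entanglement is delivered, which is how the execution order can differ from the order in which the CNPU submitted the subroutines.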

Using only the CNPU scheduler is not sufficient because: (1) we want to avoid millisecond delays needed to communicate scheduling instructions across the CNPU and the QNPU; (2) user processes need to be scheduled in conjunction with the network process (meeting the challenge of scheduling both local and network operations), which is only running on the QNPU; and (3) QNPU user processes need to be scheduled with respect to each other (for example, a user process is waiting after having requested entanglement, allowing another user process to be run, as observed in the multitasking demonstration).

Sockets and the network schedule

In an entanglement request socket, one node is a ‘creator’ and the other is a ‘receiver’. As long as an entanglement request socket is open between the nodes, an entanglement request from only the creator suffices for the network stack to handle it in the next corresponding time bin, that is, the ‘receiver’ can comply with entanglement generation even if no request has (yet) been made to its network stack.

Trapped-ion implementation

The experimental system used for the trapped-ion implementation is discussed in refs. 60,61 and is described in detail in ref. 77. The implementation itself is described in ref. 16. We confine a single ⁴⁰Ca⁺ ion in a linear Paul trap; the trap is based on a 300-µm-thick diamond wafer on which gold electrodes have been sputtered. The ion trap is integrated with an optical microcavity composed of two fibre-based mirrors, but the microcavity is not used here. The physical-layer control infrastructure consists of C++ software, Python scripts, a pulse sequencer that translates Python commands to a hardware description language for a field-programmable gate array (FPGA) and hardware that includes the FPGA, input triggers, direct digital synthesis (DDS) modules and output logic.

QNodeOS provides physical instructions through a development FPGA board (Texas Instruments, LAUNCHXL2-RM57L (ref. 78)) that uses a SPI. We programmed another board (Cypress, CY8CKIT-143 (ref. 79)) that translates SPI messages into TTL signals compatible with the input triggers of our experimental hardware.

The implementation consisted of sequences composed of seven physical instructions: initialization, RX(π), RY(π), RX(π/2), RY(π/2), RY(−π/2) and measurement. First, we confirmed that message exchange occurred at the rate of 50 kHz as designed. Next, we confirmed that we could trigger the physical-layer hardware. Finally, we implemented seven different sequences. Each sequence was repeated 10⁴ times, which allowed us to acquire sufficient statistics to confirm that our QDriver results are consistent with operation in the absence of the higher layers of QNodeOS.

Metrics

Both classical and quantum metrics are relevant in the performance evaluation: the quantum performance of our test programs is measured by the fidelity F(ρ, |τ⟩) of an experimentally obtained quantum state ρ to a target state |τ⟩, in which F(ρ, |τ⟩) = ⟨τ|ρ|τ⟩, estimated by quantum tomography80. Classical performance metrics include device utilization Tutil = 1 − Tidle/Ttotal, in which Tidle is the total time that the QDevice is not executing any physical instruction and Ttotal is the duration of the whole experiment, excluding time spent on entanglement attempts (see below).
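As a worked illustration of the fidelity definition F(ρ, |τ⟩) = ⟨τ|ρ|τ⟩, the following sketch evaluates it for small matrices given as nested lists. The function name and the example states are chosen for illustration only; in the experiment, ρ is estimated by tomography rather than known exactly.

```python
import math

def fidelity(rho, tau):
    """Return <tau|rho|tau> for a density matrix rho and a state vector tau."""
    n = len(tau)
    value = sum(
        complex(tau[i]).conjugate() * rho[i][j] * tau[j]
        for i in range(n)
        for j in range(n)
    )
    return value.real  # the imaginary part vanishes for a Hermitian rho

s = 1 / math.sqrt(2)
plus = [s, s]                            # target state |+>
rho_plus = [[0.5, 0.5], [0.5, 0.5]]      # |+><+|
rho_mixed = [[0.5, 0.0], [0.0, 0.5]]     # maximally mixed single-qubit state

f_ideal = fidelity(rho_plus, plus)
f_mixed = fidelity(rho_mixed, plus)
```

The ideal state gives a fidelity of 1 (up to floating-point rounding) and the maximally mixed state gives 0.5, the value expected for a single qubit that carries no information about the target.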

Experiment procedure NV demonstration

Applications are written in Python using the NetQASM SDK59 (code in Supplementary Information), with a compiler targeting the NV flavour59, as it includes quantum instructions that can be easily mapped to the physical instructions supported by the NV QDevice. The client and server nodes independently start execution of their programs by invoking a Python script on their own CNPU, which then spawns the threads for each program. During application execution, the CNPUs have background processes running, including QDevice monitoring software.

A fixed network schedule is installed in the two QNPUs with consecutive time bins (all assigned to the client–server node pair) with a length of 10 ms (chosen to be equal to 1,000 communication cycles between QNodeOS and QDevice as in ref. 38) to assess the performance without introducing a dependence on a changing network schedule. During execution, the CNPUs and QNPUs record events including their time stamps. After execution, corrections are applied to the results (see below) and event traces are used to compute latencies.
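The relation between the tick rate and the time-bin length is simple arithmetic, sketched here with integer microseconds. The constant and function names are hypothetical, and the assumption that time bins start at time zero is made only for this example.

```python
TICK_RATE_HZ = 100_000      # QNodeOS-QDevice communication rate in the NV setup
CYCLES_PER_BIN = 1_000      # communication cycles per network schedule time bin

TICK_US = 1_000_000 // TICK_RATE_HZ   # duration of one cycle: 10 µs
BIN_US = CYCLES_PER_BIN * TICK_US     # duration of one time bin: 10,000 µs = 10 ms

def time_bin(t_us):
    """Return (bin index, offset within the bin in µs) for a time stamp in µs."""
    return divmod(t_us, BIN_US)

index, offset = time_bin(25_000)      # a time stamp 25 ms after the schedule starts
```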

DQC

Our demonstration of DQC (Fig. 4) implements the effective single-qubit computation \(| \psi \rangle =H\,\circ \,R_Z(\alpha )\,\circ \,|+\rangle \) on the server, as a simple form of blind quantum computing that hides the rotation angle α from the server when executed with randomly chosen θ and not performing tomography. The remote entanglement protocol used is the single-photon protocol81,82,83 (Supplementary Information section 3.1).
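For reference, the target state of the effective computation can be evaluated numerically. The sketch below assumes the convention R_Z(α) = diag(e^{−iα/2}, e^{iα/2}), which is not stated in this section; under that convention the result is cos(α/2)|0⟩ − i sin(α/2)|1⟩. The function name is hypothetical, and the sketch covers only the ideal state, not the blind protocol with the random angle θ.

```python
import cmath
import math

def dqc_target_state(alpha):
    """Return the amplitudes of H R_Z(alpha) |+> in the computational basis."""
    s = 1 / math.sqrt(2)
    plus = [s, s]
    # R_Z(alpha) = diag(exp(-i alpha/2), exp(+i alpha/2)): assumed convention.
    rotated = [cmath.exp(-1j * alpha / 2) * plus[0],
               cmath.exp(1j * alpha / 2) * plus[1]]
    # Hadamard: |0> -> (|0> + |1>)/sqrt(2), |1> -> (|0> - |1>)/sqrt(2).
    return [s * (rotated[0] + rotated[1]), s * (rotated[0] - rotated[1])]

def outcome_probabilities(alpha):
    """Probabilities of measuring 0 and 1 in the computational basis."""
    return [abs(a) ** 2 for a in dqc_target_state(alpha)]

probs = outcome_probabilities(math.pi / 2)
```

With α = π/2 both outcomes are equally likely, whereas α = 0 yields |0⟩ and α = π yields |1⟩ up to a global phase.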

Filtering

Results, with no post-selection, are presented including known errors that occur during the tomography single-shot readout (SSRO) process (Fig. 4b, blue) (details on the correction in the Supplementary Information of ref. 22). We also report the post-selected results in which data are filtered on the basis of the outcome of the charge-resonance check84 after one application iteration (Fig. 4b, purple). This filter enables the elimination of false events, specifically when the emitter of one of the two nodes is not in the right charge state (ionization) or the optical resonances are not correctly addressed by the laser fields after the execution of one iteration of DQC.

Further filtering (Fig. 4b, latency filtered) is performed on those iterations that showed latency not compatible with the combination of Tcoh of the server and the average entangled state fidelity. For this filter, a simulation (using a depolarizing model, based on the measured value Tcoh; Supplementary Information section 4.4) was used to estimate the single-qubit fidelity (given the entanglement fidelity measured above) as a function of the duration the server qubit stays live in memory in a single execution of the DQC circuit (Fig. 4a). This gives a conservative upper bound of the duration as 8.95 ms, to obtain a fidelity of at least 0.667. All measurement results corresponding to circuit executions exceeding 8.95 ms duration were discarded (146 out of 7,200 data points).
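The latency filter itself amounts to a threshold on the time that the server qubit stays in memory. The sketch below shows only that thresholding step; the record layout and the field name `live_ms` are hypothetical, and the simulation that produced the 8.95 ms bound is not reproduced here.

```python
MAX_LIVE_MS = 8.95  # upper bound on the qubit live time, taken from the text

def latency_filter(records, max_live_ms=MAX_LIVE_MS):
    """Keep only the records whose live time does not exceed the bound."""
    return [r for r in records if r["live_ms"] <= max_live_ms]

# Hypothetical records: one circuit execution each, with an outcome and a live time.
records = [
    {"outcome": 0, "live_ms": 4.2},
    {"outcome": 1, "live_ms": 9.3},   # exceeds the bound and is discarded
    {"outcome": 0, "live_ms": 8.95},  # exactly at the bound and is kept
]
kept = latency_filter(records)

# Fraction of the data discarded in the experiment: 146 of 7,200 points.
discarded_fraction = 146 / 7200
```

The discarded fraction is about 2% of the data set.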

Other main sources of infidelity not considered in this analysis of the outcome include, for instance, the non-zero probability of double excitation for the NV centre83. During entanglement generation, the NV centre can be re-excited, leading to the emission of two photons that lower the heralded entanglement fidelity. The error can be corrected by discarding those events that registered, in the entanglement time window, a photon at the heralding station (resonant zero-phonon line photon) and another one locally at the node (off-resonant phonon sideband photon).

Finally, the dataset presented in Fig. 4b (not shown chronologically) was taken in ‘one shot’ to prove the robustness of the physical layer, therefore no calibration of relevant experimental parameters was performed in between, leading to possible degradation of the overall performance of the NV-based setup.

The single-qubit fidelity is calculated with the same methods as in ref. 8, measuring in the state |i⟩ and in its orthogonal state |−i⟩, provided that we expect the outcome |i⟩, whereas the two-qubit state fidelity is computed taking into account only the same positive-basis correlators (XX, YY, ZZ).

Multitasking: delegated computation and LGT

In the first multitasking evaluation, we concurrently execute two programs on the client: a DQC-client program (interacting with a DQC-server program on the server) and a LGT program (on the client only) (Fig. 5). The client CNPU runtime executes the threads, executing the two different programs concurrently. The client QNPU has two active user processes, each continuously receiving new subroutines from the CNPU, which are scheduled with respect to each other and the network process.

Estimates of the fidelity (Fig. 5b) include the same corrections as in the Supplementary Information of ref. 22. To assess the quantum performance of the LGT application, we used a mocked entanglement generation process on the QDevices (executing entanglement actions without entanglement) to simplify the test: weak-coherent pulses on resonance with the NV transitions, which follow the usual optical path, are used to trigger the complex programmable logic device in the entanglement heralding time window. This results in comparable application behaviour for DQC (comparable rates and latencies; Supplementary Information section 5.1) with respect to multitasking on QNodeOS.

Multitasking: QDevice utilization when scaling number of programs

We scale the number of programs being multitasked (Fig. 5d). We observe how the client QNPU scheduler chooses the execution order of the subroutines submitted by the CNPU. DQC subroutines each have an entanglement instruction, causing the corresponding user process to go into the waiting state when executed (waiting for entanglement from the network process). When a DQC process is put into the waiting state, the QNPU scheduler schedules another process in 56%, 81% and 99% of cases for N = 1, N = 2 and N > 2, respectively, demonstrating that the QNPU schedules independently from the order in which the CNPU submits subroutines. The number of consecutive LGT subroutines (of any LGT process; LGT block execution time approximately 2.4 ms) executed between DQC subroutines is 0.83 for N = 1, increasing with each higher N up to 1.65 for N = 5, showing that idle times during DQC are indeed partially filled by LGT blocks (Supplementary Information section 5.3).

Device utilization (see ‘Metrics’ section) quantifies only the utilization factor between entanglement generation time windows to fairly compare the multitasking and non-multitasking scenarios. In both scenarios, the same entanglement generation processes are performed, which therefore have the same probabilistic durations in both cases. To avoid inaccurate results owing to this probabilistic nature, we exclude the entanglement generation time windows in both cases.
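One way to compute Tutil = 1 − Tidle/Ttotal with the entanglement windows excluded is sketched below. The function name and the interval representation are hypothetical. The sketch assumes that all intervals are disjoint and that the busy intervals list only physical instructions executed outside the entanglement windows.

```python
def utilization(experiment, busy, entanglement):
    """Return T_util, excluding entanglement generation windows.

    experiment:   (start, end) of the whole run
    busy:         (start, end) intervals in which the QDevice executes instructions
    entanglement: (start, end) entanglement generation windows to exclude
    All times share one unit; intervals are assumed disjoint.
    """
    start, end = experiment
    t_entanglement = sum(e - s for s, e in entanglement)
    t_total = (end - start) - t_entanglement   # T_total without entanglement time
    t_busy = sum(e - s for s, e in busy)
    t_idle = t_total - t_busy
    return 1 - t_idle / t_total

# Hypothetical trace in milliseconds.
t_util = utilization(
    experiment=(0, 100),
    busy=[(0, 10), (30, 50), (60, 70)],
    entanglement=[(10, 30)],
)
```

In this example the entanglement window removes 20 ms from the 100 ms run, and the QDevice is busy for 40 of the remaining 80 ms, giving a utilization of 0.5.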