News

A new artificial intelligence (AI) tool can forecast a person’s risk of developing more than 1,000 diseases. The model, called Delphi-2M, uses a person’s health records and lifestyle factors to estimate their likelihood of developing diseases such as cancer and immune conditions up to 20 years in advance. For many diseases, Delphi-2M’s predictions matched or exceeded the accuracy of current models that each estimate the risk of developing a single illness. “It worked astonishingly well,” says data scientist and study co-author Moritz Gerstung.

News

DeepSeek-R1, a cheap and powerful artificial intelligence (AI) ‘reasoning’ model that sent the US stock market spiralling after a Chinese firm released it in January, cost just US$300,000 to train. That’s one of the revelations in a new paper from the DeepSeek team, whose publication makes R1 the first major large language model (LLM) to undergo peer review.

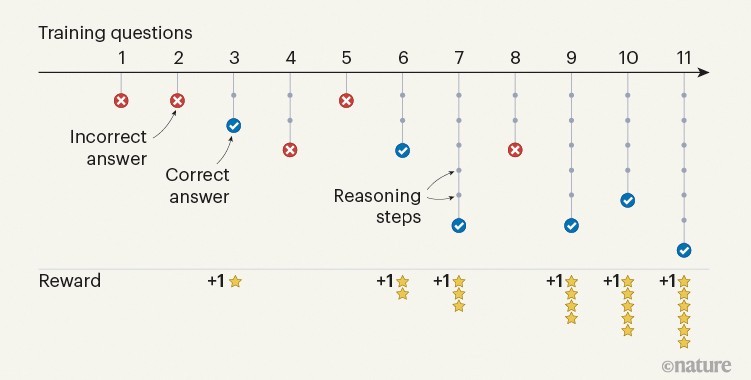

The team behind DeepSeek-R1 showed that a large language model can be taught to ‘reason’ without ever seeing an example of human reasoning. To do so, they used a technique called reinforcement learning, in which the model was rewarded for correct answers to mathematical questions and penalized for incorrect ones. The model soon learnt that reasoning increased its chances of reaching the correct answer, and it developed an ability to reflect on and correct its own work before giving a response. (Nature News & Views | 7 min read)

News

A recent preprint reported that at least 17 people have developed psychosis, a condition in which a person struggles to distinguish what is real from what is not, after interacting with generative AI chatbots. Whether chatbot interactions can trigger psychosis is unclear, says psychiatrist Søren Østergaard, but people who already experience symptoms such as delusions or paranoia might be particularly susceptible. Some AI companies have added safeguards to their models that steer conversations away from sensitive or distressing topics, or from topics that aren’t grounded in reality.

Reference: PsyArXiv preprint (not peer reviewed)

Feature

Several software firms now offer ‘griefbots’: digital recreations of a person, built by training a large language model (LLM) on their text messages and voice recordings, that allow relatives to communicate ‘with them’ after their death. Proponents say these AI models can help people navigate their grief. Others think the subscription-based services are exploitative, and argue that they could complicate the normal grieving process. As yet, there’s little evidence to back up either viewpoint, which leaves the decision of whether to use griefbots in the hands of individual mourners.

This article is part of Nature Outlook: Robotics and artificial intelligence, an editorially independent supplement produced with financial support from FII Institute.

To get the latest news on artificial intelligence direct to your inbox every fortnight, sign up to Nature Briefing: AI & Robotics — 100% written by humans, of course.