Mice

All animal procedures were conducted in accordance with a protocol approved by the Institutional Animal Ethics Committee of HUST-Suzhou Institute for Brainsmatics, Suzhou, China. We used C57BL/6J mice and transgenic mice generated by crossing Cre-recombinase-expressing mice with fluorescent-reporter mice (LSL-H2B-green fluorescent protein (GFP)). The Cre-recombinase-expressing mice used included Cart-Cre, Sert-Cre, Vglut2-Cre, Thy1-Cre, PlxinD1-CreER, Camk2-Cre, GAL-Cre, GAD2-Cre, Vgat-Cre, CRH-Cre, Emx1-Cre, Fezf2-CreER, TH-Cre, Chat-Cre, DAT-Cre, SOM-Cre and PV-Cre. Sample sizes and details are given in Supplementary Table 2. We used both male and female mice aged 6–36 weeks for brain image acquisition. The mice were housed in a standard specific pathogen-free animal facility under controlled conditions: a 12-hour light–dark cycle (7:00 to 19:00), room temperature maintained at 20–26 °C and relative humidity regulated between 40 and 70%.

Nissl dataset acquisition

An adult C57BL/6J mouse (8-week-old, male) was anaesthetized with 1% sodium pentobarbital solution and then perfused intracardially with 4% paraformaldehyde (PFA) (in 0.01 M PBS, pH 7.4, 4 °C). Following this procedure, the whole brain was removed and postfixed in 4% PFA at 4 °C for 24 h. After fixation, the brain was washed in 0.01 M PBS for 2 days. Next, the brain was stained with a 0.25% thionine solution (in 0.1 M acetic acid-sodium acetate buffer solution, pH 6.0) for 10 days. For staining and the subsequent dehydration and infiltration steps, the solution was constantly kept on a rotary shaker (rotating diameter 20 mm, speed 1 revolution per second). Then, the stained brain was dehydrated in a graded series of ethanol and acetone solutions: 50% ethanol for 2 h; 16 iterations of 70% ethanol for 12 h each; 85, 95 and 100% ethanol for 2 h each; a 1:1 ratio of 100% ethanol and 100% acetone for 2 h; 100% acetone for 12 h and another 100% acetone step for 1 day. Subsequently, the dehydrated brain was infiltrated in 50, 75 and 100% Spurr resin for 8 h each, then infiltrated in fresh 100% Spurr resin for 3 days. All the staining, dehydration and Spurr resin infiltration processes were performed at room temperature under rocking conditions. Finally, the brain was placed in a silicone mould filled with 100% Spurr resin solution and then polymerized in an oven at 60 °C for 36 h. The Spurr reagents (SPI) were freshly prepared. The 100% Spurr resin contained 10 g of ERL-4221, 7.6 g of DER-736, 26 g of nonenyl succinic anhydride and 0.2 g of dimethylaminoethanol. The 50 and 75% Spurr solutions (wt/wt) were prepared from 100% acetone solution and 100% Spurr solution30.

Afterwards, the MOST system was used to acquire the high-resolution 3D dataset of a Nissl-stained resin-embedded mouse brain12. The specimen was consecutively cut into 1-μm-thick sections, while line-scanning imaging (×40, 0.8 numerical aperture objective) was performed simultaneously. The continuous whole-brain imaging lasted for about 10 days. The raw dataset, comprising more than 11,000 coronal sections with a voxel resolution of 0.35 × 0.35 × 1 μm³, totals more than 0.74 terabytes. Because the adjacent sections obtained through MOST imaging are self-registered, this dataset can be directly reconstructed into an image volume without inter-slice alignment, allowing for arbitrary slicing along any angle and projection at any thickness. All animal experiments were approved by the Institutional Animal Ethics Committee of HUST-Suzhou Institute for Brainsmatics, and the same applies in the following data acquisition process.

Immunohistochemistry dataset acquisition

An adult C57BL/6J mouse (8-week-old, male) was used for immunostaining with NeuN (a neuron-specific nuclear protein) and NF160 (a protein found in neuronal axons), following a protocol described in ref. 31. Briefly, the slices were washed with PBS, blocked with 5% bovine serum albumin and then incubated with the primary antibodies overnight at 4 °C. After washing with PBS, the secondary antibodies, namely Alexa Fluor 488 goat anti-mouse immunoglobulin G (IgG) (Invitrogen, catalogue no. A11029, 1:1,000 dilution) and Alexa Fluor 594 goat anti-rabbit IgG (Invitrogen, catalogue no. A11037, 1:1,000 dilution), were applied for 2 h at room temperature. The slices were then imaged with a multichannel fluorescence slide microscope (Olympus VS120), and the images were processed with ImageJ software (National Institutes of Health).

Specific gene-type neuron distribution dataset acquisition

The Cre-recombinase-expressing mice, including Cart-Cre, Sert-Cre, Vglut2-Cre, Thy1-Cre, PlxinD1-CreER, Camk2-Cre, GAL-Cre, GAD2-Cre, Vgat-Cre, CRH-Cre, Emx1-Cre, Fezf2-CreER, TH-Cre, Chat-Cre, DAT-Cre, SOM-Cre and PV-Cre, were crossed with fluorescent-reporter mice (LSL-H2B-GFP) to assay Cre expression. In the cross-hybridized strains, the fluorescent proteins labelled different types of neuron. The mice were anaesthetized and perfused with PBS followed by 4% PFA, and the whole brain was postfixed at 4 °C for 24 h. Samples were embedded with glycol methacrylate resin. Briefly, each intact brain was dehydrated in a graded ethanol series and glycol methacrylate series (70, 85 and 100%), followed by being embedded in a vacuum oven at 48 °C for 24 h for polymerization.

After that, structured illumination fMOST was applied to acquire 3D datasets of all mouse brain samples at a voxel resolution of 0.32 × 0.32 × 2 μm³ (ref. 24). Briefly, for each sample, optical sectioning images of the top 2-μm-thick layer were acquired on the block face, then a diamond knife was used to remove the top layer so that a newly exposed surface was created to repeat the imaging-sectioning loop until the whole sample was sectioned and imaged. During the imaging process, propidium iodide was applied for real-time staining of the cytoarchitecture. We thus obtained a whole-brain dataset containing two channels: the green channel for the GFP signal that represents specific neurons and the red channel providing cytoarchitectonic information. For each brain, the image acquisition procedure lasted about 3 days and yielded roughly 5,500 coronal images.

Whole-head dataset acquisition

An adult C57BL/6J mouse (8-week-old, male) was anaesthetized and perfused with PBS, followed by 4% PFA. Then the whole head was removed and postfixed in 4% PFA at 4 °C for 48 h. The intact sample was subsequently dehydrated in a graded ethanol series, immersed in a graded LR-White resin (Ted Pella Inc.) series and embedded in a vacuum oven at 38 °C for 3 days. The embedded skull-brain sample was then imaged by a high-definition fMOST system32. During the imaging process, real-time counter-staining was also implemented to obtain propidium iodide-stained cytoarchitecture. A skull-brain dataset with a voxel resolution of 0.32 × 0.32 × 1 μm³ was finally obtained in 45 days, with a size of 61,460 × 58,005 × 22,048 voxels and a total raw data volume of 77.4 terabytes.

MOST-Nissl dataset normalization

To eliminate individual differences from using one single brain sample, and to correct the overall shape and spatial distribution of various brain structures, we nonlinearly registered the MOST-Nissl dataset to the average brain template in the CCF at a 1-μm resolution using the BrainsMapi registration tool. Only the brain outline was selected as the anatomical landmark for this registration, to ensure symmetry along the mid-sagittal plane in 3D space and the registered dataset positioned in the required orientation for constructing the brain atlas. We evaluated the registration result using the Dice score, achieving a value above 0.8 for the brain outline, which is generally considered satisfactory, as shown in the ‘outline’ row and the ‘Global registration for normalization’ column of Supplementary Table 6.
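For reference, the Dice score between two binary masks A and B is 2|A ∩ B| / (|A| + |B|). The following is a minimal sketch of that calculation, assuming NumPy and toy masks; the function name `dice_score` and the example volumes are illustrative only and are not taken from the BrainsMapi tool.

```python
import numpy as np

def dice_score(mask_a, mask_b):
    """Dice = 2|A n B| / (|A| + |B|) for two binary masks of equal shape."""
    a = np.asarray(mask_a, dtype=bool)
    b = np.asarray(mask_b, dtype=bool)
    if a.shape != b.shape:
        raise ValueError("masks must have the same shape")
    total = a.sum() + b.sum()
    if total == 0:
        return 1.0  # both masks empty: treat as perfect agreement
    return float(2.0 * np.logical_and(a, b).sum() / total)

# Toy example (hypothetical data): two 4x4x4 cubes offset by one voxel.
registered = np.zeros((6, 6, 6), dtype=bool)
template = np.zeros((6, 6, 6), dtype=bool)
registered[0:4, 0:4, 0:4] = True
template[1:5, 0:4, 0:4] = True
score = dice_score(registered, template)  # overlap 48 voxels, 64 + 64 total
```

In this toy case the score is 0.75, just below the 0.8 level treated as satisfactory in the text.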

After the registration, the normalized MOST-Nissl dataset was then resliced along the three image axes to obtain coronal, sagittal and horizontal image sequences with a voxel size of 1 μm. For each direction, minimum intensity projections were applied according to the actual thickness needed for atlas construction, ensuring that the images were within the optimal thickness range for identifying brain regions and nuclei based on cytoarchitecture13.
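The slab-wise minimum intensity projection can be sketched as follows. This is an illustration under stated assumptions, not the pipeline used here: it assumes NumPy, a volume already loaded in memory, non-overlapping slabs, and a hypothetical function name `min_intensity_slabs`. The minimum is the natural choice for bright-field Nissl images, in which stained cell bodies are darker than the background.

```python
import numpy as np

def min_intensity_slabs(volume, thickness, axis=0):
    """Collapse consecutive `thickness`-voxel slabs along `axis` by taking the minimum.

    The projected axis becomes the first axis of the result; an incomplete
    trailing slab is dropped.
    """
    n_slabs = volume.shape[axis] // thickness
    moved = np.moveaxis(volume, axis, 0)[: n_slabs * thickness]
    slabs = moved.reshape(n_slabs, thickness, *moved.shape[1:])
    return slabs.min(axis=1)

# Hypothetical 1-um volume of 40 x 8 x 8 voxels projected into 20-um slabs.
volume = np.random.default_rng(0).integers(0, 256, size=(40, 8, 8), dtype=np.uint8)
coronal = min_intensity_slabs(volume, thickness=20, axis=0)
```

Changing `axis` gives the corresponding sagittal or horizontal sequences from the same volume.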

Multiple-source auxiliary image registration

As the normalized MOST-Nissl dataset is a 3D image with isotropic 1-μm resolution, it serves as an ideal template for registering both 2D and 3D auxiliary images from different sources. Its high spatial resolution offers the potential for improved registration accuracy. The auxiliary image registration helps validate the parcellation of STAM, provides extra clues for identifying exact boundaries of specific brain regions and supplements cranial datum marks absent in the MOST-Nissl dataset. In most registration cases, the average Dice score for evaluation was above 0.8, indicating acceptable alignment. Below are the details for auxiliary image registration.

Specific gene-type neuron distribution to Nissl

Using cytoarchitectural information from the propidium iodide channel of the specific gene-type neuron distribution dataset, we identified boundaries of structures such as the hippocampal region and cerebellum as references. These structures were then nonlinearly registered at 1-μm resolution to the normalized MOST-Nissl dataset using the BrainsMapi tool. The resulting deformation field was subsequently applied to the GFP channel of the neuron distribution image, achieving coregistration of specific gene-type neuron distribution information with cytoarchitectural information. We quantitatively evaluated the registration quality across 22 specific neuron distribution datasets, using 12 brain regions as the statistical benchmarks. In most cases, the average Dice score exceeded 0.8, as shown in Extended Data Fig. 4e. Specifically, we evaluated the registration performance of a Camk2-Cre neuron distribution dataset for delineating borders among CA1, CA2 and CA3, with the results shown in Extended Data Fig. 3 and quantitative evaluation results in Supplementary Table 7.

Immunohistochemistry and ISH to Nissl

On the basis of the cytoarchitectural information provided by the immunohistochemical image, we identified the coronal plane in the normalized MOST-Nissl dataset that is closest to its axial position. Using a 2D nonlinear registration method provided by the ANTs tool, we registered the immunohistochemical data onto the selected coronal plane. The ISH dataset used in this study is derived from the Allen Institute’s gene expression dataset, and the registration steps are the same as those for immunohistochemical images. The quantitative evaluation of the registration quality is given in Supplementary Table 8.

Whole-head data to Nissl

The whole-head dataset we acquired includes both cranial and intracranial brain tissue. We manually extracted the contour of the brain tissue from the whole-head dataset as the reference and then used the BrainsMapi registration tool to nonlinearly register the whole-head dataset to the MOST-Nissl dataset in 3D space. The quantitative evaluation of the registration quality is given in Supplementary Table 9.

The specific gene-type neuron distribution and whole-head datasets mentioned above are 3D high-resolution datasets obtained using MOST imaging technology, with data sizes ranging from terabytes to tens of terabytes. Traditional image registration algorithms struggle to handle such large datasets. Therefore, before registration, we preconverted them into a multi-resolution archived TDat format and performed registration in a parallel computing environment. As an example, for an uncompressed specific neuron distribution dataset of roughly 10 terabytes, we used a computing cluster with five nodes in which each node was configured with four CPU (E5-2600) cores and 16 GB of memory. The registration process took roughly 1 h.

Brain structure delineation

The strategy and pipeline used for parcellation

The micrometre-resolution MOST-Nissl dataset provides detailed cytoarchitectural information, forming the basis for parcellating most brain structures. Given that neurons have a diameter of about 10 μm and cytoarchitecture is defined by clusters of cell bodies, we assume that brain-region boundaries shift at a 10-μm scale. Therefore, we chose to illustrate the boundaries of each brain structure at 20-μm axial intervals along standard sections from the normalized, 1-μm resolution MOST-Nissl dataset. Each coronal plane was projected with a 20-μm thickness to accumulate enough cells, aiding in distinguishing cell density differences between regions. These projected coronal sections were then handed over to neuroanatomical experts to identify structures on the images. When delineating the structures, the experts could use our 1-μm resolution MOST-Nissl dataset to observe and track the continuous cytoarchitectural changes along the axial direction, helping to determine the start and end points of a given brain structure. Resliced sagittal and horizontal sections also provided references when coronal views were unclear.

To further validate brain structural parcellation and assist in identifying challenging areas, we incorporated auxiliary images from various labelling and staining methods. Because these auxiliary images have already been registered to the corresponding Nissl-stained coronal planes, as the previous section described, the coregistered image stacks were imported into vector drawing software where illustrators switched between and observed coronal planes of different modalities. They manually outlined the boundaries of various structures, following the principle of ‘seamless adjacency’ to ensure no ‘terra nullius’ between neighbouring structures. For special cases in which certain brain regions undergo appearance, disappearance or notable morphological changes in the axial direction, we can appropriately reduce the axial spacing to capture their fine 3D morphology. After the drawing process, the vectorized structure boundaries on the coronal planes at 20-μm intervals were automatically converted into structure annotations with a resolution of 10 μm in both horizontal and axial directions on raster images. The raster images were resliced onto coronal and horizontal planes, and the anatomical structure boundaries on coronal and horizontal planes were manually smoothed and optimized.

Isocortex

Using the cytoarchitectural information from Nissl staining, we differentiated the isocortex into different layers along the radial direction. The discrepancies in laminar appearances of different cortical areas on cytoarchitectural images also provided supporting evidence to define the regional boundaries along the tangential direction. For example, a dense layer of cells between layers 1 and 2/3 in the ventral part of the retrosplenial area served as a landmark to determine the boundary between the ventral and dorsal parts of the retrosplenial area. In high-resolution images, the lateral part of the entorhinal area showed a layer of small cells in the fourth layer, helping to determine the boundary between the lateral part of the entorhinal area and the perirhinal area. In addition to manual observation, we calculated the grey level index along the radial direction on propidium iodide-stained cytoarchitectural images to assist in determining the boundaries between visual areas and the primary somatosensory area. For some boundaries that could not be identified in cytoarchitectural images, we used neuron distribution datasets to determine the positions of cortical areas such as auditory, infralimbic and orbital areas. Furthermore, we referenced cortical parcellation methods from other literature to determine the boundaries of regions such as gustatory, visceral, temporal association and ectorhinal areas11.

Hippocampus

Parcellating the hippocampus is similar to parcellating the cortex, requiring both layering along the radial direction and zoning along the tangential direction. The MOST-Nissl dataset provided sufficient cytoarchitectonic features to aid in the differentiation of the pyramidal layer, granular layer, molecular layer and so on. In addition, we used specific neuron distribution data to determine the boundaries of CA1, CA2 and CA3 along the tangential direction. Furthermore, through high-precision nonlinear registration, we coregistered more detailed information about hippocampal subregions from the reference literature onto our atlas17. The hippocampal subregion information used for registration is available at https://cic.ini.usc.edu/ (refs. 33,34).

Other subcortical structures

Distinct boundaries of the brain structures in the thalamus, hypothalamus and midbrain, as well as white matter fibres and ventricles, can be identified on cytoarchitectural images. For some challenging-to-identify subcortical structures, we introduced other methods for assistance. For example, specific gene-type neuron distribution images helped identify nuclei such as pedunculopontine nucleus, subregions such as the dorsal and ventral parts of ACB, the posterior part of basolateral amygdalar nucleus, the lateral part of the mediodorsal nucleus of the thalamus, the lateral part of the medial preoptic nucleus and the ventral part of medial geniculate complex. We also used the Allen ISH dataset to confirm nuclei such as the retrorubral area of midbrain reticular nucleus, subparaventricular zone and subregions of the PAG. We validated the parcellation patterns of subcortical nuclei by referencing representative studies8,14.

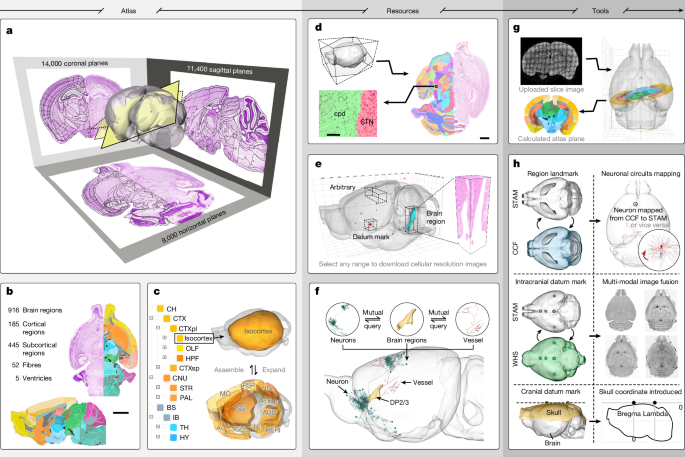

Reconstruction and visualization

Based on the 10-μm resolution annotation image, we used the marching cubes algorithm to systematically reconstruct the 3D morphology along the hierarchical nomenclature of the brain, ranging from fine nuclei to larger brain regions. The Laplacian smoothing algorithm provided by the Visualization Toolkit was used to simplify the surface models, preserving details while reducing the data volume and computational overhead for visualization35. Blender software (Blender Foundation) was then used to further compress the model dataset and convert it into an fbx format suitable for online presentation. Using the open-source framework Three.js, we established an online platform for browsing and interacting with the entire brain’s 3D surface models. The Zoomify tool (Zoomify, Inc., www.zoomify.com) was used to enable real-time web browsing of coronal, sagittal and horizontal brain slice image sequences at 1-μm horizontal resolution. Furthermore, the neuroglancer framework (Google Inc., www.neuroglancer.org) was used to achieve real-time arbitrary-angle reslicing of the 3D image dataset with an isotropic 1-μm resolution.

The brain-wide positioning system

Defining intracranial datum marks

We manually selected eight anatomical structures distributed throughout the entire brain of the normalized MOST-Nissl dataset used for atlas construction. Structures such as the anterior commissure, nucleus ambiguus, corpus callosum and facial nerve VIIn are also clearly visible in images of immunohistochemistry staining sections, including acetylcholinesterase staining, whereas the DGsg, lateral amygdalar and the medial geniculate complex are identifiable in the images acquired by MRI. On the basis of the reconstructed detailed 3D morphology, a total of 18 easily recognizable geometric feature points were further selected from these anatomical structures, such as the midpoint of the anterior commissure, and the posterior–dorsal endpoint of the dorsal raphe. The selection of these points considers the anterior–posterior, left–right and superior–inferior directions within the brain. The initial coordinates for each geometric feature point were determined on the basis of the reconstructed 3D models.

Using the 1-μm resolution MOST-Nissl dataset, local volumes were cropped around each feature point, with dimensions of 1,000 pixels for length, width and height. These volumes were imported into a custom-designed MATLAB (MathWorks, Inc.) App capable of simultaneously generating 20-μm thickness projection images on coronal, sagittal and horizontal planes. Several experts with neuroanatomical knowledge observed and determined the precise coordinate positions of these feature points on these standard planes. The results from various experts were then consolidated to establish the intracranial datum marks.

Defining cranial datum marks

We down-sampled the acquired whole-head images from an adult male C57BL/6J mouse to isotropic 20-μm resolution. These images were imported into Amira Software (Thermo Fisher Scientific) for volume rendering to determine the initial coordinates of the bregma and lambda points, which served as cranial datum marks. Subsequently, we cropped the images surrounding these two points from the original-resolution whole-head images. These cropped images were then imported into Amira Software for 3D reconstruction. On the basis of the reconstructed morphology of the skull sutures, the precise coordinates of the two points were determined.

Calculating the spatial relationship among datum marks

We used a nonlinear registration algorithm to register the whole-head dataset to the MOST-Nissl dataset, obtaining a spatial mapping relationship between the two sets of images13. This mapping was then applied to the coordinates of intracranial datum marks, allowing us to establish the precise spatial relationship between intracranial and cranial datum marks.

Constructing the brain-wide stereotaxic coordinate system

The coordinate system was established using the length, width and height directions of the normalized MOST-Nissl dataset as the x, y and z axes, respectively. This coordinate system could be oriented based on surgical requirements, using any cranial or intracranial datum marks as the origin.
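As an illustration of re-orienting the coordinate system around a chosen datum mark, the sketch below converts a voxel index into an offset in micrometres from an arbitrary origin. The function name `to_stereotaxic`, the example coordinates and the sign conventions are assumptions made for illustration; they are not values from STAM.

```python
from typing import Tuple

Point = Tuple[float, float, float]

def to_stereotaxic(voxel: Point, origin_voxel: Point, voxel_size_um: float = 1.0) -> Point:
    """Offset of `voxel` from `origin_voxel` along x, y and z, in micrometres."""
    return tuple((v - o) * voxel_size_um for v, o in zip(voxel, origin_voxel))

# Hypothetical voxel indices in a 1-um volume (not real datum-mark coordinates).
datum_mark = (5000, 3000, 4500)
target = (5200, 3100, 4000)
offset_um = to_stereotaxic(target, datum_mark)

# The same physical offset expressed from 10-um annotation indices.
offset_from_10um = to_stereotaxic((520, 310, 400), (500, 300, 450), voxel_size_um=10.0)
```

Switching the origin from one datum mark to another only changes `origin_voxel`; the axes stay aligned with the dataset.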

Inter-atlas mapping and neuronal circuits mapping

STAM and CCF

We first registered the MOST-Nissl dataset onto the CCF using only the brain outline as the landmark for global correction, ensuring that its position and orientation in 3D space remained consistent with the CCF. For more precise mapping, we selected several brain regions as anatomical landmarks to register the STAM onto the CCF, using the BrainsMapi tool. Because of differences in brain-region definitions and boundaries between the two atlases, this mapping achieves brain-region-level precision, with an average Dice score greater than 0.8, as shown in the ‘Fine registration for inter-atlas mapping’ column of Supplementary Table 6.

STAM and WHS

Using STAM’s brain-wide positioning system, which offers rich reference data for image registration, we first selected five pairs of intracranial datum marks visible in both Nissl and MRI images to linearly align WHS with STAM. Then, several brain regions were used as landmarks to perform a nonlinear registration of WHS onto STAM by means of the BrainsMapi tool. This method can also be applied to establish spatial mapping relationships between STAM and other atlases.
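A linear alignment from paired landmarks can be estimated by least squares. The sketch below fits a 3D affine transform to matched point pairs with NumPy; it assumes an affine model (the text specifies only a linear alignment), and the function names and landmark coordinates are hypothetical.

```python
import numpy as np

def fit_affine(src, dst):
    """Least-squares 3D affine (4x4 homogeneous matrix) mapping src points onto dst."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    if src.shape != dst.shape or src.ndim != 2 or src.shape[1] != 3 or len(src) < 4:
        raise ValueError("need at least four matching 3D point pairs")
    src_h = np.hstack([src, np.ones((len(src), 1))])       # (N, 4) homogeneous
    params, *_ = np.linalg.lstsq(src_h, dst, rcond=None)   # (4, 3) solution
    matrix = np.eye(4)
    matrix[:3, :] = params.T                                # [A | t]
    return matrix

def apply_affine(matrix, points):
    """Apply a 4x4 homogeneous affine to an (N, 3) array of points."""
    points = np.asarray(points, dtype=float)
    return points @ matrix[:3, :3].T + matrix[:3, 3]

# Five hypothetical, non-coplanar landmark pairs (not real datum marks).
whs_marks = np.array([[0, 0, 0], [10, 0, 0], [0, 10, 0], [0, 0, 10], [5, 5, 5]], float)
stam_marks = whs_marks * 1.1 + np.array([2.0, -3.0, 4.0])
whs_to_stam = fit_affine(whs_marks, stam_marks)
aligned = apply_affine(whs_to_stam, whs_marks)
```

Four non-coplanar pairs determine an affine transform exactly, so five pairs leave a small amount of redundancy against landmark placement error; the nonlinear stage then refines the result.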

STAM and MBSC

We integrated the delineation and nomenclature of MBSC into STAM, using supplementary data from ref. 19, which connect MBSC’s nomenclature with that of the ARA. First, we used these data to construct a hierarchically organized MBSC nomenclature. We then extracted anatomical label slices of MBSC from these supplementary data, determined their spatial relationship to the corresponding CCF slices and accurately located them in STAM’s coordinate system, which is initially registered to the CCF. These label slices were converted into vectorized borders and visualized in STAM’s 2D viewer. Owing to the 100-μm interval of the MBSC slices, direct 3D reconstruction is challenging, so we show them at present in 2D, and only one in every five atlas levels in STAM corresponds to an MBSC atlas level.

Neuronal circuits mapping

Using the fMOST imaging technology24, we can obtain datasets containing both neural circuit and propidium iodide imaging channels. The cytoarchitectural images from the propidium iodide channel provide anatomical features that allow us to establish a spatial mapping between neural circuit images and STAM. For single-neuron circuit datasets without propidium iodide images, the intrinsic fluorescence contours of cell-dense regions can be used to identify landmarks such as DGsg-mid, enabling spatial mapping with STAM. Moreover, the method of constructing precise spatial mapping between STAM and CCF can be generalized to other 3D mouse brain atlases.

Nomenclature

The construction of STAM primarily used the nomenclature of the ARA and Brain maps v.4.0, with extra references to the MBSC for the delineation of certain subregions6,7,8. During the construction of STAM, we also annotated some new subregions and nuclei. For the newly defined subregions in the hippocampal area, we directly adopted the names provided in the literature, as these subregions follow the naming conventions of ARA. For instance, for the subregions identified in ACB, PAG and zona incerta, we adhered to the naming conventions of ARA, using the nucleus name followed by the lowercased abbreviation defining their locations. The naming of other newly defined brain areas and nuclei in STAM also follows the ARA naming conventions.

Web service construction

Open access to the MOST-Nissl dataset

We converted the 1-μm resolution MOST-Nissl dataset into TDat format. The TDat-formatted image dataset is stored as a multi-resolution image pyramid and is diced into cubes of 256 voxels along each axis. We developed a server-side program that crops any local image from the TDat-formatted MOST-Nissl dataset, given the desired spatial range and resolution. The program returns an organized 3D image in .tiff format to the client side for downloading.
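The cropping step amounts to finding which cubes overlap a requested range at a given pyramid level. The following sketch shows that index arithmetic only. It assumes that level n is downsampled by a factor of 2^n per axis and that cubes are indexed from the volume origin; both are assumptions about the layout rather than documented properties of TDat, and `cubes_for_range` is a hypothetical name.

```python
from itertools import product

CUBE = 256  # cube edge length in voxels, as stated in the text

def cubes_for_range(start, stop, level=0, cube=CUBE):
    """Yield (ix, iy, iz) indices of cubes overlapping the half-open range [start, stop).

    `start` and `stop` are full-resolution voxel coordinates. Level n is
    ASSUMED to downsample by 2**n per axis (not confirmed by the source).
    """
    scale = 2 ** level
    axes = []
    for lo, hi in zip(start, stop):
        if hi <= lo:
            raise ValueError("stop must exceed start on every axis")
        lo_level = lo // scale           # first voxel touched at this level
        hi_level = -(-hi // scale)       # ceiling division: one past the last voxel
        axes.append(range(lo_level // cube, (hi_level - 1) // cube + 1))
    yield from product(*axes)

# A 100-voxel-wide request that straddles a cube boundary at full resolution.
full_res = list(cubes_for_range((200, 0, 0), (300, 10, 10), level=0))
half_res = list(cubes_for_range((200, 0, 0), (300, 10, 10), level=1))
```

Reading only the overlapping cubes at the requested level keeps the amount of data touched proportional to the requested region rather than to the whole dataset.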

Brain slice registration

We selected two sets of propidium iodide- and DAPI-stained whole-brain datasets acquired by the fMOST system and nonlinearly registered them to the MOST-Nissl dataset. Using the registered data as a foundation, we generated a training set by extracting slices from different angles and positions. This training set was then input into a prediction network built on the SVRnet framework36. On the basis of the trained prediction network, we established an online registration web service for 2D brain slices to the 3D brain atlas. This service receives a single propidium iodide- or DAPI-stained brain slice image uploaded by the user. The image is input into the corresponding mode of the prediction network in the backend, where the prediction network calculates the slice parameters, computes the corresponding brain atlas slice and returns the result to the user. Simultaneously, the slice angle and position parameters are passed to the arbitrary-angle reslice browsing service, which returns 1-μm resolution MOST-Nissl dataset images at the same angle as the user’s brain slice.

Virtual surgery planning

We used the 10-μm resolution annotation image of STAM to create a table recording the brain structures to which every 10-μm sampled point in the 3D space of STAM belongs. Using this table, we then developed a server-side program that calculates the brain structures that any given injection path passes through and returns the result to the client side for visualization. This program is used for both the manual and intelligent modes. We also developed a program that searches for a path avoiding the user-specified structures. This program randomly emits rays from the user-assigned injection target and checks whether any of these rays avoids penetrating the given structures. If no ray meets the requirement, the program emits new rays until a qualified path is found or the calculation time limit is reached. This program is used for intelligent mode only.
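The two programs can be sketched as follows. This is a simplified illustration, not the server-side implementation: it assumes NumPy, an integer label volume with 0 as background, nearest-voxel sampling along straight paths, rays emitted uniformly in all directions, and a fixed number of rounds standing in for the time limit. The function names and the toy volume are hypothetical.

```python
import numpy as np

def structures_on_path(labels, start, end, step=0.5):
    """Distinct structure ids, in order of first encounter, along the segment start -> end."""
    start, end = np.asarray(start, float), np.asarray(end, float)
    n = max(int(np.ceil(np.linalg.norm(end - start) / step)), 1)
    points = start + np.linspace(0.0, 1.0, n + 1)[:, None] * (end - start)
    idx = np.rint(points).astype(int)                       # nearest voxel
    inside = np.all((idx >= 0) & (idx < np.array(labels.shape)), axis=1)
    ids = labels[tuple(idx[inside].T)].tolist()
    seen, ordered = set(), []
    for i in ids:
        if i not in seen:
            seen.add(i)
            ordered.append(i)
    return ordered

def find_clear_path(labels, target, avoid, rays_per_round=64, max_rounds=20, seed=0):
    """Return a unit direction from `target` whose ray misses every id in `avoid`, or None."""
    rng = np.random.default_rng(seed)
    target = np.asarray(target, float)
    reach = float(np.linalg.norm(labels.shape))              # long enough to exit the volume
    avoid = set(avoid)
    for _ in range(max_rounds):                              # stand-in for the time limit
        directions = rng.normal(size=(rays_per_round, 3))
        directions /= np.linalg.norm(directions, axis=1, keepdims=True)
        for d in directions:
            if avoid.isdisjoint(structures_on_path(labels, target, target + reach * d)):
                return d
    return None

# Toy label volume: structure 1 holds the target, structure 2 is a plate to avoid.
labels = np.zeros((20, 20, 20), dtype=int)
labels[8:12, 8:12, 8:12] = 1
labels[:, :, 14:16] = 2
crossed = structures_on_path(labels, (10, 10, 10), (10, 10, 19))
direction = find_clear_path(labels, (10, 10, 10), avoid={2})
```

A practical planner would additionally restrict rays to directions that reach the skull surface; that constraint is omitted here for brevity.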

Mutual query of neuroinformation

After multi-type neuroinformation was registered onto the STAM, we computed the brain regions or nuclei in which their key nodes were located. For each neuron morphology dataset, the key nodes are its soma, terminals and branching points, the coordinates of which are recorded in a .swc format file. On the basis of the calculated locations, we constructed a table describing the relationship between the brain structure list and the neuroinformation list. We then developed a query service based on this table and integrated this service into the webpage.
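Extracting key nodes from an SWC file can be sketched as below. The sketch relies on the standard SWC column order (id, type, x, y, z, radius, parent), in which type 1 denotes the soma and the root has parent -1; branching points are taken as nodes with two or more children and terminals as nodes with none. The function name `key_nodes` and the example neuron are hypothetical.

```python
from collections import Counter

def key_nodes(swc_text):
    """Return {'soma': [...], 'branch': [...], 'terminal': [...]} of (x, y, z) tuples."""
    nodes = {}
    for line in swc_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        nid, ntype, x, y, z, _radius, parent = line.split()[:7]
        nodes[int(nid)] = (int(ntype), (float(x), float(y), float(z)), int(parent))
    children = Counter(parent for _, _, parent in nodes.values())
    result = {"soma": [], "branch": [], "terminal": []}
    for nid, (ntype, xyz, _parent) in nodes.items():
        if ntype == 1:
            result["soma"].append(xyz)
        elif children[nid] >= 2:
            result["branch"].append(xyz)
        elif children[nid] == 0:
            result["terminal"].append(xyz)
    return result

# Hypothetical five-node neuron: a soma, one bifurcation and two terminals.
example_swc = """# id type x y z radius parent
1 1 0 0 0 5 -1
2 3 10 0 0 1 1
3 3 20 0 0 1 2
4 3 30 10 0 1 3
5 3 30 -10 0 1 3
"""
keys = key_nodes(example_swc)
```

Each returned coordinate can then be looked up in the registered annotation volume to obtain the structure that contains it, which is the information the query table records.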

As mentioned previously, all these web services are integrated into a single entry point. They are organized as different tabs on one page, facilitating fast switching between services. Users can become familiar with these services both by reading the manuals and by following the website tours provided on this webpage.

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.