Mice

This study was based on data from 36 mice (older than postnatal day 60, both male and female). Fifteen GP4.3 mice (Thy1-GCaMP6s; Jackson Laboratory, JAX 024275) were used for longitudinal two-photon calcium imaging. Among them, one mouse was removed from subsequent neuronal data analyses due to the low number of matched neurons across days (see ‘Preprocessing of two-photon imaging data’). Five GAD2-IRES-Cre mice (JAX 010802) were used for ALM photoinhibition in the home cage. Five additional GAD2-IRES-Cre mice were used only for behaviour training in the home cage. Eleven Slc17a7-Cre mice (JAX 023527) crossed to Cre-dependent GCaMP6f reporter Ai148 mice (JAX 030328) were used for behaviour training but were not used for calcium imaging due to poor behavioural performance (Extended Data Fig. 1i).

All procedures were in accordance with protocols approved by the Institutional Animal Care and Use Committees at Baylor College of Medicine. Mice were housed in a 12:12 reversed light:dark cycle and tested during the dark phase. On days not tested, mice received 0.5–1 ml of water. On other days, mice were tested in experimental sessions lasting 1–2 h, during which they received all their water (0.5–1 ml). If mice did not maintain a stable body weight, they received supplementary water65. All surgical procedures were carried out aseptically under 1–2% isoflurane anaesthesia. Buprenorphine Sustained Release (1 mg kg⁻¹) and Meloxicam Sustained Release (4 mg kg⁻¹) were used for preoperative and postoperative analgesia. A mixture of bupivacaine and lidocaine was administered topically before scalp removal. After surgery, mice were allowed to recover for at least 3 days with free access to water before water restriction.

Surgery

Mice were prepared with a clear skull cap and a headpost41,65. The scalp and periosteum over the dorsal skull were removed. For ALM photoinhibition in GAD2-IRES-Cre mice, AAV8-Ef1a-DIO-ChRmine-mScarlet46 (Stanford Gene Vector and Virus Core; titre 8.44 × 10¹² viral genomes (vg) per ml) was injected in the left ALM (anterior 2.5 mm from bregma, lateral 1.5 mm, depth 0.5 and 0.8 mm, 200 nl at each depth) using a Nanoliter 2010 injector (World Precision Instruments) with bevelled glass pipettes (20–30 µm tip diameter). A layer of cyanoacrylate adhesive was applied to the skull. A custom headpost was placed on the skull and cemented in place with clear dental acrylic. A thin layer of clear dental acrylic was applied over the cyanoacrylate adhesive covering the entire exposed skull.

For two-photon calcium imaging in GP4.3 mice, a glass window was additionally implanted over ALM. A circular craniotomy with a diameter of 3.2 mm was made over the left ALM (anterior 2.5 mm from bregma, lateral 1.5 mm). The dura inside the craniotomy was removed. A glass assembly consisting of a single 4 mm diameter coverslip (Warner Instruments; CS-4R) on top of two 3 mm diameter coverslips (Warner Instruments; CS-3R) was bonded using optical adhesive (Norland Products; NOA 61) and UV light (Kinetic Instruments Inc.; SpotCure-B6). The glass window was affixed to the skull surrounding the craniotomy using cyanoacrylate adhesive (Elmer; Krazy Glue) and dental acrylic (Lang Dental Jet Repair Acrylic; 1223-clear).

Behaviour tasks and training in home cage

Details of the behaviour task and training in the autonomous home-cage system have been described previously43. In brief, a headport (~20 × 20 mm) was located on the front side of the home cage. The two sides of the headport were fitted with widened tracks that guided a custom headpost (26.5 mm long, 3.2 mm wide) into a narrow spacing where the headpost could trigger two snap action switches (D429-R1ML-G2, Mouser) mounted on both sides of the headport. Upon switch trigger, two air pistons (McMaster; 6604K11) were pneumatically driven (Festo; 557773) to clamp the headpost. A custom 3D-printed platform was placed inside the home cage in front of the headport. The platform was embedded with a load cell (Phidgets; CZL639HD) to record mouse body weight. This body weight-sensing platform was also used to detect struggles during head fixation and to trigger self-release. A lickport with two lickspouts (5 mm apart) was placed in front of the headport. Each lickspout was electrically coupled to a custom circuit board that detected licks via completion of an electrical circuit upon licking contacts41,66. Water rewards were dispensed by two solenoid valves (The Lee Company; LHDA1233215H). The sensory stimulus for the tactile-instructed licking task was a mechanical pole (1.5 mm diameter) on the right side of the headport. The pole was motorized by a linear motor (Actuonix; L12-30-50-12-I) and presented at different locations to stimulate the whiskers. The sensory stimuli for the auditory-instructed licking task were pure tones (2 kHz or 10 kHz) provided by a piezo buzzer (CUI Devices; CPE-163) placed in front of the headport. The auditory ‘go’ cue (3.5 kHz) in both tactile and auditory tasks was provided by the same piezo buzzer.

Protocols stored on microcontrollers (Arduino; A000062) operated the home-cage system and autonomously trained mice in voluntary head fixation and behavioural tasks, as well as carrying out optogenetic testing. In brief, mice were placed inside the home cage and could freely lick both lickspouts, which were placed inside the home cage through the headport. The rewarded lickspout alternated between the left and right lickspouts (3 times each) to encourage licking on both lickspouts. This phase of the training acclimatized mice to the lickport, and the lickport was gradually retracted into the headport away from the home cage. The lickport retraction continued until the tip of the lickspouts was approximately 14 mm away from the headport. At this point, mice could only reach the lickspouts by entering the headport with the headpost triggering the head-fixation switches. After 30 successful voluntary head-fixation switch triggers, the pneumatic pistons were activated to clamp the headpost upon the switch trigger (‘voluntary head fixation’; Fig. 1c). The head-fixation training protocol continuously increased the pneumatic clamping duration (from 3 s to 30 s). This clamping was self-released when the body weight readings from the load-sensing platform exceeded either an upper (30 g) or lower (−1 g) threshold. Overt movements of the mice during the head fixation typically produced large fluctuations in weight readings exceeding the thresholds. These thresholds were dynamically adjusted during the training process.

When mice successfully completed the head-fixation training protocol by reaching a 30 s head-fixation duration, the next training protocol for the tactile-instructed licking task began. In the tactile-instructed licking task, mice used their whiskers to discriminate the location of a pole and reported their choice using directional licking for a water reward41,65 (Fig. 1d). The pole was presented at one of two positions that were 6 mm apart along the anterior–posterior axis. The posterior pole position was approximately 5 mm from the right whisker pad. The sample epoch was defined as the time from the pole movement onset to 0.1 s after the pole retraction onset (sample epoch, 1.3 s). A delay epoch followed, during which the mice had to keep the information in short-term memory (delay epoch, 1.3 s). An auditory ‘go’ cue (0.1 s duration) signalled the beginning of the response epoch, and mice reported their choice by licking one of the two lickspouts. Task training had three subprotocols that shaped mouse behaviour in stages. First, a ‘directional licking’ subprotocol trained mice to lick both lickspouts and switch between the two. Then, a ‘discrimination’ subprotocol taught mice to report pole position with directional licking. Finally, a ‘delay’ subprotocol taught mice to withhold licking during the delay epoch and initiate licking upon the ‘go’ cue by gradually (in 0.2 s steps) increasing the delay epoch duration up to 1.3 s. At the end of the delay subprotocol, the head-fixation duration was further increased from 30 s to 60 s. The head-fixation duration was increased by 2 s after every 20 successful head fixations. This was done to obtain more behavioural trials in each head fixation. The program also adjusted the probability of each trial type to correct biased licking of the mice.
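The final head-fixation schedule described above can be written as a simple step function. The following is an illustrative sketch rather than the microcontroller code used in the study; the function and parameter names are hypothetical, and only the numbers (30 s start, 60 s ceiling, 2 s step, 20 successful fixations per step) are taken from the text.

```python
def head_fixation_duration_s(n_successful_fixations: int,
                             start_s: float = 30.0,
                             max_s: float = 60.0,
                             step_s: float = 2.0,
                             fixations_per_step: int = 20) -> float:
    """Clamping duration after a given number of successful head fixations.

    Hypothetical helper: the duration grows by `step_s` after every
    `fixations_per_step` successful fixations and is capped at `max_s`.
    """
    completed_steps = n_successful_fixations // fixations_per_step
    return min(max_s, start_s + step_s * completed_steps)
```

Under these assumptions, the 60 s ceiling is reached after 300 successful head fixations (15 steps of 2 s).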

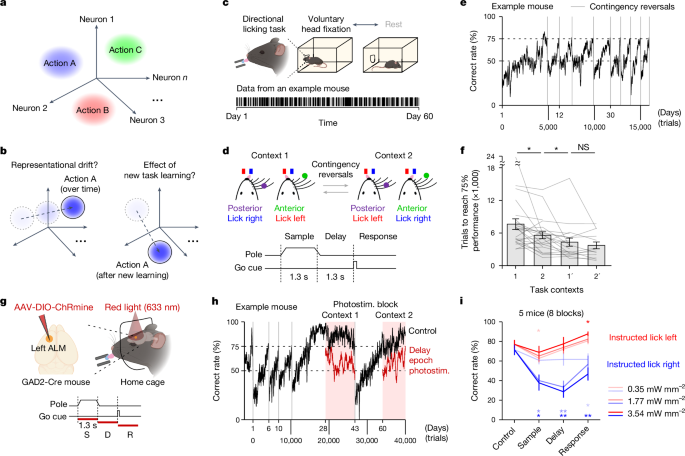

Mice were first trained in one sensorimotor contingency (Fig. 1b, task context 1; anterior pole position → lick left, posterior pole position → lick right). Then, the correspondence between pole locations and lick directions was reversed (task context 2; anterior pole position → lick right, posterior pole position → lick left). Over multiple months, mice could learn multiple rounds of sensorimotor contingency reversal depending on the experiment (see ‘Performance criteria for contingency reversals and acclimatization to imaging setup’).

For the auditory-instructed licking task, mice were trained to perform directional licking to report the frequency of a pure tone presented during the sample epoch (Fig. 5b, task context 3; 2 kHz (low tone) → lick left, 10 kHz (high tone) → lick right). Task structures such as the delay epoch (1.3 s) and the auditory ‘go’ cue (3.5 kHz, 0.1 s) were the same as in the tactile-instructed licking task.

Performance criteria for contingency reversals and acclimatization to imaging setup

For mice that underwent the optogenetic experiment in the home cage, contingency reversal was automatically introduced when mice reached performance criteria of >75% correct and <50% early lick for 100 trials in a given task contingency (Fig. 1e,h). Mice learned multiple rounds of contingency reversals before the optogenetic experiment was initiated. The optogenetic experiment was manually initiated based on inspection of behavioural performance (Fig. 1h).

Mice for two-photon imaging were over-trained in each task context to reach performance criteria of >80–85% correct for 100 trials. Over-training facilitated faster habituation after transfer to the two-photon setup. After mice acquired this high level of task performance in home-cage training, we transferred the mice to the imaging setup, where they performed the same task in daily sessions under the two-photon microscope. During this period, mice were singly housed outside of the automated home-cage system. A brief acclimatization period lasting a few days was required to habituate the mice to perform the task under the microscope (Extended Data Fig. 1e–g). We started imaging sessions once mice recovered their task performance (typically >75%). After imaging across multiple sessions, mice were returned to the automated home cage, in which they learned other tasks. In this manner, we repeatedly transferred mice between the automated home cage and the two-photon setup for as long as possible (Extended Data Fig. 1f,g).

For the tactile-instructed licking task, mice were first trained and imaged in one sensorimotor contingency (Fig. 3b, task context 1). After imaging under the two-photon microscope, we transferred the mice back to the home cage and reversed the sensorimotor contingency (Fig. 3b, task context 2). The mice were over-trained in the new task contingency before being transferred to the two-photon setup to re-image the same ALM populations across task contexts (task context 1→2; 10 mice). In a subset of mice, after imaging, we re-trained the mice in the previous contingency in the home cage (Fig. 4b, task context 1′). After they achieved proficient task performance, we transferred the mice to the two-photon setup and imaged the same ALM populations again (task context 1→2→1′; 5 mice). In a subset of mice, we further repeated the contingency reversal one more time and imaged across four task contexts (task context 1→2→1′→2′; 3 mice).

For the auditory-instructed licking task, mice were imaged first in the tactile task contexts 1 and 2 before training in the auditory task to image the same ALM populations across task contexts (task context 1→2→3; 8 mice).

ALM photoinhibition in home cage

The procedure for ALM photoinhibition in the home cage has been described previously43. Light from a 633 nm laser (Ultralaser; MRL-III-633L-50 mW) was delivered via an optical fibre (Thorlabs; M79L005) placed above the headport (Fig. 1g). Photostimulation of the virus injection site was through a clear skull. The photostimulus was a 40 Hz sinusoid lasting for 1.3 s, including a 100 ms linear ramp during photostimulus offset to reduce rebound neuronal activity67. Photostimulation was delivered in a random subset of trials (18%) during either the sample, delay, or response epoch. Photostimulation started at the beginning of the task epoch. Photostimulation power was 2.5, 12.5, or 25 mW, randomly selected in each trial. Therefore, the probability of each photostimulation condition was 2% (total of 9 conditions: 3 epochs × 3 powers). The size of the light beam on the skull surface was 7.07 mm² (3.0 mm diameter). Powers of 2.5, 12.5, and 25.0 mW corresponded to light intensities of 0.35, 1.77, and 3.54 mW mm⁻², respectively. This range of light intensity was much lower than that used in previous studies41,42 (typically 1.5 mW with a light beam diameter of 0.4 mm, corresponding to 11.9 mW mm⁻²). To prevent the mice from distinguishing photostimulation trials from control trials using visual cues, a masking flash was delivered using a 627 nm LED on all trials near the eyes of the mice. The masking flash began at the start of the sample epoch and continued through the end of the response epoch in which photostimulation could occur.
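The light intensities and condition probabilities quoted above follow from dividing power by the area of a circular beam and dividing the photostimulation fraction by the number of conditions. The sketch below reproduces that arithmetic; the function name is hypothetical, and a uniform circular beam profile is assumed.

```python
import math

def light_intensity_mw_per_mm2(power_mw: float, beam_diameter_mm: float) -> float:
    """Mean intensity of a uniform circular beam (assumed profile)."""
    area_mm2 = math.pi * (beam_diameter_mm / 2.0) ** 2
    return power_mw / area_mm2

# Home-cage photostimulation: 3.0 mm beam at 2.5, 12.5 and 25 mW.
home_cage = [round(light_intensity_mw_per_mm2(p, 3.0), 2) for p in (2.5, 12.5, 25.0)]

# Previous studies: 1.5 mW through a 0.4 mm beam.
previous = round(light_intensity_mw_per_mm2(1.5, 0.4), 1)

# 18% of trials spread over 3 epochs x 3 powers.
per_condition_probability = 0.18 / (3 * 3)

print(home_cage, previous, per_condition_probability)
```

The beam area evaluates to about 7.07 mm², matching the value in the text.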

Videography

Two CMOS cameras (Teledyne FLIR; Blackfly BFS-U3-04S2M) were used to measure orofacial movements of the mouse from the bottom and side views (Extended Data Figs. 1a,b and 5e). Both the bottom and side views were acquired at 224 × 192 pixels and 400 frames per second. Mice performed the task in complete darkness, and videos were recorded under infrared 940 nm LED illumination (Luxeon Star; SM-01-R9). Custom-written software controlled the video acquisition68.

Two-photon imaging

A Thorlabs Bergamo II two-photon microscope equipped with a tunable femtosecond laser (Coherent; Chameleon Discovery) was controlled by ScanImage 2016a (Vidrio). GCaMP6s was excited at 920 nm. Images were collected with a 16× water immersion lens (Nikon, 0.8 NA, 3 mm working distance) at 2× zoom (512 × 512 pixels, 600 × 600 µm). For all imaging sessions, we performed volumetric imaging by serially scanning five planes (30 or 40 µm equally spaced along the z axis) at 6 Hz each. The range of depth from all imaging planes was 120–500 µm below the pial surface, and the range of laser power was 80–225 mW, measured below the objective. To identify the spatial locations of individual fields of view (FOVs), we imaged at the pial surface before imaging during the task (Extended Data Fig. 2b). To monitor the same ALM neurons across days, we saved 6 reference images at 10 µm intervals around the most superficial imaging plane for all imaging sessions and identified the most similar imaging plane across sessions by visual inspection.

Multiple FOVs were imaged across multiple days in each task context. The same set of FOVs was imaged across multiple task contexts. Across all experiments, the total duration from the first imaging session to the last imaging session was 26–233 days (Extended Data Fig. 1g; 95.86 ± 71.95 days, mean ± s.d. across mice).

Behaviour data analysis

Performance was computed as the fraction of correct choices, excluding early lick trials and no lick trials. Mice whose performance never exceeded 70% after 35–40 days of training were considered unsuccessful in task learning (Extended Data Fig. 1h,i). Chance performance was 50%. Behavioural effects of photoinhibition were quantified by comparing the performance under photoinhibition with control trials using a paired two-tailed t-test (Fig. 1i). To quantify the speed of task learning in a given task context (Fig. 1f and Extended Data Figs. 1c, 6g and 10d), we calculated the number of trials to reach performance criteria of >75% correct and <50% early lick for 100 trials. We excluded the trials in the head-fixation training protocol from the initial task learning for a fair comparison.
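The performance measure and the trials-to-criterion count can be sketched as follows. This is a minimal illustration, not the analysis code used in the study: the outcome labels and function names are hypothetical, and it assumes that the criterion is evaluated over a sliding window of the most recent 100 trials, with the early-lick fraction taken over all trials in the window.

```python
from typing import Optional, Sequence

def performance(outcomes: Sequence[str]) -> float:
    """Fraction correct, excluding early-lick and no-lick trials."""
    scored = [o for o in outcomes if o in ("correct", "error")]
    if not scored:
        return float("nan")
    return sum(o == "correct" for o in scored) / len(scored)

def trials_to_criterion(outcomes: Sequence[str],
                        window: int = 100,
                        min_correct: float = 0.75,
                        max_early: float = 0.50) -> Optional[int]:
    """Number of trials until the last `window` trials first meet both criteria.

    Returns None if the criteria are never met.
    """
    for end in range(window, len(outcomes) + 1):
        recent = outcomes[end - window:end]
        early_fraction = sum(o == "early" for o in recent) / window
        if performance(recent) > min_correct and early_fraction < max_early:
            return end
    return None
```

Trials from the head-fixation training protocol would be dropped from `outcomes` before calling `trials_to_criterion`, in line with the exclusion described above.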

Video data analysis

We used DeepLabCut69 to track manually defined body parts. Separate models were used to track tongue and jaw movements (Extended Data Fig. 1a,b). The development dataset for model training and validation contained manually labelled videos from multiple mice and multiple sessions (correct trials only). For tongue network model, 6 markers were manually labelled in 500 video frames. For jaw network model, 5 markers were manually labelled in 300 video frames. The frames for labelling were automatically and uniformly selected by the program at different timepoints within trials. The labelled frames of the training dataset were split randomly into a training dataset (95%) and a test dataset (5%). Training was performed using the default settings of DeepLabCut. All models were trained up to 500,000 iterations with a batch size of one. The trained models tracked the body features in the test data with an average tracking error of less than 2.5 pixels68.

To analyse tongue and jaw movements during the response epoch, we defined single lick events based on continuous presence of the tongue volume in each frame44. Tongue volume was determined from the internal area of the four tongue markers (Extended Data Fig. 1a, left), which were located at the corners of tongue. Lick events were separately grouped based on the lick duration for further time-bin-matched correlation analysis. x and y pixel positions of the tongue tip trajectories were calculated by averaging the frontal tongue markers in each frame. x and y pixel positions of the jaw tip trajectories were calculated by averaging the three frontal jaw markers in each frame. For each lick event, we obtained four time series (x position, y position, x velocity and y velocity) for the tongue (or jaw) tip trajectories (Extended Data Fig. 1a,b, middle). To calculate the similarity between the tongue (or jaw) tip trajectories across lick events (within lick left or lick right), we computed Pearson correlation on the time series for all pairwise lick events within and across sessions. We then calculated the average correlation for the four parameters (x position, y position, x velocity and y velocity) and compared them within session and across sessions (Extended Data Fig. 1a,b, right).

To examine jaw movements during the delay epoch across task contexts, we calculated the x and y displacement of the jaw tip position by subtracting the average jaw position in a baseline period (1.57 s) before the sample epoch (Extended Data Fig. 6f).

Preprocessing of two-photon imaging data

Imaging data were preprocessed using the Suite2p package70 to perform motion correction and extract raw fluorescence signals (F) from automatically identified regions of interest (ROIs). ROIs with skewness >1 were used for further analyses. The neuropil-corrected trace was estimated as Fneuropil_corrected(t) = F(t) − 0.7 × Fneuropil(t). To visualize activity (Fig. 2d, top and Extended Data Fig. 2j, left), ΔF/F0 (type 1) was separately calculated in each trial as (F − F0)/F0, where F0 is the baseline fluorescence signal averaged over a 1.57 s period immediately before the start of each trial. For all other analyses, we calculated deconvolved activity to avoid the spillover influence of slow-decaying calcium dynamics across task epochs (Extended Data Fig. 2j). To calculate deconvolved activity, Fneuropil_corrected from all trials was concatenated and ΔF/F0 (type 2) was calculated as (F − F0)/F0, where F0 is a running baseline calculated as the median fluorescence within a sliding window of 60 s. Subsequently, ΔF/F0 (type 2) was deconvolved using the OASIS algorithm48 (Extended Data Fig. 2j) after estimating the time constant with an auto-regressive model of order p = 1. Deconvolved activities were used for all the analyses in this study, except in Fig. 2d (top) and Extended Data Fig. 2j (left), where ΔF/F0 (type 1) traces are shown. Type 1 and type 2 ΔF/F0 differed only in their F0 calculation.
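The neuropil correction and the two baseline definitions can be illustrated with a short NumPy sketch. It is not the pipeline used in the study: the function names are hypothetical, the sliding window is assumed to be centred on each frame and truncated at the edges of the recording, each trial trace is assumed to begin with its pre-trial baseline frames, and the OASIS deconvolution step is not shown.

```python
import numpy as np

def neuropil_correct(f, f_neuropil, coeff=0.7):
    """F_neuropil_corrected(t) = F(t) - 0.7 x F_neuropil(t)."""
    return np.asarray(f, dtype=float) - coeff * np.asarray(f_neuropil, dtype=float)

def dff_trial_baseline(f_trial, n_baseline_frames):
    """Type 1: F0 is the mean of the pre-trial baseline frames of one trial."""
    f_trial = np.asarray(f_trial, dtype=float)
    f0 = f_trial[:n_baseline_frames].mean()
    return (f_trial - f0) / f0

def dff_running_median(f, window_frames):
    """Type 2: F0 is a running median over a sliding window (centred, assumed)."""
    f = np.asarray(f, dtype=float)
    half = window_frames // 2
    f0 = np.empty_like(f)
    for t in range(f.size):
        lo, hi = max(0, t - half), min(f.size, t + half + 1)
        f0[t] = np.median(f[lo:hi])
    return (f - f0) / f0
```

At the 6 Hz per-plane frame rate, a 60 s window would correspond to `window_frames=360`.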

To track the activity of the same neurons across days, spatial footprints of individual ROIs from the same FOVs were aligned across different imaging days using the CellReg pipeline47. This probabilistic algorithm computes the distributions of centroid distance and spatial correlation between neuronal pairs of the nearest neighbour and all other neighbours within a 10 µm distance (Extended Data Fig. 2g,h). Based on the bimodality between distributions (nearest neighbours versus other neighbours), the CellReg algorithm calculates the estimated false positive and false negative probabilities. By minimizing both estimated error rates for each pair of ROIs, this probabilistic algorithm identifies co-registered neurons and quantifies registration scores for these co-registered neurons (Extended Data Fig. 2i). If the mean squared errors of both the centroid distance and spatial correlation models are above 0.1 (a pre-determined hyperparameter), the CellReg algorithm generates an error and the FOV is considered a failure to find co-registered neurons across days. One mouse was removed from all subsequent neuronal data analyses due to failures to find matched neurons across days from all imaging sessions, primarily due to poor imaging window quality. Among co-registered neurons, only neurons with reliable responses in at least one imaging session (that is, Pearson correlation >0.5 between trial-averaged and trial-type-concatenated ΔF/F0 (type 1) peristimulus time histograms (PSTHs) calculated using the first versus second halves of the trials) were used for further analyses.
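The response-reliability criterion can be sketched as a split-half correlation. The code below is an illustration under stated assumptions, not the authors' implementation: the function name is hypothetical, trials are split in chronological order into a first and second half within each trial type, and each input array holds the ΔF/F0 (type 1) traces of one neuron for one trial type.

```python
import numpy as np

def split_half_reliability(trials_by_type):
    """Pearson correlation between first-half and second-half PSTHs.

    trials_by_type: list of arrays, one per trial type, each of shape
    (n_trials, n_timepoints). PSTHs are trial averages, concatenated
    across trial types before correlating.
    """
    first, second = [], []
    for trials in trials_by_type:
        trials = np.asarray(trials, dtype=float)
        half = trials.shape[0] // 2
        first.append(trials[:half].mean(axis=0))
        second.append(trials[half:].mean(axis=0))
    return np.corrcoef(np.concatenate(first), np.concatenate(second))[0, 1]
```

A neuron would be retained if this value exceeds 0.5 in at least one imaging session.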

In the experiment where we imaged the same FOV across multiple sessions in the same task context, we define the sessions as expert-early and expert-late sessions (Fig. 2). In cases where we imaged the same FOV twice over time, the 2 sessions were defined as expert-early and expert-late sessions accordingly. In cases where we imaged more than 2 sessions from the same FOV over time, the expert-early and expert-late sessions were defined for pairs of sessions. Specifically, for single neuron analyses (for example, Fig. 2e,k), we only compared the first and second imaging sessions to avoid inclusion of duplicate data points from the same session. These two sessions are defined as expert-early and expert-late sessions, respectively. For population level activity projection and decoding analyses (Fig. 2i,j), we included all the possible pairwise comparisons. For each pair, the two sessions used are defined as expert-early and expert-late sessions, respectively.

Two-photon imaging data analysis

Neurons were tested for significant trial-type selectivity during the sample, delay, and response epochs, using deconvolved activities from different trial types (non-paired two-tailed t-test, P < 0.001; correct trials only). We used the early sample epoch (first 0.83 s, 5 imaging frames), late delay epoch (last 0.67 s, 4 frames), and early response epoch (first 1.33 s, 8 frames) as the respective time windows for the statistical comparisons and all the following analyses (Extended Data Fig. 4a–c). To examine the stability of the single-neuron selectivity index, we first identified significantly selective neurons in each task epoch. We then determined each neuron’s preferred trial type (‘lick left’ versus ‘lick right’) using the earlier imaging session in task context 1. Next, the selectivity index was calculated as the difference in activity between trial types divided by their sum (anterior versus posterior pole position for sample epoch selectivity; lick left versus lick right for delay and response epoch selectivity; correct trials only). To define preferred trial types in earlier sessions, a portion of the trials was used for statistical tests to determine significant selectivity and the preferred trial type, then independent trials were used to calculate the selectivity index within the same session. We then calculated selectivity for the defined neurons in later sessions or across different task contexts.
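The cross-validated selectivity index can be illustrated as follows. This is a sketch under assumptions that are not stated in the text: trials are split into random halves, the index is taken as preferred minus non-preferred activity divided by their sum, and the function name is hypothetical. The significance test (t-test, P < 0.001) applied to the defining half is omitted here.

```python
import numpy as np

def cross_validated_selectivity(left, right, seed=0):
    """Define the preferred trial type on one half of trials, score on the other.

    left, right: per-trial activity of one neuron within an epoch window.
    Returns (preferred_label, selectivity_index).
    """
    rng = np.random.default_rng(seed)
    left = rng.permutation(np.asarray(left, dtype=float))
    right = rng.permutation(np.asarray(right, dtype=float))
    left_def, left_test = left[: left.size // 2], left[left.size // 2:]
    right_def, right_test = right[: right.size // 2], right[right.size // 2:]

    # Preferred trial type is decided on the defining half only.
    prefers_right = right_def.mean() > left_def.mean()
    pref, nonpref = (right_test, left_test) if prefers_right else (left_test, right_test)

    total = pref.mean() + nonpref.mean()
    index = (pref.mean() - nonpref.mean()) / total if total != 0 else float("nan")
    return ("lick right" if prefers_right else "lick left"), index
```

Because the preferred trial type is fixed from independent trials, the index can be negative when selectivity is unreliable, which avoids a positive bias from selecting and scoring on the same trials.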

For error trial analysis (Extended Data Fig. 2k,l), only the imaging sessions with more than ten error trials for each trial type were analysed. Selectivity was calculated as the difference in trial-averaged activity (deconvolved calcium activity) between instructed lick right and lick left trials, using correct and error trials separately. Selectivity was calculated during the early sample epoch, late delay epoch, and response epoch.

To analyse the encoding of trial types in ALM population activity, we built linear decoders that were weighted sums of ALM neuron activities to best differentiate trial types. We examined the encoding of four kinds of trial types: (1) anterior versus posterior pole position trials for stimulus encoding during the sample epoch in the tactile-instructed lick task; (2) low tone (2 kHz) versus high tone (10 kHz) for stimulus encoding during the sample epoch in the auditory-instructed lick task; (3) lick left versus lick right for lick direction encoding during the delay epoch; and (4) lick left versus lick right for lick direction encoding during the response epoch.

To build the linear decoder for a population of n ALM neurons, we found an n × 1 coding direction (CD) vector in the n-dimensional activity space that maximally separates response vectors in different trial types during defined task epochs: \({\bf{CD}}_{{\bf{Sample}}}\) for stimulus encoding during the sample epoch, \({\bf{CD}}_{{\bf{Delay}}}\) for lick direction encoding during the delay epoch, and \({\bf{CD}}_{{\bf{Response}}}\) for lick direction encoding during the response epoch. To estimate the CD vectors, we first computed \({\bf{CD}}_{t}\) at different time points as:

$${{\bf{CD}}}_{t}({\rm{tactile}}\,{\rm{stimulus}},{\rm{sample}}\,{\rm{epoch}})={\bar{{\bf{x}}}}_{{\bf{posterior\; pole}}}-{\bar{{\bf{x}}}}_{{\bf{anterior\; pole}}}\,{\rm{for}}\,{{\bf{CD}}}_{{\bf{Sample}}}$$

$${{\bf{CD}}}_{t}({\rm{auditory}}\,{\rm{stimulus}},{\rm{sample}}\,{\rm{epoch}})={\bar{{\bf{x}}}}_{{\bf{high\; tone}}}-{\bar{{\bf{x}}}}_{{\bf{low\; tone}}}\,{\rm{for}}\,{{\bf{CD}}}_{{\bf{Sample}}}$$

$${{\bf{CD}}}_{t}({\rm{lick}}\,{\rm{direction}},{\rm{delay}}\,{\rm{epoch}})={\bar{{\bf{x}}}}_{{\bf{lick\; right}}}-{\bar{{\bf{x}}}}_{{\bf{lick\; left}}}\,{\rm{for}}\,{{\bf{CD}}}_{{\bf{Delay}}}$$

$${{\bf{CD}}}_{t}({\rm{lick}}\,{\rm{direction}},{\rm{response}}\,{\rm{epoch}})={\bar{{\bf{x}}}}_{{\bf{lick\; right}}}-{\bar{{\bf{x}}}}_{{\bf{lick\; left}}}\,{\rm{for}}\,{{\bf{CD}}}_{{\bf{Response}}}$$

where \(\bar{{\bf{x}}}\) are n × 1 trial-averaged response vectors that describe the population response for each trial type at each time point, t, during the defined task epochs. Next, we averaged the \({\bf{CD}}_{t}\) vectors within the defined task epoch to separately estimate \({\bf{CD}}_{{\bf{Sample}}}\), \({\bf{CD}}_{{\bf{Delay}}}\), and \({\bf{CD}}_{{\bf{Response}}}\). These CD vectors were computed using 50% of trials, and the remaining trials from the same session or from different sessions were used for activity projections and decoding (Fig. 2g; correct trials only).
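The coding direction estimate and the projection of single-trial activity onto it can be sketched in NumPy. This is a minimal illustration, not the analysis code used in the study: the function names and the array layout (trials × neurons × time points) are assumptions, and the 50% split into fitting and held-out trials is left to the caller.

```python
import numpy as np

def coding_direction(trials_a, trials_b, window):
    """Average over `window` of the per-timepoint difference in trial means.

    trials_a, trials_b: arrays of shape (n_trials, n_neurons, n_timepoints),
    for example lick-right and lick-left trials (fitting half only).
    window: slice or index array selecting the epoch time points.
    Returns a vector of length n_neurons.
    """
    cd_t = trials_a.mean(axis=0) - trials_b.mean(axis=0)  # (n_neurons, n_timepoints)
    return cd_t[:, window].mean(axis=1)

def project(cd, trials):
    """CD^T x for every trial and time point; returns (n_trials, n_timepoints)."""
    return np.einsum("n,knt->kt", cd, trials)

# Toy example: 3 neurons, 5 time points, 4 trials per type.
right = np.full((4, 3, 5), 2.0)
left = np.full((4, 3, 5), 1.0)
cd = coding_direction(right, left, slice(1, 4))
projection = project(cd, right)
```

The same `cd` vector can be applied to held-out trials from a later session or a different task context, provided the neuron order is matched across sessions.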

To project the ALM population activity along \({\bf{CD}}_{{\bf{Sample}}}\), \({\bf{CD}}_{{\bf{Delay}}}\), and \({\bf{CD}}_{{\bf{Response}}}\), we computed the deconvolved activity for individual neurons and assembled their single-trial activity at each time point into population response vectors, x (n × 1 vectors for n neurons). The activity projections in Figs. 2–5 and Extended Data Figs. 3–5, 7 and 9 were obtained as \({{\bf{CD}}}_{{\bf{Sample}}}^{{\rm{T}}}{\bf{x}}\), \({{\bf{CD}}}_{{\bf{Delay}}}^{{\rm{T}}}{\bf{x}}\), and \({{\bf{CD}}}_{{\bf{Response}}}^{{\rm{T}}}{\bf{x}}\).

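To decode trial types using ALM population activity projected onto \({\bf{CD}}_{{\bf{Sample}}}\), \({\bf{CD}}_{{\bf{Delay}}}\) and \({\bf{CD}}_{{\bf{Response}}}\) (Figs. 2–5 and Extended Data Figs. 4, 5, 7 and 9), we calculated ALM activity projections (\({{\bf{CD}}}_{{\bf{Sample}}}^{{\rm{T}}}{\bf{x}}\), \({{\bf{CD}}}_{{\bf{Delay}}}^{{\rm{T}}}{\bf{x}}\) and \({{\bf{CD}}}_{{\bf{Response}}}^{{\rm{T}}}{\bf{x}}\)) within defined time windows and computed a decision boundary (DB) to best separate different trial types: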

$${\rm{DB}}({\rm{tactile}}\,{\rm{stimulus}},{\rm{sample}}\,{\rm{epoch}})=\frac{\langle {{{{\bf{CD}}}_{{\bf{Sample}}}}^{{\rm{T}}}{\bf{x}}}_{{\bf{posterior\; pole}}}\rangle /{\sigma }_{{\rm{posterior}}\,{\rm{pole}}}^{2}+\langle {{{{\bf{CD}}}_{{\bf{Sample}}}}^{{\rm{T}}}{\bf{x}}}_{{\bf{anterior\; pole}}}\rangle /{\sigma }_{{\rm{anterior}}\,{\rm{pole}}}^{2}}{{1/\sigma }_{{\rm{posterior}}\,{\rm{pole}}}^{2}+{1/\sigma }_{{\rm{anterior}}\,{\rm{pole}}}^{2}}$$

$${\rm{DB}}({\rm{auditory}}\,{\rm{stimulus}},{\rm{sample}}\,{\rm{epoch}})=\frac{\langle {{{{\bf{CD}}}_{{\bf{Sample}}}}^{{\rm{T}}}{\bf{x}}}_{{\bf{high\; tone}}}\rangle /{\sigma }_{{\rm{high}}\,{\rm{tone}}}^{2}+\langle {{{{\bf{CD}}}_{{\bf{Sample}}}}^{{\rm{T}}}{\bf{x}}}_{{\bf{low\; tone}}}\rangle /{\sigma }_{{\rm{low}}\,{\rm{tone}}}^{2}}{{1/\sigma }_{{\rm{high}}\,{\rm{tone}}}^{2}+{1/\sigma }_{{\rm{low}}\,{\rm{tone}}}^{2}}$$

$${\rm{DB}}({\rm{lick}}\,{\rm{direction}},{\rm{delay}}\,{\rm{epoch}})=\frac{\langle {{{{\bf{CD}}}_{{\bf{Delay}}}}^{{\rm{T}}}{\bf{x}}}_{{\bf{lick\; right}}}\rangle /{\sigma }_{{\rm{lick}}\,{\rm{right}}}^{2}+\langle {{{{\bf{CD}}}_{{\bf{Delay}}}}^{{\rm{T}}}{\bf{x}}}_{{\bf{lick\; left}}}\rangle /{\sigma }_{{\rm{lick}}\,{\rm{left}}}^{2}}{{1/\sigma }_{{\rm{lick}}\,{\rm{right}}}^{2}+{1/\sigma }_{{\rm{lick}}\,{\rm{left}}}^{2}}$$

$${\rm{DB}}({\rm{lick}}\,{\rm{direction}},{\rm{response}}\,{\rm{epoch}})=\frac{\langle {{{{\bf{CD}}}_{{\bf{Response}}}}^{{\rm{T}}}{\bf{x}}}_{{\bf{lick\; right}}}\rangle /{\sigma }_{{\rm{lick}}\,{\rm{right}}}^{2}+\langle {{{{\bf{CD}}}_{{\bf{Response}}}}^{{\rm{T}}}{\bf{x}}}_{{\bf{lick\; left}}}\rangle /{\sigma }_{{\rm{lick}}\,{\rm{left}}}^{2}}{{1/\sigma }_{{\rm{lick}}\,{\rm{right}}}^{2}+{1/\sigma }_{{\rm{lick}}\,{\rm{left}}}^{2}}$$

Here, \({\sigma }^{2}\) is the variance of the activity projection \({{\bf{CD}}}^{{\rm{T}}}{\bf{x}}\) within each trial type. Decision boundaries were computed using the same trials used to compute the CD vectors, and independent trials were used to predict trial types. To examine decoding performance across task contexts, we restricted the analysis to decoders with an accuracy of >0.7 within the session in which they were trained (cross-validated performance). This is because a decoder that exhibits low decoding performance to begin with will generally perform poorly in other sessions due to poor training of the decoder.
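The decision boundary is an inverse-variance-weighted average of the two class means, so it sits closer to the trial type with the tighter distribution of projections. The sketch below illustrates this and the decoding step; the function names are hypothetical, the population variance (NumPy default, `ddof=0`) is an assumption, and class `a` is assumed to be the trial type with the larger mean projection (for example lick right when the CD is defined as right minus left).

```python
import numpy as np

def decision_boundary(proj_a, proj_b):
    """Inverse-variance-weighted mean of the two class-mean projections."""
    proj_a = np.asarray(proj_a, dtype=float)
    proj_b = np.asarray(proj_b, dtype=float)
    w_a = 1.0 / np.var(proj_a)
    w_b = 1.0 / np.var(proj_b)
    return (proj_a.mean() * w_a + proj_b.mean() * w_b) / (w_a + w_b)

def decoding_accuracy(test_a, test_b, boundary):
    """Fraction of held-out trials falling on the expected side of the boundary."""
    test_a = np.asarray(test_a, dtype=float)
    test_b = np.asarray(test_b, dtype=float)
    n_correct = np.sum(test_a > boundary) + np.sum(test_b <= boundary)
    return n_correct / (test_a.size + test_b.size)

# Fit on one set of trials, evaluate on independent trials.
boundary = decision_boundary([3.0, 5.0], [-2.0, 2.0])
accuracy = decoding_accuracy([4.0, 5.0, 3.0], [0.0, 1.0, 4.0], boundary)
```

In this toy example the class means are 4 and 0 with variances 1 and 4, so the boundary falls at 3.2 rather than at the midpoint of 2.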

To analyse activity changes along other dimensions of activity space across task contexts, we defined a ‘uniform shift (US) axis’7 using trial-type-averaged activity:

$${{\bf{US}}}_{{\bf{context\; 1}}\to {\bf{2}}}=\left(\frac{{\bar{{\bf{R}}}}_{{\bf{context\; 2}}}+{\bar{{\bf{L}}}}_{{\bf{context\; 2}}}}{2}\right)-\left(\frac{{\bar{{\bf{R}}}}_{{\bf{context\; 1}}}+{\bar{{\bf{L}}}}_{{\bf{context\; 1}}}}{2}\right)$$

where \(\bar{{\bf{R}}}\) and \(\bar{{\bf{L}}}\) are n × 1 response vectors that describe the trial-averaged population response for lick right and lick left trials, respectively, at the end of the delay epoch. We separately calculated US axes for each task context change, that is, \({{\bf{US}}}_{1\to 2}\) for task context 1→2, \({{\bf{US}}}_{2\to 1'}\) for task context 2→1′ and \({{\bf{US}}}_{1'\to 2'}\) for task context 1′→2′ (Extended Data Fig. 8b). For activity projections (Extended Data Fig. 8c), the US axes were further orthogonalized to the CD vectors using the Gram–Schmidt process to capture activity changes along dimensions of activity space that were not selective for lick direction (‘movement-irrelevant subspace’). We computed the US vectors using 50% of the trials, and the remaining 50% of the trials were used for activity projections (Extended Data Fig. 8c). The dot products in Extended Data Fig. 8d were calculated without any orthogonalization.
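The US axis and its orthogonalization can be sketched as follows. This is an illustrative NumPy version, not the analysis code used in the study: the function names are hypothetical, and the set of CD vectors passed as `basis` is left to the caller.

```python
import numpy as np

def uniform_shift_axis(R1, L1, R2, L2):
    """US axis: change in the trial-type-averaged mean from context 1 to 2.

    Each argument is an n-element vector of trial-averaged activity at the
    end of the delay epoch (R = lick right, L = lick left).
    """
    return (R2 + L2) / 2.0 - (R1 + L1) / 2.0

def orthogonalize(v, basis):
    """Gram-Schmidt: remove from v its components along the vectors in basis."""
    ortho = []
    for b in basis:
        b = np.asarray(b, dtype=float).copy()
        for q in ortho:              # orthogonalize the basis vectors first
            b -= (b @ q) * q
        norm = np.linalg.norm(b)
        if norm > 1e-12:             # skip linearly dependent vectors
            ortho.append(b / norm)
    v = np.asarray(v, dtype=float).copy()
    for q in ortho:                  # then project them out of v
        v -= (v @ q) * q
    return v
```

After orthogonalization, the dot product between the US axis and every supplied CD vector is zero, so projections onto it cannot reflect lick-direction selectivity along those vectors.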

Modelling

The instructed directional licking task with a delay epoch was modelled with simulations lasting for two seconds. The first second of the simulation was the sample epoch, during which trial-specific external inputs were provided, and the last second was the delay epoch, in which the inputs were removed. The coding direction, \({{\bf{CD}}}_{{\bf{Delay}}}\), was calculated as the difference between network activity on lick left and lick right trials at the end of the delay epoch (\(t=0\)), similar to the neural data. The trial type was always defined by instructed lick direction in different task contexts (across contingency reversals).

Recurrent neural networks

RNNs consisted of 50 units with dynamics governed by the equations

$$\frac{\tau {\rm{d}}{r}_{i}(t)}{{\rm{d}}t}=-{r}_{i}(t)+f\left(\sum _{j}{W}_{i,j}{r}_{j}(t)+{I}_{i}^{{\rm{TT}}}(t)\right)$$

where \({r}_{i}(t)\) is the spike rate of neuron i, the synaptic time constant \(\tau \) was set equal to 200 ms, \({W}_{i,j}\) is the synaptic strength from neuron j to neuron i, \({I}_{i}^{{\rm{TT}}}(t)\) is the trial-type (TT)-dependent external input to neuron i, and \(f(x)=\tanh (x)\) is the neural activation function.
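For illustration, the rate equation above can be integrated numerically with a forward-Euler scheme. The sketch below is one possible discretization; the 1-ms time step, the function name and the zero initial condition are assumptions, as the integration method is not specified here.

```python
import numpy as np

def simulate_rnn(W, inputs, tau=0.2, dt=0.001, r0=None):
    """Forward-Euler integration of tau * dr/dt = -r + tanh(W r + I(t)).

    W:      (n_units, n_units) recurrent weights, W[i, j] from unit j to unit i
    inputs: (n_steps, n_units) external input I(t) at each time step
    tau:    time constant in seconds (200 ms, as in the text)
    dt:     integration step in seconds (assumed value)
    """
    n_steps, n_units = inputs.shape
    r = np.zeros(n_units) if r0 is None else np.asarray(r0, dtype=float).copy()
    rates = np.zeros((n_steps, n_units))
    for t in range(n_steps):
        drive = np.tanh(W @ r + inputs[t])
        r = r + (dt / tau) * (-r + drive)
        rates[t] = r
    return rates
```

A two-second trial would then correspond to 2,000 steps, with non-zero rows of `inputs` during the first 1,000 steps (sample epoch) and zeros afterwards (delay epoch).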

The connection matrix W was randomly initialized from a Gaussian distribution. The network was scaled to have a maximum eigenvalue equal to 0.9. To generate persistent activity, networks must have an eigenvalue greater than or equal to one. Networks initialized with eigenvalues greater than one tended to learn the task with high-dimensional persistent activity, inconsistent with ALM dynamics14. Initializing with eigenvalues less than one tended to produce lower dimensional persistent activity.
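A minimal sketch of this initialization is given below. It interprets ‘maximum eigenvalue’ as the largest eigenvalue modulus (spectral radius), which is an assumption; the s.d. of the initial Gaussian and the function name are likewise illustrative, and the s.d. does not affect the result after rescaling.

```python
import numpy as np

def init_recurrent_weights(n_units=50, target_radius=0.9, seed=0):
    """Gaussian random matrix rescaled so its largest |eigenvalue| is target_radius."""
    rng = np.random.default_rng(seed)
    W = rng.normal(0.0, 1.0 / np.sqrt(n_units), size=(n_units, n_units))
    radius = np.max(np.abs(np.linalg.eigvals(W)))
    # Eigenvalues scale linearly with the matrix, so one rescaling suffices.
    return W * (target_radius / radius)
```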

External input strengths \({I}_{i}^{{\rm{TT}}}\) were drawn from a Gaussian distribution with mean equal to zero and s.d. of 0.3. Two distinct input vectors were used for anterior \({I}_{i}^{A}\) and posterior \({I}_{i}^{P}\) pole position trials.

Behavioural readout B was given by the linear projections \(B={\sum }_{i}{r}_{i}(t=0){W}_{{\rm{out}}}^{R}-{\sum }_{i}{r}_{i}(t=0){W}_{{\rm{out}}}^{L}\), where \(t=0\) is the time at the end of the delay epoch, \({W}_{{\rm{out}}}^{R}\) and \({W}_{{\rm{out}}}^{L}\) are Gaussian random readout vectors corresponding to rightward and leftward movements, respectively.

RNNs were trained using backpropagation through time (BPTT). The input (\({I}_{i}^{{\rm{TT}}}\)) and readout weights (\({W}_{{\rm{out}}}^{R}\) and \({W}_{{\rm{out}}}^{L}\)) were fixed and only the recurrent weights \({W}_{i,j}\) internal to the RNN were trained. For each trial type, activity along the correct readout direction was trained to match a linear ramp of activity starting at the beginning of the sample epoch and the incorrect readout direction was trained to have zero activation. For task context 1, presentation of \({I}_{i}^{A}\) was associated with ramping along \({W}_{{\rm{out}}}^{L}\) and zero activation along \({W}_{{\rm{out}}}^{R}\); presentation of \({I}_{i}^{P}\) was associated with the opposite behaviour. These associations were reversed for task context 2. Networks were trained for 100 iterations.

In the RNNs, the behaviour readout relied on many units (dense \({W}_{{\rm{out}}}^{R}\) and \({W}_{{\rm{out}}}^{L}\)). Because only 2 units in the AFF networks contributed to behaviour output, this difference in readout may affect how these networks learned to produce reversed output. We therefore also tested RNNs in which we fixed the behaviour readout to only 2 units like the AFF network (sparse \({W}_{{\rm{out}}}^{R}\) and \({W}_{{\rm{out}}}^{L}\)), but all results remained unchanged.

Amplifying feedforward network

ALM circuitry contains an AFF circuit motif54. The AFF network is a recurrent circuit in which preparatory activity during the delay epoch flows through a sequence of activity states. Each activity state can be modelled as a layer within a feedforward network. In addition, the late layers in the network are connected to early layers through feedback connections. Here we develop a framework for training AFF networks to generate choice-selective persistent activity.

Before detailing the learning rules used for training AFF networks, we first introduce several features that make AFF networks advantageous for training. Training neural networks requires pathways linking input units to output units for computation, and pathways linking outputs to inputs for learning. In the simplest cases, output to input feedback may interfere with the input to output computations. AFF networks, and non-normal networks in general, do not generate reverberating feedback. For this reason, it is possible to construct AFF networks that bidirectionally link inputs to outputs through separate channels that do not interfere with each other.

AFF (also commonly referred to as non-normal) networks are constructed by applying orthonormal transformations to purely feedforward networks. Orthonormal transformations to feedforward networks serve two useful anatomical purposes: (1) they form feedback connections from late layers to early layers; and (2) they form stabilizing excitatory/inhibitory connections to eliminate any reverberation that may result from the newly formed feedback connections. In this model, we use the feedback connections from late layers to early layers to convey performance feedback signals allowing the AFF network to learn via error backpropagation.

We first constructed a purely feedforward network with 4 layers referred to as input (n; 30 units), hidden layer 1 (h1; 200 units), hidden layer 2 (h2; 5 units) and output (o; 2 units) (Extended Data Fig. 11). Trial-type (TT)-dependent external inputs, \({I}_{i}^{{\rm{TT}}}(t)\), were provided only to the input layer. Feedforward connection matrices (\({W}_{i,j}^{n,{\rm{h1}}},{W}_{i,j}^{{\rm{h1,h2}}}\) and \({W}_{i,j}^{{\rm{h2}},o}\)) conveyed these inputs to downstream layers and were initialized from a uniform positive distribution. Next, we added feedback connections from o to h2 (\({W}_{j,i}^{o,{\rm{h2}}}\)) and from h2 to h1 (\({W}_{j,i}^{{\rm{h2,h1}}}\)) to provide performance feedback for training the feedforward connections. Feedback connections were matched to feedforward connections so that \({W}_{j,i}^{o,{\rm{h2}}}={W}_{i,j}^{{\rm{h2}},o}\). These feedback connections provide scaffolding to precisely implement error backpropagation to train feedforward connections. However, the presence of feedback connections in the circuit will introduce feedback to the network that will interfere with its feedforward computations.

To cancel out the reverberations caused by this feedback we incorporated additional stabilization hidden layers s1 (200 units) and s2 (5 units) (Extended Data Fig. 11). Each hidden unit in layer h1 is matched with a stabilizing neuron in the stabilization layer s1 which receives the same feedback connections as its paired excitatory neuron and projects inhibitory connections of the same strength as its excitatory partner. Similarly, each neuron in h2 has a corresponding unit in s2. Mathematically this relationship is written as

$${W}_{i,j}^{({\rm{s1,h2}})}=-{W}_{i,j}^{({\rm{h1,h2}})}\,{\rm{and}}\,{W}_{i,j}^{({\rm{s2}},o)}=-{W}_{i,j}^{({\rm{h2}},o)}$$

and

$${W}_{j,i}^{({\rm{h2,s1}})}={W}_{j,i}^{({\rm{h2,h1}})}\,{\rm{and}}\,{W}_{j,i}^{(o,{\rm{s2}})}={W}_{j,i}^{(o,{\rm{h2}})}$$

Because of the precisely balanced excitation and inhibition, this recurrent network is non-normal; all eigenvalues are equal to zero. This non-normal network has two independent pathways, one linking the input layer to the output layer, useful for computation; and the other linking the output layer to the input layer, useful for learning.
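The zero-eigenvalue property can be checked numerically on a small example. The sketch below assembles the full connectivity matrix from the blocks described above, using a `W[post, pre]` block convention, reduced layer sizes and a uniform(0, 1) initialization; these choices, the function name and the use of the transposed forward weights for the h2-to-h1 feedback are assumptions made for illustration. Because numerically computed eigenvalues of such matrices can be imprecise, the check uses the equivalent statement that the matrix is nilpotent.

```python
import numpy as np

def build_aff_matrix(n_in=3, n_h1=4, n_h2=2, n_out=2, seed=0):
    """Assemble the AFF connectivity (rows = postsynaptic, columns = presynaptic)."""
    rng = np.random.default_rng(seed)
    A1 = rng.uniform(0.0, 1.0, (n_h1, n_in))    # n  -> h1
    A2 = rng.uniform(0.0, 1.0, (n_h2, n_h1))    # h1 -> h2
    A3 = rng.uniform(0.0, 1.0, (n_out, n_h2))   # h2 -> o
    sizes = {"n": n_in, "h1": n_h1, "h2": n_h2, "o": n_out, "s1": n_h1, "s2": n_h2}
    idx, start = {}, 0
    for name, size in sizes.items():
        idx[name] = slice(start, start + size)
        start += size
    W = np.zeros((start, start))
    # Feedforward pathway
    W[idx["h1"], idx["n"]] = A1
    W[idx["h2"], idx["h1"]] = A2
    W[idx["o"], idx["h2"]] = A3
    # Feedback connections matched to the feedforward weights (transposes)
    W[idx["h2"], idx["o"]] = A3.T
    W[idx["h1"], idx["h2"]] = A2.T
    # Stabilizing units receive the same feedback as their partners ...
    W[idx["s2"], idx["o"]] = A3.T
    W[idx["s1"], idx["h2"]] = A2.T
    # ... and project sign-inverted copies of their partners' forward weights
    W[idx["h2"], idx["s1"]] = -A2
    W[idx["o"], idx["s2"]] = -A3
    return W, idx

def max_abs_matrix_power(W, k):
    """Largest absolute entry of W**k; near zero if W is nilpotent of index <= k."""
    return np.max(np.abs(np.linalg.matrix_power(W, k)))
```

For this construction, `max_abs_matrix_power(W, 7)` should be zero up to floating-point error, which holds only if every eigenvalue of `W` is zero.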

The network is trained using error backpropagation; an error signal is computed and then sent back into each unit in the output layer. This error signal is conveyed to the early layers by the feedback connections. The stabilizing network ensures that this error signal does not reverberate. The backpropagated signals in neuron \(i\) of the hidden layers h1 and h2 are thus given by the equations

$$\tau \frac{{\rm{d}}{B}_{i}^{{\rm{h2}}}(t)}{{\rm{d}}t}=-{B}_{i}^{{\rm{h2}}}(t)+\sum _{j}{e}_{j}(t){W}_{j,i}^{o,{\rm{h2}}}$$

$$\tau \frac{{\rm{d}}{B}_{i}^{{\rm{h1}}}(t)}{{\rm{d}}t}=-{B}_{i}^{{\rm{h1}}}(t)+\sum _{j}{B}_{j}^{{\rm{h2}}}(t){W}_{j,i}^{{\rm{h2,h1}}}$$
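These two equations describe a cascade of leaky integrators driven by the output error. A forward-Euler sketch is shown below; the time step, the 200-ms value of \(\tau \) for the AFF network and the function name are assumptions, and the feedback matrices are indexed as in the equations (first index = source unit, second index = target unit).

```python
import numpy as np

def backpropagate_error(errors, W_o_h2, W_h2_h1, tau=0.2, dt=0.001):
    """Integrate the backpropagated signals B^h2(t) and B^h1(t).

    errors:  (n_steps, n_out) error e_j(t) at the output units
    W_o_h2:  (n_out, n_h2) feedback weights, W_o_h2[j, i] from o unit j to h2 unit i
    W_h2_h1: (n_h2, n_h1) feedback weights, W_h2_h1[j, i] from h2 unit j to h1 unit i
    """
    n_steps = errors.shape[0]
    B_h2 = np.zeros(W_o_h2.shape[1])
    B_h1 = np.zeros(W_h2_h1.shape[1])
    trace_h2 = np.zeros((n_steps, B_h2.size))
    trace_h1 = np.zeros((n_steps, B_h1.size))
    for t in range(n_steps):
        # Both derivatives use the values from the previous step
        dB_h2 = -B_h2 + errors[t] @ W_o_h2
        dB_h1 = -B_h1 + B_h2 @ W_h2_h1
        B_h2 = B_h2 + (dt / tau) * dB_h2
        B_h1 = B_h1 + (dt / tau) * dB_h1
        trace_h2[t] = B_h2
        trace_h1[t] = B_h1
    return trace_h2, trace_h1
```

For a constant error, the signals settle to the values expected from static backpropagation: the error multiplied by the feedback weights, layer by layer.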

As in error backpropagation, feedforward weights (that is, \({W}_{i,j}^{{\rm{h1,h2}}}\)) are updated by taking the product of the forward pass activity and the backward pass activity. For example, connections from neuron i in layer h1 onto neuron j in layer h2 are updated according to the rule

$$\Delta {W}_{i,j}^{({\rm{h1,h2}})}={\sum }_{t}{r}_{i}^{{\rm{h1}}}(t){B}_{j}^{{\rm{h2}}}(t)\,{\rm{and}}\,\Delta {W}_{i,j}^{({\rm{h2}},o)}={\sum }_{t}{r}_{i}^{{\rm{h2}}}(t){B}_{j}^{o}(t)$$

This rule is applied to all feedforward connections (that is, \(n\to {\rm{h1}}\), \({\rm{h1}}\to {\rm{h2}}\) and \({\rm{h2}}\to o\)). Changing the feedforward weights will necessarily disrupt the precise balance in the network. To maintain stability, the stabilizing weights must be updated to precisely cancel the changes to the feedforward weights

$$\Delta {W}_{i,j}^{({\rm{s1,h2}})}=-\Delta {W}_{i,j}^{({\rm{h1,h2}})}$$

Compensatory weight changes based on this equation are applied to all connections in the stabilization layers (that is, \({\rm{s1}}\to {\rm{h2}}\) and \({\rm{s2}}\to o\)).
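The update rule and its compensatory counterpart can be sketched as follows for one pair of layers. The learning-rate argument `lr` and the function names are hypothetical additions for illustration; the rule in the text corresponds to `lr = 1`.

```python
import numpy as np

def weight_update(pre_rates, post_feedback, lr=1.0):
    """Delta W[i, j] = lr * sum_t pre_rates[t, i] * post_feedback[t, j].

    pre_rates:     (n_steps, n_pre) forward-pass activity r_i(t)
    post_feedback: (n_steps, n_post) backward-pass signal B_j(t)
    """
    return lr * pre_rates.T @ post_feedback

def apply_balanced_update(W_ff, W_stab, pre_rates, post_feedback, lr=1.0):
    """Update feedforward weights and apply the opposite change to the
    stabilizing weights, so that W_ff + W_stab is left unchanged."""
    dW = weight_update(pre_rates, post_feedback, lr)
    return W_ff + dW, W_stab - dW
```

If the stabilizing weights start as the exact negative of the feedforward weights, they remain so after every update, which is the condition that preserves the balance described above.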

The AFF network was trained to form the same associations as the RNN. Unlike the RNN, the AFF utilized a linear neuronal activation (\(f(x)=x\)) so that dynamics are governed by the equation

$$\frac{\tau {\rm{d}}{r}_{i}(t)}{{\rm{d}}t}=-{r}_{i}(t)+\sum _{j}{W}_{i,j}{r}_{j}(t)+{I}_{i}^{{\rm{TT}}}(t)$$

Additionally, because the AFF naturally generates ramping signals54, the output units were not trained to match a ramping signal at all time points, but rather trained to be activated at a specific level at the end of the delay. For example, the target for the lick right output unit (TR) was TR(t = 0) = 6 on posterior trials and TR(t = 0) = 0 on anterior trials.

Analysis of neural dynamics within RNN and AFF networks

For each network, we calculated the selectivity of each unit as the activity difference between the lick right and lick left trials in each task context. We calculated eigenvectors of the network selectivity matrix using singular value decomposition (SVD). The data for the SVD were an n × t matrix containing the selectivity of n units over t time bins (selectivity from task contexts 1 and 2 was concatenated). Three vectors usually captured most of the network activity variance across both task contexts (Extended Data Fig. 11f). We then rotated the 3 eigenvectors so that the first vector was aligned to the dimension that maximized the difference in network selectivity matrix between task contexts 1 and 2. Network activity projected on the first vector was correlated with the network input across task contexts, thus referred to as the stimulus mode (Extended Data Fig. 12a,b). Network activity projected on the second vector was correlated with the network output across task contexts and exhibited ramping activity during the delay epoch, thus referred to as the output mode (Extended Data Fig. 12a,b).
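The SVD step can be sketched as below. This illustrative version covers only the decomposition and the fraction of (uncentred) variance captured by each vector; the subsequent rotation of the three vectors is not implemented, and the function name is hypothetical.

```python
import numpy as np

def selectivity_modes(sel_ctx1, sel_ctx2, n_modes=3):
    """Leading left singular vectors of the concatenated selectivity matrix.

    sel_ctx1, sel_ctx2: (n_units, n_time) arrays of lick right minus
    lick left activity in task contexts 1 and 2.
    Returns (modes, var_explained): modes is (n_units, n_modes);
    var_explained is the fraction of summed squared selectivity per mode.
    """
    X = np.concatenate([sel_ctx1, sel_ctx2], axis=1)   # concatenate over time
    U, S, _ = np.linalg.svd(X, full_matrices=False)
    var_explained = S ** 2 / np.sum(S ** 2)
    return U[:, :n_modes], var_explained[:n_modes]
```

Projecting the selectivity matrix onto a column of `modes` (for example, `modes[:, 0] @ sel_ctx1`) gives the time course of network selectivity along that vector.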

To examine the \({{\bf{CD}}}_{{\bf{Delay}}}\) reorganization across task contexts as a function of stimulus mode strength (Extended Data Fig. 12c), we summed the network activity projected on the stimulus mode across time. This activity strength was normalized to the mean activity of each network to enable comparisons across different networks.

Statistics and reproducibility

The sample sizes were similar to sample sizes used in the field: for behaviour and two-photon calcium imaging, three mice or more per condition. No statistical methods were used to determine sample size. All key results were replicated in multiple mice. Mice were allocated into experimental groups according to their strain or by experimenter. Unless stated otherwise, the investigators were not blinded to mouse group allocation during experiments and outcome assessment. Trial types were randomly determined by a computer program. Statistical comparisons using t-tests and other statistical tests are described above. All statistics are two-sided unless otherwise noted. We used Pearson’s correlation for the linear regression. Error bars indicate mean ± s.e.m. unless noted otherwise. Representative images in Fig. 2c and Extended Data Fig. 2a,c,d were reproduced across all FOVs (n = 78 fields of view, 14 mice).

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.