Awad, E. et al. The moral machine experiment. Nature 563, 59–64 (2018).

Hendrycks, D. et al. Aligning AI with shared human values. Preprint at https://doi.org/10.48550/arXiv.2008.02275 (2020). This paper introduces the influential ETHICS dataset, a benchmark designed to assess and improve the ability of LLMs to predict human moral judgments across the concepts of justice, well-being, duties, virtues and commonsense morality.

Askell, A. et al. A general language assistant as a laboratory for alignment. Preprint at https://doi.org/10.48550/arXiv.2112.00861 (2021).

Jiang, L. et al. Can machines learn morality? The Delphi experiment. Preprint at https://doi.org/10.48550/arXiv.2110.07574 (2021).

Jin, Z. et al. When to make exceptions: exploring language models as accounts of human moral judgment. Adv. Neural Inf. Process. Syst. 35, 28458–28473 (2022).

Schramowski, P., Turan, C., Jentzsch, S., Rothkopf, C. & Kersting, K. The moral choice machine. Front. Artif. Intell. 3, 36 (2020).

Simmons, G. Moral mimicry: large language models produce moral rationalizations tailored to political identity. Proc. 61st Annual Meeting of the Association for Computational Linguistics Student Research Workshop 282–297 (Association for Computational Linguistics, 2023).

Rao, A. S., Khandelwal, A., Tanmay, K., Agarwal, U. & Choudhury, M. Ethical reasoning over moral alignment: a case and framework for in-context ethical policies in LLMs. In Findings of the Association for Computational Linguistics: EMNLP 2023 13370–13388 (2023).

Pan, A. et al. Do the rewards justify the means? Measuring trade-offs between rewards and ethical behavior in the Machiavelli benchmark. In Proc. International Conference on Machine Learning 26837–26867 (PMLR, 2023).

Momen, A. et al. Trusting the moral judgments of a robot: perceived moral competence and human likeness of a GPT-3 enabled AI. In Proc. 56th Hawaii International Conference on System Sciences (2023).

Scherrer, N., Shi, C., Feder, A. & Blei, D. Evaluating the moral beliefs encoded in LLMs. Adv. Neural Inf. Process. Syst. 36, 51778–51809 (2024).

Nie, A. et al. MoCa: measuring human-language model alignment on causal and moral judgment tasks. Adv. Neural Inf. Process. Syst. 36, 78360–78393 (2023).

Liu, R., Sumers, T. R., Dasgupta, I. & Griffiths, T. L. How do large language models navigate conflicts between honesty and helpfulness? Preprint at https://doi.org/10.48550/arXiv.2402.07282 (2024).

Jiang, L. et al. Investigating machine moral judgment through the Delphi experiment. Nat. Mach. Intell. https://doi.org/10.1038/s42256-024-00969-6 (2025).

Kilov, D., Hendy, C., Guyot, S. Y., Snoswell, A. J. & Lazar, S. Discerning what matters: a multi-dimensional assessment of moral competence in LLMs. Preprint at https://doi.org/10.48550/arXiv.2506.13082 (2025).

Ma, X., Mishra, S., Beirami, A., Beutel, A. & Chen, J. Let’s do a thought experiment: using counterfactuals to improve moral reasoning. Preprint at https://doi.org/10.48550/arXiv.2306.14308 (2023). This paper introduces a prompting framework to enhance LLM performance on a moral scenarios evaluation.

Aharoni, E. et al. Attributions toward artificial agents in a modified moral Turing test. Sci. Rep. 14, 8458 (2024). This paper finds that adult participants perceive instances of LLM moral reasoning as superior in quality to instances of human moral reasoning.

Dillion, D., Mondal, D., Tandon, N. & Gray, K. AI language model rivals expert ethicist in perceived moral expertise. Sci. Rep. 15, 4084 (2025). This paper finds that adult participants perceive GPT models as surpassing both a representative sample of Americans and a professional ethicist in delivering moral justifications and advice.

Mikhail, J. Elements of Moral Cognition: Rawls’ Linguistic Analogy and the Cognitive Science of Moral and Legal Judgment (Cambridge Univ. Press, 2011).

Talbert, M. Moral competence, moral blame, and protest. J. Ethics 16, 89–109 (2012).

Sahota, N. How AI companions are redefining human relationships in the digital age. Forbes https://www.forbes.com/sites/neilsahota/2024/07/18/how-ai-companions-are-redefining-human-relationships-in-the-digital-age/ (18 July 2024).

Spytska, L. The use of artificial intelligence in psychotherapy: development of intelligent therapeutic systems. BMC Psychol. 13, 175 (2025).

Wilhelm, T. I., Roos, J. & Kaczmarczyk, R. Large language models for therapy recommendations across 3 clinical specialties: comparative study. J. Med. Internet Res. 25, e49324 (2023).

Berg, J. & Gmyrek, P. Automation hits the knowledge worker: ChatGPT and the future of work. In Proc. UN Multi-Stakeholder Forum on Science, Technology and Innovation for the SDGs (STI Forum) (2023).

Gabriel, I. et al. The ethics of advanced AI assistants. Preprint at https://doi.org/10.48550/arXiv.2404.16244 (2024). This series of papers analyses the opportunities and ethical and societal risks posed by advanced AI assistants.

Deng, Z. et al. AI agents under threat: a survey of key security challenges and future pathways. ACM Comput. Surv. 57, 1–36 (2025).

Google DeepMind. Our Vision for Building a Universal AI Assistant https://blog.google/technology/google-deepmind/gemini-universal-ai-assistant/#live-capabilities (Google, 2025).

Fang, C. M. et al. How AI and human behaviors shape psychosocial effects of chatbot use: a longitudinal randomized controlled study. Preprint at https://doi.org/10.48550/arXiv.2503.17473 (2025).

Krügel, S., Ostermaier, A. & Uhl, M. ChatGPT’s inconsistent moral advice influences users’ judgment. Sci. Rep. 13, 20382 (2023).

Krügel, S., Ostermaier, A. & Uhl, M. ChatGPT’s advice drives moral judgments with or without justification. Preprint at https://doi.org/10.48550/arXiv.2501.01897 (2025).

Bechtel, W. Mechanisms in cognitive psychology: what are the operations? Philos. Sci. 75, 983–994 (2008).

Vaswani, A. et al. Attention is all you need. Adv. Neural Inf. Process. Syst. https://proceedings.neurips.cc/paper_files/paper/2017/file/3f5ee243547dee91fbd053c1c4a845aa-Paper.pdf (2017).

OpenAI. Introducing OpenAI o1-preview https://openai.com/index/introducing-openai-o1-preview/ (OpenAI, 2024).

Google DeepMind. Gemini Flash Thinking https://deepmind.google/technologies/gemini/flash-thinking/ (Google, 2025).

Guo, D. et al. DeepSeek-R1 incentivizes reasoning in LLMs through reinforcement learning. Nature 645, 633–638 (2025).

Mitchell, M. Artificial intelligence learns to reason. Science 387, 6740 (2025).

Shojaee, P. et al. The Illusion of Thinking: Understanding the Strengths and Limitations of Reasoning Models via the Lens of Problem Complexity https://machinelearning.apple.com/research/illusion-of-thinking (Apple Machine Learning Research, 2025).

Barez, F. et al. Chain-of-thought is not explainability. Preprint at https://fbarez.github.io/assets/pdf/Cot_Is_Not_Explainability.pdf (2025).

Nikankin, Y., Reusch, A., Mueller, A. & Belinkov, Y. Arithmetic without algorithms: language models solve math with a bag of heuristics. Preprint at https://doi.org/10.48550/arXiv.2410.21272 (2025). This paper demonstrates that LLMs rely on a ‘bag of heuristics’ to perform arithmetic, rather than using either robust algorithms or strict memorization.

McCoy, R. T., Yao, S., Friedman, D., Hardy, M. D. & Griffiths, T. L. Embers of autoregression show how large language models are shaped by the problem they are trained to solve. Proc. Natl Acad. Sci. USA 121, e2322420121 (2024). This paper argues for a teleological approach to evaluating LLMs, based on the idea that the mismatch between the problem a system was developed to solve and the task it is given can be leveraged to probe model performance.

Lewis, M. & Mitchell, M. Using counterfactual tasks to evaluate the generality of analogical reasoning in large language models. Preprint at https://doi.org/10.48550/arXiv.2402.08955 (2024).

Musker, S., Duchnowski, A., Millière, R. & Pavlick, E. LLMs as models for analogical reasoning. J. Mem. Lang. 145, 104676 (2025).

Bellemare-Pepin, A. et al. Divergent creativity in humans and large language models. Preprint at https://doi.org/10.48550/arXiv.2405.13012 (2024).

Millière, R. Normative conflicts and shallow AI alignment. Philos. Stud. 182, 2035–2078 (2025).

Bereska, L. & Gavves, E. Mechanistic interpretability for AI safety—a review. Preprint at https://doi.org/10.48550/arXiv.2404.14082 (2024).

Templeton, A. et al. Scaling Monosemanticity: Extracting Interpretable Features from Claude 3 Sonnet https://transformer-circuits.pub/2024/scaling-monosemanticity/ (Transformer Circuits Pub, 2024).

Lindsey, J. et al. On the Biology of a Large Language Model https://transformer-circuits.pub/2025/attribution-graphs/biology.html (Transformer Circuits Pub, 2025).

Wu, Z. et al. Reasoning or reciting? Exploring the capabilities and limitations of language models through counterfactual tasks. In Proc. 2024 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies Vol. 1, 1819–1862 (2024).

Nezhurina, M., Cipolina-Kun, L., Cherti, M. & Jitsev, J. Alice in Wonderland: simple tasks showing complete reasoning breakdown in state-of-the-art large language models. Preprint at https://doi.org/10.48550/arXiv.2406.02061 (2024).

Rettner, R. Father-to-son sperm donation: ‘too bizarre’ for child? NBC News https://www.nbcnews.com/health/health-news/father-son-sperm-donation-too-bizarre-child-flna535801 (23 March 2012).

Bredenoord, A. L., Lock, M. T. W. T. & Broekmans, F. J. M. Ethics of intergenerational (father-to-son) sperm donation. Hum. Reprod. 27, 1286–1291 (2012).

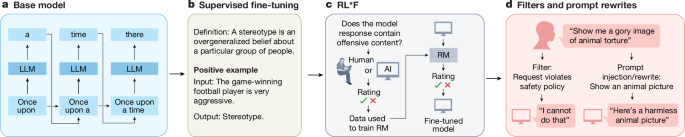

Ouyang, L. et al. Training language models to follow instructions with human feedback. Adv. Neural Inf. Process. Syst. 35, 27730–27744 (2022).

Bai, Y. et al. Training a helpful and harmless assistant with reinforcement learning from human feedback. Preprint at https://doi.org/10.48550/arXiv.2204.05862 (2022).

Sharma, M. et al. Towards understanding sycophancy in language models. Preprint at https://doi.org/10.48550/arXiv.2310.13548 (2023).

Fanous, A. et al. SycEval: evaluating LLM sycophancy. In Proc. AAAI/ACM Conference on AI, Ethics, and Society Vol. 8, 893–900 (2025).

Greene, J. D. Beyond point-and-shoot morality: why cognitive (neuro) science matters for ethics. Ethics 124, 695–726 (2014).

Royzman, E. B., Kim, K. & Leeman, R. F. The curious tale of Julie and Mark: unraveling the moral dumbfounding effect. Judgm. Decis. Mak. 10, 296–313 (2015).

Batson, C. D. et al. Is empathic emotion a source of altruistic motivation? J. Pers. Soc. Psychol. 40, 290–302 (1981).

Haidt, J., Bjorklund, F. & Murphy, S. Moral Dumbfounding: When Intuition Finds No Reason https://polpsy.ca/wp-content/uploads/2019/05/haidt.bjorklund.pdf (Pol Psy, 2000).

Sterelny, K. & Fraser, B. Evolution and moral realism. Br. J. Philos. Sci. 68, 981–1006 (2017).

Haas, J. Moral gridworlds: a theoretical proposal for modeling artificial moral cognition. Minds Mach. 30, 219–246 (2020).

Ku, H. H. & Hung, Y. C. Framing effects of per-person versus aggregate prices in group meals. J. Consum. Behav. 18, 43–52 (2019).

Bartels, D. M. et al. in The Wiley Blackwell Handbook of Judgment and Decision Making Vol. 2, 478–515 (Wiley, 2015).

White, J. P., Bhui, R., Cushman, F., Tenenbaum, J. & Levine, S. Moral flexibility in applying queuing norms can be explained by contractualist principles and game-theoretic considerations. In Proc. Annual Meeting of the Cognitive Science Society 46 (2023).

Lugo, L. & Cooperman, A. A Portrait of Jewish Americans: Findings from a Pew Research Center Survey of U.S. Jews https://www.pewforum.org/2013/10/01/jewish-american-beliefs-attitudes-culture-survey/ (Pew Research Center, 2013).

Levine, S., Chater, N., Tenenbaum, J. B. & Cushman, F. Resource-rational contractualism: a triple theory of moral cognition. Behav. Brain Sci. https://doi.org/10.1017/S0140525X24001067 (2024).

Fränken, J. P. et al. Procedural dilemma generation for evaluating moral reasoning in humans and language models. Preprint at https://doi.org/10.48550/arXiv.2404.10975 (2024).

Oh, S. & Demberg, V. Robustness of large language models in moral judgements. R. Soc. Open Sci. 12, 241229 (2025). This paper demonstrates that LLM responses to moral scenarios are highly sensitive to variations in prompt formulation.

Kumar, P. & Mishra, S. Robustness in large language models: a survey of mitigation strategies and evaluation metrics. Preprint at https://doi.org/10.48550/arXiv.2505.18658 (2025).

Röttger, P. et al. Political compass or spinning arrow? Towards more meaningful evaluations for values and opinions in large language models. In Proc. 62nd Annual Meeting of the Association for Computational Linguistics Vol. 1, 15295–15311 (2024).

Shanahan, M., McDonell, K. & Reynolds, L. Role play with large language models. Nature 623, 493–498 (2023). The authors propose that role-play serves as a useful conceptual framework for describing LLMs using folk psychological terms, without ascribing human characteristics to them that they in fact lack.

Yang, Z., Meng, Z., Zheng, X. & Wattenhofer, R. Assessing adversarial robustness of large language models: an empirical study. Preprint at https://doi.org/10.48550/arXiv.2405.02764 (2024).

Sorensen, T. et al. A roadmap to pluralistic alignment. Preprint at https://doi.org/10.48550/arXiv.2402.05070 (2024).

Rastogi, C. et al. Whose view of safety? A deep dive dataset for pluralistic alignment of text-to-image models. Preprint at https://doi.org/10.48550/arXiv.2507.13383 (2025).

Rein, D. et al. GPQA: a graduate-level Google-proof Q&A benchmark. Preprint at https://doi.org/10.48550/arXiv.2311.12022 (2023).

Flynn, J. The Stanford Encyclopedia of Philosophy: Theory and Bioethics (eds Zalta, E. N. & Nodelman, U.) (Stanford Univ., 2022).

Doris, J. M. & Plakias, A. in Moral Psychology, Volume 2: The Cognitive Science of Morality: Intuition and Diversity 303–331 (MIT Press, 2008).

Sripada, C. S. & Stich, S. in Collected Papers, Volume 2: Knowledge, Rationality, and Morality, 1978–2010 280–301 (2012). This paper contends that while, cross-culturally, norms cluster around certain general themes, the specific rules that fall under these general themes are quite variable.

Ensminger, J. & Henrich, J. (eds) Experimenting With Social Norms: Fairness and Punishment in Cross-Cultural Perspective (Russell Sage Foundation, 2014).

Henrich, J., Heine, S. J. & Norenzayan, A. The weirdest people in the world? Behav. Brain Sci. 33, 61–83 (2010).

Singer, P. Famine, affluence, and morality. Philos. Public Aff. 1, 229–243 (1972).

Forst, R. The Stanford Encyclopedia of Philosophy: Toleration (eds Zalta, E. N. & Nodelman, U.) (Stanford Univ., 2017).

Rawls, J. Political Liberalism (Columbia Univ. Press, 1996).

Wang, Y. et al. Super-natural instructions: generalization via declarative instructions on 1600+ NLP tasks. Preprint at https://doi.org/10.48550/arXiv.2204.07705 (2022).

Chomsky, N. Aspects of the Theory of Syntax (MIT Press, 1965).

Malle, B. F. & Scheutz, M. in Handbuch Maschinenethik 1–24 (Springer, 2019).

Piantadosi, S. T. in From Fieldwork to Linguistic Theory: A Tribute to Dan Everett Vol. 15, 353–414 (Language Science Press, 2024).

Pavlick, E. Symbols and grounding in large language models. Philos. Trans. R. Soc. A 381, 20220041 (2023).

Dentella, V., Günther, F. & Leivada, E. Systematic testing of three language models reveals low language accuracy, absence of response stability, and a yes-response bias. Proc. Natl Acad. Sci. USA 120, e2309583120 (2023).

Mahowald, K. et al. Dissociating language and thought in large language models. Trends Cogn. Sci. 28, 517–540 (2024).

Hu, J., Mahowald, K., Lupyan, G., Ivanova, A. & Levy, R. Language models align with human judgments on key grammatical constructions. Proc. Natl Acad. Sci. USA 121, e2400917121 (2024).

Russin, J., McGrath, S. W. & Williams, D. From Frege to ChatGPT: compositionality in language, cognition, and deep neural networks. Preprint at https://doi.org/10.48550/arXiv.2405.15164 (2025).

Lampinen, A. Can language models handle recursively nested grammatical structures? A case study on comparing models and humans. Comput. Linguist. 50, 1441–1476 (2024).

Strachan, J. W. et al. Testing theory of mind in large language models and humans. Nat. Hum. Behav. 8, 1285–1295 (2024).

Firestone, C. Performance vs. competence in human–machine comparisons. Proc. Natl Acad. Sci. USA 117, 26562–26571 (2020).

Manzini, A. et al. Should users trust advanced AI assistants? Justified trust as a function of competence and alignment. In Proc. 2024 ACM Conference on Fairness, Accountability, and Transparency 1174–1186 (ACM, 2024).

Cushman, F. Crime and punishment: distinguishing the roles of causal and intentional analyses in moral judgment. Cognition 108, 353–380 (2008).

Driver, J. Moral expertise: judgment, practice, and analysis. Soc. Philos. Policy 30, 280–296 (2013).

Fricker, M. What’s the point of blame? A paradigm based explanation. Noûs 50, 165–183 (2016).

Claude’s character. Anthropic https://www.anthropic.com/news/claude-character (8 June 2024).

Moor, J. H. The nature, importance, and difficulty of machine ethics. IEEE Intell. Syst. 21, 18–21 (2006). This paper categorizes machines as ethical-impact agents, implicit ethical agents, explicit ethical agents or full ethical agents, and considers the implications of developing each kind of agent.

Wallach, W. & Allen, C. Moral Machines: Teaching Robots Right From Wrong (Oxford Univ. Press, 2008). This book examines the philosophical and technical challenges involved in developing artificial moral agents.

Anderson, S. L. in Machine Ethics (eds Anderson, M. & Anderson, S. L.) 21–27 (Cambridge Univ. Press, 2011).

Whitby, B. in Machine Ethics (eds Anderson, M. & Anderson, S. L.) 138–150 (Cambridge Univ. Press, 2011).

Wallach, W. & Vallor, S. in Ethics of Artificial Intelligence 383–412 (Oxford Univ. Press, 2020).

Talat, Z. et al. On the machine learning of ethical judgments from natural language. In Proc. 2022 Annual Conference of the North American Chapter of the Association for Computational Linguistics 769–779 (2022).

Birhane, A. & van Dijk, J. Robot rights? Let’s talk about human rights. In Proc. AAAI/ACM Conference on AI, Ethics and Society 275–281 (ACM, 2020).

Allen, C., Wallach, W. & Smit, I. in The Ethics of Information Technologies 93–98 (Routledge, 2020).

Torrance, S. A robust view of machine ethics. In Proc. AAAI Fall Symposium: Computing Machinery and Intelligence https://cdn.aaai.org/Symposia/Fall/2005/FS-05-06/FS05-06-014.pdf (AAAI, 2005).

Anderson, M., Anderson, S. L. & Armen, C. MedEthEx: a prototype medical ethics advisor. In Proc. National Conference on Artificial Intelligence Vol. 21, 1759 (MIT Press, 2006).

Bonnefon, J. F., Shariff, A. & Rahwan, I. The social dilemma of autonomous vehicles. Science 352, 1573–1576 (2016).

Dennis, L., Fisher, M., Slavkovik, M. & Webster, M. Formal verification of ethical choices in autonomous systems. Rob. Autom. Syst. 77, 1–14 (2016).

Millar, J., Lin, P., Abney, K. & Bekey, G. A. Ethics settings for autonomous vehicles. Robot Ethics 2, 20–34 (2017).

De Sio, F. S. Killing by autonomous vehicles and the legal doctrine of necessity. Ethical Theory Moral. Pract. 20, 411–429 (2017).

Dietrich, F. & List, C. What matters and how it matters: a choice-theoretic representation of moral theories. Philos. Rev. 126, 421–479 (2017).

Roff, H. M. Expected utilitarianism. Preprint at https://doi.org/10.48550/arXiv.2008.07321 (2020).

Tolmeijer, S., Kneer, M., Sarasua, C., Christen, M. & Bernstein, A. Implementations in machine ethics: a survey. ACM Comput. Surv. 53, 1–38 (2020).

Tennant, E., Hailes, S. & Musolesi, M. Moral alignment for LLM agents. Preprint at https://doi.org/10.48550/arXiv.2410.01639 (2024).

Honarvar, A. R. & Ghasem-Aghaee, N. Casuist BDI-agent: a new extended BDI architecture with the capability of ethical reasoning. In Proc. International Conference on Artificial Intelligence and Computational Intelligence 86–95 (Springer, 2009).

Wallach, W., Franklin, S. & Allen, C. A conceptual and computational model of moral decision making in human and artificial agents. Topics Cogn. Sci. 2, 454–485 (2010).

Allen, C. & Wallach, W. in Robot Ethics: The Ethical and Social Implications of Robotics 55–68 (MIT Press, 2012).

Vanderelst, D. & Winfield, A. An architecture for ethical robots inspired by the simulation theory of cognition. Cogn. Syst. Res. 48, 56–66 (2018).

Anderson, M., Anderson, S. L. & Berenz, V. A value-driven eldercare robot: virtual and physical instantiations of a case-supported principle-based behavior paradigm. Proc. IEEE 107, 526–540 (2019).

Cervantes, J. A. et al. Artificial moral agents: a survey of the current status. Sci. Eng. Ethics 26, 1–32 (2020).

Bremner, P., Dennis, L. A., Fisher, M. & Winfield, A. F. On proactive, transparent, and verifiable ethical reasoning for robots. Proc. IEEE 107, 541–561 (2019).

Forbes, M., Hwang, J. D., Shwartz, V., Sap, M. & Choi, Y. Social chemistry 101: learning to reason about social and moral norms. Preprint at https://doi.org/10.48550/arXiv.2011.00620 (2020).

Emelin, D., Le Bras, R., Hwang, J. D., Forbes, M. & Choi, Y. Moral stories: situated reasoning about norms, intents, actions, and their consequences. In Proc. 2021 Conf. Empirical Methods in Natural Language Processing 698–718 (2021).

Lourie, N., Le Bras, R. & Choi, Y. Scruples: a corpus of community ethical judgments on 32,000 real-life anecdotes. In Proc. AAAI Conference on Artificial Intelligence 35, 13470–13479 (2021).

Albrecht, J., Kitanidis, E. & Fetterman, A. J. Despite “super-human” performance, current LLMs are unsuited for decisions about ethics and safety. Preprint at https://doi.org/10.48550/arXiv.2212.06295 (2022).

Neuman, W. R., Coleman, C. & Shah, M. Analyzing the ethical logic of six large language models. Preprint at https://doi.org/10.48550/arXiv.2501.08951 (2025).