Data acquisition followed procedures similar to those conducted in previous work12,38,106.

Participants

Thirty-four patients (17 female) were implanted with subdural ECoG grids (Integra or PMT) with 4-mm centre-to-centre electrode spacing and 1.17-mm-diameter contacts (recording tens to hundreds of thousands of neurons107,108) as part of their neurosurgical treatment at either University of California, San Francisco (UCSF) or Huashan Hospital. All participants gave informed written consent to participate in the study before experimental testing. Most electrode grids were placed over the lateral surface of a single hemisphere and were centred around the temporal lobe extending to adjacent cortical areas. The precise location of electrode placement was determined by clinical assessment.

For all participants, age, gender, language background information and which hemisphere was recorded from are provided in Extended Data Tables 1–4, where each table corresponds to the respective participant group: English monolingual, Spanish and Mandarin monolingual, Spanish–English bilingual and others with diverse language backgrounds. Which participants were included in each set of analyses is listed in Extended Data Table 5. ECoG recordings with the four Mandarin speakers were conducted at Huashan Hospital while patients underwent awake language mapping as part of their surgical brain-tumour treatment. ECoG recordings with all other participants were conducted at UCSF while patients underwent clinical monitoring for seizure activity as part of their surgical treatment for intractable epilepsy. Most participants in this dataset had epileptic seizure foci that were located in deep medial structures (for example, the insula) or far anterior temporal lobe outside of the main regions of interest in this study, such as the STG. All participants included in this study reported normal hearing and spoken language abilities.

Participant consent

All protocols in the current study were approved by the UCSF Committee on Human Research and by the Huashan Hospital Institutional Review Board of Fudan University. Participants gave informed written consent to take part in the experiments and for their data to be analysed. Informed consent of non-English-speaking participants at UCSF was acquired using a medically certified interpreter platform (Language-Line Solutions), and communication with research staff was facilitated by either in-person or video-call-based interpreters who were fluent in the participant’s native language.

Language questionnaire

All participants were asked to self-report speech comprehension proficiency, age of acquisition and frequency of use for all languages that they were familiar with. Participants who self-identified as Spanish–English bilingual were asked to complete a comprehensive language questionnaire by means of an online Qualtrics survey103.

Neural data acquisition

ECoG signals were recorded with a multichannel PZ5 amplifier connected to an RZ2 digital signal acquisition system (Tucker-Davis Technologies (TDT)) with a sampling rate of 3 kHz. During the stimulus presentation, the audio signal was recorded in the TDT circuit and therefore time-aligned with the ECoG signal. The audio stimulus was also recorded with a microphone, and this signal was likewise recorded in the TDT circuit to ensure accurate time alignment.

Data preprocessing

Offline preprocessing of the data included downsampling to 400 Hz, notch-filtering of line noise (at 60 Hz, 120 Hz and 180 Hz for recording at UCSF and 50 Hz, 100 Hz and 150 Hz for recording at Huashan Hospital), extracting the analytic amplitude in the high-gamma frequency range (70–150 Hz, HFA) and excluding extended interictal spiking or otherwise noisy channel activity through manual inspection. The Hilbert transform was used to extract HFA using eight band-pass Gaussian filters with logarithmically increasing centre frequencies (70–150 Hz) and semilogarithmically increasing bandwidths. High-gamma amplitude was calculated as in previous work12,106 as the first principal component of the signal in each electrode across all eight high-gamma bands, using principal components analysis (PCA). Last, the HFA was downsampled to 100 Hz and Z-scored relative to the mean and s.d. of the neural data in the experimental block. Each sentence HFA was normalized relative to the 0.5 s of silent prestimulus baseline. All subsequent analyses were based on the resulting neural time series.
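The band layout and the final normalization step can be sketched as follows. This is a minimal illustration in Python rather than the MATLAB scripts used in the study; the function names are hypothetical, and it assumes that 70 Hz and 150 Hz are the first and last centre frequencies and that the population s.d. is used for Z-scoring, neither of which is stated above.

```python
import statistics

def log_spaced_centres(f_lo=70.0, f_hi=150.0, n_bands=8):
    """Centre frequencies spaced evenly on a log axis (endpoints assumed inclusive)."""
    ratio = (f_hi / f_lo) ** (1.0 / (n_bands - 1))
    return [f_lo * ratio ** i for i in range(n_bands)]

def zscore_block(hfa):
    """Z-score one electrode's HFA trace against the block mean and s.d."""
    mu = statistics.fmean(hfa)
    sd = statistics.pstdev(hfa)  # population s.d.; an assumption
    return [(v - mu) / sd for v in hfa]
```

The Hilbert transform, the Gaussian filter bank and the PCA across bands are omitted here; the sketch covers only the spacing of the bands and the Z-scoring.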

Electrode localization

For anatomical localization, electrode locations were extracted from postimplantation computed tomography scans, coregistered to the patients’ structural magnetic resonance imaging and superimposed on three-dimensional reconstructions of the patients’ cortical surfaces using an in-house imaging pipeline validated in previous work109. FreeSurfer (https://surfer.nmr.mgh.harvard.edu/) was used to create a three-dimensional model of the individual participant’s pial surfaces, run automatic parcellation to get individual anatomical labels and warp the individual participant surfaces into the cvs_avg35_inMNI152 average template.

Stimuli and procedure

All participants passively listened to roughly 30 min of speech in a language they knew (either Spanish, English or Mandarin Chinese) and one other language (either Spanish or English). Speech stimuli consisted of a selection of 239 unique Spanish sentences from the DIMEx corpus30,110, spoken by a variety of native Mexican-Spanish speakers; 499 unique English sentences from the TIMIT corpus, spoken by a variety of American English speakers31; and 58 unique Mandarin Chinese paragraphs from the ASCCD corpus from the Chinese Linguistic Data Consortium (www.chineseldc.org/), spoken by a variety of Mandarin Chinese speakers. Although the total duration of stimuli presentation was roughly equal across languages, statistical tests that were conducted at the sentence level across corpora accounted for the differing number of sentences.

We selected these specific speech corpora, originally designed to test speech recognition systems, to meet the following requirements: (1) comprehensively span the phonemic inventories of the languages in question; (2) provide validated phonemic, phonetic and word-level annotations; and (3) include a diverse and wide range of speakers with varying accents. These corpora were not intended to span specific semantic or syntactic structures in the languages or to capture long-range dependencies that extended across multiple sentences. Therefore we did not ask research questions about these specific speech representations.

The contents of each speech corpus were split across five blocks, where each block was roughly 5–7 minutes in duration. In English and Spanish speech stimuli, four blocks contained unique sentences, and a single block contained ten repetitions of ten sentences. In the Mandarin Chinese speech stimuli, four paragraphs were repeated six times, and repetitions were intermixed with unique paragraphs across blocks. Repeated sentences were used for validation of TRF models (see details below). Sentences were presented with an intertrial interval of 0.4–0.5 s in English and Mandarin and 0.8 s in Spanish. Spanish sentences were on average 4.77 s long (range: 2.5–8.03 s), English sentences were on average 3.05 s long (range: 1.99–3.60 s), and Mandarin Chinese sentences were on average 3.16 s long (range: 1.17–11.76 s). Although speech blocks of different languages could be intermixed in the same recording session, each 5- to 7-min block of speech consisted of only one language.

Speech stimuli were presented at a comfortable ambient loudness (about 70 dB) through free-field speakers placed roughly 80 cm in front of the patient’s head. All speech stimuli were presented in the experiment using custom-written MATLAB R2016b scripts (MathWorks, www.mathworks.com). Participants were asked to listen to the stimuli attentively and were free to keep their eyes open or closed during the stimulus presentation. We performed all subsequent data analysis using custom-written MATLAB R2024b scripts.

Electrode selection

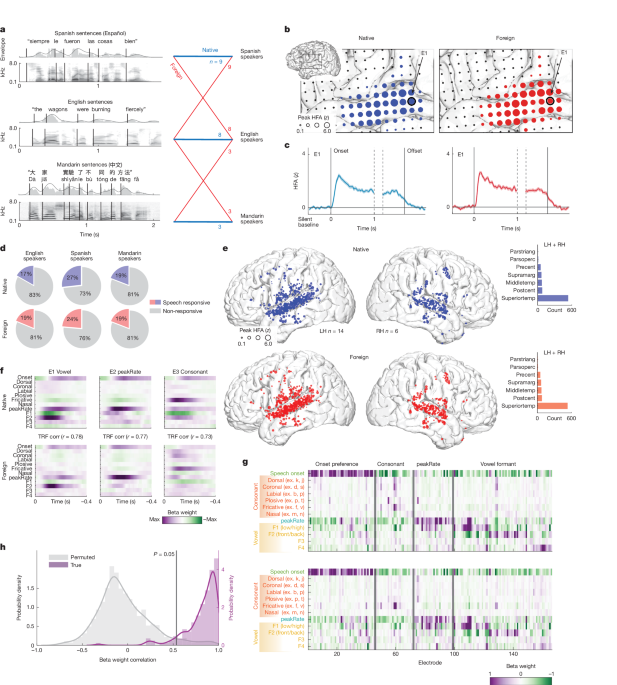

Subsequent analyses included all speech-responsive electrodes: electrodes for which at least a contiguous 0.1 s of the neural response (10 samples, 100-Hz sampling rate) time-aligned to the first or last 0.5 s of the spoken sentences was significantly different from that of the prespeech silent baseline. To test for significance, we used a one-way ANOVA F-test at each time point, Bonferroni corrected for multiple comparisons using a threshold of P < 0.01. We included the neural response aligned to the last 0.5 s of each sentence to ensure that we did not exclude electrodes for which there was a significant response time aligned to the end of sentences but not the beginning.
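The contiguity criterion can be illustrated with a short sketch that takes per-time-point P values as given. It is written in Python for illustration only; the function names are hypothetical, and the Bonferroni family is assumed to be the time points within one window, which the text above does not specify.

```python
def longest_significant_run(p_values, alpha=0.01):
    """Length of the longest contiguous run of samples below a Bonferroni-corrected threshold."""
    threshold = alpha / len(p_values)  # correction over time points (assumed family)
    best = current = 0
    for p in p_values:
        current = current + 1 if p < threshold else 0
        best = max(best, current)
    return best

def is_speech_responsive(p_onset, p_offset, min_samples=10):
    """True if the onset- or offset-aligned window has >= min_samples contiguous significant samples."""
    longest = max(longest_significant_run(p_onset), longest_significant_run(p_offset))
    return longest >= min_samples
```

With 100-Hz data, `min_samples=10` corresponds to the 0.1-s requirement, and each 0.5-s window contributes 50 P values.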

Feature TRF analysis

HFA responses to speech corpora were predicted using standard linear TRF models with various sets of speech features37,111,112. Many of the speech features used were extracted from pre-existing corrected acoustic, phonetic, phonemic and word-level annotations included with these speech corpora. In the feature TRF (F-TRF) models described below, HFA neural responses recorded from a single electrode were predicted as a linear combination of speech features that occurred at most 0.6 s before the current time point:

$$\mathrm{HFA}(t)=x_{0}+\sum_{f=1}^{F}\sum_{l=1}^{L}\beta(l,\,f)\,X(f,\,t-l)$$

where \(x_0\) corresponds to the model intercept, F corresponds to the set of speech features included in the model and L corresponds to the number of time lags considered in the model (60 samples reflecting a total lag of 0.6 s). β(l, f) weights are therefore interpreted as the weight or importance of the specific combination of speech feature, f, and time-lag, l, in predicting the final neural response given the set of speech features and training sentences. Models were trained and tested separately for each electrode using the same cross-validation, ridge regularization and feature normalization protocols as reported in previous work12,38,106. To derive the F-TRF model performance, we applied the trained model to a held-out set of roughly ten sentences averaged across ten repeats (see ‘Stimuli and procedure’) and then calculated the correlation-based \(R^2\) fit. In subsequent analysis, we analysed both the magnitude of the β weights for each speech feature and the overall model \(R^2\) derived from the held-out test sentences. In addition to training and testing F-TRF models on sentences in the same language, we also trained F-TRF models on a language and tested the model on sentences from another language to test the generality of our model fits (Extended Data Fig. 5). This analysis followed the same training and testing procedure as the models that were trained and tested on the same language.
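To make the prediction step of the F-TRF concrete, the following sketch evaluates the model equation for already-fitted weights. It is an illustration in Python, not the study's MATLAB code; the function name and data layout are hypothetical, stimulus samples before time zero are assumed to be zero, and the ridge fitting and cross-validation are not shown.

```python
def trf_predict(X, beta, x0):
    """Predict HFA(t) = x0 + sum_f sum_l beta[l-1][f] * X[f][t-l] for lags l = 1..L.

    X    : list of F feature time series, each of length T
    beta : list of L rows (one per lag), each holding F weights
    """
    n_feat, n_time, n_lags = len(X), len(X[0]), len(beta)
    pred = []
    for t in range(n_time):
        y = x0
        for lag in range(1, n_lags + 1):
            if t - lag < 0:
                break  # no stimulus history this far back (treated as zero)
            for f in range(n_feat):
                y += beta[lag - 1][f] * X[f][t - lag]
        pred.append(y)
    return pred
```

For the models described here, `beta` would have 60 rows (one per 10-ms lag up to 0.6 s) and one column per speech feature.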

Selection of features for F-TRF

The speech features included in the acoustic–phonetic F-TRF model were selected from those that have been identified in previous studies to strongly drive HFA in the human temporal cortex. We included the sentence onset feature as well as the acoustic edges of envelope (peakRate38), both features that drive neural responses in the posterior STG and middle STG, respectively. The consonant manner of articulation and place of articulation features were selected on the basis of ref. 2, which included binary values at phoneme onset indicating whether the phoneme was dorsal, coronal, labial, nasal, plosive or fricative. The vowel features were selected on the basis of ref. 106, which included the median formant values for F1, F2, F3 and F4 (Hz) for each vowel sound because continuous formant values were shown to drive neural responses more than categorical vowel features (for example, high, front, low, back).

To test the effect of word-level features on the F-TRF neural prediction, we also included word onset, word frequency, absolute word length (as number of phonemes) and within-word phoneme surprisal. All features had been pre-annotated for each speech corpus except for word frequency, phoneme surprisal and syllable onset (in Spanish only). Word boundary and phoneme surprisal are metrics reflective of how the brain tracks sequential speech structure, with the former being a discrete, sparse marker of segmentation and the latter being a continuous measure of how predictable each phoneme is, given all preceding phonemes in the current word. Word-frequency and phoneme-surprisal features were calculated on the basis of the distribution of the language presented regardless of the participant’s language background (Spanish surprisal was used in the model in Spanish speech blocks; a precise description of how these features were generated is below). In the TRF model, we excluded phoneme surprisal at word initial positions so that the word-level features could be dissociated from the phoneme-surprisal feature in time.

Model weight correlation across conditions

We determined a particular electrode’s feature tuning by analysing the model weights, where each weight was proportional to the change in electrode response given a change in that feature’s value112. We estimated the F-TRF β weights corresponding to each unique speech feature for each electrode separately, as described in the model-fitting procedure above2. To compare electrode encoding of the base F-TRF model across speech conditions (Fig. 1), we first selected all electrodes with \(R^2 > 0.1\) for both speech conditions; this ensured that the β weight analysis would be based on TRF models that were able to explain the neural responses sufficiently well. We then extracted the mean of the 0.05-s (five samples) window around the maximum β weight averaged across features. This resulted in a single vector for each electrode per speech condition, with one β weight per speech feature; to calculate weight correlation, we performed a Pearson correlation across vectors of the same electrode from different speech conditions. This allowed us to determine the cross-language correlation between the relative β weights. To determine a distribution at chance level across electrodes, we performed permutation testing by shuffling the β weights for a single speech condition and repeating the Pearson correlation ten times per electrode.
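The weight correlation and its shuffled null can be sketched as below. This is an illustrative Python version with hypothetical function names; it assumes that shuffling is done across the feature dimension of one condition's weight vector and uses a fixed seed only to make the example reproducible.

```python
import math
import random

def pearson(a, b):
    """Pearson correlation between two equal-length weight vectors."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(va * vb)

def shuffled_null(w_a, w_b, n_perm=10, seed=0):
    """Null correlations obtained by shuffling one condition's weights across features."""
    rng = random.Random(seed)
    null = []
    for _ in range(n_perm):
        shuffled = list(w_b)
        rng.shuffle(shuffled)
        null.append(pearson(w_a, shuffled))
    return null
```

Here `w_a` and `w_b` stand for the per-feature β vectors of one electrode in the two speech conditions; pooling the null values across electrodes would give the chance-level distribution.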

Construction of word-frequency, word-length and within-word phoneme-surprisal features

Word-frequency values were adopted from the SUBTLEX American English113 and SUBTLEX Spanish51 frequency estimates, which included a total of 74,287 and 94,341 unique words respectively. We included frequency estimates for all words in our corpora that existed in the English and Spanish SUBTLEX word sets. All word-frequency measures were log-scaled (base 10). Word length was determined as the number of phonemes contained within the word onset and offset from the corpus annotations. We used the same word frequency and word lists documented in the SUBTLEX American English and Spanish corpus to construct within-word phoneme surprisal, where each phoneme was associated with a surprisal value. We constructed within-word phoneme surprisal as

$$-\log_{2}\left[P(x_{n}\mid x_{1}\ldots x_{n-1})=\frac{\mathrm{freq}(x_{1}\ldots x_{n})}{\mathrm{freq}(x_{1}\ldots x_{n-1})}\right]$$

where \(x_{1}\ldots x_{n-1}\) is all preceding phonemes in the current word and \(x_{n}\) is the current phoneme. We did not include surprisal values for the first phoneme of a word, only phonemes at the second position in the word onwards. \(\mathrm{freq}(x_{1}\ldots x_{n})\) is the count of all unique words with \(x_{1}\ldots x_{n}\) as the prefix multiplied by the frequency of each of those words as estimated by the SUBTLEX English and Spanish corpus. We also calculated within-word phoneme entropy (expected value of surprisal at each phoneme) as well as biphone and triphone surprisal and entropy but ultimately did not include these features in the final F-TRF model, as for most electrodes, these features did not explain added unique variance.
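A small worked example of the surprisal definition is given below. It is a Python sketch with hypothetical function names and an invented three-word toy lexicon (phoneme tuples mapped to frequencies); the real computation used SUBTLEX frequencies and corpus phoneme transcriptions.

```python
import math

def prefix_freq(lexicon, prefix):
    """Summed frequency of all words whose phoneme sequence starts with prefix."""
    n = len(prefix)
    return sum(freq for phones, freq in lexicon.items() if phones[:n] == prefix)

def phoneme_surprisal(lexicon, word):
    """Surprisal in bits for each phoneme from the second position onwards."""
    out = []
    for n in range(2, len(word) + 1):
        p = prefix_freq(lexicon, word[:n]) / prefix_freq(lexicon, word[:n - 1])
        out.append(-math.log2(p))
    return out

# Toy lexicon with made-up frequencies, for illustration only.
toy = {("k", "ae", "t"): 6, ("k", "ae", "p"): 2, ("k", "ih", "t"): 8}
bits = phoneme_surprisal(toy, ("k", "ae", "t"))
```

In the toy lexicon, the second phoneme of ("k", "ae", "t") has probability 8/16 and the third has probability 6/8, so later phonemes in a well-constrained word carry less surprisal. The sketch assumes the word itself is in the lexicon; otherwise a prefix frequency of zero would need separate handling.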

Annotation of DIMEx syllable onset

We generated the syllable-onset feature for the DIMEx speech corpus through a combination of automatic syllable-onset detection and manual validation. We did not annotate instances of resyllabified word onsets, such as instances where the consonants at the end (coda) of a syllable were pronounced as part of the onset of the following syllable. For example, the phrase los otros (los.’otros) would be annotated to reflect the canonical form (los.’otros), although it may be pronounced (lo.’so.tros).

Unique variance calculation

Because several of the speech features we used in the F-TRF models were highly correlated, we determined the unique contribution of each feature to driving neural responses through the calculation of unique variance. Unique variance \((\Delta R^2)\) is defined as the difference between the portion of variance explained by the model with all relevant features and the model with the feature of interest (f) removed. Broadly, this is

$$\Delta R^{2}(f)=[\mathrm{full}\,\mathrm{model}]R^{2}-[\mathrm{model}\,\mathrm{with}\,f\,\mathrm{removed}]R^{2}$$

The acoustic–phonetic F-TRF model included sentence onset, peakRate, consonant features (dorsal, coronal, labial, nasal, plosive, fricative) and vowel formants (F1, F2, F3, F4). The full F-TRF model included sentence onset, peakRate, consonant features (dorsal, coronal, labial, nasal, plosive, fricative), vowel formants (F1, F2, F3, F4), word onset, word frequency, word length and phoneme-surprisal.

Unique variance for acoustic–phonetic features (Fig. 2) was calculated as follows:

$$\Delta R^{2}(\mathrm{sentence}\,\mathrm{onset})=[\mathrm{full}\,\mathrm{model}]R^{2}-[\mathrm{full}\,\mathrm{model}-\mathrm{sentence}\,\mathrm{onset}]R^{2}$$

$$\Delta R^{2}(\mathrm{peakRate})=[\mathrm{full}\,\mathrm{model}]R^{2}-[\mathrm{full}\,\mathrm{model}-\mathrm{peakRate}]R^{2}$$

$$\Delta R^{2}(\mathrm{consonant}\,\mathrm{features})=[\mathrm{full}\,\mathrm{model}]R^{2}-[\mathrm{full}\,\mathrm{model}-\mathrm{consonant}\,\mathrm{features}]R^{2}$$

$$\Delta R^{2}(\mathrm{vowel}\,\mathrm{formants})=[\mathrm{full}\,\mathrm{model}]R^{2}-[\mathrm{full}\,\mathrm{model}-\mathrm{vowel}\,\mathrm{formants}]R^{2}$$

Unique variance for word-level and phoneme-surprisal features (Fig. 2) was calculated as follows:

$$\Delta R^{2}(\mathrm{word}\,\mathrm{onset})=[\mathrm{acoustic}\,\mathrm{phonetic}\,\mathrm{model}+\mathrm{word}\,\mathrm{onset}]R^{2}-[\mathrm{acoustic}\,\mathrm{phonetic}\,\mathrm{model}]R^{2}$$

$$\Delta R^{2}(\mathrm{word}\,\mathrm{frequency})=[\mathrm{acoustic}\,\mathrm{phonetic}\,\mathrm{model}+\mathrm{word}\,\mathrm{onset}+\mathrm{word}\,\mathrm{frequency}]R^{2}-[\mathrm{acoustic}\,\mathrm{phonetic}\,\mathrm{model}+\mathrm{word}\,\mathrm{onset}]R^{2}$$

$$\Delta R^{2}(\mathrm{word}\,\mathrm{length})=[\mathrm{acoustic}\,\mathrm{phonetic}\,\mathrm{model}+\mathrm{word}\,\mathrm{onset}+\mathrm{word}\,\mathrm{length}]R^{2}-[\mathrm{acoustic}\,\mathrm{phonetic}\,\mathrm{model}+\mathrm{word}\,\mathrm{onset}]R^{2}$$

$$\Delta R^{2}(\mathrm{phoneme}\,\mathrm{surprisal})=[\mathrm{acoustic}\,\mathrm{phonetic}\,\mathrm{model}+\mathrm{phoneme}\,\mathrm{surprisal}]R^{2}-[\mathrm{acoustic}\,\mathrm{phonetic}\,\mathrm{model}]R^{2}$$

Combined unique variance for all word-level and phoneme-surprisal features was calculated as:

$$\begin{array}{c}\Delta R^{2}(\mathrm{word}\,\mathrm{features})\\ \,=\,[\mathrm{full}\,\mathrm{model}]R^{2}-[\mathrm{acoustic}\,\mathrm{phonetic}\,\mathrm{model}]R^{2}\end{array}$$

To determine the significance of unique variance values for a single feature or feature family, we computed a distribution of 300 permuted unique variance values by applying random circular temporal shifts to the feature or feature family of interest. To acquire the P value associated with the unique variance value, we summed the number of permuted unique values greater than the true unique value and divided by the total number of permutations we computed.
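The two ingredients of this permutation test can be sketched as follows: a circular temporal shift of a feature time series, and the P value as the fraction of permuted values exceeding the true one. The Python function names are hypothetical, and refitting the model after each shift to obtain the permuted unique variance values is not shown.

```python
def circular_shift(series, k):
    """Rotate a feature time series by k samples, wrapping around the end."""
    k %= len(series)
    return series[-k:] + series[:-k] if k else list(series)

def permutation_p(true_value, permuted_values):
    """Fraction of permuted unique-variance values greater than the true value."""
    exceed = sum(v > true_value for v in permuted_values)
    return exceed / len(permuted_values)
```

A circular shift preserves the temporal autocorrelation of the feature while breaking its alignment with the neural response, which is why it is a common choice for time-series nulls. With 300 permutations, the smallest non-zero P value this estimator can return is 1/300.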

Statistical testing

LME models as computed by the fitlme function in MATLAB R2024b included random effects for participant and hemisphere as well as the interaction between participant and electrode to ensure that the effects we found were significant across participants and hemispheres. LME models were primarily used to determine whether the unique variance of a particular feature f was significantly different between native and foreign speech conditions. LME formulas took the following form: \(\Delta R^2(f)\) ~ speech condition + speaker language + (1|hemisphere) + (1|participant) + (1|electrode:participant).

The same LME formula (above) was used to test significance in instances in which TRF feature unique variance could be compared across speech conditions (for example, Figs. 2b,c and 5d).

For Pearson and Spearman correlation, two-tailed tests were performed unless otherwise noted.

Acoustic word-boundary decoding

Acoustic word-boundary decoding consisted of a logistic regression model trained to classify whether the mel-spectrogram from 0.2 s around a boundary event was a within-word syllable boundary or a word boundary. All single-syllable words and words at sentence onset were removed from this analysis. To match the instances of word and syllable boundaries in the Spanish and English corpora, we randomly sampled 1,900 instances of word and within-word syllable boundaries from each corpus. In Spanish, we used 1,273 within-word syllable onsets and 627 word onsets. In English, we used 1,153 within-word syllable onsets and 747 word onsets.

For each boundary event in both corpora, the 0.2-s 80-band mel-spectrogram (sampled at 100 Hz) was vectorized. PCA was then performed on the vectorized mel-spectrogram to reduce the total dimensionality. The components with the highest variance that cumulatively explained more than 90% of the total variance were ultimately used as input to the regression, which totalled about 60–80 PCs depending on the language. Logistic regression was run using standard MATLAB R2024b regression functions (fitclinear) with Lasso (L1) regularization and 20-fold cross-validation. The AUC was extracted for each held-out test fold, where chance AUC would equal 0.5.
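The rule for choosing how many PCs to keep can be illustrated with a short sketch that takes per-component variances as input. It is written in Python with a hypothetical function name; the PCA itself and the logistic regression are not shown.

```python
def n_components_for_variance(variances, target=0.90):
    """Smallest number of leading components whose cumulative variance exceeds target."""
    ordered = sorted(variances, reverse=True)
    total = sum(ordered)
    running = 0.0
    for k, v in enumerate(ordered, start=1):
        running += v
        if running / total > target:
            return k
    return len(ordered)
```

For instance, component variances of 7, 2.5, 0.3 and 0.2 give cumulative fractions of 0.70 and 0.95 after one and two components, so two components would be retained.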

In addition to training and testing acoustic word-boundary decoding in the same language, we also trained the word-boundary decoders in one language and tested on boundary events from the other language to test the extent to which acoustic cues to word boundaries overlapped between the languages. This analysis followed the same training and testing procedure as for the decoders that were trained and tested on the same language. To assess the significance of the test-set AUC values, we performed permutation testing in which word and within-word syllable boundary labels were randomly shuffled and the same logistic regression procedure was applied.

Neural word-boundary effects

To determine whether speech-responsive electrodes showed a significant difference between boundary events (within-word syllable boundaries and word boundaries), we assessed the duration of contiguous time points for which the neural response (100 Hz) time aligned 0.2 s before and 0.4 s after word-boundary events significantly differed from responses aligned to within-word syllable boundaries. To test for significance, we used a one-way ANOVA F-test at each time point and Bonferroni corrected for multiple comparisons using a threshold of P < 0.01. We computed this for each electrode and each language separately such that every electrode was associated with the duration of significant differences value for each language.

Neural word-boundary decoding

Neural word-boundary decoding consisted of a logistic regression model trained to classify whether the contiguous window of neural activity 0.2 s before and 0.4 s after a boundary corresponded to a within-word syllable boundary or a word boundary. To compare against the acoustic word-boundary decoding, all single-syllable words and words at sentence onset were removed from this analysis. The size of the window used in neural decoding was larger than that of the acoustic decoding (0.2 s) because the neural response dynamics are slower in latency than the acoustic dynamics.

To perform neural word-boundary decoding, neural responses (sampled at 100 Hz) from all speech-responsive electrodes from a subset of participants were concatenated into a matrix of the form (electrode × time × event), which was then vectorized across the electrode and time dimensions. The subset of participants who had the maximum overlap in trials were used for neural word-boundary decoding. We performed PCA on the vectorized spatiotemporal neural data to reduce the total dimensionality. The components with the highest variance that cumulatively explained more than 90% of the total variance were ultimately used as input to the regression: about 800–1,000 PCs, depending on the participant group. Extended Data Table 6 summarizes the number of unique participants, electrodes and PCs used to train each decoder.

Logistic regression was run using standard MATLAB R2024b regression functions (fitclinear) with Lasso (L1) regularization and 20-fold cross-validation. The AUC was extracted for each held-out test fold. Beta linear coefficient estimates were extracted from the trained ClassificationLinear model, and the weights of single electrodes were calculated by thresholding the percentile weight values and converting from PC space back to the original (electrode × time) space.

To characterize the temporal dynamics of decoding performance (Extended Data Fig. 9), we performed sliding-window neural word-boundary decoding. To do this, we used the same set of speech-responsive electrodes as for the fixed 0.6-s window decoding, but we used a sliding window of 0.02 s within this 0.6 s of neural response to perform the decoding. The same trials and electrodes were used for decoding across all 0.02-s windows in the same participant group and language.

We also performed single-participant neural word-boundary decoding (Extended Data Fig. 9) using all speech-responsive electrodes per participant. Single-participant word-boundary decoding was calculated similarly to the participant group decoding (with the same time window, with PCA) but included electrode responses from a single participant only. The number of electrodes included in the decoding varied by participant (electrodes: median = 47, range = 21–79). For statistical analysis comparing decoding performance across speech conditions in single participants (Extended Data Fig. 9c), a paired Wilcoxon signed-rank test was used.

For the single participants who spoke English and at least one other language (Fig. 5), we followed the same procedure as above for calculating English word-boundary decoding AUC. To assess the significance of the effect of English proficiency on neural word-boundary classification performance (in English), we used a linear model:

$$\mathrm{AUC}\sim \mathrm{proficiency}+(1|\mathrm{number}\,\mathrm{of}\,\mathrm{electrodes})$$

Construction of AAI

The acoustic ambiguity index (AAI) is based on the acoustic word-boundary decoder that takes in the spectrogram values using 0.2 s around each boundary event and outputs whether the trial corresponds to a word boundary or a within-word syllable boundary. As above, single-syllable words and words at sentence onset were removed from this analysis. For each single trial, the decoder outputs both a predicted label (0, 1) and a posterior probability score (0–1). The scores of the held-out test trials are extracted as one of the outputs of the MATLAB R2024b ClassificationLinear model predict function. The per-trial value abs(correct label – posterior probability score) is then calculated to produce a continuous measure of how accurate the classifier is on single trials, where larger values indicate that the classifier is probably predicting incorrectly. The corresponding neural word-boundary decoding is produced after (1) calculating the difference between the probability scores for single trials from neural decoding and correct label and (2) comparing this distribution between known and foreign groups for each bin.
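The per-trial AAI value reduces to a one-line computation, sketched here in Python with a hypothetical function name. It assumes that the posterior score is the probability of the class coded as 1 and does not cover the binning or the group comparison, whose details are not specified above.

```python
def acoustic_ambiguity(labels, posteriors):
    """Per-trial |correct label - posterior score|; larger values mean more ambiguous acoustics."""
    return [abs(y - p) for y, p in zip(labels, posteriors)]

# Invented labels and scores, for illustration only.
aai = acoustic_ambiguity([1, 0, 1], [0.9, 0.2, 0.3])
```

In this invented example, the third trial has the largest value because the decoder assigned a low probability to the correct class.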

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.