No one could accuse Demis Hassabis of dreaming small.

In 2016, the company he co-founded, DeepMind, shocked the world when an artificial intelligence model it created beat the best human player of the strategy game Go. Then Hassabis set his sights even higher: in 2019, he told colleagues that his goal was to win Nobel prizes with the company’s AI tools.

It took only five years for Hassabis and DeepMind’s John Jumper to do so, collecting a share of the 2024 Nobel Prize in Chemistry for creating AlphaFold, the AI that revolutionized the prediction of protein structures.

AlphaFold is just one in a string of science successes that DeepMind has achieved over the past decade. When he co-founded the company in 2010, Hassabis, a neuroscientist and game developer, says his aim was to make “a world-class scientific research lab, but in industry”. In that quest, the company sought to apply the scientific method to the development of AI, and to do so ethically and responsibly by anticipating risks and reducing potential harms. Establishing an AI ethics board was a condition of the firm’s agreement to be acquired by Google in 2014 for around US$400 million, according to media reports.

Now Google DeepMind is trying to replicate the success of AlphaFold in other fields of science. “We’re applying AI to nearly every other scientific discipline now,” says Hassabis.

Demis Hassabis co-founded DeepMind in 2010. Credit: Antonio Olmos/Guardian/eyevine

But the climate for this marriage of science and industry has changed drastically since the release of ChatGPT in 2022 — an event that Hassabis calls a “wake-up moment”. The arrival of chatbots and the large language models (LLMs) that power them led to an explosion in AI usage across society, as well as a scramble by a growing number of well-funded competitors to achieve human-level artificial general intelligence (AGI).

Google DeepMind is now racing to release commercial products — including iterations of the firm’s Gemini LLMs — almost weekly, while continuing its machine-learning research and producing science-specific models. The acceleration has made doing responsible AI harder, and some staff are unhappy with the firm’s more commercial outlook, say several former employees.

All of this raises questions about where DeepMind is headed, and whether it can achieve blockbuster successes in other fields of science.

Nobel bound

At Google DeepMind’s slick headquarters in London’s King’s Cross technology hub, gleaming geometric sculptures and the smell of espresso hang in the reception hall. Time is so precious that staff members — thought to number between 500 and 1,000 worldwide — can pick up a scooter to race the few hundred metres from one office to another.

It’s a far cry from the humble origins of the company, which sought to build general AI systems by melding ideas from neuroscience and machine learning. “They were absolutely just super geniuses,” says Joanna Bryson, a computer scientist and researcher in AI ethics at the Hertie School in Berlin. “They were these 12 guys that everybody wanted.”

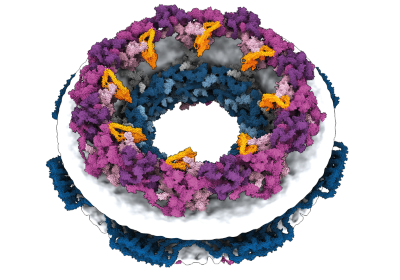

The laboratory pioneered the deep-learning AI technique, which uses simulated neurons to learn associations in data after studying real-world examples, as well as reinforcement learning, in which a model learns by trial, error and reward. After applying these to teach models how to play arcade games [1] in 2015 and master the ancient game of Go [2] in 2016, DeepMind turned its attention to its first scientific problem: predicting the 3D structure of proteins [3] from their constituent amino acids.

A member of the AlphaFold team examines a prediction of a protein structure. Credit: Alecsandra Dragoi for Nature

Hassabis first came across the puzzle of protein structure as an undergraduate at the University of Cambridge, UK, in the 1990s and noted it as being a problem that AI might one day help to solve. AI learning techniques require a database of examples as well as clear metrics of success that guide the model’s progress. Thanks to a long-standing database of known structures and an established competition that judged the accuracy of the predictions, proteins had both.

Protein folding ticked a crucial box for Hassabis: it is a ‘root node’ problem that, once solved, opens up branches of downstream research and applications. Those kinds of problem “are worth spending five years or ten years on, and loads of computers and researchers”, he says.

DeepMind released its first iteration of AlphaFold in 2018, and by 2020, its performance far outstripped that of tools from any other team. Today, a spin-off from DeepMind, Isomorphic Labs, is seeking to use AlphaFold in drug discovery. And DeepMind’s AlphaFold database of more than 200 million protein-structure predictions has been used in a range of research efforts, from improving bee immunity to disease in the face of global population declines to screening for antiparasitic compounds to treat Chagas disease, a potentially life-threatening parasitic infection [4].

Science is not just a source of problems to solve; the firm tries to approach all of its AI development in a scientific way, says Pushmeet Kohli, who leads the company’s science efforts. Researchers tend to go back to first principles for each problem and try fresh techniques, he says. Staff members at many other AI firms are more like engineers, applying ingenuity but not doing basic discovery, says Jonathan Godwin, chief executive of the AI firm Orbital Materials in London, who was a researcher at Google DeepMind until the end of 2022.

John Jumper and Pushmeet Kohli speak to researcher Olaf Ronneberger in the DeepMind offices. Credit: Alecsandra Dragoi for Nature

But replicating the success of AlphaFold will be tough: “Not many scientific endeavours work like that,” says Godwin.

Unlocking the genome

Google DeepMind is throwing its resources at several problems for which it thinks AI could speed development, and which could have “transformative impact”, says Kohli. These include weather forecasting [5] and nuclear fusion, which has the potential to become a clean, abundant energy source. The company picks projects through a strict selection process, but individual researchers can choose which to work on and how to tackle a problem, he says. AI models that work on such problems often require specialized data and researchers to program knowledge into them.

One project that shows promise, says Kohli, is AlphaGenome, which launched in June as an attempt to decipher long stretches of human non-coding DNA and predict their possible functions [6]. But the challenge is harder than for AlphaFold, because each sequence yields multiple valid functions.

Materials science is another area in which the company hopes that AI could be revolutionary. Materials are hard to model because the complex interactions of atomic nuclei and electrons can only be approximated. Learning from a database of simulated structures, DeepMind developed its GNoME model, which in 2023 predicted 400,000 potential new substances [7]. Now, Kohli says, the team is using machine learning to develop better ways to simulate electron behaviour, ones that are learnt from example interactions rather than by relying on the principles of physics. The end goal is to predict materials with specific properties, such as magnetism or superconductivity, he says. “We want to see the era where AI can basically design any material with any sort of magical property that you want, if it is possible,” he says.

John Jumper and Pushmeet Kohli in the headquarters building. Credit: Alecsandra Dragoi for Nature

AI models have a variety of known safety issues, from the risk of being used to create bioweapons to the perpetuation of racial and gender-based biases, and these come to the fore when releasing models into the world. Google DeepMind has a dedicated committee on responsibility and safety that works across the company and is consulted at each major stage of development, says Anna Koivuniemi, who runs its ‘impact accelerator’, an effort to scour society for areas in which AI could make a difference. Committee members stress-test the idea to see what could go wrong, including by consulting externally. “We take it very, very seriously,” she says.

Another advantage the firm has is that its researchers are pursuing the kind of AI that the world ultimately wants, says Godwin. “People don’t really want random videos of themselves being generated and put on a social-media network; they want limitless energy or diseases being cured,” he says.

But DeepMind now has company in the quest to use AI for science. Some firms that started out making LLMs seem to be coming around to Hassabis’ vision of AI for science. In the past two months, both OpenAI and the Paris-based AI firm Mistral have created teams dedicated to scientific discovery.

Company concerns

For AI companies and researchers, OpenAI’s 2022 release of ChatGPT changed everything. Its success was “pretty surprising to everyone”, says Hassabis.