GRPO

GRPO [9] is the RL algorithm that we use to train DeepSeek-R1-Zero and DeepSeek-R1. It was originally proposed to simplify the training process and reduce the resource consumption of proximal policy optimization (PPO) [31], which is widely used in the RL stage of LLMs [32]. The pipeline of GRPO is shown in Extended Data Fig. 2.

For each question \(q\), GRPO samples a group of outputs \(o_1, o_2, \ldots, o_G\) from the old policy \(\pi_{\theta_{\mathrm{old}}}\) and then optimizes the policy model \(\pi_\theta\) by maximizing the following objective:

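$$\begin{array}{ll} & \mathcal{J}_{\mathrm{GRPO}}(\theta)=\mathbb{E}\left[q \sim P(Q),\{o_i\}_{i=1}^{G} \sim \pi_{\theta_{\mathrm{old}}}(O|q)\right]\\ & \frac{1}{G}\sum\limits_{i=1}^{G}\left(\min \left(\frac{\pi_\theta(o_i|q)}{\pi_{\theta_{\mathrm{old}}}(o_i|q)}A_i,\,\text{clip}\left(\frac{\pi_\theta(o_i|q)}{\pi_{\theta_{\mathrm{old}}}(o_i|q)},1-\epsilon ,1+\epsilon \right)A_i\right)-\beta\, \mathbb{D}_{\mathrm{KL}}(\pi_\theta \| \pi_{\mathrm{ref}})\right),\end{array}$$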

(1)

$$\mathbb{D}_{\mathrm{KL}}(\pi_\theta \| \pi_{\mathrm{ref}})=\frac{\pi_{\mathrm{ref}}(o_i|q)}{\pi_\theta(o_i|q)}-\log \frac{\pi_{\mathrm{ref}}(o_i|q)}{\pi_\theta(o_i|q)}-1,$$

(2)

in which \(\pi_{\mathrm{ref}}\) is a reference policy, \(\epsilon\) and \(\beta\) are hyperparameters and \(A_i\) is the advantage, computed using a group of rewards \(r_1, r_2, \ldots, r_G\) corresponding to the outputs in each group:

$$A_i=\frac{r_i-\mathrm{mean}(\{r_1,r_2,\cdots ,r_G\})}{\mathrm{std}(\{r_1,r_2,\cdots ,r_G\})}.$$

(3)
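As a minimal illustration of equation (3), the group-normalized advantage can be sketched in plain Python. The function name `group_advantages`, the small constant `eps` that guards against a zero standard deviation (for example, when every output in a group receives the same reward) and the choice of the population standard deviation are assumptions made for this sketch; they are not specified in the text.

```python
import statistics


def group_advantages(rewards, eps=1e-8):
    """Normalize the rewards of one group of outputs, following equation (3).

    `eps` is an assumed numerical safeguard, not part of equation (3).
    """
    mean = statistics.fmean(rewards)
    # Population standard deviation; the estimator is an assumption here.
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]


# Four sampled outputs for one question, two of them rewarded.
advantages = group_advantages([1.0, 0.0, 0.0, 1.0])
```

Because the advantage is computed relative to the other outputs sampled for the same question, no separate value model is needed to provide a baseline.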

We give a comparison of GRPO and PPO in Supplementary Information, section 1.3.
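To make equations (1) and (2) concrete, the following sketch evaluates the objective for a single group from per-output log-probabilities. It treats each output \(o_i\) as one unit, exactly as the equations are written above; the function names and the log-probability interface are assumptions of this sketch, and a practical implementation would typically operate on token-level tensors in a deep learning framework rather than on Python lists.

```python
import math


def kl_estimate(logp_theta, logp_ref):
    """Equation (2): pi_ref/pi_theta - log(pi_ref/pi_theta) - 1, from log-probs."""
    log_ratio = logp_ref - logp_theta
    return math.exp(log_ratio) - log_ratio - 1.0


def grpo_objective(logp_theta, logp_old, logp_ref, advantages, eps, beta):
    """Equation (1) for one group: mean of clipped surrogate minus KL penalty."""
    total = 0.0
    for lt, lo, lr, adv in zip(logp_theta, logp_old, logp_ref, advantages):
        ratio = math.exp(lt - lo)                        # pi_theta / pi_theta_old
        clipped = min(max(ratio, 1.0 - eps), 1.0 + eps)  # clip(ratio, 1-eps, 1+eps)
        surrogate = min(ratio * adv, clipped * adv)
        total += surrogate - beta * kl_estimate(lt, lr)
    return total / len(advantages)
```

When the current, old and reference policies coincide, the ratio is 1 and the KL term vanishes, so the objective reduces to the mean advantage of the group.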

Reward design

The reward is the source of the training signal, which decides the direction of RL optimization. For DeepSeek-R1-Zero, we use rule-based rewards to deliver precise feedback for data in mathematical, coding and logical reasoning domains. For DeepSeek-R1, we extend this approach by incorporating both rule-based rewards for reasoning-oriented data and model-based rewards for general data, thereby enhancing the adaptability of the learning process across diverse domains.

Rule-based rewards

Our rule-based reward system mainly consists of two types of reward: accuracy rewards and format rewards.

Accuracy rewards evaluate whether the response is correct. For example, in the case of maths problems with deterministic results, the model is required to provide the final answer in a specified format (for example, within a box), enabling reliable rule-based verification of correctness. Similarly, for code competition prompts, a compiler can be used to evaluate the responses of the model against a suite of predefined test cases, thereby generating objective feedback on correctness.

Format rewards complement the accuracy reward model by enforcing specific formatting requirements. In particular, the model is incentivized to encapsulate its reasoning process within designated tags, specifically <think> and </think>. This ensures that the thought process of the model is explicitly delineated, enhancing interpretability and facilitating subsequent analysis.

$$\mathrm{Reward}_{\mathrm{rule}}=\mathrm{Reward}_{\mathrm{acc}}+\mathrm{Reward}_{\mathrm{format}}$$

(4)

The accuracy reward and the format reward are combined with the same weight. Notably, we abstain from applying neural reward models, whether outcome-based or process-based, to reasoning tasks. This decision is predicated on our observation that neural reward models are susceptible to reward hacking during large-scale RL. Moreover, retraining such models necessitates substantial computational resources and introduces further complexity into the training pipeline, thereby complicating the overall optimization process.
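The rule-based reward of equation (4) can be sketched as follows for a maths-style prompt. This is an illustration under stated assumptions rather than the checker used in training: the 0/1 reward values, the `\boxed{...}` convention, the exact string comparison against the reference answer and all function names are hypothetical choices made for the example.

```python
import re

# Reasoning wrapped in <think>...</think>, followed by a non-empty answer.
THINK_PATTERN = re.compile(r"^<think>.*?</think>.+$", re.DOTALL)
# A boxed final answer without nested braces (assumed answer format).
BOXED_PATTERN = re.compile(r"\\boxed\{([^{}]*)\}")


def format_reward(response):
    """Return 1.0 if the response follows the <think>...</think> layout."""
    return 1.0 if THINK_PATTERN.match(response.strip()) else 0.0


def accuracy_reward(response, reference):
    """Return 1.0 if the last boxed answer equals the reference string."""
    answers = BOXED_PATTERN.findall(response)
    if not answers:
        return 0.0
    return 1.0 if answers[-1].strip() == reference.strip() else 0.0


def rule_reward(response, reference):
    """Equation (4): accuracy and format rewards summed with equal weight."""
    return accuracy_reward(response, reference) + format_reward(response)
```

For example, `rule_reward("<think>2+2=4</think>The answer is \\boxed{4}.", "4")` receives both rewards, whereas a correct answer without the tags receives only the accuracy reward.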

Model-based rewards

For general data, we resort to reward models to capture human preferences in complex and nuanced scenarios. We build on the DeepSeek-V3 pipeline and use a similar distribution of preference pairs and training prompts. For helpfulness, we focus exclusively on the final summary, ensuring that the assessment emphasizes the use and relevance of the response to the user while minimizing interference with the underlying reasoning process. For harmlessness, we evaluate the entire response of the model, including both the reasoning process and the summary, to identify and mitigate any potential risks, biases or harmful content that may arise during the generation process.

Helpful reward model

For helpful reward model training, we first generate preference pairs by prompting DeepSeek-V3 using the Arena-Hard prompt format, listed in Supplementary Information, section 2.2. Each pair consists of a user query along with two candidate responses. For each preference pair, we query DeepSeek-V3 four times, randomly assigning the responses as either Response A or Response B to mitigate positional bias. The final preference score is determined by averaging the four independent judgments, and we retain only those pairs for which the score difference (Δ) exceeds 1 to ensure meaningful distinctions. Furthermore, to minimize length-related biases, we ensure that the chosen and rejected responses of the whole dataset have comparable lengths. In total, we curated 66,000 data pairs for training the reward model. The prompts used in this dataset are all non-reasoning questions and are sourced either from publicly available open-source datasets or from users who have explicitly consented to share their data for the purpose of model improvement. The architecture of our reward model is consistent with that of DeepSeek-R1, with the addition of a reward head designed to predict scalar preference scores.

$$\mathrm{Reward}_{\mathrm{helpful}}=\mathrm{RM}_{\mathrm{helpful}}(\mathrm{Response}_{\mathrm{A}},\mathrm{Response}_{\mathrm{B}})$$

(5)

The helpful reward models were trained for a single epoch over the training dataset, with a batch size of 256 and a learning rate of \(6\times 10^{-6}\). The maximum sequence length during training is set to 8,192 tokens, whereas no explicit limit is imposed during reward model inference.
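The construction of preference pairs described above (four judge queries with randomized response positions, averaging, and a threshold on Δ) can be sketched as follows. The `judge` callable, which is assumed to return one score per displayed response, stands in for the DeepSeek-V3 query and is hypothetical, as are the function names and the dictionary layout of the returned pair.

```python
import random
from statistics import fmean


def preference_delta(judge, query, resp_1, resp_2, n_queries=4, rng=None):
    """Average score difference (resp_1 minus resp_2) over randomized positions.

    `judge(query, response_a, response_b)` is a hypothetical callable that
    returns a tuple (score_a, score_b).
    """
    rng = rng or random.Random(0)
    deltas = []
    for _ in range(n_queries):
        if rng.random() < 0.5:
            score_a, score_b = judge(query, resp_1, resp_2)
            deltas.append(score_a - score_b)
        else:
            # Swap the display order to average out positional bias.
            score_a, score_b = judge(query, resp_2, resp_1)
            deltas.append(score_b - score_a)
    return fmean(deltas)


def build_pair(judge, query, resp_1, resp_2, threshold=1.0):
    """Keep the pair only if the averaged score difference exceeds the threshold."""
    delta = preference_delta(judge, query, resp_1, resp_2)
    if abs(delta) <= threshold:
        return None
    chosen, rejected = (resp_1, resp_2) if delta > 0 else (resp_2, resp_1)
    return {"query": query, "chosen": chosen, "rejected": rejected}
```

The length balancing between chosen and rejected responses mentioned in the text would be applied afterwards over the whole dataset and is not shown here.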

Safety reward model

To assess and improve model safety, we curated a dataset of 106,000 prompts with model-generated responses annotated as ‘safe’ or ‘unsafe’ according to predefined safety guidelines. Unlike the pairwise loss used in the helpfulness reward model, the safety reward model was trained using a pointwise methodology to distinguish between safe and unsafe responses. The training hyperparameters are the same as those of the helpful reward model.

$$\mathrm{Reward}_{\text{safety}}=\mathrm{RM}_{\text{safety}}(\mathrm{Response})$$

(6)
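The difference between pairwise and pointwise training can be illustrated with two common loss functions. The text does not state the exact losses, so both forms below are assumptions: a Bradley-Terry style log-sigmoid loss for the pairwise case and binary cross-entropy on a single scalar score for the pointwise case.

```python
import math


def _softplus_neg(x):
    """Numerically stable log(1 + exp(-x)), that is, -log(sigmoid(x))."""
    return max(-x, 0.0) + math.log1p(math.exp(-abs(x)))


def pairwise_loss(score_chosen, score_rejected):
    """Assumed pairwise form: -log sigmoid(score_chosen - score_rejected)."""
    return _softplus_neg(score_chosen - score_rejected)


def pointwise_loss(score, is_safe):
    """Assumed pointwise form: binary cross-entropy with label safe or unsafe."""
    return _softplus_neg(score if is_safe else -score)
```

The pairwise loss depends only on the difference between two scores for the same query, whereas the pointwise loss ties each score to an absolute label, which matches a dataset annotated response by response.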

For general queries, each instance is categorized as belonging to either the safety dataset or the helpfulness dataset. The general reward, \(\mathrm{Reward}_{\mathrm{general}}\), assigned to each query corresponds to the respective reward defined in the associated dataset.

Training details

Training details of DeepSeek-R1-Zero

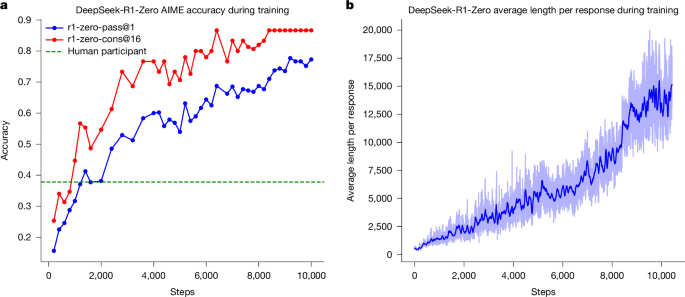

To train DeepSeek-R1-Zero, we set the learning rate to \(3\times 10^{-6}\), the Kullback–Leibler (KL) coefficient to 0.001 and the sampling temperature to 1 for rollout. For each question, we sample 16 outputs with a maximum length of 32,768 tokens before the 8.2k step and 65,536 tokens afterward. As a result, both the performance and response length of DeepSeek-R1-Zero exhibit a substantial jump at the 8.2k step, with training continuing for a total of 10,400 steps, corresponding to 1.6 training epochs. Each training step consists of 32 unique questions, resulting in a training batch size of 512 per step. Every 400 steps, we replace the reference model with the latest policy model. To accelerate training, each rollout generates 8,192 outputs, which are randomly split into 16 minibatches and trained for only a single inner epoch.
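The batch arithmetic and schedule in this paragraph can be checked with a short sketch. The constants are taken from the text; the constant and function names, the zero-based step indexing and the reading that each of the 16 minibatches corresponds to one training step are assumptions of this example.

```python
# Values quoted from the text.
QUESTIONS_PER_STEP = 32
OUTPUTS_PER_QUESTION = 16
OUTPUTS_PER_ROLLOUT = 8_192
MINIBATCHES_PER_ROLLOUT = 16
REFERENCE_REFRESH_INTERVAL = 400
LENGTH_SWITCH_STEP = 8_200  # "the 8.2k step"

# Derived quantities.
BATCH_SIZE = QUESTIONS_PER_STEP * OUTPUTS_PER_QUESTION               # 32 * 16
MINIBATCH_SIZE = OUTPUTS_PER_ROLLOUT // MINIBATCHES_PER_ROLLOUT      # 8,192 / 16
QUESTIONS_PER_ROLLOUT = OUTPUTS_PER_ROLLOUT // OUTPUTS_PER_QUESTION  # 8,192 / 16


def max_response_tokens(step):
    """Maximum rollout length: 32,768 tokens before the switch, 65,536 after."""
    return 32_768 if step < LENGTH_SWITCH_STEP else 65_536


def refresh_reference(step):
    """True on steps at which the reference model is replaced by the policy."""
    return step > 0 and step % REFERENCE_REFRESH_INTERVAL == 0
```

Under these assumptions, one minibatch of 512 outputs matches the stated batch size of 512 per step, so a single rollout supplies the data for 16 training steps.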

Training details of the first RL stage

In the first stage of RL, we set the learning rate to \(3\times 10^{-6}\), the KL coefficient to 0.001, the GRPO clip ratio ϵ to 10 and the sampling temperature to 1 for rollout. For each question, we sample 16 outputs with a maximum length of 32,768 tokens. Each training step consists of 32 unique questions, resulting in a training batch size of 512 per step. Every 400 steps, we replace the reference model with the latest policy model. To accelerate training, each rollout generates 8,192 outputs, which are randomly split into 16 minibatches and trained for only a single inner epoch. However, to mitigate the issue of language mixing, we introduce a language consistency reward during RL training, which is calculated as the proportion of target language words in the CoT.

$$\mathrm{Reward}_{\mathrm{language}}=\frac{\mathrm{Num}(\mathrm{Words}_{\mathrm{target}})}{\mathrm{Num}(\mathrm{Words})}$$

(7)

Although ablation experiments in Supplementary Information, section 2.6 show that such alignment results in a slight degradation in the performance of the model, this reward aligns with human preferences, making the output more readable. We apply the language consistency reward to both reasoning and non-reasoning data by directly adding it to the final reward.
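Equation (7) amounts to a simple word-level ratio, sketched below. How words are segmented and how the language of a word is identified are not specified in the text; whitespace splitting and the caller-supplied predicate `is_target_word` are assumptions of this example, and the ASCII check used in the usage line is only a crude stand-in for a real English detector.

```python
def language_consistency_reward(cot, is_target_word):
    """Equation (7): fraction of words in the CoT that are in the target language.

    `is_target_word` is a hypothetical predicate supplied by the caller.
    """
    words = cot.split()  # whitespace tokenization is an assumption
    if not words:
        return 0.0
    return sum(1 for word in words if is_target_word(word)) / len(words)


# Crude illustration: treat pure-ASCII words as English.
reward = language_consistency_reward("the answer 是 four", str.isascii)
```

In the usage line, three of the four words pass the check, so the reward is 0.75.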

Note that the clip ratio plays a crucial role in training. A lower value can lead to the truncation of gradients for a large number of tokens, thereby degrading the performance of the model, whereas a higher value may cause instability during training. Details of RL data used in this stage are provided in Supplementary Information, section 2.3.
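The effect of the clip ratio on gradients follows from the form of equation (1): the clipped term is selected by the minimum, and therefore contributes no gradient, when the advantage is positive and the ratio exceeds \(1+\epsilon\), or when the advantage is negative and the ratio falls below \(1-\epsilon\). The helper below encodes this condition; its name is an assumption of this sketch.

```python
def is_clipped(ratio, advantage, eps):
    """True if the clipped branch of equation (1) is active for this sample.

    An active clipped branch is constant in the policy parameters, so the
    sample contributes no gradient through the surrogate term.
    """
    if advantage > 0:
        return ratio > 1.0 + eps
    if advantage < 0:
        return ratio < 1.0 - eps
    return False  # zero advantage: the surrogate term is zero either way


# The same ratio is clipped under a small eps but not under eps = 10.
small = is_clipped(1.5, 1.0, eps=0.2)
large = is_clipped(1.5, 1.0, eps=10.0)
```

With \(\epsilon = 10\), the lower bound \(1-\epsilon\) is negative and can never be crossed by a probability ratio, so only ratios above 11 with positive advantage are clipped.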

Training details of the second RL stage

Specifically, we train the model using a combination of reward signals and diverse prompt distributions. For reasoning data, we follow the methodology outlined in DeepSeek-R1-Zero, which uses rule-based rewards to guide learning in mathematical, coding and logical reasoning domains. During the training process, we observe that CoT often exhibits language mixing, particularly when RL prompts involve several languages. For general data, we use reward models to guide training. Ultimately, the integration of reward signals with diverse data distributions enables us to develop a model that not only excels in reasoning but also assigns priority to helpfulness and harmlessness. Given a batch of data, the reward can be formulated as

$$\mathrm{Reward}=\mathrm{Reward}_{\mathrm{reasoning}}+\mathrm{Reward}_{\mathrm{general}}+\mathrm{Reward}_{\mathrm{language}}$$

(8)

in which

$$\mathrm{Reward}_{\mathrm{reasoning}}=\mathrm{Reward}_{\mathrm{rule}}$$

(9)

$$\mathrm{Reward}_{\mathrm{general}}=\mathrm{Reward}_{\text{reward\_model}}+\mathrm{Reward}_{\text{format}}$$

(10)

The second stage of RL retains most of the parameters from the first stage, with the key difference being a reduced temperature of 0.7, as we find that higher temperatures in this stage lead to incoherent generation. The stage comprises a total of 1,700 training steps, during which general instruction data and preference-based rewards are incorporated exclusively in the final 400 steps. We find that more training steps with the model-based preference reward signal may lead to reward hacking, which is documented in Supplementary Information, section 2.5.
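Equations (8) to (10) and the step schedule of this stage can be summarized in a short sketch. Treating the reward terms that do not apply to a given sample as zero, the `sample_type` labels, the zero-based step indexing and all names are assumptions of this example rather than details given in the text.

```python
TOTAL_STEPS = 1_700
GENERAL_DATA_STEPS = 400  # general data is used only in the final 400 steps


def total_reward(sample_type, rule=0.0, reward_model=0.0, fmt=0.0, language=0.0):
    """Equation (8) for one sample, with inapplicable terms taken as zero."""
    if sample_type == "reasoning":
        return rule + language                # equation (9) plus language reward
    if sample_type == "general":
        return reward_model + fmt + language  # equation (10) plus language reward
    raise ValueError(f"unknown sample type: {sample_type}")


def uses_general_data(step):
    """True for the final 400 of the 1,700 steps (zero-based indexing assumed)."""
    return TOTAL_STEPS - GENERAL_DATA_STEPS <= step < TOTAL_STEPS
```

Under this indexing, model-based preference rewards first enter training at step 1,300, which limits the exposure of the policy to the reward model.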