Lavoisier, A. Traité Élémentaire de Chimie (Elementary Treatise on Chemistry) (Cuchet, 1789).

Do, K., Tran, T. & Venkatesh, S. Graph transformation policy network for chemical reaction prediction. In Proc. 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining (KDD’19) 750–760 (ACM, 2019).

Jin, W., Coley, C., Barzilay, R. & Jaakkola, T. Predicting organic reaction outcomes with Weisfeiler-Lehman network. In Proc. 31st International Conference on Neural Information Processing Systems (NIPS’17) 2604–2613 (ACM, 2017).

Bradshaw, J., Kusner, M. J., Paige, B., Segler, M. H. & Hernández-Lobato, J. M. A generative model for electron paths. In International Conference on Learning Representations (2019).

Coley, C. W. et al. A graph-convolutional neural network model for the prediction of chemical reactivity. Chem. Sci. 10, 370–377 (2019).

Schwaller, P. et al. Molecular Transformer: a model for uncertainty-calibrated chemical reaction prediction. ACS Cent. Sci. 5, 1572–1583 (2019).

Tetko, I. V., Karpov, P., Van Deursen, R. & Godin, G. State-of-the-art augmented NLP transformer models for direct and single-step retrosynthesis. Nat. Commun. 11, 5575 (2020).

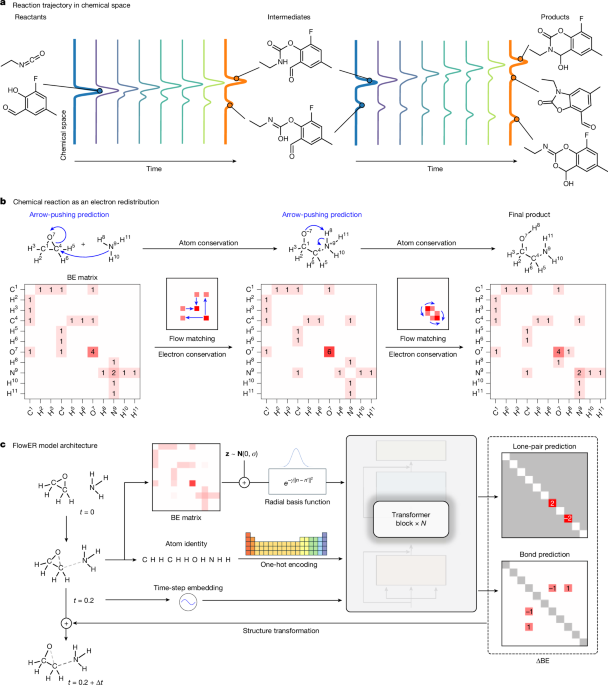

Bi, H. et al. Non-autoregressive electron redistribution modeling for reaction prediction. In Proc. 38th International Conference on Machine Learning 904–913 (PMLR, 2021).

Tu, Z. & Coley, C. W. Permutation invariant graph-to-sequence model for template-free retrosynthesis and reaction prediction. J. Chem. Inf. Model. 62, 3503–3513 (2022).

Wang, X. et al. From theory to experiment: transformer-based generation enables rapid discovery of novel reactions. J. Cheminform. 14, 60 (2022).

Schwaller, P. et al. Machine intelligence for chemical reaction space. Wiley Interdiscip. Rev. Comput. Mol. Sci. 12, e1604 (2022).

Joung, J. F. et al. Reproducing reaction mechanisms with machine-learning models trained on a large-scale mechanistic dataset. Angew. Chem. Int. Ed. 63, e202411296 (2024).

Bradshaw, J. et al. Challenging reaction prediction models to generalize to novel chemistry. ACS Cent. Sci. 11, 539–549 (2025).

Tong, A. et al. Conditional flow matching: simulation-free dynamic optimal transport. Preprint at https://arxiv.org/abs/2302.00482v1 (2023).

Lipman, Y., Chen, R. T. Q., Ben-Hamu, H., Nickel, M. & Le, M. Flow matching for generative modeling. In International Conference on Learning Representations (2023).

Liu, X., Gong, C. & Liu, Q. Flow straight and fast: learning to generate and transfer data with rectified flow. In International Conference on Learning Representations (2023).

Dugundji, J. & Ugi, I. An algebraic model of constitutional chemistry as a basis for chemical computer programs. In Computers in Chemistry 19–64 (Springer, 1973).

Ugi, I. et al. Computer-assisted solution of chemical problems – the historical development and the present state of the art of a new discipline of chemistry. Angew. Chem. Int. Ed. Engl. 32, 201–227 (1993).

Tu, Z., Stuyver, T. & Coley, C. W. Predictive chemistry: machine learning for reaction deployment, reaction development, and reaction discovery. Chem. Sci. 14, 226–244 (2023).

Chen, J. H. & Baldi, P. No electron left behind: a rule-based expert system to predict chemical reactions and reaction mechanisms. J. Chem. Inf. Model. 49, 2034–2043 (2009).

Kayala, M. A., Azencott, C.-A., Chen, J. H. & Baldi, P. Learning to predict chemical reactions. J. Chem. Inf. Model. 51, 2209–2222 (2011).

Kayala, M. A. & Baldi, P. ReactionPredictor: prediction of complex chemical reactions at the mechanistic level using machine learning. J. Chem. Inf. Model. 52, 2526–2540 (2012).

Fooshee, D. et al. Deep learning for chemical reaction prediction. Mol. Syst. Des. Eng. 3, 442–452 (2018).

Tavakoli, M. et al. AI for interpretable chemistry: predicting radical mechanistic pathways via contrastive learning. In Proc. 37th International Conference on Neural Information Processing Systems (NIPS’23) (ACM, 2024).

Song, Y. et al. Score-based generative modeling through stochastic differential equations. In International Conference on Learning Representations (2021).

Ho, J., Jain, A. & Abbeel, P. Denoising diffusion probabilistic models. In Proc. 34th International Conference on Neural Information Processing Systems (NIPS’20) 6840–6851 (ACM, 2020).

Watson, J. L. et al. De novo design of protein structure and function with RFdiffusion. Nature 620, 1089–1100 (2023).

Ingraham, J. B. et al. Illuminating protein space with a programmable generative model. Nature 623, 1070–1078 (2023).

Krishna, R. et al. Generalized biomolecular modeling and design with RoseTTAFold all-atom. Science 384, eadl2528 (2024).

Hoogeboom, E., Satorras, V. G., Vignac, C. & Welling, M. Equivariant diffusion for molecule generation in 3D. In Proc. 39th International Conference on Machine Learning 8867–8887 (PMLR, 2022).

Xu, M., Powers, A. S., Dror, R. O., Ermon, S. & Leskovec, J. Geometric latent diffusion models for 3D molecule generation. In Proc. 40th International Conference on Machine Learning 38592–38610 (PMLR, 2023).

Igashov, I., Schneuing, A., Segler, M., Bronstein, M. & Correia, B. RetroBridge: modeling retrosynthesis with Markov bridges. In International Conference on Learning Representations (2024).

Wang, Y. et al. RetroDiff: retrosynthesis as multi-stage distribution interpolation. Preprint at https://arxiv.org/html/2311.14077v1 (2023).

Kim, S., Woo, J. & Kim, W. Y. Diffusion-based generative AI for exploring transition states from 2D molecular graphs. Nat. Commun. 15, 341 (2024).

Duan, C., Du, Y., Jia, H. & Kulik, H. J. Accurate transition state generation with an object-aware equivariant elementary reaction diffusion model. Nat. Comput. Sci. 3, 1045–1055 (2023).

Dai, H., Li, C., Coley, C., Dai, B. & Song, L. Retrosynthesis prediction with conditional graph logic network. In Proc. 33rd International Conference on Neural Information Processing Systems 8872–8882 (ACM, 2019).

O’Boyle, N. & Dalke, A. DeepSMILES: an adaptation of SMILES for use in machine-learning of chemical structures. Preprint at https://chemrxiv.org/engage/chemrxiv/article-details/60c73ed6567dfe7e5fec388d (2018).

Krenn, M., Häse, F., Nigam, A., Friederich, P. & Aspuru-Guzik, A. Self-referencing embedded strings (SELFIES): a 100% robust molecular string representation. Mach. Learn. Sci. Technol. 1, 045024 (2020).

NextMove Software. Pistachio. https://www.nextmovesoftware.com/pistachio.html.

Kotian, P. L. et al. Human plasma kallikrein inhibitors. https://patents.google.com/patent/US20240150295A1/en (2024).

Kotian, P. L. et al. Human plasma kallikrein inhibitors. https://patents.google.com/patent/US20240150296A1/en (2024).

Zhao, Q. & Savoie, B. M. Simultaneously improving reaction coverage and computational cost in automated reaction prediction tasks. Nat. Comput. Sci. 1, 479–490 (2021).

Suleimanov, Y. V. & Green, W. H. Automated discovery of elementary chemical reaction steps using freezing string and Berny optimization methods. J. Chem. Theory Comput. 11, 4248–4259 (2015).

Zimmerman, P. M. Automated discovery of chemically reasonable elementary reaction steps. J. Comput. Chem. 34, 1385–1392 (2013).

Ismail, I., Stuttaford-Fowler, H. B. V. A., Ochan Ashok, C., Robertson, C. & Habershon, S. Automatic proposal of multistep reaction mechanisms using a graph-driven search. J. Phys. Chem. A 123, 3407–3417 (2019).

Pesciullesi, G., Schwaller, P., Laino, T. & Reymond, J.-L. Transfer learning enables the molecular transformer to predict regio- and stereoselective reactions on carbohydrates. Nat. Commun. 11, 4874 (2020).

Wang, L., Zhang, C., Bai, R., Li, J. & Duan, H. Heck reaction prediction using a transformer model based on a transfer learning strategy. Chem. Commun. 56, 9368–9371 (2020).

Zhang, Y. et al. Data augmentation and transfer learning strategies for reaction prediction in low chemical data regimes. Org. Chem. Front. 8, 1415–1423 (2021).

Luo, Y. et al. An empirical study of catastrophic forgetting in large language models during continual fine-tuning. Preprint at https://arxiv.org/abs/2308.08747 (2025).

Tavakoli, M., Chiu, Y. T. T., Baldi, P., Carlton, A. M. & Van Vranken, D. RMechDB: a public database of elementary radical reaction steps. J. Chem. Inf. Model. 63, 1114–1123 (2023).

Tavakoli, M. et al. PMechDB: a public database of elementary polar reaction steps. J. Chem. Inf. Model. 64, 1975–1983 (2024).

Becke, A. D. Density functional thermochemistry. III. The role of exact exchange. J. Chem. Phys. 98, 5648–5652 (1993).

Krishnan, R., Binkley, J. S., Seeger, R. & Pople, J. A. Self-consistent molecular orbital methods. XX. A basis set for correlated wave functions. J. Chem. Phys. 72, 650–654 (1980).

Marenich, A. V., Cramer, C. J. & Truhlar, D. G. Universal solvation model based on solute electron density and on a continuum model of the solvent defined by the bulk dielectric constant and atomic surface tensions. J. Phys. Chem. B 113, 6378–6396 (2009).

Neese, F., Wennmohs, F., Becker, U. & Riplinger, C. The ORCA quantum chemistry program package. J. Chem. Phys. 152, 224108 (2020).

Schütt, K. et al. SchNet: a continuous-filter convolutional neural network for modeling quantum interactions. In Proc. 31st International Conference on Neural Information Processing Systems (NIPS’17) 992–1002 (ACM, 2017).

DeBoer, C. Iteround. GitHub https://github.com/cgdeboer/iteround/ (2018).

NextMove Software. NameRxn. https://www.nextmovesoftware.com/namerxn.html.

Schwaller, P., Hoover, B., Reymond, J.-L., Strobelt, H. & Laino, T. Extraction of organic chemistry grammar from unsupervised learning of chemical reactions. Sci. Adv. 7, eabe4166 (2021).

Vaswani, A. et al. Attention is all you need. In Proc. 31st International Conference on Neural Information Processing Systems (NIPS’17) 6000–6010 (ACM, 2017).

Ying, C. et al. Do transformers really perform bad for graph representation? In Proc. 35th International Conference on Neural Information Processing Systems (NIPS’21) 28877–28888 (2021).

Joung, J. F. et al. FlowER – mechanistic datasets and model checkpoint. Figshare https://figshare.com/articles/dataset/FlowER_-_Mechanistic_datasets_and_model_checkpoint/28359407 (2025).

FongMunHong. FongMunHong/FlowER: release v1.0.0. Zenodo https://zenodo.org/records/15776086 (2025).

FongMunHong. FongMunHong/FlowER: release v2.0.0. Zenodo https://zenodo.org/records/15786107 (2025).