Behaviour and electrophysiology

We analysed an experimental dataset previously described11,59. Two male monkeys (T and O, Macaca mulatta, 6 and 9 years of age) were used in the experiments. Experimental procedures were in accordance with the US National Institutes of Health (NIH) Guide for the Care and Use of Laboratory Animals, the Society for Neuroscience Guidelines and Policies, and Stanford University Animal Care and Use Committee (8856).

The monkeys were trained to discriminate the dominant colour in a static checkerboard stimulus composed of red and green squares and report their choice by touching the corresponding target. At the start of each trial, a monkey touched a central target and fixated on a cross above the central target. After a short holding period (300–485 ms), red and green targets appeared on the left and right sides of the screen. The colours of each side were randomized on each trial. After another short holding period (400–1,000 ms), the checkerboard stimulus appeared on the screen at the fixation cross and the monkey had to move its hand to the target matching the dominant colour in the checkerboard. Monkeys were free to respond when ready. Monkeys were rewarded for the correct choices and received longer inter-trial delays for the incorrect choices. Hand position was monitored by taping an infrared reflective bead to the index or middle fingers of each hand and used for measurement of speed and to estimate reaction time60. We used a real-time system combining xPC Target (v5.3) from MATLAB R2012b to control task timing and state transitions. This system communicated with a separate computer running Psychtoolbox (v3.0.9) on MATLAB R2012b for stimulus display.

The difficulty of the task was parameterized by an unsigned stimulus coherence expressed as the absolute difference between the number of red (R) and green (G) squares normalized by the total number of squares ∣R − G∣/(R + G). We used a 15 × 15 checkerboard, which led to a total of 225 squares. The task was performed with seven different unsigned coherence levels for monkey T and eight levels for monkey O. For each stimulus condition, our analysis required at least a small fraction of incorrect choices so that the neural activity fully explored the decision manifold. Therefore, we only analysed the four most difficult stimulus conditions for each monkey, which had sufficient number of error trials. To obtain sufficient data for the model fitting and validation, we merged these four stimulus conditions into two groups combining two easier conditions into one group and two harder conditions into another group. We refer to these two groups as easy and hard stimulus difficulties. As PMd neurons are selective for the chosen side but not for colour11, we further divided the trials according to the side indicated by the stimulus (left or right) for each stimulus difficulty (easy or hard), resulting in four analysed conditions in total.

We used the cerebus system (Blackrock Microsystems) and the Central software (v6.0) to record neural activity in the PMd with a linear multi-contact electrode (U-probe) with 16 channels. After rigorous online sorting in Central, we used offline spike sorting through a combination of MATLAB (MatClust v1.7.0.0) and Plexon offline sorter (v3) to identify single neurons and multi-units. The average yield was approximately 16 and approximately 9 neurons per session for monkey T and monkey O, respectively, which primarily were well-isolated single units.

Selection of units for the analyses

After spike sorting and quality control, we had 546 and 450 single neurons and multi-units recorded from monkeys T and O, respectively. From this dataset, we selected units for our analyses based on three criteria: (1) trial-averaged firing rate traces sorted by the chosen side (left versus right) reach 15 Hz for at least one reach direction at any time between stimulus onset and median reaction time; (2) the total number of trials across all conditions is at least 560; and (3) selectivity index for the chosen side is greater than 0.6 for monkey T and 0.55 for monkey O. The first two criteria ensure that a unit yields a sufficiently large number of spikes for model fitting18, and the third criterion selects for units with decision-related activity.

For the first criterion, we used trial-averaged firing rate traces aligned to stimulus onset (PSTH) sorted by the chosen side, obtained by averaging over trials the spike counts measured in 75-ms bins sliding at 10-ms steps. For the third criterion, we measured the spike count of each neuron on each trial in a (0.2–0.35 s) window aligned to stimulus onset. Selectivity index was defined as the area under the receiver operating characteristic curve for discriminating left versus right chosen side based on the spike counts. Selectivity index ranges between 0.5 (no choice selectivity) and 1. For each monkey, we imposed a selectivity index threshold at the median across all neurons (0.6 for monkey T and 0.55 for monkey O), leading to selecting half of all neurons in each monkey. This criterion implies that analysed neurons had overall lower choice selectivity in monkey O than in monkey T, because choice selectivity was generally lower for neurons from monkey O in our dataset.

For monkey T and monkey O, 128 and 88 units, respectively, passed all three selection criteria and were used in single-neuron analyses. The majority were well-isolated single neurons (127 out of 128 units (99%) for monkey T, and 76 out of 88 (86%) for monkey O), and the rest were multi-units. For population analyses, we included sessions that had at least 3 of the selected single units recorded simultaneously, yielding 15 populations for each monkey.

On each trial, we analysed PMd activity from 120 ms after stimulus onset (the appearance of a checkerboard stimulus on the screen) until the reaction time (the hand leaving the central target), which was estimated at the first time after checkerboard onset when speed of the hand was above 10% of the maximum speed for that trial. The delay of 120 ms was chosen to account for the lag in PMd response to the stimulus. We verified that the model fitting results were the same for a 80–120-ms range of delays.

Flexible inference framework

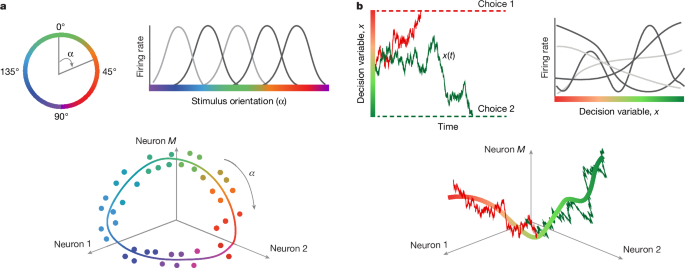

We implemented our computational framework as a Python package NeuralFlow, which is publicly available online61. We modelled latent neural dynamics x(t) as a stochastic non-linear dynamical system defined by the Langevin equation19 (equation (1)) on the domain x ∈ [−1; 1]. In this equation, the potential Φ(x) gives rise to a deterministic force flow field F(x) = −dΦ(x)/dx. As in one dimension, there are no rotational forces and any force results from the gradient of a potential, representing the flow field via potential does not restrict our model. In practice, we directly optimized the force F(x) and then computed Φ(x) from the force for visualization. The term ξ(t) is a white Gaussian noise \(\langle \xi (t)\rangle =0,\langle \xi (t)\xi (t^\prime )\rangle =\delta (t-t^\prime )\) with the magnitude D. In equation (1), we scaled the potential with D, which makes the equilibrium probability density of the Langevin dynamics invariant to the noise magnitude. This parametrization is equivalent to measuring the potential height in units of D and does not restrict the space of dynamical systems spanned by our models. We scaled all potentials by D after fitting for visualization and comparisons across conditions, neurons and populations. At the start of each trial, the initial latent state x0 is sampled from a distribution with probability density p0(x).

We modelled spikes of each neuron as an inhomogeneous Poisson process with instantaneous firing rate λ(t) = f(x(t)) that depends on the current latent state via a neuron-specific tuning function f(x). In population models, all neurons follow the same latent dynamics x(t) and each neuron i has a unique tuning function fi(x) (i = 1…M, where M is the number of neurons in the population). In this case, the population dynamics x(t) are shared by all neurons, and the tuning functions fi(x) jointly define the non-linear embedding of these dynamics into the neural population state space. Thus, the model was specified by a set of continuous functions: the potential Φ(x), the initial state distribution p0(x), a collection of tuning functions fi(x) and a scalar noise magnitude D. We inferred all model components θ = Φ(x), p0(x), fi(x), D from spike data \(\mathcalY(t)\).

The spike data consisted of multiple trials \(\mathcalY(t)=\Y_k(t)\\) (k = 1, 2…K, where K is the number of trials), and the time argument in Yk(t) indicates that the data are a sequence of event times on each trial. For independent trials, the total data likelihood is a product of likelihoods of individual trials

$$\log \mathscrL[\mathcalY(t)| \theta ]=\mathop\sum \limits_k=1^K\log \mathscrL[Y_k(t)| \theta ].$$

(2)

Therefore, here we consider data for a single trial Yk(t) and omit the trial index to simplify notation. For each trial, Y(t) = y0, y1, … , yN, yE is a marked point process, that is, a sequence of discrete observation events. Each observation is a pair yj = (tj, ij), where tj is the time of event j and ij is the type of this event. The first and last events mark the trial start time t0 and trial end time tE, and the N remaining events (j = 1, …N) are the spike observations where tj is the time of jth spike and ij is the index of the neuron that emitted this spike (ij ∈ 1, …M, where M is the number of simultaneously fitted neurons). The events are ordered according to their times.

We fitted the model by maximizing the data log-likelihood \(\log \mathscrL[\mathcalY(t)| \theta ]\) over the space of continuous functions18,19 (Supplementary Methods 2.1). The likelihood for each trial is a conditional probability density of observing the data Y(t) given the model θ marginalized over all possible latent trajectories:

$$\mathscrL[Y(t)| \theta ]=\int \,\mathcalD\mathcalX(t)\ P(\mathcalX(t),Y(t)| \theta ).$$

(3)

Here \(P(\mathcalX(t),Y(t)| \theta )\) is a joint probability density of observing the spike data Y(t) and a continuous latent trajectory \(\mathcalX(t)\) given the model θ, and the path integral is performed over all possible latent trajectories. We omit the conditioning on θ in subsequent expressions for the probability densities of the data and latent states to simplify notation.

We derived the likelihood functional for non-stationary latent Langevin dynamics as previously described19. In brief, in a reaction-time decision-making task, a participant reports the choice as soon as the neural trajectory reaches a decision boundary for the first time. Thus, trials have variable durations defined by the neural dynamics itself, and the latent trajectory always terminates at one of the decision boundaries at the trial end. Accordingly, the likelihood calculation must integrate over all latent paths that terminate at one of the boundaries at the trial end time tE and do not reach a boundary at earlier times. Two components in our framework account for the statistics of latent trajectories in this case. First, the absorbing boundary conditions ensure that latent trajectories reaching a boundary before the trial end do not contribute to the likelihood. Second, the absorption operator \(p(A| x_t_E)\) enforces that the likelihood includes only trajectories terminating on the boundaries at the trial end time. Without the absorption operator, the likelihood includes all trajectories that terminate anywhere in the latent space and do not reach the domain boundaries before the trial end. Omitting either of these components results in incorrect inference, in which erroneous features arise in the dynamics due to non-stationary data distribution19.

Here we provide a brief exposition of the likelihood calculation. The detailed mathematical derivation and extensive numerical testing of the non-parametric inference of non-stationary latent Langevin dynamics have been presented in our previous work19. In this study, we have introduced non-parametric inference of tuning functions fi(x) simultaneously with latent dynamics, as described below. To compute the path integral in equation (3), we consider a discretized latent trajectory \(X(t)=\x_t_,x_t_1,\ldots ,x_t_N,x_t_E\\), which is a discrete set of points along a continuous path \(\mathcalX(t)\) at each of the observation times t0, t1, …, tN, tE. Once we calculate the joint probability density P(X(t), Y(t)) of the discretized trajectory and data, we can obtain the data likelihood by marginalization over all discretized latent trajectories:

$$\mathscrL=\int _x_t_\int _x_t_1\ldots \int _x_t_N\int _x_t_E\rmdx_t_\ldots \rmdx_t_EP(X(t),Y(t)).$$

(4)

We do not bin spikes but process data spike by spike in continuous time. Accordingly, the calculation of the joint probability density P(X(t), Y(t)) must account for the spikes observed at times Y(t) = tj and also for the absence of spike observations during interspike intervals (ISIs; tj−1, tj). Using the Markov property of the latent Langevin dynamics in equation (1) and conditional independence of spike observations, the joint probability density P(X(t), Y(t)) can be factorized19:

$$P(X(t),Y(t))=p(x_t_)\left(\mathop\prod \limits_j=1^Np(y_j| x_t_j)p(x_t_j| x_t_j-1)\right)p(x_t_E| x_t_N)p(A| x_t_E).$$

(5)

Here the terms \(p(y_j| x_t_j)\) represent the probability density of observed spikes, and the terms \(p(x_t_j| x_t_j-1)\) represent the transition probability density of latent states that accounts for the absence of spike observations during ISIs. Each term \(p(y_j|x_t_j)\rmdt\) is the probability of observing a spike from neuron ij within infinitesimal dt of time tj given the latent state \(x_t_j\), hence \(p(y_j| x_t_j)\,=\,f_i_j(x_t_j)\) by the definition of the instantaneous Poisson firing rate. \(p(x_t_j| x_t_j-1)\) is the transition probability density from \(x_t_j-1\) to \(x_t_j\) during the time interval between the adjacent spike observations, which also accounts for the absence of spikes during each ISI in the data. This transition probability density decays with time at a rate given by the Poisson firing rate of all neurons, because it becomes less likely to observe no spikes for longer time intervals. \(p(x_t_)\) is the probability density of the initial latent state. Finally, the term \(p(A| x_t_E)\) represents the absorption operator, which ensures that only trajectories terminating at one of the domain boundaries at time tE contribute to the likelihood19.

The discretized latent trajectory \(X(t)=\x_t_,x_t_1,…,x_t_N,x_t_E\\) is obtained by marginalizing the continuous trajectory \(\mathcalX(t)\) over all latent paths connecting \(x_t_j-1\) and \(x_t_j\) during each ISI. These marginalizations are implicit in the transition probability densities \(p(x_t_j| x_t_j-1)\) in equation (5). The transition probability density \(p(x_t_j| x_t_j-1)\) accounts for the drift and diffusion in the latent space and also for the absence of spikes during each interval between adjacent spike observations. This probability density satisfies a modified Fokker–Planck equation, which we derived previously19:

$$\frac\partial p(x,t)\partial t=\left(-D\frac\partial \partial xF(x)+D\frac\partial ^2\partial x^2-\mathop\sum \limits_i=1^Mf_i(x)\right)p(x,t)\equiv -\widehat\boldsymbol\mathcalHp(x,t).$$

(6)

Here \(F(x)=-\varPhi ^\prime (x)\) is the deterministic potential force, and the term \(-\sum _i=1^Mf_i(x)\) leads to the probability decay due to spikes emitted by any neurons in the population, such that \(p(x_t_j| x_t_j-1)\) includes only trajectories consistent with no spikes emitted between tj−1 and tj. The solution of this equation \(p(x,t_j)=p(x,t_j-1)\exp (-\widehat\boldsymbol\mathcalH\cdot (t_j-t_j-1))\) propagates the latent probability density forwards in time during each ISI. To model the reaction time task, we solved equation (6) with absorbing boundary conditions, which ensure that trajectories reaching a boundary before the trial end do not contribute to the likelihood19. In addition, the absorption operator \(p(A| x_t_E)\) in equation (5) enforces that the likelihood includes only trajectories terminating on the boundaries at the trial end time tE19 (Supplementary Methods 2.1). Together, these two conditions ensure that the likelihood includes only trajectories that reach one of the boundaries for the first time at the trial end time.

To fit the model to data, we derived analytical expressions for the gradients of the model likelihood with respect to each of the model components (Supplementary Methods 2.2). We computed functional derivatives of the likelihood with respect to latent dynamics as previously described19. In this study, we computed functional derivatives of the likelihood with respect to tuning functions (Supplementary Methods 2.2). Instead of directly updating the functions Φ(x), p0(x) and fi(x) we, respectively, update the force \(F(x)=-\varPhi ^\prime (x)\) and auxiliary functions

$$F_(x)\equiv p_^\prime (x)/p_(x),\quad F_i(x)\equiv f_i^\prime (x)/f_i(x).$$

(7)

The potential Φ(x) is obtained from F(x) via

$$\varPhi (x)=-\,\int _-1^xF(s)\rmds+C,$$

(8)

where we fixed the integration constant C to satisfy \(\int _-1^1\exp [-\,\varPhi (x)]\rmdx=1\). As C is an arbitrary integration constant, a specific choice of C was not important as long as it was the same for all models. The initial state distribution p0(x) was obtained from the auxiliary function F0(x) in equation (7) via

$$p_(x)=\frac\exp \left(\int _-1^xF_(s)\rmds\right)\int _-1^1\exp \left(\int _-1^s^\prime F_(s)\rmds\right)\rmds^\prime .$$

(9)

The change of variable from p0(x) to F0(x) allowed us to perform an unconstrained optimization of F0(x), whereas equation (9) ensures that p0(x) satisfies the normalization condition for a probability density \(\int _-1^1p_(x)\rmdx=1\), p0(x) ⩾ 0. Finally, the tuning function fi(x) was obtained from the auxiliary function Fi(x) in equation (7) via

$$f_i(x)=C_i\exp \left(\int _-1^xF_i(s)\rmds\right),$$

(10)

where Ci = fi(−1) is the firing rate at the left domain boundary. This change of variable allowed us to perform an unconstrained optimization of Fi(x), whereas equation (10) ensures the non-negativity of the firing rate fi(x) ⩾ 0. We enforced the positiveness of the noise magnitude D by rectifying its value after each update \(D=\max (D,0)\), and the same for each constant Ci.

We derived analytical expressions for the variational derivatives of the likelihood with respect to each continuous function defining the model \(\delta \mathscrL/\delta F(x)\), \(\delta \mathscrL/\delta F_(x)\), \(\delta \mathscrL/\delta F_i(x)\) and the derivatives of the likelihood with respect to scalar parameters \(\partial \mathscrL/\partial D\) and \(\partial \mathscrL/\partial C_i\) (Supplementary Methods 2.2). We evaluated these analytical expressions numerically for the iterative optimization. To compute the likelihood and its gradients numerically, we used a discrete basis in which all continuous functions, such as F(x), are represented by vectors, and the transition, emission and absorption operators are represented by matrices18,19 (Supplementary Methods 2.1). Thus, equation (5) was evaluated as a chain of matrix–vector multiplications.

Optimization with ADAM algorithm

We fitted the model by minimizing the negative log-likelihood \(-\log \mathscrL[\mathcalY(t)| \theta ]\) using ADAM algorithm62 with custom modifications (Supplementary Methods 2.3). The standard ADAM update scales the gradient of each scalar parameter inversely with the running average of the squared magnitudes of its current and past gradients, computed separately for each parameter. As we optimized over continuous functions F(x), F0(x) and Fi(x), we scaled their gradients by the running average of the gradient’s squared L2-norm defined as \(\parallel \boldsymbolv\parallel _2=\sum _iv_i^2\). We used the following ADAM hyperparameters: α = 0.05 for single neurons, α = 0.01–0.05 for populations, β1 = 0.9, β2 = 0.99, ϵ = 10−8 for both single neurons and populations (the definitions of hyperparameters are in Supplementary Methods 2.3). We tuned these hyperparameters on synthetic data with known ground truth. For the scalar parameters D and all Ci, we combined ADAM updates with line searches using the L-BFGS-B algorithm (L-BFGS-B method from the scipy.optimize.minimize toolbox). As a line search is computationally expensive, we performed only 30 line searches spaced logarithmically over the 5,000 epochs range, such that most line searches are concentrated at early epochs.

We combined ADAM with mini-batch descent randomly splitting the trials from each condition into 20 batches on each epoch. When we performed shared optimization, we fitted the model to all available trials restricting F0(x), Fi(x) and D to be the same and only allowing the potential force F(x) to differ across stimulus conditions. In this case, we performed ADAM updates on all batches pooled across four stimulus conditions (80 batches total) in random order on each epoch. We updated the force Fl(x) that defines the potential in condition l only on batches from this condition, and we updated all shared components F0(x), Fi(x), D, Ci on every batch.

We accelerated the optimization algorithm on graphics processing units (GPUs) using cupy library63. GPU implementation provides a 5–10-fold acceleration over the CPU implementation with the exact factor depending on the spatial resolution of the discrete basis. The 5–10-fold GPU acceleration can only be provided by scientific-grade GPUs (for example, Tesla V100) that have sufficient number of double-precision streaming multiprocessors.

Model selection

ADAM optimization produces a series of models across epochs, and we needed a model selection procedure for choosing the optimal model. On early epochs, the fitted models miss some true features of the dynamics due to underfitting, whereas on late epochs, the fitted models develop spurious features due to overfitting to noise in the data. The optimal model is discovered on some intermediate epochs. The standard approach for selecting the optimal model is based on optimizing the ability of the model to predict new data (that is, generalization performance), for example, using likelihood of held-out validation data as a model selection metric64. However, optimizing generalization performance cannot reliably identify true features and avoid spurious features when applied to flexible models18,65, which generalize well despite overfitting66. We developed an alternative approach for model selection based on directly comparing features of the same complexity discovered from different data samples18,19 (Supplementary Methods 2.4). As true features are the same, whereas noise is different across data samples, the consistency of features inferred from different data samples can separate the true features from noise, and model selection based on feature consistency can reliably identify the correct features18,19.

To compare features discovered from different data samples, we need a metric for feature complexity \(\mathcalM\). We defined feature complexity as the negative entropy of latent trajectories generated by the model18,19 \(\mathcalM=-S[\varPhi (x),D,p_(x);\varPhi ^R(x),D^R,p_^R(x)]\). The trajectory entropy67 is a functional defined as a negative Kullback–Leibler divergence between the distributions of trajectories in the model of interest Φ(x), D, p0(x) and the distribution of trajectories in the reference model \(\\varPhi ^R(x),D^R,p_^R(x)\\). The reference model is a free diffusion in a constant potential (\(\varPhi ^R(x)=\rmconst\)) with the same diffusion coefficient D as in the model of interest. We derived the analytical expression for the trajectory entropy for non-stationary Langevin dynamics19:

$$S\,\left[\Phi (x),D,p_(x)\,;\Phi ^R(x),D,p_^R(x)\right]=-\,\int \rmdxp_(x)\textln\fracp_(x)p_^R(x)-\fracD4\int _^\infty \rmdt\int \,\rmdxF^2(x)p(x,t).$$

(11)

We chose the initial distribution \(p_^R(x)\) for the reference model to be uniform. We derived an expression for efficient numerical evaluation of equation (11) taking the integral over time analytically19 (Supplementary Methods 2.4). Qualitatively, feature complexity reflects the structure of the potential Φ(x): potentials with more structure have higher feature complexity. The reference model with constant potential has zero feature complexity. During model fitting, the feature complexity consistently grows throughout the optimization epochs19.

We compared models discovered from two non-intersecting halves of the data \(\mathcalD_1\) and \(\mathcalD_2\) to evaluate the consistency of their features (Supplementary Fig. 1). We performed the ADAM optimization independently on each data split to obtain two series of models \(\theta _n^1=\\varPhi _n^1,D_n^1,p_0,n^1(x)\\) and \(\theta _n^2=\\varPhi _n^2,D_n^2,p_0,n^2(x)\\) (where n = 1, 2…5,000 is the epoch number) fitted on \(\mathcalD_1\) and \(\mathcalD_2\), respectively. We measured feature complexity of these models, \(\mathcalM_n^1\) and \(\mathcalM_n^2\), and quantified the consistency of features of the same complexity between models fitted on different data splits. We quantified the consistency of features between two models by evaluating Jensen–Shannon divergence (DJS) between their time-dependent probability densities over the latent space19 (Supplementary Methods 2.4). At low and moderate feature complexity, the models contain true features of the dynamics in the data and their features agree between data splits reflected in low DJS values. At high feature complexity, the models overfit to noise and contain spurious features that do not replicate between data splits, resulting in large DJS values. To find the optimal feature complexity, we set the threshold \(D_\rmJS,thres=0.0015\) and selected \(\mathcalM^* \) as the maximum feature complexity for which \(D_\rmJS\le D_\rmJS,thres\). This procedure returned two models of roughly the same feature complexity that represent the consistent features of dynamics across data splits. The threshold \(D_\rmJS,thres\) sets the tolerance for mismatch between models and choosing higher \(D_\rmJS,thres\) results in greater discrepancy between models obtained from two data splits. We set \(D_\rmJS,thres=0.0015\) based on fitting synthetic data with known ground truth; at this threshold value, the selected models reliably matched the ground-truth model.

We split all trials in halves by assigning even trails to \(\mathcalD_1\) and odd trials to \(\mathcalD_2\). In the experiment, stimulus conditions were sampled randomly on each trial. Therefore, the time difference between two adjacent trials of the same condition varies broadly, limiting possible temporal correlations between \(\mathcalD_1\) and \(\mathcalD_2\).

Uncertainty quantification

We quantified the estimation uncertainty for fitted models using a bootstrap method19. To obtain confidence bounds for the inferred model, we generated ten bootstrap samples by sampling trials randomly with replacement from the set of all trials. To ensure that the two data samples \(\mathcalD_1\) and \(\mathcalD_2\) used for model selection do not overlap, we first randomly split all trials into two equal non-overlapping groups, and then sampled trials randomly with replacement from each group to generate \(\mathcalD_1\) and \(\mathcalD_2\). For shared optimization, we resampled the trials separately for each stimulus condition. For each bootstrap sample, we refitted the model and performed model selection using our feature consistency method. We then obtained the confidence bounds for the inferred potential, p0(x) distribution, and tuning functions by computing a pointwise standard deviation across 20 models produced by the model selection on two data splits from each of 10 bootstrap samples.

Outcomes of model fitting

When fitting our model to spikes of single neurons and populations and performing model selection, we observed three possible outcomes: overfitting, underfitting and good fit.

In rare cases (0 out of 128 single neurons (0%) and 1 out of 15 populations (6.7%) for monkey T; and 1 out of 88 single neurons (1%) and 1 out of 15 populations (6.7%) for monkey O), the model selection produced a model that showed signs of overfitting (Supplementary Fig. 8). We detected overfitting as models with unrealistically high firing rates in the tuning function (hundreds of Hz), disproportionally high noise magnitude (in the range of D ~ 3–5, compared with D ~ 0.2–0.6 in regular fits) compensated by deep wells in the potential (overall depth of the potential of approximately 20, compared with approximately 2 in regular fits). These models produced severely underestimated reaction times (reaction time of approximately 10 ms in the model, compared with approximately 500 ms in the data) and did not predict choice of the monkey. This type of overfitting cannot be detected with standard validation approaches18, for example, these models had similar likelihood on training and validation data.

Some selected models showed signs of underfitting in one of two types. In the first type (10 out of 128 single neurons (7.8%) and 2 out of 15 populations (13%) for monkey T; and 11 out of 88 single neurons (12.5%) and 1 out of 15 populations (6.7%) for monkey O), the potentials had the linear slope tilted towards the same boundary in all stimulus conditions, that is, the model had no decision signal (Supplementary Fig. 9a,b). In the second type (1 out of 128 single neurons (0.8%) and 1 out of 15 populations (6.7%) for monkey T; and 9 out of 88 single neurons (10%) and 0 out of 15 populations (0%) for monkey O), the potentials obtained from two data halves \(\mathcalD_1\) and \(\mathcalD_2\) had the linear slope tilted towards the opposite boundaries in at least one stimulus condition (Supplementary Fig. 9c–e). This disagreement about the correct choice side resulted in DJS values rising high early in the optimization, leading to the selection of a model with low feature complexity before all consistent features had been discovered. These both types of underfitting probably arise when a model cannot detect a weak decision signal and mainly fits the condition-independent trend in neural activity.

All remaining models were considered a good fit and were used in further analyses. In these models, we quantified the potential shape by counting the number of barriers in the potential. A barrier is a potential maximum where the force, which is the negative derivative of the potential F(x) = −dΦ(x)/dx, changes the sign from negative to positive. We also classified a potential minimum next to a boundary as a barrier, because the trajectory must get to the top of the potential to reach the boundary. At a potential minimum, the force changes the sign from positive to negative. We therefore counted the number of sign changes from negative to positive and vice versa in the force F(x) in each stimulus condition. We used two force functions F1(x) and F2(x) produced by the model selection on two data splits \(\mathcalD_1\) and \(\mathcalD_2\) (bootstrap samples were not used in this analysis). We counted a sign change to occur within a local region if both F1(x) and F2(x) were negative for ten consecutive grid points to the left and positive for ten consecutive grid points to the right of that region, or vice versa. We only counted sign changes that were at least 30 grid points away from the domain boundaries.

The overwhelming majority of models had a single-barrier potential in all four stimulus conditions (102 out of 117 single neurons (87%) and 9 out of 11 populations (82%) for monkey T; and 66 out of 67 single neurons (98.5%) and 13 out of 13 populations (100%) for monkey O; Figs. 3e,h and 4d, Extended Data Fig. 8 and Supplementary Fig. 6). Some models had a monotonic potential (no barrier) in at least 1 stimulus condition and a single-barrier potential in the remaining conditions (9 out of 117 single neurons (8%) and 1 out of 11 populations (9%) for monkey T; and 0 out of 67 single neurons (0%) and 0 out of 13 populations (0%) for monkey O). The remaining models had a second small barrier in at least 1 stimulus condition and a single-barrier potential in the remaining conditions (6 out of 117 single neurons (5%) and 1 out of 11 populations (9%) for monkey T; and 1 out of 67 single neurons (1.5%) and 0 out of 13 populations (0%) for monkey O). The second barrier was typically shallow and located near the incorrect-choice boundary, where the estimation uncertainty is higher due to lower sampling probability of this region in the data.

We also analysed the potential shape in models that showed the first type of underfitting with no decision signal. These models had feature complexity similar to good fits, suggesting that the model selection identified similar features in the dynamics. The fit, however, captured only the condition-independent dynamics and missed the weak decision signal. These models can still inform us about the mechanism of decision-making. For example, in the two-pool attractor network model33, inhibitory neurons do not have choice selectivity but they still reflect the attractor dynamics with a barrier separating correct and incorrect choices. Many of the models with no decision signal had a single-barrier potential (5 out of 10 single neurons (50%) and 0 out of 2 populations (0%) for monkey T; and 11 out of 11 single neurons (100%) and 1 out of 1 populations (100%) for monkey O), which further supports our finding that the dynamics described by a single-barrier potential were prevalent in our PMd data.

When analysing spike-time variation explained by our models on single trials (Fig. 3b), for each neuron, we included only stimulus conditions that had at least 600 spikes across all trials. This restriction was necessary for an accurate estimation of the spike-time variation explained by the model, which was computed on raw spike times without binning or smoothing. For single-neuron models, this restriction produced 111 and 50 single neurons for monkey T and monkey O, respectively (Fig. 3b). For population models, this restriction produced 80 and 32 single neurons for monkey T and monkey O, respectively, which were part of the well-fitted populations. The comparison between the residual spike-time variation unexplained by single-neuron models and the point process variation estimated by the independent method was performed for 64 neurons from monkey T and 27 neurons from monkey O, which had sufficiently high firing rate for the independent method to produce a reliable estimate32. For behaviour prediction (Fig. 4e), we additionally only included conditions that had at least five incorrect choices in both training and validation datasets, which did not change the number of analysed populations. This condition was necessary for the baseline comparison, which required training a logistic regression decoder for choice prediction. In this analysis, we used all well-fitted population models and the single-neuron models for the exact same set of neurons that were part of the used populations.

Spike-time R

2

To quantify how well our models fitted spiking activity on single trials, we designed a metric specifically for measuring the fraction of the total variation in spike times on single trials explained by a model. We used the standard coefficient of determination R2 defined as the proportion of the total variation in the data that is predicted by a statistical model:

$$R^2=1-\frac\,\rmCV_\rmresidual^2\rmCV_\rmtotal^2.$$

(12)

Here \(\rmCV_\rmtotal^2\) is the total variation in the data, and \(\rmCV_\rmresidual^2\) is the residual variation unexplained by the model. As we modelled single-trial dynamics, our metric quantified the variation in spike times on single trials. We quantified the total variation in the data \(\rmCV_\rmtotal^2\) using the squared coefficient of variation of ISIs, which is the ratio of the ISI variance to the squared mean ISI68. Then, \(\,\rmCV_\rmresidual^2\) is the residual variation in ISIs unexplained by the model. As our model predicts the firing rate on single trials but not individual spikes, the residual variation is the variation in spike times after accounting for the firing rate variation predicted by the model.

To compute the residual variation in ISIs, we used the time rescaling theorem for doubly stochastic point processes69. For a doubly stochastic point process, the total variation in spike times arises from two sources: the variability of the instantaneous firing rate λ(t) and the variability of the point process generating spikes from this firing rate. The time rescaling theorem states that we can eliminate the firing rate variation by mapping the spike times from the real time t to the operational time \(t^\prime \) via squeezing or stretching the time locally in proportion to the cumulative firing rate: \(t^\prime =\Lambda (t)=\int _^t\lambda (s)\rmds\). Accordingly, we used a model to predict the instantaneous firing rate λ(t) of a neuron on each trial, map spikes to the operational time using this predicted firing rate λ(t), and compute the residual ISI variation \(\,\rmCV_\rmresidual^2\) as the squared coefficient of variation of rescaled ISIs in the operational time. If the firing rate is predicted correctly on each trial, then the variation of rescaled ISIs in the operational time reflects only the point process variability. For example, rescaling spike times generated by an inhomogeneous Poisson process using the ground-truth firing rate yields a homogeneous Poisson process with the firing rate of 1 Hz. The total variation \(\,\rmCV_\rmtotal^2\) was calculated using the raw ISIs in the real time.

To compute the residual spike-time variation \(\rmCV_\rmresidual^2\), we predicted the instantaneous firing rate λ(t) with our model on each trial. First, we used the Viterbi algorithm to predict the most probable latent path \(\widehatX(t)\) given the observed data Y(t). We generalized the max-sum Viterbi algorithm with backtracking70 to our case of continuous-space continuous-time latent dynamical system (Supplementary Methods 2.5). Then, from the most probable latent path \(\widehatX(t)\), we computed the instantaneous firing rate for a neuron i using its tuning function \(\lambda (t)=f_i(\widehatX(t))\). Finally, we rescaled spike times via \(t_i^\prime =\int _t_^t_i\lambda (t)\rmdt\) (where t0 is the trial start time and t1, … , tN are the original spike times) using the trapezoidal rule to approximate the time integral and compute \(\rmCV_\rmresidual^2\) of rescaled ISIs.

The advantage of the R2 metric is that its value is directly interpretable as the fraction of the total variation in the data explained by a model. Specifically, R2 = 0 results from the baseline prediction of a firing rate that is constant within and across trials. Positive R2 values indicate that a prediction is better than this baseline, and negative R2 values indicate that a prediction is worse than the baseline. For a doubly stochastic point process, the value of our R2 metric can never reach 1, because our models predict firing rates on single trials but not individual spikes, hence leaving the point process variability unexplained.

We used the unexplained residual variation in spike times to compare the performance of our model to the ceiling performance expected if the model was correct (Fig. 3b). If the firing rate prediction was correct on each trial, then the residual variation \(\rmCV_\rmresidual^2\) would match exactly the expected point process variation \(\rmCV_\rmpp^2\) of rescaled ISIs in the operational time. For an inhomogeneous Poisson process, the expected \(\rmCV_\rmpp^2\) of ISIs in the operational time equals one69. However, the spiking of neurons across many brain regions deviates significantly from the Poisson irregularity. In particular, most neurons in the parietal and premotor cortical areas spike more regularly than expected for an inhomogeneous Poisson process71,72. Therefore, we used an independent method based on doubly stochastic renewal point processes to estimate the point process variability \(\rmCV_\rmpp^2\) for each neuron32. This method does not require knowledge of the firing rate dynamics on single trials and assumes only that the firing rate evolves smoothly in time. Thus, \(\rmCV_\rmpp^2\) is the expected residual variation in rescaled ISIs if the firing rate prediction was correct on each trial. The tight correspondence between \(\rmCV_\rmresidual^2\) and \(\rmCV_\rmpp^2\) indicates that the model accounts for nearly all explainable firing-rate variation in the data close to the ceiling performance.

The independent method for estimating the point process variability based on doubly stochastic renewal point processes has been derived and extensively tested in a separate publication32. In brief, for a doubly stochastic renewal point process, the variance Var(NT) of spike count NT measured in time bins of size T can be partitioned into two components: the firing rate and the point process variation32:

$$\,\rmVar(N_T)=\rmVar(\lambda T)+\frac16(1-\phi ^2)+\phi \rmE\,[N_T]+\mathcalO(T^-1).$$

(13)

Here ϕ is a parameter that controls the point process variability defined as CV2 of ISIs in the operational time, and λ(t) is the instantaneous firing rate that is assumed to be approximately constant within a single bin. To estimate ϕ from data, we applied equation (13) to spike counts measured in two bin sizes T and 2T to yield two equations, which can be solved to obtain a quadratic equation for ϕ32:

$$\frac12(\phi ^2-1)-(4\,\rmE\,[N_T]-\,\rmE\,[N_2T])\phi +4\,\rmVar(N_T)-\rmVar\,(N_2T)=0.$$

(14)

Here the spike-count mean and variance for each bin size E[NT], E[N2T], Var(NT) and Var(N2T) are measured directly from the spike data, and ϕ is the only unknown variable. Thus, we solved equation (14) to estimate \(\phi =\,\rmCV_\rmpp^2\) from data and compared this ϕ to the residual spike-time variance unexplained by our model \(\rmCV_\rmresidual^2\) (Fig. 3b).

Evaluating model performance

We evaluated model performance using multiple metrics: spike-time variance explained (R2), model likelihood relative to several baselines, and behavioural choice prediction. We performed all these analyses within a cross-validation framework using the same two non-overlapping data splits \(\mathcalD_1\) and \(\mathcalD_2\) as used for the model selection. For example, we used the model fitted on the dataset \(\mathcalD_1\) to predict the instantaneous firing rate and compute R2 of each neuron on the dataset \(\mathcalD_2\), and vice versa. We analysed each stimulus condition separately and averaged R2 across conditions. We report R2 averaged over the two data splits. The same cross-validation framework was used for all model likelihood comparisons and choice prediction analyses.

We compared the likelihoods of single-neuron and population models against each other and several baselines to determine which model components are essential for capturing spiking activity on single trials.

Single-neuron model versus PSTH

As a benchmark, we compared the performance of our single-neuron model against the baseline based on the trial-averaged firing rate (PSTH; Fig. 3a). The PSTH predicts the instantaneous firing rate λ(t) on each trial using the trial-averaged firing rate traces sorted by the chosen side and stimulus difficulty. We computed the trial-averaged firing rates for the left and right choice trials in a 75-ms window sliding in 10-ms steps on the dataset \(\mathcalD_1\), and used them as a prediction of the instantaneous firing rates for the left and right choice trials on the dataset \(\mathcalD_2\), and vice versa. Thus, the baseline PSTH prediction (but not our model) uses the information about the choice of the animal on both the training and the validation trials.

We compared the likelihood of the single-neuron model with the PSTH likelihood (Fig. 3a). The likelihood was computed for the observed spike times t1, t2, … , tN of a single neuron during the time interval [t0; tE] on each trial. The PSTH likelihood for the observed spike sequence is given by the standard likelihood of an inhomogeneous Poisson process73:

$$\mathscrL_\rmP\rmS\rmT\rmH=\exp \left(-\,\int _^t_E\lambda (t)\rmdt\right)\mathop\prod \limits_i=1^N\lambda (t_i),$$

(15)

where we take the instantaneous firing rate λ(t) to be the PSTH for each chosen side and stimulus difficulty. We computed the integral over time in equation (15) using the trapezoidal rule.

Unlike PSTH, the full likelihood of our model on each trial (equation (3)) is a joint probability density of the observed spikes t1, t2, … , tN and a latent trajectory reaching a decision boundary for the first time at the trial end time tE (the reaction time), marginalized over all possible latent trajectories. Therefore, for comparison with PSTH, we needed to compute the model likelihood \(\mathscrL_\rmmodel\) of spike times only, excluding the probability of the reaction time tE. To obtain this likelihood, we normalized the full model likelihood equation (3) by the likelihood \(\mathscrL_t_E\) of the latent trajectory reaching a boundary for the first time at time tE:

$$\mathscrL_\rmmodel=\mathscrL[Y(t)| \theta ]/\mathscrL_t_E.$$

(16)

This normalized likelihood \(\mathscrL_\rmmodel\) is the probability density of the observed spikes only, marginalized over all latent trajectories consistent with the reaction time tE. In other words, \(\mathscrL_\rmmodel\) is a probability density that the observed spikes were generated from any latent trajectory that reaches a boundary for the first time at time tE. Thus, \(\mathscrL_\rmmodel\) is the likelihood of the observed spikes t1, t2, … , tN only and is directly comparable with the PSTH likelihood \(\mathscrL_\rmPSTH\). We computed the likelihood \(\mathscrL_t_E\) analogously to the full model likelihood, but using only t0, tE observations. Specifically, we evolved the latent probability density \(p(x_t_E| x_t_)\) from the trial start t0 to end time tE by solving the Fokker–Planck equation (equation (6)) with the drift and diffusion terms but without the term \(-\sum _i=1^Mf_i(x)\) for the probability decay due to spike emissions (that is, the standard Fokker–Planck equation).

Single-neuron model versus shuffled control

We performed a permutation test to estimate the baseline for the explained variation R2 in spike times on single trials. For all single-neuron models that provided a good fit, we inferred the latent trajectories on validation trials using the Viterbi algorithm and predicted single-trial firing rates of each neuron from these latent trajectories using their tuning functions. We then computed the explained spike-time variation R2 on shuffled trials: we used the firing rate predicted on one trial to compute R2 for spikes on another randomly chosen trial. The explained spike-time variation was much lower for randomly shuffled trials than the original data (shuffled R2, median (Q1–Q3); −0.10 (−0.24 to −0.04), P < 10−10, n = 101 for monkey T; −0.02 (−0.15 to 0.01), P < 10−10, n = 35 for monkey O, Wilcoxon signed-rank test). The negative R2 values indicate that the shuffled prediction performed worse than predicting a constant firing rate, which corresponds to \(\rmCV_\rmtotal^2=\rmCV_\rmresidual^2\) and R2 = 0. The shuffled prediction was also significantly worse than the prediction based on PSTH. This result confirms that our models explain the spike times on single trials significantly better than the permutation baseline and PSTH, which shows again that our model successfully captured variable firing-rate dynamics on single trials.

Single-neuron versus population model

We compared the likelihoods of the single-neuron and population models (Fig. 4a). The single-neuron model predicts spikes of each neuron independently of other neurons in the population. Accordingly, the single-neuron model likelihood of spikes from all M neurons in the population is a product of M single-neuron model likelihoods for each neuron. However, a product of the full likelihood for M single neurons accounts M times for the probability that the latent trajectory reaches a boundary for the first time at time tE, whereas the population-model likelihood accounts for this probability only once. Therefore, we used the normalized model likelihood \(\mathscrL_\rmmodel\) (equation (16)) when comparing single-neuron and population models. \(\mathscrL_\rmmodel\) is the likelihood of spike data only, marginalized over all latent trajectories consistent with the reaction time tE on each trial, and, therefore, directly comparable between single-neuron and population models.

LONO validation versus PSTH

We compared the likelihood of the population model in leave-one-neuron-out (LONO) validation with the PSTH likelihood based on the neuron’s own trial-averaged firing rate (Fig. 4a). For each neuron k, the likelihood was computed for the observed spike times Yk(t) = (tj, k) during the time interval [t0; tE] on each trial, where index j is restricted to spike times of neuron k. We computed the PSTH likelihood for each neuron using equation (15) with the instantaneous firing rate λ(t) given by the neuron’s own PSTH for each chosen side and stimulus difficulty. We computed the LONO likelihood \(\mathscrL_\rmLONO\) also using equation (15), but with the instantaneous firing rate λ(t) of neuron k predicted by the population model from spikes of all other neurons in the population on each validation trial. Specifically, we used spikes of M − 1 neurons in the population excluding spikes of neuron k: YM−1(t) = (tj, ij), where tj is the time of jth spike and ij is the index of the neuron that emitted this spike (ij ≠ k). We applied the Viterbi algorithm to YM−1(t) to predict the most probable latent trajectory \(\widehatX(Y_M-1(t))\) on each trial. We then compute the instantaneous firing rate for neuron k using its tuning function \(\widehat\lambda =f_k(\widehatX(Y_M-1(t)))\), which provides a prediction of the firing rate of neuron k at times tj when other neurons in the population spiked. We then interpolated \(\widehat\lambda \) with cubic splines to obtain the firing rate prediction λ(t) at times when neuron k spiked and substituted it into equation (15) to compute the likelihood, approximating the integral with the trapezoidal rule.

Predicting choice from neural activity

We used our models to predict the choice of an animal from neural activity. We performed a cross-validation procedure with the same two non-overlapping data splits \(\mathcalD_1\) and \(\mathcalD_2\) as used for the model selection. We used the models fitted on the dataset \(\mathcalD_1\) to predict the choice of the animal on the dataset \(\mathcalD_2\), and vice versa, and reported the average accuracy over the two data splits. We applied the Viterbi algorithm to neural activity on validation trials to predict the most probable latent path \(\widehatX(t)\). By the design of the Viterbi algorithm with absorbing boundary conditions, the trajectory must terminate at one of the domain boundaries, therefore we predicted choice as the value of x(tE) at the trial end.

As a baseline for the comparison with our models, we also predicted the choice of the animal with a logistic regression decoder using the same two data splits for the decoder training and validation. As an input to the decoder, we have provided a vector of spike counts measured in 75-ms bins sliding in 10-ms steps on single trials. We truncated each trial at 0.5 s after stimulus onset resulting in a 42-dimensional input vector for single neurons and 42 × M dimensional input vector for populations, where M is the number of neurons in the population. We normalized the inputs to have zero mean and unit variance across trials for each condition in each time bin.

Our data are imbalanced as monkeys make more correct choices than errors, especially on easy trials. We therefore report balanced accuracy for both our models and the linear decoder (Fig. 4e). The balanced accuracy is the average between true-positive and true-negative rates.

The accuracy of choice prediction did not change when replacing the tuning functions and potentials with linear approximations (Extended Data Fig. 9b), showing that the accuracy of choice prediction does not uniquely identify single-trial dynamics.

Spiking network model

We simulated a spiking recurrent neural network model of decision-making with the same parameters as in ref. 33 using the Python package Brian 2 (ref. 74). We only changed the value of the N-methyl-d-aspartate (NMDA) conductance for inhibitory neurons from gNMDA = 0.13 nS to gNMDA = 0.128 nS to match the reaction times of the spiking network to the experimental data. We simulated four stimulus conditions based on the stimulus difficulty (easy versus hard) and side (left versus right) for comparison with our PMd data. We set the stimulus coherence parameter c = 17.5% for easy-stimulus and c = 7.5% for hard-stimulus conditions and generated approximately 3,200 trials of data per condition. The reaction time was defined on each trial as time when one of the population firing rates (smoothed with a moving average over a 200-ms time window) crosses the threshold of 30 Hz. We fitted our population model to the responses of two neurons from each of the two selective excitatory pools (that is, four simultaneous neural responses in total). We performed the same shared optimization across four conditions as for the PMd data using the same hyperparameters for optimization and model selection. To obtain the decision manifold for the network model (Fig. 5e), we plotted the inferred tuning functions of two neurons from different excitatory pools against each other, because tuning functions of all neurons from the same pool are identical.

We used the mean-field approximation to reduce the dynamics of the network to a two-dimensional dynamical system model with the same parameters as for the spiking network34. The mean-field dynamics are described by two variables si (i = 1, 2) representing activations of NMDA conductance for two excitatory neural pools:

$$\dots_i=-\fracs_i\tau _s+(1-s_i)\gamma f(I_i),$$

(17)

where γ = 0.641 and τs = 100 ms. The firing rate ri of neural pool i is a function of the total synaptic current Ii34:

$$r=f(I)=\fracaI-b1-\exp [-d(aI-b)],$$

(18)

with a = 270 Hz nA−1, b = 108 Hz, d = 0.154 s. The synaptic input to neural pool i includes recurrent and external stimulus currents:

$$I_i=J_11s_i+J_12s_j+I_\rmstim,i+I_\rmbg\,.$$

(19)

The effective connection weights are J11 = 0.2609 nA and J12 = −0.0497 nA. The background current is Ibg = 0.3260 nA. The stimulus current is \(I_\rmstim,\1,2\=0.0208\cdot (1\pm c)\) nA, with the stimulus coherence c ∈ [−1, 1].

To find the stable and unstable fixed points on the phase plane, we numerically found zeros of the flow field \(\overrightarrowR(s_1,s_2)\) of the two-variable mean-field model in equation (17). We numerically solved the equation \(\overrightarrowR(s_1,s_2)=0\) starting from a few different initial conditions using MATLAB fsolve function. To find the stable and unstable manifolds of the saddle, we followed the path along \(-\overrightarrowR(s_1,s_2)\) and \(\overrightarrowR(s_1,s_2)\), respectively, starting the trajectory near the saddle point.

Low-rank network model

To illustrate how diverse tuning to the decision variable arises from the interplay between recurrent connectivity and firing-rate non-linearity, we designed a rank-two recurrent neural network (RNN) that replicates the classical attractor dynamics with distributed connectivity. The network dynamics are governed by the equations:

$$\doty=-\,y+[Jy+b]_+.$$

(20)

Here vector \(y\in \mathbbR^N\) represents synaptic activation variables yi of RNN units (i = 1, …N), and vector \(b\in \mathbbR^N\) represents the constant input bi to each unit. The recurrent connectivity matrix \(J\in \mathbbR^N\times N\) has rank two, such that J = M ⋅ QT is an outer product of matrices \(M\in \mathbbR^N\times 2\) and \(Q^T\in \mathbbR^2\times N\). The rectified linear activation function [⋅]+ models the firing-rate non-linearity of single neurons. The firing rate of neuron i is \(r_i=[h_i+b_i]_+\), where \(h_i=\sum _j=1^NJ_ijy_j\) is the recurrent input to neuron i, and (hi + bi) is the total synaptic input current to neuron i.

The dynamics of this rank-two RNN are governed by two-dimensional mean-field variables z = QTy, which follow the equations:

$$\dotz=-\,z+Q^T[Mz+b]_+.$$

(21)

We designed the connectivity matrices39 M and QT so that the two-dimensional flow field in equation (21) replicates the flow field of the classical mean-field attractor network in equation (17) for zero stimulus coherence c = 0. We chose the elements of M to be \(m_i,1=\cos (2\pi i/N)\) and \(m_i,2=\sin (2\pi i/N)\), and sampled the elements of the input vector b randomly from a uniform distribution on [−0.06 to 0.06]. Next, by equating the RNN flow field in equation (21) to the target flow field \(\overrightarrowR(z)\) in equation (17) we obtained

$$Q^T[Mz+b]_+=\overrightarrowR(z)+z.$$

(22)

This relationship defines a linear regression problem for determining the elements of the matrix QT. Accordingly, we uniformly sampled K points zk (k = 1, …K) from the state space [0, 1] × [0, 1] and evaluated the terms in equation (22) at these points to obtain matrix \(A\in \mathbbR^N\times K\) with columns \(a_k=[Mz^k+b]_+\) and matrix \(B\in \mathbbR^2\times K\) with columns \(b_k=\overrightarrowR(z^k)+z^k\). We then found QT by solving QTA = B using ridge regression with a regularization parameter λ = 0.01.

We present results for an RNN with N = 500 units (Fig. 5 and Extended Data Fig. 10). We simulated the RNN trajectories for the left and right choices by initializing the RNN near the low-activity baseline state with a slight bias towards the corresponding attractor. We chose the initial conditions z(0) = (0.12, 0.106) for the left choice and z(0) = (0.106, 0.12) for the right choice, and computed the corresponding initial conditions for y as \(y(0)=(Q^T)^+z(0)\), where \((Q^T)^+\) is a pseudoinverse of QT. We parametrized the resulting trajectories with a decision variable x ∈ [−1, 1], where −1 and 1 correspond to the left and right choice attractors, respectively, and 0 corresponds to the symmetric initial state. We defined x to increase linearly with the cumulative arc length along the trajectories, such that x grows at a uniform rate along the trajectory length. The tuning curve of each neuron is defined by its firing rate along these trajectories as a function of the decision variable x.

Statistics and reproducibility

The sample sizes used in this study are consistent with standard practices in systems neuroscience involving non-human primates6,8,12,29. Data were collected from two macaque monkeys (75 sessions for monkey T and 66 sessions for monkey O), providing a robust number of sessions per animal. No statistical methods were used to predetermine sample size. All key findings were replicated in both monkeys. No randomization or blinding was performed because there was only one experimental group. Investigators were not blinded to the identity of the animal during data collection. Trial types were assigned randomly by the task control software. All statistical tests were two-sided unless otherwise noted.

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.