Bipedal robots at Amazon’s Robotics Research and Development Hub in Sumner, Washington. Credit: Jason Redmond/AFP via Getty

Since 20 January, US science has been upended by severe cutbacks from the administration of US President Donald Trump. A series of dramatic reductions in grants and budgets — including the US National Institutes of Health (NIH) slashing reimbursements of indirect research costs to universities from around 50% to 15% — and deep cuts to staffing at research agencies have sent shock waves throughout the academic community.

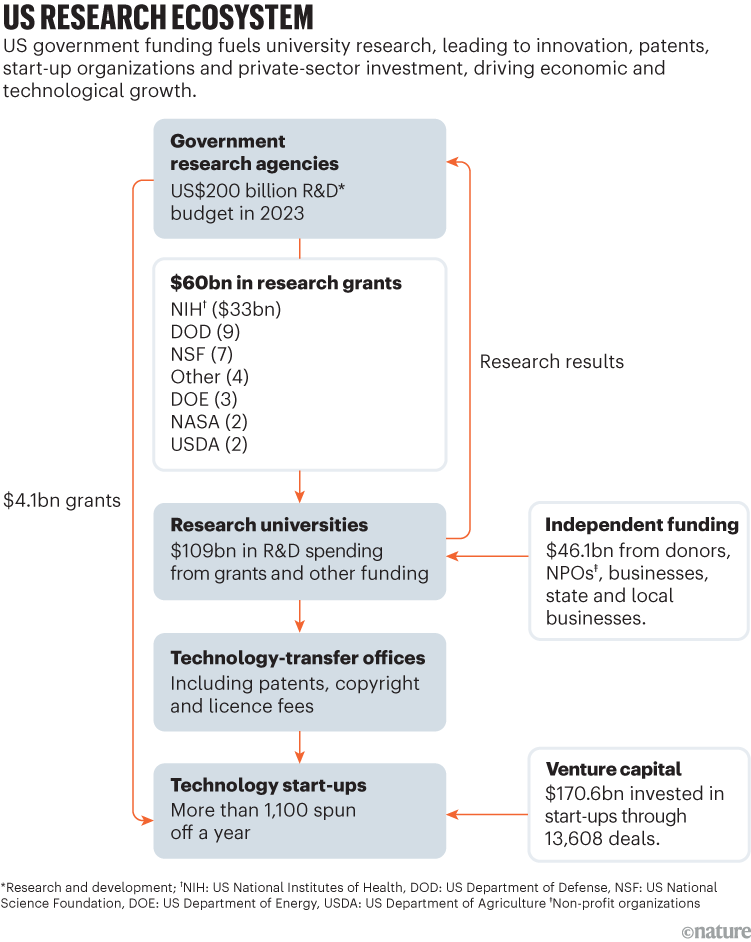

These cutbacks put the entire US research enterprise at risk. For more than eight decades, the United States has stood unrivalled as the world’s leader in scientific discovery and technological innovation. Collectively, US universities spin off more than 1,100 science-based start-up companies each year, leading to countless products that have saved and improved millions of lives, including heart and cancer drugs, and the mRNA-based vaccines that helped to bring the world out of the COVID-19 pandemic.

These breakthroughs were made possible mostly by a robust partnership between the US government and universities. This system emerged as an expedient wartime design to fund weapons research and development (R&D) in universities. It has fuelled US innovation, national security and economic growth.

Today, however, this engine is being sabotaged in the Trump administration’s attempt to purge research programmes in areas it doesn’t support, such as climate change and diversity, equity and inclusion, and to rein in campus protests. But the broader cuts are also dismantling the very infrastructure that made the United States a scientific superpower. At best, US research is at risk from friendly fire; at worst, from political short-sightedness.

Researchers mustn’t be complacent. They must communicate the difference between eliminating ideologically objectionable programmes and undermining the entire research ecosystem. Here’s why the US research system is uniquely valuable, and what stands to be lost.

Unique innovation model

The backbone of US innovation is a close partnership between government, universities and industry. It is a well-calibrated ecosystem: federally funded research at universities drives scientific advancement, which in turn spins off technology, patents and companies. This system emerged in the wake of the Second World War, rooted in the vision of US presidential science adviser Vannevar Bush and a far-sighted Congress, which recognized that US economic and military strength hinges on investment in science (see ‘Two systems’).

It need not have been this way. Before the Second World War, the United Kingdom led the world in many scientific domains, but its focus on centralized government laboratories rather than university partnerships stifled post-war commercialization. By contrast, the United States channelled wartime research funds into universities, enabling breakthroughs that were scaled up by private industry to drive the nation’s post-war economic boom. This partnership became the foundation of Silicon Valley and the aerospace, nuclear and biotechnology industries.

The US government remains the largest source of academic R&D funding globally — with a budget of US$201.9 billion for federal R&D in the financial year 2025. Out of this pot, more than two dozen research agencies direct grants to US universities, totalling $59.7 billion in 2023, with the NIH and the US National Science Foundation (NSF) receiving the most.

The agencies do this for a reason: they want professors at universities to do research for them. In exchange, the agencies get basic research from universities that moves science forward, or applied research that creates prototypes of potential products. By partnering with universities, the agencies get more value for money and quicker innovation than if they did all the research themselves.

This is because universities can leverage their investments from the government with other funds that they draw in. For example, in 2023, US universities received $27.7 billion from charitable donations, $6.2 billion in industrial collaborations, $6.7 billion from non-profit organizations, $5.4 billion from state and local government and $3.1 billion from other sources — boosting the $59.7 billion up to $108.8 billion (see ‘US research ecosystem’). This external money goes mostly to creating research labs and buildings that, as any campus visitor has seen, are often named after their donors.

Source: US Natl Center for Science and Engineering Statistics; US Congress; US Natl Venture Capital Assoc; AUTM; Small Business Administration
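The 2023 funding arithmetic quoted above can be checked with a short sketch. The dollar figures are taken from the text; the function names, and the idea of expressing the result as leverage per federal dollar, are illustrative assumptions rather than anything the source computes.

```python
# 2023 academic R&D funding by source, in billions of US dollars.
# All figures are the ones quoted in the article text.
FEDERAL = 59.7
OTHER_SOURCES = {
    "charitable donations": 27.7,
    "industrial collaborations": 6.2,
    "non-profit organizations": 6.7,
    "state and local government": 5.4,
    "other sources": 3.1,
}


def total_funding(federal, other):
    """Federal grants plus all other sources, rounded to one decimal place."""
    return round(federal + sum(other.values()), 1)


def leverage_per_federal_dollar(federal, other):
    """Non-federal dollars drawn in per federal dollar (hypothetical metric)."""
    return sum(other.values()) / federal


if __name__ == "__main__":
    print(total_funding(FEDERAL, OTHER_SOURCES))
    print(round(leverage_per_federal_dollar(FEDERAL, OTHER_SOURCES), 2))
```

On these figures the non-federal sources add $49.1 billion, which reproduces the $108.8 billion total and works out to roughly 82 cents of additional funding for every federal dollar.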

Thus, federal funding for science research in the United States is decentralized. It supports mostly curiosity-driven basic science, but also prizes innovation and commercial applicability. Academic freedom is valued and competition for grants is managed through peer review. Other nations, including China and those in Europe, tend to have more-centralized and bureaucratic approaches.

But what makes the US ecosystem so powerful is what then happens to the university research: it’s the engine for creating start-ups and jobs. In 2023, US universities licensed 3,000 patents, 3,200 copyrights and 1,600 other licences to technology start-ups and existing companies. Universities also spin off more than 1,100 science-based start-ups each year, which lead to countless products.

Since the 1980 Bayh–Dole Act, US universities have been able to retain ownership of inventions that were developed using federally funded research (see go.nature.com/4cesprf). Before this law, any patents resulting from government-funded research were owned by the government, so they often went unused.

Closing the loop, these technology start-ups also get a yearly $4-billion injection in seed-funding grants from the same government research agencies. Venture capital adds a whopping $171 billion to scale those investments.

It all adds up to a virtuous circle of discovery and innovation.

Facilities costs

A crucial but under-appreciated component of this US research ecosystem is the indirect-cost reimbursement system, which allows universities to maintain the facilities and administrative support necessary for cutting-edge research. Critics often misunderstand the function of these funds, assuming that universities can spend this money on other areas, such as diversity, equity and inclusion programmes. In reality, they fund essential infrastructure: laboratory space, compliance with safety regulations, data storage and administrative support that allows principal investigators to focus on science rather than paperwork. Without this support, universities cannot sustain world-class research.

The practice of reimbursing universities for indirect costs began during the Second World War and, like the weapons development it supported, broke new ground. Unlike in a typical fixed-price contract, the government did not set requirements for university researchers to meet or specifications for their research to follow. It asked them to do research and, if the research looked like it might solve a military problem, to build a prototype they could test. In return, the government paid the researchers for their direct and indirect research costs.

Vannevar Bush (right) led the US Office of Scientific Research and Development during the Second World War. Credit: Bettmann/Getty

At first, the government reimbursed universities for indirect costs at a flat rate of 25% of direct costs. Unlike businesses, universities had no profit margin, so indirect-cost recovery was their only way to pay for and maintain their research infrastructure. By the end of the war, some universities had agreed on a 50% rate. The rate is applied to direct costs, so that a principal investigator will be able to spend two-thirds of a grant on direct research costs and the rest will go to the university for indirect costs. (A common misconception is that indirect-cost rates are a percentage of the total grant, for example a 50% rate meaning that half of the award goes to overheads.)
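To see why a 50% rate leaves two-thirds of a grant for direct costs, here is a minimal sketch of the arithmetic. It assumes the rate applies to all direct costs, which is a simplification (real awards typically exclude some items from the base), and the function names are hypothetical.

```python
def direct_share(indirect_rate):
    """Fraction of a total award left for direct costs.

    Assumes the indirect rate is charged on direct costs, so
    total = direct * (1 + indirect_rate).
    """
    return 1 / (1 + indirect_rate)


def split_award(total_award, indirect_rate):
    """Split a total award into (direct, indirect) amounts."""
    direct = total_award * direct_share(indirect_rate)
    indirect = total_award - direct
    return direct, indirect


if __name__ == "__main__":
    # Rates mentioned in the article: the 25% wartime flat rate,
    # a 50% negotiated rate and the proposed 15% NIH cap.
    for rate in (0.25, 0.50, 0.15):
        print(f"rate {rate:.0%}: direct share {direct_share(rate):.1%}")
```

Under this assumption, a $150,000 award at a 50% rate splits into $100,000 of direct costs and $50,000 of indirect costs, so overheads are one-third of the award rather than half. At a 15% rate, about 87% of the award would go to direct costs.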

After the Second World War, the US Office of Naval Research (ONR) began negotiating indirect-cost rates with universities on the basis of actual institutional expenses. Universities had to justify their overhead costs (administration, facilities, utilities) to receive full reimbursement. The ONR formalized financial auditing processes to ensure that institutions reported indirect costs accurately. This led to the practice of negotiating indirect-cost rates, which is still used today.

Since then, the reimbursement process has been tweaked to prevent gaming the system, but has remained essentially the same. Universities negotiate their indirect-cost rates with either the US Department of Health and Human Services (HHS) or the ONR. Most research-intensive universities receive rates of 50–60% for on-campus research. Private foundations often have a lower rate (10–20%), but tend to have wider criteria for what can be considered a direct cost.