Real-world datasets for AMIE

AMIE was developed using a diverse suite of real-world datasets, including multiple-choice medical question-answering, expert-curated long-form medical reasoning, electronic health record (EHR) note summaries and large-scale transcriptions of medical conversations. As described in detail below, in addition to dialogue generation tasks, AMIE’s training task mixture included medical question-answering, reasoning and summarization tasks.

Medical reasoning

We used the MedQA (multiple-choice) dataset, consisting of US Medical Licensing Examination multiple-choice-style open-domain questions with four or five possible answers48. The training set consisted of 11,450 questions and the test set had 1,273 questions. We also curated 191 MedQA questions from the training set where clinical experts had crafted step-by-step reasoning leading to the correct answer13.

Long-form medical question-answering

The dataset used here consisted of expert-crafted long-form responses to 64 questions from HealthSearchQA, LiveQA and Medication QA in MultiMedQA12.

Medical summarization

A dataset consisting of 65 clinician-written summaries of medical notes from MIMIC-III, a large, publicly available database containing the medical records of intensive care unit patients49, was used as additional training data for AMIE. MIMIC-III contains approximately two million notes spanning 13 types, including cardiology, respiratory, radiology, physician, general, discharge, case management, consult, nursing, pharmacy, nutrition, rehabilitation and social work. Five notes from each category were selected, with a minimum total length of 400 tokens and at least one nursing note per patient. Clinicians were instructed to write abstractive summaries of individual medical notes, capturing key information while also permitting the inclusion of new informative and clarifying phrases and sentences not present in the original note.

Real-world dialogue

Here we used a de-identified dataset licensed from a dialogue research organization, comprising 98,919 audio transcripts of medical conversations during in-person clinical visits from over 1,000 clinicians over a ten-year period in the United States50. It covered 51 medical specialties (primary care, rheumatology, haematology, oncology, internal medicine and psychiatry, among others) and 168 medical conditions and visit reasons (type 2 diabetes, rheumatoid arthritis, asthma and depression being among the common conditions). Audio transcripts contained utterances from different speaker roles, such as doctors, patients and nurses. On average, a conversation had 149.8 turns (P0.25 = 75.0, P0.75 = 196.0). For each conversation, the metadata contained information about patient demographics, reason for the visit (follow-up for pre-existing condition, acute needs, annual exam and more), and diagnosis type (new, existing or other unrelated). Refer to ref. 50 for more details.

For this study, we selected dialogues involving only doctors and patients, but not other roles, such as nurses. During preprocessing, we removed paraverbal annotations, such as ‘[LAUGHING]’ and ‘[INAUDIBLE]’, from the transcripts. We then divided the dataset into training (90%) and validation (10%) sets using stratified sampling based on condition categories and reasons for visits, resulting in 89,027 conversations for training and 9,892 for validation.
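The preprocessing steps above can be sketched as follows. This is a minimal illustration under stated assumptions, not the pipeline actually used: the regular expression for paraverbal tags, the role labels `doctor` and `patient`, the choice to drop turns left empty after cleaning and the form of the stratification key are all hypothetical. Only the role filter, the tag removal and the 90/10 stratified split come from the text.

```python
import random
import re
from collections import defaultdict

# Hypothetical pattern for bracketed, upper-case paraverbal tags such as
# "[LAUGHING]" or "[INAUDIBLE]"; the real annotation inventory is not specified.
PARAVERBAL = re.compile(r"\[[A-Z][A-Z _-]*\]")
ALLOWED_ROLES = {"doctor", "patient"}


def keep_dialogue(turns):
    """Keep a dialogue only if every speaker is a doctor or a patient."""
    return all(speaker in ALLOWED_ROLES for speaker, _ in turns)


def clean_turns(turns):
    """Strip paraverbal annotations and collapse leftover whitespace."""
    cleaned = []
    for speaker, text in turns:
        text = " ".join(PARAVERBAL.sub(" ", text).split())
        if text:  # assumption: drop turns that contained only annotations
            cleaned.append((speaker, text))
    return cleaned


def stratified_split(dialogues, key, val_fraction=0.1, seed=0):
    """Split so that each stratum contributes ~val_fraction to validation."""
    rng = random.Random(seed)
    strata = defaultdict(list)
    for d in dialogues:
        strata[key(d)].append(d)
    train, val = [], []
    for _, group in sorted(strata.items()):
        rng.shuffle(group)
        n_val = round(len(group) * val_fraction)
        val.extend(group[:n_val])
        train.extend(group[n_val:])
    return train, val
```

Rounding within each stratum means the realized split only approximates 90/10; the sketch makes no attempt to reproduce the reported 89,027/9,892 counts.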

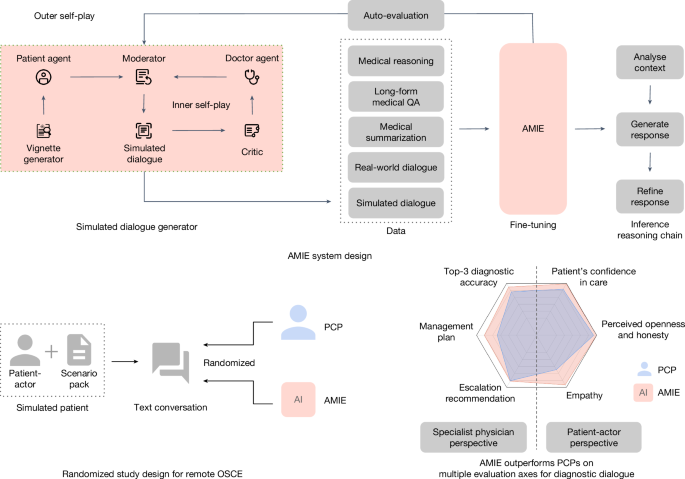

Simulated learning through self-play

While passively collecting and transcribing real-world dialogues from in-person clinical visits is feasible, two substantial challenges limit its effectiveness in training LLMs for medical conversations: (1) existing real-world data often fail to capture the vast range of medical conditions and scenarios, limiting their scalability and comprehensiveness; and (2) data derived from real-world dialogue transcripts tend to be noisy, containing ambiguous language (including slang, jargon and sarcasm), interruptions, ungrammatical utterances and implicit references. Relying on such data alone may therefore have limited AMIE’s knowledge, capabilities and applicability.

To address these limitations, we designed a self-play-based simulated learning environment for diagnostic medical dialogues in a virtual care setting, enabling us to scale AMIE’s knowledge and capabilities across a multitude of medical conditions and contexts. We used this environment to iteratively fine-tune AMIE with an evolving set of simulated dialogues in addition to the static corpus of medical question-answering, reasoning, summarization and real-world dialogue data described above.

This process consisted of two self-play loops:

- An inner self-play loop, in which AMIE leveraged in-context critic feedback to refine its behaviour on simulated conversations with an AI patient agent.
- An outer self-play loop, in which the set of refined simulated dialogues was incorporated into subsequent fine-tuning iterations. The resulting new version of AMIE could then participate in the inner loop again, creating a continuous learning cycle.

At each iteration of fine-tuning, we produced 11,686 dialogues, stemming from 5,230 different medical conditions. The conditions were selected from three datasets:

At each self-play iteration, four conversations were generated from each of the 613 common conditions, while two conversations were generated from each of the 4,617 less-common conditions randomly chosen from MedicineNet and MalaCards. The average simulated dialogue length was 21.28 turns (P0.25 = 19.0, P0.75 = 25.0).
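The per-iteration totals stated above follow directly from these per-condition counts:

```latex
\begin{aligned}
N_{\text{conditions}} &= 613 + 4{,}617 = 5{,}230,\\
N_{\text{dialogues}} &= 4 \times 613 + 2 \times 4{,}617 = 2{,}452 + 9{,}234 = 11{,}686.
\end{aligned}
```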

Simulated dialogues through self-play

To produce high-quality simulated dialogues at scale, we developed a new multi-agent framework that comprised three key components:

- A vignette generator: AMIE leveraged web searches to craft unique patient vignettes for a specific medical condition.
- A simulated dialogue generator: three LLM agents played the roles of patient agent, doctor agent and moderator, engaging in a turn-by-turn dialogue that simulated realistic diagnostic interactions.
- A self-play critic: a fourth LLM agent acted as a critic, giving feedback to the doctor agent for self-improvement.

Notably, AMIE acted as all agents in this framework.

The prompts for each of these steps are listed in Supplementary Table 3. The vignette generator aimed to create varied and realistic patient scenarios at scale, which could be subsequently used as context for generating simulated doctor–patient dialogues, thereby allowing AMIE to undergo a training process emulating exposure to a greater number of conditions and patient backgrounds. The patient vignette (scenario) included essential background information, such as patient demographics, symptoms, past medical history, past surgical history, past social history and patient questions, as well as an associated diagnosis and management plan.

For a given condition, patient vignettes were constructed using the following process. First, we used an internet search engine to retrieve 60 passages associated with the condition, 20 each on the range of demographics, symptoms and management plans. To ensure these passages were relevant to the given condition, we used the general-purpose LLM PaLM 2 (ref. 10) to filter the retrieved passages, removing any deemed unrelated to the condition. We then prompted AMIE to generate plausible patient vignettes aligned with the demographics, symptoms and management plans in the filtered passages, providing a one-shot exemplar to enforce a particular vignette format.
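The vignette construction steps can be sketched as a small pipeline. The functions `search`, `is_relevant` and `generate` are hypothetical stand-ins passed in as callables (for the search engine, the PaLM 2 relevance filter and AMIE, respectively), and the query strings and prompt wording are assumptions; the actual prompts are listed in Supplementary Table 3.

```python
from typing import Callable, List

# Aspects and per-aspect count follow the text (3 x 20 = 60 passages).
ASPECTS = ("demographics", "symptoms", "management plans")
PASSAGES_PER_ASPECT = 20


def build_vignette(
    condition: str,
    search: Callable[[str, int], List[str]],  # hypothetical search-engine wrapper
    is_relevant: Callable[[str, str], bool],  # hypothetical LLM relevance filter
    generate: Callable[[str], str],           # hypothetical vignette-writing model
    one_shot_example: str,
) -> str:
    """Retrieve, filter and condense web passages into one patient vignette."""
    passages: List[str] = []
    for aspect in ASPECTS:
        passages.extend(search(f"{condition} {aspect}", PASSAGES_PER_ASPECT))
    # Keep only passages the filter judges related to the condition.
    kept = [p for p in passages if is_relevant(condition, p)]
    # One-shot prompt: the exemplar fixes the output format.
    prompt = (
        f"Example vignette:\n{one_shot_example}\n\n"
        f"Condition: {condition}\n"
        "Reference passages:\n"
        + "\n".join(f"- {p}" for p in kept)
        + "\n\nWrite a new patient vignette in the same format."
    )
    return generate(prompt)
```

Passing the search, filter and generation steps in as callables keeps the sketch independent of any particular search API or model client.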

Given a patient vignette detailing a specific medical condition, the simulated dialogue generator was designed to simulate a realistic dialogue between a patient and a doctor in an online chat setting where in-person physical examination may not be feasible.

Three specific LLM agents (patient agent, doctor agent and moderator), each played by AMIE, were tasked with communicating with one another to generate the simulated dialogues. Each agent had distinct instructions. The patient agent embodied the individual experiencing the medical condition outlined in the vignette. Its role involved truthfully responding to the doctor agent’s inquiries, as well as raising any additional questions or concerns the patient may have had. The doctor agent played the role of an empathetic clinician seeking to comprehend the patient’s medical history within the online chat environment51. Its objective was to formulate questions that could effectively reveal the patient’s symptoms and background, leading to an accurate diagnosis and an effective treatment plan. The moderator continually assessed the ongoing dialogue between the patient agent and doctor agent, determining when the conversation had reached a natural conclusion.

The turn-by-turn dialogue simulation started with the doctor agent initiating the conversation: “Doctor: So, how can I help you today?”. The patient agent then responded, and its answer was appended to the dialogue history. The doctor agent next formulated a response based on the updated history, and this response was in turn appended. The conversation continued until the moderator determined that it had reached a natural conclusion: either the doctor agent had provided a DDx and a treatment plan and had adequately addressed any remaining questions from the patient agent, or either agent had initiated a farewell.
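The turn-taking and stopping rule can be sketched as a simple loop. The three agents are modelled as hypothetical callables over the dialogue history, and the `max_turns` safety cap is an assumption that the text does not describe.

```python
from typing import Callable, List, Tuple

Turn = Tuple[str, str]  # (speaker, utterance)
Agent = Callable[[List[Turn]], str]


def simulate_dialogue(
    doctor: Agent,
    patient: Agent,
    moderator_says_done: Callable[[List[Turn]], bool],
    max_turns: int = 60,  # assumed safety cap, not from the text
) -> List[Turn]:
    """Alternate patient and doctor turns until the moderator ends the dialogue."""
    history: List[Turn] = [("Doctor", "So, how can I help you today?")]
    while len(history) < max_turns:
        # Whoever did not speak last speaks next, seeing the full history.
        if history[-1][0] == "Doctor":
            speaker, agent = "Patient", patient
        else:
            speaker, agent = "Doctor", doctor
        history.append((speaker, agent(history)))
        # The moderator checks for a natural conclusion after every turn.
        if moderator_says_done(history):
            break
    return history
```

In the described framework all three callables would be backed by AMIE with different instructions; the patient callable would also carry the vignette in its context.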

To ensure high-quality dialogues, we implemented a tailored self-play3,52 framework specifically for the self-improvement of diagnostic conversations. This framework introduced a fourth LLM agent, also played by AMIE, to act as a ‘critic’. The critic was aware of the ground-truth diagnosis and provided in-context feedback to the doctor agent to enhance its performance in subsequent conversations.

Following the critic’s feedback, the doctor agent incorporated the suggestions to improve its responses in subsequent rounds of dialogue, each restarted from scratch with the same patient agent. Notably, the doctor agent retained access to its previous dialogue history in each new round. This self-improvement process was repeated twice to generate the dialogues used for each iteration of fine-tuning. See Supplementary Table 4 for an example of this self-critique process.
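One reading of the critic loop is sketched below: after an initial dialogue, the critic’s feedback triggers a fresh dialogue with the same patient agent, and this repeats twice. `run_dialogue` and `critique` are hypothetical callables (the critic is assumed to have the ground-truth diagnosis bound in), and treating “repeated twice” as two critique-and-regenerate rounds is an interpretation, not a detail confirmed by the text.

```python
from typing import Callable, List, Optional, Tuple

Dialogue = str  # simplified: a whole transcript as one string


def self_play_with_critic(
    run_dialogue: Callable[[List[Dialogue], Optional[str]], Dialogue],
    critique: Callable[[Dialogue], str],
    n_rounds: int = 2,
) -> Tuple[Dialogue, List[Dialogue]]:
    """Regenerate the dialogue from scratch after each round of critic feedback.

    run_dialogue(previous_dialogues, feedback) simulates a fresh conversation
    with the same patient agent; the doctor sees earlier rounds and feedback.
    critique(dialogue) returns the critic's in-context feedback.
    """
    previous: List[Dialogue] = []
    dialogue = run_dialogue([], None)  # initial round, no feedback yet
    for _ in range(n_rounds):
        feedback = critique(dialogue)
        previous.append(dialogue)
        dialogue = run_dialogue(list(previous), feedback)
    return dialogue, previous
```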

We noted that the simulated dialogues from self-play had substantially fewer conversational turns than those from the real-world data described in the previous section (on average, 21.28 versus 149.8 turns). This difference was expected, given that our self-play mechanism was designed (through instructions to the doctor and moderator agents) to simulate text-based conversations, whereas the real-world dialogue data were transcribed from in-person encounters. There are fundamental differences in communication styles between text-based and face-to-face conversations. For example, in-person encounters may afford a higher communication bandwidth, including a higher total word count and more ‘back and forth’ (that is, a greater number of conversational turns) between the physician and the patient. AMIE, in contrast, was designed for focused information gathering by means of a text-chat interface.

Instruction fine-tuning

AMIE, built upon the base LLM PaLM 2 (ref. 10), was instruction fine-tuned to enhance its capabilities for medical dialogue and reasoning. We refer the reader to the PaLM 2 technical report for more details on the base LLM architecture. Fine-tuning examples were crafted from the evolving simulated dialogue dataset generated by our four-agent procedure, as well as from the static datasets. For each task, we designed task-specific instructions telling AMIE which task it would be performing. For dialogue, this meant assuming either the patient or doctor role in the conversation, whereas for the question-answering and summarization datasets, AMIE was instead instructed to answer medical questions or summarize EHR notes. The first round of fine-tuning from the base LLM used only the static datasets, while subsequent rounds also leveraged the simulated dialogues generated through the self-play inner loop.

For dialogue generation tasks, AMIE was instructed to assume either the doctor or patient role and, given the dialogue up to a certain turn, to predict the next conversational turn. When playing the patient agent, AMIE’s instruction was to reply to the doctor agent’s questions about their symptoms, drawing upon information provided in patient scenarios. These scenarios included patient vignettes for simulated dialogues or metadata, such as demographics, visit reason and diagnosis type, for the real-world dialogue dataset. For each fine-tuning example in the patient role, the corresponding patient scenario was added to AMIE’s context. In the doctor agent role, AMIE was instructed to act as an empathetic clinician, interviewing patients about their medical history and symptoms to ultimately arrive at an accurate diagnosis. From each dialogue, we sampled, on average, three turns for each of the doctor and patient roles as the target turns to predict based on the conversation leading up to that target turn. Target turns were randomly sampled from all turns in the dialogue that had a minimum length of 30 characters.
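The target-turn sampling can be sketched as follows. The 30-character threshold comes from the text; fixing `k = 3` per role (rather than three on average) and the `(context, target)` output format are simplifying assumptions.

```python
import random
from typing import List, Tuple

Turn = Tuple[str, str]  # (speaker, utterance)

MIN_CHARS = 30      # minimum target-turn length, from the text
TURNS_PER_ROLE = 3  # fixed here; the text reports three per role on average


def sample_targets(
    dialogue: List[Turn], role: str, k: int = TURNS_PER_ROLE, seed: int = 0
) -> List[Tuple[List[Turn], str]]:
    """Return (context, target) pairs for up to k eligible turns spoken by `role`."""
    rng = random.Random(seed)
    eligible = [
        i for i, (speaker, text) in enumerate(dialogue)
        if speaker == role and len(text) >= MIN_CHARS
    ]
    chosen = sorted(rng.sample(eligible, min(k, len(eligible))))
    # The context is every turn before the target; the target is the text to predict.
    return [(dialogue[:i], dialogue[i][1]) for i in chosen]
```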

Similarly, for the EHR note summarization task, AMIE was provided with a clinical note and prompted to generate a summary of the note. Medical reasoning/QA and long-form response generation tasks followed the same set-up as in ref. 13. Notably, all tasks except dialogue generation and long-form response generation incorporated few-shot (1–5) exemplars in addition to task-specific instructions for additional context.

Chain-of-reasoning for online inference

To address the core challenge in diagnostic dialogue (effectively acquiring information under uncertainty to enhance diagnostic accuracy and confidence while maintaining positive rapport with the patient), AMIE employed a chain-of-reasoning strategy before generating a response in each dialogue turn. Here, ‘chain-of-reasoning’ refers to a series of sequential model calls, each dependent on the outputs of prior steps. Specifically, we used a three-step reasoning process, described as follows:

- Analysing patient information. Given the current conversation history, AMIE was instructed to: (1) summarize the positive and negative symptoms of the patient as well as any relevant medical/family/social history and demographic information; (2) produce a current DDx; (3) note missing information needed for a more accurate diagnosis; and (4) assess confidence in the current differential and highlight its urgency.
- Formulating response and action. Building upon the conversation history and the output of step 1, AMIE: (1) generated a response to the patient’s last message and formulated further questions to acquire missing information and refine the DDx; and (2) if necessary, recommended immediate action, such as an emergency room visit. If confident in the diagnosis based on the available information, AMIE presented the differential.
- Refining the response. AMIE revised its previous output to meet specific criteria based on the conversation history and outputs from earlier steps. The criteria were primarily related to factuality and formatting of the response (for example, avoiding factual inaccuracies about patient facts and unnecessary repetition, showing empathy and presenting information in a clear format).

This chain-of-reasoning strategy enabled AMIE to progressively refine its response conditioned on the current conversation to arrive at an informed and grounded reply.
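The three-step chain can be sketched as three sequential model calls in which each prompt includes the outputs of earlier steps. `model` is a hypothetical text-in, text-out callable, and the prompt wording paraphrases the steps above rather than reproducing AMIE’s actual prompts.

```python
from typing import Callable

Model = Callable[[str], str]  # hypothetical text-in, text-out model call


def chain_of_reasoning(model: Model, history: str) -> str:
    """Three sequential model calls; each step sees the outputs of earlier steps."""
    # Step 1: analyse the patient information gathered so far.
    analysis = model(
        "Step 1. Given the conversation below, summarize positive and negative "
        "symptoms and relevant history, give a current DDx, list missing "
        "information, and assess confidence and urgency.\n\n" + history
    )
    # Step 2: draft a reply and any recommended action, conditioned on step 1.
    draft = model(
        "Step 2. Using the conversation and the analysis, reply to the patient's "
        "last message, ask questions to fill gaps, and recommend urgent action "
        "if needed.\n\n" + history + "\n\nAnalysis:\n" + analysis
    )
    # Step 3: refine the draft for factuality, empathy and formatting.
    return model(
        "Step 3. Revise the draft so that it is factually consistent with the "
        "conversation, avoids repetition, shows empathy and is clearly "
        "formatted.\n\n" + history + "\n\nAnalysis:\n" + analysis
        + "\n\nDraft:\n" + draft
    )
```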

Evaluation

Prior works developing models for clinical dialogue have focused on metrics such as the accuracy of note-to-dialogue or dialogue-to-note generations53,54, or on natural language generation metrics such as BLEU or ROUGE scores, which fail to capture the clinical quality of a consultation55,56.

In contrast to these prior works, we sought to anchor our human evaluation in criteria more commonly used for evaluating the quality of physicians’ expertise in history-taking, including their communication skills in consultation. Additionally, we aimed to evaluate conversation quality from the perspective of both the lay participant (the participating patient-actor) and a non-participating professional observer (a physician who was not directly involved in the consultation). We surveyed the literature and interviewed clinicians working as OSCE examiners in Canada and India to identify a minimal set of peer-reviewed, published criteria that, in their view, comprehensively reflected those commonly used to evaluate both patient-centred and professional-centred aspects of clinical diagnostic dialogue. These were the consensus for PCCBP in medical interviews19, the criteria examined for history-taking skills by the Royal College of Physicians in the United Kingdom as part of their PACES (https://www.mrcpuk.org/mrcpuk-examinations/paces/marksheets)20 and the criteria proposed by the UK GMCPQ (https://edwebcontent.ed.ac.uk/sites/default/files/imports/fileManager/patient_questionnaire%20pdf_48210488.pdf) for doctors seeking patient feedback as part of professional revalidation (https://www.gmc-uk.org/registration-and-licensing/managing-your-registration/revalidation/revalidation-resources).

The resulting evaluation framework enabled assessment from two perspectives: that of the clinician and that of the lay participants in the dialogues (that is, the patient-actors). The framework included the consideration of consultation quality, structure and completeness, and the roles, responsibilities and skills of the interviewer (Extended Data Tables 1–3).

Remote OSCE study design

To compare AMIE’s performance to that of real clinicians, we conducted a randomized crossover study of blinded consultations in the style of a remote OSCE. Our OSCE study involved 20 board-certified PCPs and 20 validated patient-actors, ten of each from India and Canada, who took part in online text-based consultations (Extended Data Fig. 1). The PCPs had between 3 and 25 years of post-residency experience (median 7 years). The patient-actors comprised a mix of medical students, residents and nurse practitioners with experience in OSCE participation. We sourced 159 scenario packs from India (75), Canada (70) and the United Kingdom (14).

The scenario packs and simulated patients in our study were prepared by two OSCE laboratories (one each in Canada and India), each affiliated with a medical school and with extensive experience in preparing scenario packs and simulated patients for OSCE examinations. The UK scenario packs were sourced from the samples provided on the Membership of the Royal Colleges of Physicians UK website. Each scenario pack was associated with a ground-truth diagnosis and a set of acceptable diagnoses. The scenario packs covered conditions from the cardiovascular (31), respiratory (32), gastroenterology (33), neurology (32), urology, obstetric and gynaecology (15) domains and internal medicine (16). The scenarios are listed in Supplementary Information section 8. The paediatric and psychiatry domains were excluded from this study, as were intensive care and inpatient case management scenarios.

Indian patient-actors played the roles in all India scenario packs and 7 of the 14 UK scenario packs. Canadian patient-actors played the roles in all Canada scenario packs and the remaining 7 UK scenario packs. This assignment process resulted in 159 distinct simulated patients (that is, scenarios). Below, we use the term ‘OSCE agent’ to refer to the conversational counterpart interviewing the patient-actor (that is, either the PCP or AMIE). Supplementary Table 1 summarizes the OSCE assignment information across the three geographical locations. Each of the 159 simulated patients completed the three-step study flow depicted in Fig. 2.
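The scenario counts reported above can be cross-checked with a short tally. The figures are those stated in the text; the dictionary layout is purely illustrative.

```python
# Scenario packs by source country and by clinical domain, as reported above.
packs_by_country = {"India": 75, "Canada": 70, "UK": 14}
packs_by_domain = {
    "cardiovascular": 31,
    "respiratory": 32,
    "gastroenterology": 33,
    "neurology": 32,
    "urology, obstetric and gynaecology": 15,
    "internal medicine": 16,
}

# Each patient-actor site played its own country's packs plus half of the UK packs.
uk_half = packs_by_country["UK"] // 2
packs_by_actor_site = {
    "India": packs_by_country["India"] + uk_half,
    "Canada": packs_by_country["Canada"] + (packs_by_country["UK"] - uk_half),
}

total = sum(packs_by_country.values())
assert total == sum(packs_by_domain.values()) == sum(packs_by_actor_site.values())
n_consultations = 2 * total  # one PCP and one AMIE consultation per scenario
print(total, packs_by_actor_site, n_consultations)
```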

Online text-based consultation

The PCPs and patient-actors were primed with sample scenarios and instructions, and participated in pilot consultations before the study began to familiarize them with the interface and experiment requirements.

For the experiment, each simulated patient completed two online text-based consultations by means of a synchronous text-chat interface (Extended Data Fig. 1): one with a PCP (control) and one with AMIE (intervention). The ordering of the PCP and AMIE consultations was randomized (a counterbalanced design to control for potential order effects), and the patient-actors were not told which agent they were talking to in each consultation. The PCPs were located in the same country as the patient-actors, and were randomly drawn based on availability at the time slot specified for the consultation. The patient-actors role-played the scenario and were instructed to conclude the conversation after no more than 20 minutes. Both OSCE agents were asked (the PCPs through study-specific instructions and AMIE as part of the prompt template) not to reveal their identity, or whether they were human, under any circumstances.
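A counterbalanced random ordering of the kind described can be sketched as below. The text does not specify the randomization scheme, so shuffling the scenarios and assigning half to each order is an assumption; with 159 scenarios, one order necessarily receives one more scenario than the other.

```python
import random
from typing import Dict, Iterable, Tuple


def assign_orders(scenario_ids: Iterable[int], seed: int = 0) -> Dict[int, Tuple[str, str]]:
    """Shuffle scenarios, then give the first half AMIE-first and the rest PCP-first."""
    rng = random.Random(seed)
    ids = list(scenario_ids)
    rng.shuffle(ids)
    half = len(ids) // 2
    return {
        sid: ("AMIE", "PCP") if pos < half else ("PCP", "AMIE")
        for pos, sid in enumerate(ids)
    }
```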

Post-questionnaires

Upon conclusion of the consultation, the patient-actor and OSCE agent each completed a post-questionnaire based on the resulting consultation transcript (Extended Data Fig. 1). The post-questionnaire for patient-actors consisted of the complete GMCPQ, the PACES components for ‘Managing patient concerns’ and ‘Maintaining patient welfare’ (Extended Data Table 1) and a checklist representation of the PCCBP category for ‘Fostering the relationship’ (Extended Data Table 2). The responses the patient-actors provided to the post-questionnaire are referred to as ‘patient-actor ratings’. The post-questionnaire for the OSCE agent asked for a ranked DDx list with a minimum of three and no more than ten conditions, as well as recommendations for escalation to in-person or video-based consultation, investigations, treatments, a management plan and the need for a follow-up.

Specialist physician evaluation

Finally, a pool of 33 specialist physicians from India (18), North America (12) and the United Kingdom (3) evaluated the PCPs and AMIE with respect to the quality of their consultation and their responses to the post-questionnaire. During evaluation, the specialist physicians also had access to the full scenario pack, along with its associated ground-truth differential and additional accepted differentials. All of the data the specialist physicians had access to during evaluation are collectively referred to as ‘OSCE data’. Specialist physicians were sourced to match the specialties and geographical regions corresponding to the scenario packs included in our study, and had between 1 and 32 years of post-residency experience (median 5 years). Each set of OSCE data was evaluated by three specialist physicians randomly assigned to match the specialty and geographical region of the underlying scenario (for example, Canadian pulmonologists evaluated OSCE data from the Canada-sourced respiratory medicine scenario). Each specialist evaluated the OSCE data from both the PCP and AMIE for each given scenario. Evaluations for the PCP and AMIE were conducted by the same set of specialists in a randomized and blinded sequence.
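The rater-assignment rule can be sketched as follows. The specialist records, field names and the use of an independently shuffled PCP/AMIE order per rater are hypothetical illustrations of “randomly assigned” and “randomized and blinded sequence”; the actual assignment procedure may differ.

```python
import random
from typing import Dict, List, Tuple


def assign_raters(
    scenario: Dict[str, str],
    specialists: List[Dict[str, str]],
    n_raters: int = 3,
    seed: int = 0,
) -> List[Tuple[str, List[str]]]:
    """Pick raters matching the scenario's specialty and region, each of whom
    reviews both the PCP and AMIE OSCE data in a randomly shuffled order."""
    rng = random.Random(seed)
    pool = [
        s for s in specialists
        if s["specialty"] == scenario["specialty"] and s["region"] == scenario["region"]
    ]
    if len(pool) < n_raters:
        raise ValueError("not enough matching specialists")
    plan = []
    for rater in rng.sample(pool, n_raters):
        arms = ["PCP", "AMIE"]
        rng.shuffle(arms)  # blinded, randomized order of the two arms
        plan.append((rater["name"], arms))
    return plan
```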

Evaluation criteria included the accuracy, appropriateness and comprehensiveness of the provided DDx list, the appropriateness of recommendations regarding escalation, investigation, treatment, management plan and follow-up (Extended Data Table 3) and all PACES (Extended Data Table 1) and PCCBP (Extended Data Table 2) rating items. We also asked specialist physicians to highlight confabulations in the consultations and questionnaire responses, that is, text passages that were non-factual or that referred to information not provided in the conversation. Each OSCE scenario pack additionally supplied the specialists with scenario-specific clinical information to assist with rating the clinical quality of the consultation, such as the ideal investigation or management plans, or important aspects of the clinical history that would ideally have been elucidated for the highest quality of consultation possible. This follows common practice in OSCE examinations, in which scenario-specific clinical information is provided to examiners to ensure consistency among them, and follows the paradigm demonstrated by the Membership of the Royal Colleges of Physicians sample packs. For example, this scenario (https://www.thefederation.uk/sites/default/files/Station%202%20Scenario%20Pack%20%2816%29.pdf) informs an examiner that, for a scenario in which the patient-actor has haemoptysis, the appropriate investigations would include a chest X-ray, a high-resolution computed tomography scan of the chest, a bronchoscopy and spirometry, and that the bronchiectasis treatment options a candidate should be aware of include chest physiotherapy, mucolytics, bronchodilators and antibiotics.

Statistical analysis and reproducibility

We evaluated the top-k accuracy of the DDx lists generated by AMIE and the PCPs across all 159 simulated patients. Top-k accuracy was defined as the percentage of cases where the correct ground-truth diagnosis appeared within the top-k positions of the DDx list. For example, top-3 accuracy is the percentage of cases for which the correct ground-truth diagnosis appeared in the top three diagnosis predictions from AMIE or the PCP. Specifically, a candidate diagnosis was considered a match if the specialist rater marked it as either an exact match with the ground-truth diagnosis, or very close to or closely related to the ground-truth diagnosis (or accepted differential). Each conversation and DDx was evaluated by three specialists, and their majority vote or median rating was used to determine the accuracy and quality ratings, respectively.
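Top-k accuracy with a majority vote can be sketched as below. The text does not say at which level the vote is taken; this sketch assumes each rater first decides whether any of the top k diagnoses matches, and a case counts as a hit when a strict majority of raters say so. Voting per candidate instead can give different results.

```python
from typing import List

# cases[c][j][r] is True if rater r marked the j-th ranked diagnosis of case c
# as matching the ground truth (exact, very close or closely related, or an
# accepted differential).
Ratings = List[List[List[bool]]]


def top_k_accuracy(cases: Ratings, k: int) -> float:
    """Percentage of cases whose top-k DDx contains a match by majority vote."""
    hits = 0
    for ddx in cases:
        n_raters = len(ddx[0])
        # Each rater decides whether any of the top-k candidates matches.
        rater_hits = [
            any(candidate[r] for candidate in ddx[:k]) for r in range(n_raters)
        ]
        # The case counts as a hit when a strict majority of raters agree.
        if sum(rater_hits) > n_raters / 2:
            hits += 1
    return 100.0 * hits / len(cases)
```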

The statistical significance of the DDx accuracy was determined using two-sided bootstrap tests57 with 10,000 samples and false discovery rate (FDR) correction58 across all k. The statistical significance of the patient-actor and specialist ratings was determined using two-sided Wilcoxon signed-rank tests59, also with FDR correction. Cases where either agent received ‘Cannot rate/Does not apply’ were excluded from the test. All significance results are based on P values after FDR correction.
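The significance testing can be sketched with a paired bootstrap test and a Benjamini-Hochberg adjustment. Both are assumptions about the exact procedures in refs. 57 and 58: the bootstrap here resamples cases with replacement and centres the resampled differences on the observed difference, and FDR control is assumed to be the Benjamini-Hochberg step-up procedure.

```python
import random
from typing import List, Sequence


def paired_bootstrap_p(
    a: Sequence[int], b: Sequence[int], n_boot: int = 10_000, seed: int = 0
) -> float:
    """Two-sided paired bootstrap p-value for mean(a) - mean(b).

    a[i] and b[i] are 0/1 top-k hit indicators for the same case under the two
    agents. Cases are resampled with replacement, and the resampled mean
    difference is centred on the observed one to approximate the null.
    """
    rng = random.Random(seed)
    n = len(a)
    diffs = [x - y for x, y in zip(a, b)]
    observed = sum(diffs) / n
    extreme = 0
    for _ in range(n_boot):
        sample_mean = sum(diffs[rng.randrange(n)] for _ in range(n)) / n
        if abs(sample_mean - observed) >= abs(observed):
            extreme += 1
    return (extreme + 1) / (n_boot + 1)


def benjamini_hochberg(pvals: Sequence[float]) -> List[float]:
    """Benjamini-Hochberg adjusted p-values, returned in input order."""
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i])
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value down, enforcing monotonicity.
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, pvals[i] * m / rank)
        adjusted[i] = running_min
    return adjusted
```

For the Wilcoxon signed-rank tests on paired ratings, an existing implementation such as `scipy.stats.wilcoxon` could be used, and `statsmodels.stats.multitest.multipletests(pvals, method="fdr_bh")` provides a tested Benjamini-Hochberg correction.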

Additionally, we reiterate that the OSCE scenarios themselves were sourced from three different countries, that the patient-actors came from two separate institutions in Canada and India, and that each set of OSCE data was rated by three specialists in this study.

Related work

Clinical history-taking and the diagnostic dialogue

History-taking and the clinical interview are widely taught in both medical schools and postgraduate curricula60,61,62,63,64,65. Consensus on physician–patient communication has evolved to embrace patient-centred communication practices, with recommendations that communication in clinical encounters should address six core functions: fostering the relationship, gathering information, providing information, making decisions, responding to emotions and enabling disease- and treatment-related behaviour19,66,67. The specific skills and behaviours for meeting these goals have also been described, taught and assessed19,68 using validated tools68. Medical convention consistently holds that certain categories of information should be gathered during a clinical interview, comprising topics such as the presenting complaint, past medical history and medication history, social and family history, and systems review69,70. Clinicians’ ability to meet these goals is commonly assessed using the framework of an OSCE4,5,71. Such assessments vary in their reproducibility and implementation, and have even been adapted for remote practice as virtual OSCEs with telemedical scenarios, an issue of particular relevance during the COVID-19 pandemic72.

Conversational AI and goal-oriented dialogue

Conversational AI systems for goal-oriented dialogue and task completion have a rich history73,74,75. The emergence of transformers76 and large language models15 has led to renewed interest in this direction. The development of strategies for alignment77, self-improvement78,79,80,81 and scalable oversight mechanisms82 has enabled the large-scale deployment of such conversational systems in the real world16,83. However, the rigorous evaluation and exploration of the conversational and task-completion capabilities of such AI systems remain limited for clinical applications, where studies have largely focused on single-turn interaction use cases, such as question-answering or summarization.

AI for medical consultations and diagnostic dialogue

The majority of explorations of AI as tools for conducting medical consultations have focused on ‘symptom-checker’ applications rather than a full natural dialogue, or on topics such as the transcription of medical audio or the generation of plausible dialogue, given clinical notes or summaries84,85,86,87. Language models have been trained using clinical dialogue datasets, but these have not been comprehensively evaluated88,89. Studies have been grounded in messages between doctors and patients in commercial chat platforms (which may have altered doctor–patient engagement compared to 1:1 medical consultations)55,90,91. Many have focused largely on predicting next turns in the recorded exchanges rather than clinically meaningful metrics. Also, to date, there have been no reported studies that have examined the quality of AI models for diagnostic dialogue using the same criteria used to examine and train human physicians in dialogue and communication skills, nor studies evaluating AI systems in common frameworks, such as the OSCE.

Evaluation of diagnostic dialogue

Prior frameworks for the human evaluation of AI systems’ performance in diagnostic dialogue have been limited in detail and have not been anchored in established criteria for assessing communication skills and the quality of history-taking. For example, ref. 56 reported a five-point scale describing overall ‘human evaluation’, ref. 90 reported ‘relevance, informativeness and human likeness’ and ref. 91 reported ‘fluency, expertise and relevance’, whereas other studies have reported ‘fluency and adequacy’92 and ‘fluency and specialty’93. These criteria are far less comprehensive and specific than those taught and practised by medical professionals. A multi-agent framework for assessing the conversational capabilities of LLMs was introduced in ref. 88; however, that study was performed in the restricted setting of dermatology, used AI models to emulate both the doctor and patient sides of simulated interactions and performed only limited expert evaluation of whether the history-taking was complete.

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.