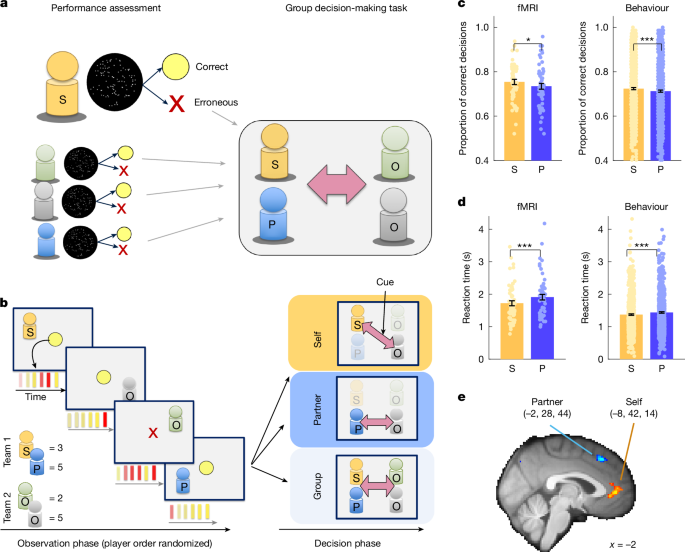

We conducted a total of four studies: a social fMRI experiment (study 1, n = 56; Fig. 3); a behavioural experiment (study 2, n = 795; Fig. 4); a control fMRI experiment (study 3, n = 32; Fig. 5); and a supplementary behavioural experiment (study 4, n = 1,022; Extended Data Fig. 5). All studies used variants of the same experimental paradigm.

Study 1: social fMRI experiment

Participants

There were initially 59 participants in the social fMRI experiment (study 1). However, two participants did not complete the scanning session and one participant repeatedly fell asleep during the experiment; these were removed from the sample, so the final sample contained 56 participants (age range 18–38 years, 33 of them female). Participants received £50 for taking part in the experiment, as well as extra earnings that were allocated according to their performance in the tasks. The ethics committee of the University of Oxford approved the study and all participants provided informed consent (MSD reference number: R60547/RE001).

Experimental procedures

At the start of the experimental procedures, and before entering the MRI scanner, participants performed a behavioural pre-experiment. They were informed that the purpose of the pre-experiment was to record their performance in a perceptual decision-making task (a random dot motion task; ref. 54) and that this performance would be used in the subsequent fMRI experiment. This was indeed the case, and the experimental procedure involved no deception. We used an automated computer algorithm to pair up the recorded performance of the participant with the performance of three previous participants. The algorithm was run after the pre-experiment, but before participants entered the MRI scanner, to create the experimental schedule. It ensured that experimental schedules were comparable across participants, balanced with respect to some key features (for example, each of the four players had approximately similar performance) and decorrelated with respect to key variables of interest (such as performance estimates across players). To ensure careful balancing, participants performed many more trials than necessary given the number of trials in the main fMRI experiment, allowing the algorithm to repeatedly subsample performances until the above-mentioned key criteria for schedules were reached. The schedules comprising veridical performances from the participant and three other players were then transferred to the computer operating the MRI experiment. The performances of the three other players in each experiment (the partner and the two opponents) were taken from log files of previous participants. For our first few participants of the fMRI experiment (when there were no preceding participants), these log files came from participants who had taken part in pilot experiments. The fMRI experiment lasted approximately 60 min. Afterwards, participants were debriefed, filled in some questionnaires that were unrelated to the current study and left.
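The repeated subsampling described above can be illustrated with a minimal rejection-sampling sketch in Python. This is not the authors' algorithm, whose exact criteria and implementation are not given here. The pool contents, the thresholds `max_mean_gap` and `max_abs_r`, the choice to sample whole six-trial scores and all function names are assumptions made purely for illustration.

```python
import random

def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5

def draw_schedule(pools, n_trials, rng, max_mean_gap=0.2, max_abs_r=0.1,
                  max_tries=10000):
    """Resample per-player score sequences until both criteria hold."""
    players = list(pools)
    for _ in range(max_tries):
        # Draw n_trials scores per player without replacement.
        sched = {p: rng.sample(pools[p], n_trials) for p in players}
        means = [sum(sched[p]) / n_trials for p in players]
        # Assumed criterion 1: players have approximately similar performance.
        balanced = max(means) - min(means) <= max_mean_gap
        # Assumed criterion 2: scores are decorrelated across players.
        decorrelated = all(
            abs(pearson(sched[a], sched[b])) <= max_abs_r
            for i, a in enumerate(players)
            for b in players[i + 1:]
        )
        if balanced and decorrelated:
            return sched
    raise RuntimeError("no schedule met the criteria")

# Synthetic pools of six-trial scores (hypothetical data, 162 per player).
base = [2] * 10 + [3] * 30 + [4] * 52 + [5] * 50 + [6] * 20
pools = {name: list(base) for name in ("S", "Pa", "O1", "O2")}
schedule = draw_schedule(pools, n_trials=144, rng=random.Random(0))
```

Drawing more candidate scores than the schedule needs (here 162 for 144 trials) is what gives the resampling loop room to find a schedule that satisfies both criteria.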

The behavioural pre-experiment that took place before scanning and the fMRI experiment were programmed in Matlab using Psychtoolbox-3 (ref. 55; http://psychtoolbox.org). For the presentation of random dot motion stimuli we used the Variable coherence random dot motion toolbox (version 2; https://shadlenlab.columbia.edu/resources/VCRDM.html).

Behavioural pre-experiment

During the pre-experiment, participants judged the motion direction of a random dot-motion kinematogram (RDK) stimulus. The participants pressed the left or right button to indicate the congruent leftwards or rightwards motion directions of the RDK stimuli. Participants were made aware that they would perform these motion judgements at varying levels of coherence, making the detection of the RDK stimuli easier or more difficult. The pre-experiment comprised 972 RDK trials using motion coherences of 3.2%, 12.8% and 25.6%. Each RDK stimulus was presented for 0.45 s and valid responses had to be made within 0.6 s after RDK offset. Failure to respond in this time window was counted as incorrect performance and indicated by a “Missed!” message on the screen. Participants were made aware of this and were instructed to avoid having missed trials. Importantly, participants did not receive performance feedback (except for missed trials) for their RDK direction judgements. The reason for this was that participants would witness and learn about their recorded, veridical performances in the subsequent fMRI experiment, so it was important not to give them this information this early in the experiment. The pre-experiment took approximately 25 min.

We used several measures to streamline the pre-experiment and the subsequent fMRI decision-making experiment in terms of the participants’ subjective experience. First, participants performed a few practice trials of the pre-experiment, in which they were given explicit performance feedback, and this feedback was cued using the same cues that also indicated successful and erroneous performance in the main fMRI experiment (a yellow coin for successful performance and a red X for erroneous performance). For participants, this measure underscored the fact that performance indices presented during fMRI scanning related to the participants’ own performances in the pre-experiment period. Second, during the pre-experiment, participants performed RDK trials in sequences of six, each followed by an intertrial interval of 1 s. This blocking of trials corresponded to the fact that in the fMRI experiment, participants observed rapid sequences of six performance cues in a row for each player on each trial. So, again, this measure was taken to align the participants’ experience of the pre-experiment with the main fMRI experiment. Third, during the entire pre-experiment, the layout of the screen was similar to the screen of the main fMRI experiment in the following way. Cues referring to self, partner and the two opponents were distributed over the screen, with each player occupying either a top-left, top-right, bottom-left or bottom-right position. These cue positions were the same in the pre-experiment and the main fMRI experiment. They remained fixed throughout the entire experimental procedures for each participant, but they were randomized and balanced across participants, with the restriction that the partner position would always be adjacent to the self position (self and partner could occupy the two upper positions, for example, or the two left-sided positions). The cue for self was not shown most of the time during the pre-experiment; instead, participants saw the RDK stimuli that they were asked to respond to in this location. However, the cues indicating the other three players were shown throughout the pre-experiment, despite being irrelevant for the task performed during the pre-experiment. This measure was used to indicate during the pre-experiment that participants’ performances would be paired with the performance of three other players in the main fMRI experiment, and to demonstrate the team pairings (self and partner versus two opponents). Hence, again, this measure served to ensure that the participants’ experiences in the pre-experiment and main fMRI experiment were similar.

Finally, in both the pre-experiment and the main fMRI experiment, we used static RDK images as cues indicating player identity, and these cues were fixed to specific screen locations (see the previous point). The RDKs were identifiable by their spatial position, their colour (white, green, orange or purple) and by two letters on them (the participant’s initials, for example MW, for self, Pa for partner and O1 and O2 for the two opponents). Static RDK images symbolized that every player’s performance cues were derived from their veridical RDK performance. This was done to remind participants in the main fMRI experiment that the performances they would observe were taken from the RDK pre-experiment. In summary, several measures were taken to illustrate to participants that their pre-experimental performance assessment seamlessly fed into the main fMRI experiment, and that their performance was important in the social context of two competing teams.

fMRI experiment

The main fMRI experiment involved completing a memory-guided social decision-making task. Each trial comprised an observation phase and a decision phase, in which participants made decisions from memory about the information presented in the observation phase. Four players’ performances were relevant in the fMRI experiment, with the players divided into two teams: the participant’s own performance (self, S), the partner’s performance (Pa) and the performance of two opponents (O1 and O2). Team membership was constant throughout the experiment, and it was predefined by the experimenters. No further information about the other players was given (such as their gender or age).

Observation phase

In the observation phase, participants observed performance cues for each player in a random but counter-balanced sequence (each player appeared first in the sequence for the same number of trials, for example). Participants’ veridical performances from the pre-experiment were paired with veridical performances from three other previous participants and displayed during this phase. The insight into pre-recorded performance was unrelated to beating the other team in the main experiment (Extended Data Fig. 2). Player identities were cued by RDK images and displayed in the same spatial positions as in the pre-experiment to reinforce continuity between the pre-experiment and the main fMRI experiment and to illustrate that the displayed performances referred to the pre-experimental performance assessment.

In the observation phase, for each player, participants observed six brief performance cues. The performance cues were always displayed centrally on the screen at the same location for each player, and successful performances were indicated by yellow coin cues and erroneous performances were indicated by a red X. Participants already knew the meaning of these cues from the pre-experiment (see the ‘Behavioural pre-experiment’ section). Because the performance cues always appeared in the same location for all players, we cued the player to whom these performances related in the following way: 400 ms before the performance cue sequence started, the RDK image of the respective player appeared in its predefined location and its dots moved slowly and coherently in one direction (randomly left or right) for 2.1 s overall. Importantly, the active RDK was simply a means to indicate the relevant player.

Participants this time did not have to discern the movement direction of the dots (which would have been easy because the coherence of the RDK was very high). They simply needed to understand that the performance cues displayed centrally represented the performance recorded for this specific player. Using the moving RDK to indicate the relevant player was, like many other small manipulations in this study, a way to enable participants to make a link between the pre-experiment and the main fMRI experiment, and to make it plausible that the displayed performance cues related to the RDK performance of the respective player. While the player’s RDK was moving, the sequence of six performance cues was shown centrally on screen. After the above-mentioned 400 ms, the first performance cue was presented for 200 ms. The remaining performance cues were shown subsequently with a delay of 100 ms between them, also for 200 ms each. During this time, the player’s RDK was still active to indicate the relevant player, and the RDK movement ended precisely at the time the sequence of six performance cues ended as well.
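The timing of one player's cue sequence can be checked with a short calculation. The Python sketch below lays out the cue onsets under the assumption that the 100 ms delay is the gap between the offset of one cue and the onset of the next; the constant and function names are illustrative.

```python
# Timing of one player's cue sequence, in milliseconds (values from the text).
RDK_LEAD_MS = 400   # RDK starts moving this long before the first cue
CUE_MS = 200        # duration of each performance cue
GAP_MS = 100        # assumed gap between one cue's offset and the next onset
N_CUES = 6

def cue_onsets():
    """Return each cue's onset relative to the start of RDK motion."""
    return [RDK_LEAD_MS + i * (CUE_MS + GAP_MS) for i in range(N_CUES)]

onsets = cue_onsets()
sequence_end_ms = onsets[-1] + CUE_MS  # offset of the sixth cue

print(onsets)           # [400, 700, 1000, 1300, 1600, 1900]
print(sequence_end_ms)  # 2100
```

Under this reading, the sixth cue ends 2,100 ms after motion onset, which agrees with the stated 2.1 s of RDK motion ending together with the cue sequence.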

For each of the four players, the performance cues were presented in exactly this fashion. A sequence of six centrally presented performance cues was shown while the player-specific RDK was active. After a player’s performance was presented, their RDK stopped moving and remained static until the decision phase started. This meant that at the end of the performance phase, all four RDKs were shown statically on screen in the location associated with the respective player. Importantly, the order of the players was fully balanced (Fig. 2). At the end, after the last player’s performance was shown and the observation phase had ended, after a Poisson-distributed jitter (1–5 s, with a mean of 2 s), the decision phase started.

Decision phase

In each trial, after the observation phase came the decision phase. During the decision phase, participants compared performance scores between players, which were displayed in the previous observation phase. The decision was between whether the relevant member(s) of one’s own team had performed better in the observation or whether the relevant member(s) of the opponent team had performed better. It was unknown which players would have to be compared until the start of the decision. Participants therefore had to memorize the performance score of all players, that is, the number of successful performances. Each player’s performance score ranged between 0 and 6 (in case no performances were successful or all of them were successful, respectively), and this had to be extracted from the series of six performance cues that were shown while that player’s RDK was active (by accumulating the yellow success cues and discarding the red error cues). Importantly, each decision phase actually comprised two decisions, and both referred to the same set of performances that had just been seen in the observation phase of the trial. Both decisions followed precisely the same logic, which is why only the first of them is shown in Fig. 1 for illustration. Note that the fact that two decisions were given, and that both referred to the same information, meant that people had to remember the performances of all four players throughout the trial, beyond the first decision and until the second decision was made.

After the temporal jitter following the observation phase, the decision phase started by presenting an arrow cue that indicated which players to compare in the decision. Which decisions were possible was constrained by the team membership of the players. There were three decision types. In self decisions, the participants’ own performance was compared with the performance of one of the two opponents (each of them equally often). The performance of the other two players (the partner and an irrelevant opponent) had to be ignored. In partner decisions, the partner’s performance was compared with the performance of one of the two opponents (each of them equally often), ignoring the performances for self and the other, irrelevant opponent. Finally, in group decisions, the sum of the performances of both groups was compared. The arrow cue indicated these different decisions by pointing at the self and a relevant opponent (self decisions), the partner and a relevant opponent (partner decisions) or at both groups (group decisions), respectively. This means that for self and partner decisions, the opponents could be divided into a relevant opponent (Or) and an irrelevant opponent (Oi), and both opponents were relevant and irrelevant for the same number of trials for both self and partner decisions. In group decisions, by contrast, both opponents were relevant.

Decisions were made by comparing the performances of the cued players. However, participants also had to factor in a non-social bonus. The bonus was cued on top of the decision arrow indicating the relevant players. The bonus was displayed as yellow coins and half-coins for a positive bonus, and red coins and half-coins for a negative bonus. A positive bonus meant that points had to be added towards the performance of one’s own team, and a negative bonus meant that points had to be added towards the performance of the opponent team. Including the bonus in decisions was useful because it meant that participants had to wait until the time of the decision cue before all the information about the decision was known. This ensured that participants would make the decisions at the time of the decision phase and not beforehand. Moreover, the bonus had a value of either −1.5, −0.5, +0.5 or +1.5. This was useful because it meant that there was always a correct response in each trial. Because the performance scores were always integers, the ±0.5 meant that one of the teams had to come out as the better one when factoring in the bonus, even if performance scores were identical. This meant that the full social decision variables (DV) for the self, partner and group were: \({\rm{DV}}_{{\rm{self}}}=S-{\rm{Or}}+B\), \({\rm{DV}}_{{\rm{partner}}}=P-{\rm{Or}}+B\) and \({\rm{DV}}_{{\rm{group}}}=S+P-{\rm{O1}}-{\rm{O2}}+B\), where S indicates one’s own performance score, P indicates the partner’s performance score, Or indicates the relevant opponent’s performance score, O1 and O2 indicate the performance scores of the two opponents, and B indicates the bonus. Note that the labels O1 and O2 are used in group decisions instead of Or because both opponents are relevant in group decisions.
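The three decision variables can be written as a short Python sketch. The function names and the Boolean encoding of cues (True for a coin, False for a red X) are illustrative assumptions. The exhaustive loop checks the claim that no decision variable is ever exactly zero when the scores are integers and the bonus is a half-integer.

```python
from itertools import product

BONUSES = (-1.5, -0.5, 0.5, 1.5)

def performance_score(cues):
    """Count successes in six cues (True = yellow coin, False = red X)."""
    return sum(1 for c in cues if c)

def dv_self(s, o_r, bonus):
    return s - o_r + bonus

def dv_partner(p, o_r, bonus):
    return p - o_r + bonus

def dv_group(s, p, o1, o2, bonus):
    return s + p - o1 - o2 + bonus

# Check every combination of integer scores (0..6) and bonus values.
scores = range(7)
all_nonzero = all(
    dv_group(s, p, o1, o2, b) != 0
    and dv_self(s, o1, b) != 0
    and dv_partner(p, o1, b) != 0
    for s, p, o1, o2 in product(scores, repeat=4)
    for b in BONUSES
)
print(all_nonzero)  # True

# Equal scores (4 versus 4): the bonus alone decides the comparison.
own = performance_score([True, True, False, True, False, True])
print(dv_self(own, 4, -0.5))  # -0.5
```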

The decision was presented as an engage/avoid decision (refs. 6, 25) using two buttons. The decision was to compare the performances of the relevant players (factoring in the bonus) and indicate whether the relevant member(s) of one’s own team had performed better in the observation or whether the relevant member(s) of the opponent team had performed better. Deciding to engage meant choosing one’s own team, and this was indicated, after the respective button press, by a large box appearing around the two RDKs symbolizing one’s own team. Making that choice indicated that the relevant member(s) of one’s own team was estimated as better in performance than the other team’s relevant player(s) (also factoring in the bonus). The pay-off from the engage choice was the veridical DV for that trial. This meant that, if one’s own team was indeed better, and the DV was, say, +2 (see the equation above for the DV calculation), then the outcome would be +2. However, if the choice was engage and the DV was, say, −1, then the outcome would be −1. By contrast, the outcome of choosing the opponent team (making the avoid choice) would always lead to a pay-off of zero. This pay-off scheme meant that it was always beneficial to make the correct choice to maximize the reward outcome of a trial. It meant choosing engage only if one’s own team was indeed better, and choosing avoid when this was not the case to avoid losing points. Feedback about whether the correct choice was made was not given. Points were accumulated over the course of the experiment and translated into a small amount of extra bonus payment at the end of the experiment. Participants also received an additional pay-off that was proportional to how many points their partner had collected over the course of their experiment. The latter was based on a veridical readout of how many points their partner had accumulated when they had done the fMRI decision-making experiment.
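The pay-off rule can be summarized in a few lines of Python. This is a sketch of the rule as described above, with illustrative function names; the example DVs are half-integers because the bonus is always included.

```python
def trial_payoff(dv, engage):
    """Engage pays the veridical DV of the trial; avoid always pays zero."""
    return dv if engage else 0.0

def best_choice(dv):
    """Engage only when one's own side is ahead after the bonus (DV > 0)."""
    return dv > 0

example_dvs = [2.5, 0.5, -0.5, -1.5]
payoffs = [trial_payoff(dv, best_choice(dv)) for dv in example_dvs]
print(payoffs)  # [2.5, 0.5, 0.0, 0.0]
```

Choosing correctly on every trial therefore yields max(DV, 0), which is why the correct choice always maximizes the outcome of a trial.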

Note that, in the context of the decision-making experiment, self and partner were equally important for the task. Both self and partner decisions were equally frequent in the experimental schedule. The pay-off from a trial with the same DV was the same whether it was a partner trial or a self trial. Therefore, estimating the partner’s performance had precisely the same influence on performing the task successfully as estimating one’s own performance correctly.

Each decision lasted until a response was given. Afterwards, for 0.5 s, a box around either one’s own team or the opponent’s team indicated whether an engage (a box around one’s own team) or an avoid decision (a box around the opponent team) had been made. After the first decision, there was a temporal jitter of 2–8 s (Poisson distributed, mean = 3.5 s) until the second decision started. Note that the second decision could not be a comparison between the same players as the first decision. This meant that after a group decision, there could not be another group decision in the same trial. After a self decision with O1 as the relevant opponent, there could be another self decision with O2 as the relevant opponent (but not another one with self and O1). After the second decision, there was an intertrial interval of 1–5 s (mean = 2 s), and then the next trial started.

Experimental schedule

The experiment comprised 144 trials. Therefore, 288 decisions were made, and these were evenly distributed between self decisions, partner decisions and group decisions. As described above, generating schedules from the pre-experiment by using an algorithm ensured precisely balanced performances for each of the four players for all participants.

Basis functions and their weight vectors

A set of three sequential basis functions forms a basis for the sequential decision space in our study. We define a matrix W comprising three row vectors of basis functions (w1, w2, w3) as W = (w1, w2, w3)T, where T denotes the transpose operation. We refer to the projections of the sequential performances observed during the observation phase onto the basis functions as b = (b1, b2, b3)T. We refer to the sequential performance scores as pos = (pos1, pos2, pos3, pos4)T. For example, pos1 is the performance score observed for the first player in the observation phase sequence irrespective of identity; it is a number between 0 and 6 on each trial, reflecting the aggregated performance scores. Performance scores per player have to be extracted from the series of performance cues indicating either successful or erroneous performance presented for each player. We used the following set of basis functions w1, w2 and w3:

\({\bf{w}}_{1}=[-1,\,1,-1,\,1],\) (1)

\({\bf{w}}_{2}=[1,\,1,-1,-1],\) (2)

\({\bf{w}}_{3}=[1,-1,-1,\,1].\) (3)

The position of the weight indicates the sequential position of the respective player in the sequence of performances that were presented at the beginning of every trial. For example, w1(2) refers to the second player in the sequence, who is given a positive weight. Importantly, basis functions are defined sequentially, and not in an agent-centric frame of reference (the latter uses positive signs for one’s own team and negative signs for the opponent’s team). The projections onto the basis functions are the dot product of the weight matrix and the sequential performance scores: b = Wpos (see the main text and Fig. 2 for an example calculation). This means the basis function projections are defined as:

\({b}_{1}={\bf{w}}_{1}\cdot {\bf{pos}},\) (4)

\({b}_{2}={\bf{w}}_{2}\cdot {\bf{pos}},\) (5)

\({b}_{3}={\bf{w}}_{3}\cdot {\bf{pos}}.\) (6)
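As a concrete illustration of b = Wpos, the following Python sketch computes the three projections for one sequence of performance scores. The example scores are hypothetical and the helper names `dot` and `project` are assumptions made for illustration.

```python
# Basis functions in sequential order (rows of W).
W = [
    [-1,  1, -1,  1],   # w1
    [ 1,  1, -1, -1],   # w2
    [ 1, -1, -1,  1],   # w3
]

def dot(u, v):
    """Dot product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))

def project(pos):
    """Return b = W pos, the projections onto the three basis functions."""
    return [dot(w, pos) for w in W]

pos = [4, 2, 5, 3]   # hypothetical scores in order of presentation
b = project(pos)
print(b)  # [-4, -2, 0]
```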

The three basis functions (w1, w2, w3) have two important features. The first is that they are pairwise orthogonal, and the second is that all group and dyadic weight vectors can be derived from them (that is, they form a basis for sequential decision space in our task). Pairwise orthogonality means:

\({\bf{w}}_{i}\cdot {\bf{w}}_{j}=0\) for all \(i\ne j.\) (7)

That the three basis functions form a basis for sequential decision space means that all the possible sequential comparisons afforded by our experimental design can be defined with them. First, the three weight vectors w1, w2 and w3 already represent all possible group decisions made in a sequential frame of reference. This means that they capture all the possible pairings of a team of two players with a positive sign and a team of two other players with a negative sign in a four-player sequence. Note that the overall signs of the weight vectors are arbitrary (for example, whether it is [1, 1, −1, −1] or [−1, −1, 1, 1]) because we only care about the comparison itself, irrespective of whether a team is one’s own team or the opponent’s team. Inverted contrasts can easily be constructed by multiplication with −1 and are therefore omitted from the list of contrasts here (for example, [−1, −1, 1, 1] = −1 × [1, 1, −1, −1]).
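Both properties can be verified by brute force. The Python sketch below checks pairwise orthogonality and enumerates every two-versus-two split of the four sequence positions, confirming that, up to overall sign, these splits are exactly w1, w2 and w3. The `canonical` helper, which fixes the arbitrary overall sign by making the first weight positive, is a device introduced for this illustration only.

```python
from itertools import combinations

W = {1: (-1, 1, -1, 1), 2: (1, 1, -1, -1), 3: (1, -1, -1, 1)}

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def canonical(v):
    """Flip the overall sign so that the first weight is positive."""
    return tuple(v) if v[0] > 0 else tuple(-x for x in v)

# Pairwise orthogonality of the three basis functions.
orthogonal = all(dot(W[i], W[j]) == 0 for i, j in combinations(W, 2))

# Every way of giving two of the four positions +1 and the other two -1.
splits = {
    canonical([1 if k in team else -1 for k in range(4)])
    for team in combinations(range(4), 2)
}
basis = {canonical(w) for w in W.values()}

print(orthogonal)       # True
print(len(splits))      # 3
print(splits == basis)  # True
```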

The dyadic weight vectors require participants to ignore two players and compare only one player per team. Therefore, they are expressed as contrasts, such as [0, 1, −1, 0], that contain two zeros (the irrelevant players), and one positive and one negative weight (the relevant players being compared). For example, the contrast [0, 1, −1, 0] indicates that the performance presented at the second time point in the sequence must be compared with the third performance of the sequence (pos2 versus pos3). Again, inverted dyadic weight vectors (such as [0, −1, 1, 0]) can easily be constructed by multiplication with −1. Regarding the dyadic comparisons, below is the complete list of all possible sequential dyadic weight vectors and how these comparisons are linear combinations of the basis functions w1, w2 and w3. Again, we omit sign-inverted contrasts. Note that the numbering of the contrasts corresponds to the main text (see Fig. 2).

\({\bf{w}}_{4}=[0,\,0,-1,\,1]=([-1,\,1,-1,\,1]+[1,-1,-1,\,1])/2=({\bf{w}}_{1}+{\bf{w}}_{3})/2\) (8)

\({\bf{w}}_{5}=[0,\,1,\,0,-1]=([1,\,1,-1,-1]-[1,-1,-1,\,1])/2=({\bf{w}}_{2}-{\bf{w}}_{3})/2\) (9)

\({\bf{w}}_{6}=[0,\,1,-1,\,0]=([-1,\,1,-1,\,1]+[1,\,1,-1,-1])/2=({\bf{w}}_{1}+{\bf{w}}_{2})/2\) (10)

\({\bf{w}}_{7}=[-1,\,0,\,0,\,1]=([-1,\,1,-1,\,1]-[1,\,1,-1,-1])/2=({\bf{w}}_{1}-{\bf{w}}_{2})/2\) (11)

\({\bf{w}}_{8}=[1,\,0,-1,\,0]=([1,\,1,-1,-1]+[1,-1,-1,\,1])/2=({\bf{w}}_{2}+{\bf{w}}_{3})/2\) (12)

\({\bf{w}}_{9}=[1,-1,\,0,\,0]=([1,-1,-1,\,1]-[-1,\,1,-1,\,1])/2=({\bf{w}}_{3}-{\bf{w}}_{1})/2\) (13)
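The six identities for the dyadic weight vectors can be checked mechanically. The Python sketch below recomputes each vector from its stated combination of basis functions and also confirms that the six vectors cover every pair of sequence positions. The `DYADIC` table and the `combine` helper are illustrative names.

```python
W = {1: (-1, 1, -1, 1), 2: (1, 1, -1, -1), 3: (1, -1, -1, 1)}

# index: (target vector, [(coefficient, basis index), ...]) as listed above
DYADIC = {
    4: ((0, 0, -1, 1), [(1, 1), (1, 3)]),    # (w1 + w3) / 2
    5: ((0, 1, 0, -1), [(1, 2), (-1, 3)]),   # (w2 - w3) / 2
    6: ((0, 1, -1, 0), [(1, 1), (1, 2)]),    # (w1 + w2) / 2
    7: ((-1, 0, 0, 1), [(1, 1), (-1, 2)]),   # (w1 - w2) / 2
    8: ((1, 0, -1, 0), [(1, 2), (1, 3)]),    # (w2 + w3) / 2
    9: ((1, -1, 0, 0), [(1, 3), (-1, 1)]),   # (w3 - w1) / 2
}

def combine(terms):
    """Half the signed sum of basis vectors (all entries are even before halving)."""
    summed = [sum(a * W[i][k] for a, i in terms) for k in range(4)]
    return tuple(x // 2 for x in summed)

checks = {k: combine(terms) == target for k, (target, terms) in DYADIC.items()}
print(all(checks.values()))  # True

# The six vectors compare six distinct pairs of positions (4 choose 2 = 6).
pairs = {frozenset(k for k, x in enumerate(t) if x != 0) for t, _ in DYADIC.values()}
print(len(pairs))  # 6
```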

For these reasons, w1, w2 and w3 form an orthogonal basis for all sequential decision contrasts made in this experiment. Just as the three basis functions w1, w2 and w3 are sufficient to define all the relevant sequential comparisons in the context of this task, so are the projections onto the basis functions sufficient to compute the actual performance differences (the decision variables associated with those contrasts). Note that participants also factor in the non-social bonus as well as this sequential decision variable (see the ‘Decision phase’ section). Because the weight vectors w1 to w9 define all the possible sequential comparisons, the DVs associated with these contrasts can be calculated as:

\({\rm{DV}}_{i}={\bf{w}}_{i}\cdot {\bf{pos}},\quad {\rm{for}}\,i\in \{1,\,\ldots ,\,9\}.\) (14)

Because the dot product is distributive over vector addition, the fact that the three basis functions w1, w2 and w3 can be linearly combined to construct all other weight vectors implies that the projections onto the basis functions can be combined in precisely the same way to construct all the DVs:

\({b}_{i}+{b}_{j}={\bf{w}}_{i}\cdot {\bf{pos}}+{\bf{w}}_{j}\cdot {\bf{pos}}=({\bf{w}}_{i}+{\bf{w}}_{j})\cdot {\bf{pos}}.\) (15)

Specifically, this means:

\({\rm{DV}}_{k}={a}_{i}{b}_{i}+{a}_{j}{b}_{j}={a}_{i}{\bf{w}}_{i}\cdot {\bf{pos}}+{a}_{j}{\bf{w}}_{j}\cdot {\bf{pos}}=({a}_{i}{\bf{w}}_{i}+{a}_{j}{\bf{w}}_{j})\cdot {\bf{pos}}\), for k ∈ {1, 2, 3}, with i, j ∈ {1, 2, 3} and ai, aj ∈ {0, 1} (16)

and

\({\rm{DV}}_{k}=({a}_{i}{b}_{i}+{a}_{j}{b}_{j})/2=({a}_{i}{\bf{w}}_{i}\cdot {\bf{pos}}+{a}_{j}{\bf{w}}_{j}\cdot {\bf{pos}})/2=[({a}_{i}{\bf{w}}_{i}+{a}_{j}{\bf{w}}_{j})/2]\cdot {\bf{pos}}\), for k ∈ {4, 5, …, 9}, with i, j ∈ {1, 2, 3} and ai, aj ∈ {−1, 1}. (17)
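To make the distributivity argument concrete, the Python sketch below computes three dyadic DVs twice: once from the basis function projections and once by directly subtracting the two relevant scores. The score sequence is hypothetical and the variable names are illustrative.

```python
W = {1: (-1, 1, -1, 1), 2: (1, 1, -1, -1), 3: (1, -1, -1, 1)}

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

pos = [4, 2, 5, 3]                           # hypothetical scores in sequence order
b = {i: dot(w, pos) for i, w in W.items()}   # projections: {1: -4, 2: -2, 3: 0}

# w6 = (w1 + w2) / 2 compares position 2 with position 3.
dv6_from_projections = (b[1] + b[2]) / 2
dv6_direct = pos[1] - pos[2]

# w9 = (w3 - w1) / 2 compares position 1 with position 2.
dv9_from_projections = (b[3] - b[1]) / 2
dv9_direct = pos[0] - pos[1]

# w5 = (w2 - w3) / 2 compares position 2 with position 4.
dv5_from_projections = (b[2] - b[3]) / 2
dv5_direct = pos[1] - pos[3]

print(dv6_from_projections, dv6_direct)  # -3.0 -3
print(dv9_from_projections, dv9_direct)  # 2.0 2
print(dv5_from_projections, dv5_direct)  # -1.0 -1
```

The group DVs (k = 1, 2, 3) need no combination step, because they are the projections b1, b2 and b3 themselves.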

Sorting of basis functions and transformation to choice

The basis functions can easily be used to derive sequential decision variables from the observed performance sequence. Basis functions can be sorted on the basis of relevance. We define the primary basis function as the one that coincides with the groupings of the two teams in the four-player sequence during the observation phase. The projection onto the primary basis function serves as a sequential DV for group decisions. The secondary basis function is defined as the one other basis function that in combination with the primary basis function results in the dyadic decision that is currently relevant. Secondary basis functions are defined only for dyadic decisions and are known only once the decision is revealed. Finally, tertiary basis functions are defined as the remaining basis function that is not relevant for a current dyadic decision.
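The sorting rule can be sketched in Python as follows. The function `sort_basis`, the player labels and the example presentation order are hypothetical. Identifying the secondary basis function as the non-primary one with a non-zero dot product with the dyadic contrast is a shortcut that follows from pairwise orthogonality, not a procedure taken from the text.

```python
W = {1: (-1, 1, -1, 1), 2: (1, 1, -1, -1), 3: (1, -1, -1, 1)}
OWN_TEAM = {"S", "Pa"}

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def sort_basis(order, own_player, opponent):
    """Return (primary, secondary, tertiary) basis indices for a dyadic decision.

    order: player identities in presentation order, e.g. ["O1", "S", "O2", "Pa"].
    """
    # Primary: the basis function that gives both own-team members the same sign.
    own_idx = [k for k, name in enumerate(order) if name in OWN_TEAM]
    primary = next(i for i, w in W.items() if w[own_idx[0]] == w[own_idx[1]])
    # Sequential dyadic contrast for the cued comparison (overall sign arbitrary).
    d = [0, 0, 0, 0]
    d[order.index(own_player)] = 1
    d[order.index(opponent)] = -1
    # Secondary: the other basis function that contributes to the contrast.
    secondary = next(i for i, w in W.items() if i != primary and dot(w, d) != 0)
    # Tertiary: the remaining basis function, orthogonal to the contrast.
    tertiary = next(i for i in W if i not in (primary, secondary))
    return primary, secondary, tertiary

order = ["O1", "S", "O2", "Pa"]
print(sort_basis(order, "S", "O1"))   # (1, 3, 2)
print(sort_basis(order, "S", "O2"))   # (1, 2, 3)
print(sort_basis(order, "Pa", "O2"))  # (1, 3, 2)
```

For group decisions only the primary basis function is needed, so the secondary and tertiary labels apply to dyadic decisions alone.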

To reach an agent-centric DV, both the primary and secondary basis functions need to be in the reference frame of the two teams. If this is already the case, the agent-centric decision variable is a simple linear addition of both primary and secondary basis functions (plus the non-social bonus). However, sometimes the basis functions have to be sign inverted to align with the agent-centric perspective. We refer to this as sign inversion and refer to the process of transforming the basis function from a sequential frame of reference to an agent-centric social frame of reference as inversion of the basis function projections. Regarding the primary basis function, this means that the weights of the corresponding weight vector must be in accordance with the player identities and assign positive weights to the players of one’s own group and negative weights to the opponents’ group. The agent-centred primary projection is therefore independent of any sequence information, and simply assigns positive weights to one’s own team and negative weights to the opponents’ team. Hence, the agent-centred primary basis function captures the difference in performance between one’s own team minus the performance of the other team. Note that our neural analyses investigating primary and secondary basis function projections contain, as control variables, these same basis function projections, but transformed in this agent-centric reference frame (inverted).

The secondary basis function also needs to be transformed into an agent-centric space to arrive at an agent-centric DV for dyadic decisions. In the same manner as the agent-centred primary basis function, the agent-centred secondary basis function assigns positive weights to the relevant player from one’s own group, and negative weights for the relevant player from the opponents’ group. However, different from the agent-centred primary basis function, the agent-centred secondary basis function assigns a negative weight to the irrelevant player from one’s own group and a positive weight to the irrelevant player from the opponents’ team. For example, in a self trial, the following set of weights is required to make the correct decision:

\({\bf{w}}_{{\rm{DEC-self}}}=[S,\,P,\,{\rm{Or}},\,{\rm{Oi}}]=[1,\,0,-1,\,0]\) (18)

(note that positions in this vector do not denote sequence positions, but simply refer to self (S), partner (P), relevant opponent (Or) and irrelevant opponent (Oi)).

The inverted (that is, agent-centric social) primary and secondary weight vectors for this comparison are:

\({\bf{w}}_{{\rm{primary-inverted}}}=[S,\,P,\,{\rm{Or}},\,{\rm{Oi}}]=[1,\,1,-1,-1],\) (19)

\({\bf{w}}_{{\rm{secondary-inverted}}}=[S,\,P,\,{\rm{Or}},\,{\rm{Oi}}]=[1,-1,-1,\,1].\) (20)

Note that, again, in these equations, positions inside the vectors do not refer to the sequential position in the observation phase, but simply denote a player’s identity. In this manner, the irrelevant players cancel out when linearly combining the two agent-centred basis functions (compare with the example given in Fig. 3). For example, for self decisions, in which the partner and one of the opponents are irrelevant:

\({{\bf{w}}}_{{\rm{DEC-self}}}=[{\bf{S}},\,{\bf{P}},\,{\rm{Or}},\,{\rm{Oi}}]=({{\bf{w}}}_{{\rm{primary-inverted}}}+{{\bf{w}}}_{{\rm{secondary-inverted}}})/2=([1,\,1,\,-1,\,-1]+[1,\,-1,\,-1,\,1])/2=[2,\,0,\,-2,\,0]/2=[1,\,0,\,-1,\,0].\) (21)

As the dot product is distributive over vector addition (see ‘Basis functions and their weight vectors’ section), it follows that the primary and secondary basis projections, when averaged together, provide a simple route to the agent-centric decision variable for dyadic decisions (note that the non-social bonus still needs to be added and this is considered accordingly in all analyses).
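The cancellation in equations 19–21 can be checked numerically. The following is a minimal sketch in plain Python; the performance scores are hypothetical values chosen only for illustration, and the helper names `dot` and `average` are not taken from the original analysis code.

```python
# Weight vectors over player identities [S, P, Or, Oi] (equations 19 and 20).
w_primary_inverted = [1, 1, -1, -1]
w_secondary_inverted = [1, -1, -1, 1]


def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def average(u, v):
    """Element-wise average of two equal-length vectors."""
    return [(a + b) / 2 for a, b in zip(u, v)]


# Equation 21: averaging the two inverted weight vectors.
w_dec_self = average(w_primary_inverted, w_secondary_inverted)

# Hypothetical performance scores (0-6) for S, P, Or and Oi on one trial.
scores = [5, 2, 3, 6]

# Route 1: project onto each basis function, then average the projections.
proj_primary = dot(w_primary_inverted, scores)
proj_secondary = dot(w_secondary_inverted, scores)
dv_from_projections = (proj_primary + proj_secondary) / 2

# Route 2: apply the agent-centric decision weights directly (S minus Or).
dv_direct = dot(w_dec_self, scores)

print(w_dec_self, dv_from_projections, dv_direct)
```

Both routes give the same value because the dot product distributes over vector addition; the non-social bonus is left out of this sketch and would still need to be added.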

Imaging data acquisition and preprocessing

Imaging data were acquired using a 3-Tesla Siemens MRI scanner with a 64-channel head coil. T1-weighted structural images were collected with an echo time (TE) of 3.97 ms, a repetition time (TR) of 1.9 s and a voxel size of 1 mm × 1 mm × 1 mm. Functional images were collected using a multiband T2*-weighted echo planar imaging sequence with an acceleration factor of two with TE = 30 ms, TR = 1.2 s, a voxel size of 2.4 mm × 2.4 mm × 2.4 mm, a 60° flip angle, a field of view of 216 mm and 60 slices per volume. Most scanning data were collected with an oblique angle of 30° to the PC–AC line to avoid signal dropout in orbitofrontal regions56. Two field-map scans (sequence parameters: TE1, 4.92 ms; TE2, 7.38 ms; TR, 4,482 ms; flip angle, 46°; voxel size, 2 mm × 2 mm × 2 mm) of the B0 field were also acquired and used to assist distortion correction.

The FMRIB Software Library (FSL) was used to analyse the imaging data57. We preprocessed the data using field-map correction, temporal filtering (3 dB cut-off, 100 s), spatial filtering (Gaussian with a full-width at half-maximum of 5 mm) and motion correction with FSL MCFLIRT. The functional scans were registered to standard MNI space using a two-step process: first, each subject’s whole-brain EPI was registered to the T1 structural image using BBR with (nonlinear) field-map distortion correction; second, the subject’s T1 structural scan was registered to a 1 mm standard space using an affine transformation followed by nonlinear registration. We used FSL MELODIC to filter out noise components after visual inspection.

fMRI whole-brain analysis

We used FSL FEAT for first-level analysis. First, data were pre-whitened with FSL FILM to account for temporal autocorrelations57. Temporal derivatives and standard motion parameters were included in the model and we used a double gamma HRF58,59. Results were calculated using automatic outlier-deweighting and FSL FLAME 1 with a cluster-correction threshold of z > 3.1 and P < 0.05.

For all whole-brain analyses, all non-constant regressors were normalized to a mean of zero and a standard deviation of 1. In self and partner decisions, we refer to O1 and O2 as Or and Oi, depending on whether or not participants were asked to compare against that opponent’s performance.

fMRI GLM1

In a first GLM (fMRI GLM1), we modelled each RDK as a 2-s constant event time-locked to its onset. This constant captured the player-unspecific variance in the BOLD signal for all random dot motion events. As well as this constant, we specified four parametric regressors that were specific to the performance of each of the players and captured their parametric performance score for this trial (0–6). These regressors also had a duration of 2 s to match the constant’s duration and were time-locked to the onset of the corresponding players’ RDK. Related to participants’ decisions, we constructed six regressors to capture the main activation for decisions, binned by condition and decision number (first or second after the RDK). This meant we had one constant for self decisions that came first (S1) and one constant for self decisions that came second (S2), and did the same for partner decisions and group decisions (termed P1, P2, G1 and G2). These constants had a duration of 2 s, which was the average time participants took to make choices. Furthermore, parametric regressors of interest were time-locked to the same constant effects. For self and partner decisions, we used the following parametric regressors: S performance (indicating performance score associated with self); P performance (indicating performance score associated with partner); Or performance (indicating performance score associated with the relevant opponent); Oi performance (indicating performance score associated with the irrelevant opponent); and bonus.

This meant that we used four sets of these parametric regressors, which were each time-locked to the onsets of S1, P1, S2 and P2. The duration of these regressors was also set to 2 s to match the main effects. For group decisions, we used the following set of parametric regressors, each of a duration of 2 s: S performance, P performance, O1 performance, O2 performance and bonus.

Using the same logic as for the other trial types, we used two sets of these regressors, separately time-locked to G1 and G2. Note that O1 and O2, the two opponents, were clearly identifiable because the letters O1 and O2 were overlaid over their cues. We coded the fMRI regressors in line with these identities, even though other features of the opponents, such as their position on screen and colour, were randomized across participants (see Extended Data Fig. 1 for details of the visual presentation). Finally, as regressors of no-interest, we modelled button responses as regressors time-locked to all button responses, setting the duration to a standard duration of 0.1 s.

In Fig. 1, we present the effects of S performance during S2 and P performance during P2.

fMRI GLM2

In the second GLM (fMRI GLM2), we focused on the representation of the basis functions towards the end of the observation phase. As in all analyses related to the basis functions, we tested the parametric effects of the trialwise projections onto the basis functions. We modelled the constant effect of RDKs by time-locking a stick function (duration of 0.1 s) to a time 2 s after the offset of the last RDK in the sequence of four RDKs that were presented at the start of each trial. This time point coincided precisely with the average onset of the first decision. We time-locked several parametric regressors to the same time point, each with the same standard duration of 0.1 s: b1, b2, b3, S performance, P performance, O1 performance and O2 performance.

Again, each parametric regressor was normalized. We also used two parametric regressors related to the position of self and partner in the sequence (S-position and P-position). Each could have a value between 1 and 4 depending on the sequential position of that player. We time-locked these latter two parametric effects to the offset of the last RDK in the sequence, when all performances had been presented.

We took care to include regressors that account for decision-related activity. We coded the different decision types as three constants, each with a duration of 2 s, as in the previous design: S, P and G decisions. Each constant was accompanied by parametric regressors of the same timing that captured decision-related activations: DV, the DV relevant for the current decision, including bonus; DV × C, DV in interaction with choice (engage or avoid on the current trial); choice, a binary variable coded as engage/avoid; DVi, the performance difference of the irrelevant players, coded as own team member versus opponent team member (only defined for self and partner, not group decisions); and DVi × C, DVi in interaction with choice.

All interactions were calculated by normalizing both components of the interaction to a mean of zero and a standard deviation of 1, and then multiplying both. Finally, as regressors of no-interest, we modelled button responses as regressors time-locked to all button responses, setting the duration to a standard duration of 0.1 s.
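The interaction rule described above (normalize each component, then multiply) can be sketched as follows. This is an illustrative sketch only: the trial values are invented, the function names are hypothetical, and the use of the sample standard deviation is an assumption, because the text does not state which estimator was used.

```python
from statistics import mean, stdev


def zscore(values):
    """Normalize to a mean of zero and a standard deviation of 1.

    Assumption: sample standard deviation (n - 1 denominator).
    """
    m = mean(values)
    sd = stdev(values)
    return [(v - m) / sd for v in values]


def interaction(a, b):
    """Interaction regressor: z-score both components, then multiply trialwise."""
    za, zb = zscore(a), zscore(b)
    return [x * y for x, y in zip(za, zb)]


# Hypothetical trialwise decision variable and binary choice (1 = engage, 0 = avoid).
dv = [2.0, -1.0, 0.5, 3.0, -2.5, 1.0]
choice = [1, 0, 1, 1, 0, 0]

dv_x_choice = interaction(dv, choice)
print(dv_x_choice)
```

Normalizing before multiplying means the product is positive on trials where both components deviate from their means in the same direction, for example a high DV on an engage trial.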

On the contrast level, we combined all basis function projections (b1, b2 and b3) and the S-position regressor, each weighted evenly ([1, 1, 1, 1] contrast; Fig. 3).

fMRI GLM3

In this design (fMRI GLM3), we again modelled each random dot-motion kinematogram as a 2 s constant event time-locked to its onset. We combined self and partner decisions to one category (dyadic trials, DY), but split by number of decisions (DY1 for the first decision of both self and partner decisions, and DY2 analogously). The duration of the decision events was set to 2 s, as in the other designs. We time-locked parametric regressors to the DY trials, but separately to DY1 and DY2. These regressors had the same timing parameters as the respective decision constants and they were:

1. primary basis function;
2. secondary basis function;
3. tertiary basis function;
4. inverted primary basis function (the primary basis function transformed to an agent-centric, social frame of reference; see above);
5. inverted secondary code inversion (yes or no);
6. inverted primary basis function in interaction with choice;
7. inverted secondary basis function in interaction with choice;
8. bonus;
9. bonus in interaction with choice; and
10. inverted secondary basis function (transformed to an agent-centric, social frame of reference; see above).

We calculated the combined effect across both DY1 and DY2 for the inverted primary and the inverted secondary basis function (see 4 and 10 in above list) using a [1, 1] contrast. We then averaged both of these combined contrasts to estimate the overall effect of inverted primary and secondary basis function combined. Furthermore, we modelled group decisions as a separate constant regressor, collapsed over both the first and second decisions. The duration of this regressor was set to 2 s and we time-locked the following regressors to it: primary basis function; inverted primary basis function; inverted primary basis function in interaction with choice; bonus; and bonus in interaction with choice.

For all the above regressors in the GLM, if they are related to the basis functions, they refer to the trialwise projections onto the basis functions. On the contrast level, for DY1 trials (dyadic decisions that came first), we combined the first two basis function projections linearly ([1, 1]; primary + secondary basis function; regressors 1 and 2 in the above list). We also contrasted them with the tertiary basis function projection ([1, 1, −1]; primary + secondary − tertiary function; regressors 1, 2 and 3 in the above list). We also calculated the dyadic decision variable in the reference frame of choice (as chosen versus unchosen). We did this by combining regressors 6, 7 and 9 in the list above over both DY1 and DY2 trials.

ROI analyses

ROIs had a radius of three voxels and were centred on peak voxels of significant clusters. To guarantee statistical independence, we analysed only those variables that were independent of ROI selection and only epochs that were temporally dissociated from the time period that served for ROI selection. For ROI time-course analyses, we extracted the preprocessed BOLD time courses from each ROI and averaged over all voxels of each volume. The time courses were normalized (per session, as for subsequent analyses), oversampled by a factor of ten (using cubic spline interpolation, as for subsequent analyses) and, in a trialwise manner, aligned at the time point of interest. We then applied a GLM to each time point and computed one beta weight per time point, which resulted in a time course of beta weights for each regressor. We used a leave-one-out procedure to conduct significance tests on the beta-weight time courses. For this, in a predefined time window, we calculated the absolute peak of the time course (defined as the maximal deviation from zero, either positive or negative). We did this for all participants except a left-out participant. We then determined the beta weight of the left-out participant at the time of the peak of the remaining group. In this manner, we determined a beta weight for every participant, which, importantly, was independent of the participant’s own data. We subsequently performed t-tests against zero on these beta weights.
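The leave-one-out peak test described above can be sketched in a few lines. This is a minimal illustration, not the original analysis code: the beta-weight time courses are toy values, the function names are hypothetical, and averaging the remaining participants' time courses before locating the peak is an assumption about how "the peak of the remaining group" was obtained.

```python
import math
from statistics import mean, stdev


def absolute_peak_index(time_course, window):
    """Index of the maximal deviation from zero within [start, stop)."""
    start, stop = window
    return max(range(start, stop), key=lambda t: abs(time_course[t]))


def leave_one_out_betas(beta_courses, window):
    """For each participant, read out their beta at the peak time of the others."""
    n_time = len(beta_courses[0])
    betas = []
    for left_out, own_course in enumerate(beta_courses):
        others = [c for i, c in enumerate(beta_courses) if i != left_out]
        # Assumption: the group time course is the mean over remaining participants.
        group = [sum(c[t] for c in others) / len(others) for t in range(n_time)]
        peak_t = absolute_peak_index(group, window)
        betas.append(own_course[peak_t])
    return betas


def one_sample_t(values):
    """t statistic for a one-sample t-test against zero."""
    return mean(values) / (stdev(values) / math.sqrt(len(values)))


# Toy beta-weight time courses (one list per participant, one value per time point).
courses = [
    [0.0, 0.2, 0.9, 0.1],
    [0.1, 0.8, 0.3, 0.0],
    [0.0, 0.1, 1.0, 0.2],
]
loo_betas = leave_one_out_betas(courses, window=(0, 4))
t_stat = one_sample_t(loo_betas)
print(loo_betas, t_stat)
```

Because the peak time for each participant is chosen without that participant's data, the extracted beta weight is not biased by the selection of the peak.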

We used two time-course designs, both time-locked to the end of the observation phase, which was on average 2 s before the onset of the first decision. All regressors were normalized to a mean of zero and a standard deviation of 1. ROI GLM1 comprised the following regressors: b1, b2, b3, S performance, P performance, O1 performance, O2 performance, S-position (the sequence position of self, which can be 1, 2, 3 or 4) and P-position (the same, but for the partner).

ROI GLM2 comprised a similar set of regressors: b1, b2, b3, null vector, S-position and P-position.

Note that the null vector from ROI GLM2 is the sum of the performance of all players and hence cannot be part of ROI GLM1. For the analysis of the effects, we used an analysis time window of 4–10 s after the observation phase offset. To distinguish those effects from even earlier effects linked to the observation phase itself, we conservatively used an earlier time window of 0–6 s after the offset of the last RDK (only used for S-position; see the main text). The significance of the basis function projections and S-position was tested in ROI GLM1, and the null vector was tested for significance in ROI GLM2.

Choice simulations

We simulated, analysed and visualized data using Matlab 2021a, Jasp v.0.16 and gramm60.

We simulated choices in our experiment to examine the effects of the primary and secondary basis functions on decision making. For all these analyses, primary and secondary basis function projections are inverted (expressed in an agent-centric, social reference frame; see ‘Sorting of basis functions and transformation to choice’). We consider self decisions, but all results hold when simulating partner decisions accordingly.

We simulated choices as a linear combination of primary basis projection, secondary basis projection and the non-social bonus (see the DV definitions in the beginning of the Methods section; DVself = S − Or + B). As we have shown analytically above, the correct agent-centric decision variable is given by the linear combination of these three variables, because primary and secondary basis projections in combination result in the performance difference of S and Or, ignoring the two other players. Therefore, simulated choices used a logistic link function and a set of weights for the three predictors (wprim, wsec and wbonus) to estimate choice probabilities, which were then binarized to an engage (1) or avoid (0) decision with a likelihood based on the choice probability. We simulated self decisions for all participants with 200 simulations per participant. We subsequently fitted an agent-centric logistic GLM using S performance, P performance, Or performance, Oi performance and the bonus. Finally, we averaged and plotted beta weights from this GLM and examined the qualitative effects of irrelevant players (P and Oi) on self decisions.

We simulated choices under two regimes. For both, we chose weight vectors that resulted in beta weights of similar magnitudes to those observed in our behavioural analyses. The first regime was the ‘balanced’ regime, which used identical weights for primary and secondary basis functions, as one would optimally use to analytically derive the agent-centric decision variable (wprim = 1.5, wsec = 1.5 and wbonus = 1.5). The second regime used a relative ‘overweighting’ of the primary over the secondary basis function, as indicated by our previous analyses (wprim = 1.7, wsec = 1.3 and wbonus = 1.5).
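The consequence of the two regimes can be illustrated with a short sketch. It is not the original Matlab simulation: the function names are hypothetical, the example scores are invented, and the projections are left unnormalized, which is an assumption. The sketch computes the choice probability through a logistic link and the weight each regime implicitly places on the four players.

```python
import math
import random

# Weight vectors over player identities [S, P, Or, Oi] (equations 19 and 20).
W_PRIMARY_INVERTED = [1, 1, -1, -1]
W_SECONDARY_INVERTED = [1, -1, -1, 1]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def choice_probability(scores, bonus, w_prim, w_sec, w_bonus):
    """Probability of engaging, from a logistic link over the three predictors."""
    prim = dot(W_PRIMARY_INVERTED, scores)
    sec = dot(W_SECONDARY_INVERTED, scores)
    z = w_prim * prim + w_sec * sec + w_bonus * bonus
    return 1.0 / (1.0 + math.exp(-z))


def simulate_choice(p_engage, rng):
    """Binarize a choice probability into engage (1) or avoid (0)."""
    return 1 if rng.random() < p_engage else 0


def implied_player_weights(w_prim, w_sec):
    """Weights on [S, P, Or, Oi] implied by combining the two basis functions."""
    return [w_prim * a + w_sec * b
            for a, b in zip(W_PRIMARY_INVERTED, W_SECONDARY_INVERTED)]


balanced = implied_player_weights(1.5, 1.5)
overweighted = implied_player_weights(1.7, 1.3)

rng = random.Random(0)  # fixed seed, for reproducibility of this sketch only
p = choice_probability([0.5, -0.2, 0.1, 0.3], bonus=0.5,
                       w_prim=1.7, w_sec=1.3, w_bonus=1.5)
choice = simulate_choice(p, rng)
print(balanced, overweighted, p, choice)
```

In the balanced regime the implied weights on P and Oi are zero. Overweighting the primary basis function leaves a positive residual weight on P and a negative residual weight of equal size on Oi, which is the kind of qualitative effect of irrelevant players that the fitted GLM is meant to reveal.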

Behavioural data analyses

We used logistic GLMs to capture the weights participants assigned to different pieces of information when making their decisions. We predicted participants’ choices to engage (versus avoid) as a function of a normalized set of regressors (each regressor had a mean of zero and a standard deviation of 1). We applied the GLMs separately to self and partner decisions. The GLM comprised the performance of self (S) and partner (P) as well as the two opponents, separately coded as the relevant opponent (Or; the one whose performance was to be considered in the dyadic comparison) and the irrelevant opponent (Oi; the one whose performance was irrelevant for the dyadic comparison). The GLM also contained the non-social bonus.

Drift diffusion modelling

A time-varying drift diffusion model43 (tDDM) was fitted to the choice outcome (engage or avoid) and reaction time data of our participants. The tDDM expands the standard DDM60,61 by allowing for different onset times of the attributes that influence the evidence accumulation process. Specifically, our tDDM allowed for different onset times between the primary and the secondary basis function (but in agent-centric space). We estimated a total of seven free parameters separately for each participant and experimental condition using the differential evolution algorithm62. The free parameters were the weights of the primary and secondary basis function, the weight of the bonus, the difference in onset times between the primary and the secondary basis function, the decision threshold, the starting-point bias and the non-decision time. The difference in onset times was estimated relative to the onset of the primary basis function. Thus, a positive difference indicates that the secondary basis function entered the evidence accumulation process later than the primary basis function, whereas a negative difference indicates that the secondary basis function entered the evidence accumulation process earlier. The bonus always entered the accumulation process at the same time as the function with the earlier onset. We optimized the tDDM parameters by simulating 3,000 decision outcomes and reaction times per iteration for each unique combination of primary function, secondary function and bonus that the respective participant encountered during the experiment. For any given participant, this could be a subset of all possible combinations, and some combinations could have been encountered repeatedly. The parameters were adapted from iteration to iteration to maximize the likelihood of the empirical data, given the distributions generated from the simulated decisions over a total of 150 iterations.
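To make the role of the onset difference concrete, the following sketch simulates a single tDDM trial with an Euler scheme. It is a simplified illustration, not the fitted model: the function name, step size, noise scaling, symmetric bounds at ±threshold and the coding of the starting-point bias as a fraction of the threshold are all assumptions, and the differential evolution fitting is not reproduced.

```python
import random


def simulate_tddm_trial(prim, sec, bonus, w_prim, w_sec, w_bonus,
                        onset_diff, threshold, start_bias, non_decision_time,
                        noise_sd=1.0, dt=0.001, max_time=10.0, rng=None):
    """Simulate one trial; returns (choice, reaction_time).

    onset_diff > 0: the secondary basis function enters later than the primary.
    onset_diff < 0: the secondary basis function enters earlier.
    start_bias is assumed to lie in (-1, 1), as a fraction of the threshold.
    """
    rng = rng or random.Random()
    evidence = start_bias * threshold
    prim_onset = max(0.0, -onset_diff)
    sec_onset = max(0.0, onset_diff)
    t = 0.0
    while abs(evidence) < threshold and t < max_time:
        # The bonus enters together with the earlier attribute, that is, at t = 0.
        drift = w_bonus * bonus
        if t >= prim_onset:
            drift += w_prim * prim
        if t >= sec_onset:
            drift += w_sec * sec
        evidence += drift * dt + noise_sd * (dt ** 0.5) * rng.gauss(0.0, 1.0)
        t += dt
    choice = 1 if evidence > 0 else 0  # 1 = engage (upper bound), 0 = avoid
    return choice, t + non_decision_time


# Noise-free check: only the secondary basis function carries evidence and it
# enters 0.5 s late, so the bound is reached roughly 0.5 s later than without delay.
choice_late, rt_late = simulate_tddm_trial(
    prim=0.0, sec=1.0, bonus=0.0, w_prim=1.0, w_sec=1.0, w_bonus=1.0,
    onset_diff=0.5, threshold=1.0, start_bias=0.0, non_decision_time=0.3,
    noise_sd=0.0)
print(choice_late, rt_late)
```

With noise switched on, repeated calls yield distributions of choices and reaction times per stimulus combination, which is the kind of simulated distribution against which empirical data can be compared during fitting.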

Study 2: behavioural experiment

Study procedures and data acquisition

We ran a behavioural experiment online using Prolific (www.prolific.com). The experiment took one hour, and participants were paid £9 for taking part. The ethics committee of the University of Oxford approved the study and all participants provided informed consent (MSD reference number: R70000/RE001). The experiment was programmed using jspsych63 and the random-dot-motion toolbox64. As inclusion criteria, we used the age range of 18–40, and fluent English speakers were recruited in the United States and the United Kingdom. As in the fMRI study, participants first performed a behavioural pre-experiment described as a performance assessment. This comprised, in quick succession, random dot-motion stimuli of varying coherence and took in total about 3 min. Participants were informed that the pre-experiment was relevant for the next part of the study, when they were shown samples of their performance in a group decision-making experiment. After the pre-experiment, the instructions for the decision-making experiment followed. This part, again, was modelled on the fMRI study. After the instructions to the decision-making experiment, participants passed a comprehension check that asked three questions about the task rules. The participants went on to do the experiment only if they responded correctly to all three questions. Otherwise, the experiment was aborted and participants were given a small amount for their time investment (about 5–10 min at this point). After the decision-making experiment, participants filled in some questionnaires unrelated to the purpose of this study. The study was conducted over a time period of three weeks. Data for all versions of the experiment were acquired in parallel. For participants who completed the study multiple times, we only considered their initial participation and discarded subsequent data sets.

Of the 805 data sets collected, we excluded participants who: took longer than 2 h to complete the experiment; took longer than 30 s to respond to decision trials in more than 10% of trials; or showed a choice repetition bias in the initial performance assessment or the decision-making part of the study. A choice repetition bias was defined as picking the same choice (left or right button) in more than 85% of trials. This led to the exclusion of 11 participants overall. The final sample comprised 795 participants.

Initial performance assessment

After starting the experiment, participants entered the performance assessment stage, which they were told was important for the second, main part of the study. Participants estimated the motion direction of an RDK stimulus and responded with left/right buttons to indicate the corresponding direction. The performance assessment comprised 120 RDK trials, lasting in total about 3 min. The motion coherences were set at 0.512 for 20% of the trials and 0.032 for 80% of the trials, with 0.512 being a higher coherence and therefore an easier decision. Each RDK stimulus was presented for a maximum duration of 1 s, requiring participants to make their decisions within that time frame. If they took longer, they would see a ‘Missed!’ message on the screen and the trial would be marked as incorrect. The performance assessment consisted of two sub-parts. In the first sub-part, participants received feedback on their decisions (yellow circle for correct or red cross for incorrect). Following that, the task continued without any feedback. The reason for this was to prevent participants from becoming fully aware of their performance levels before the main experiment, when they would be exposed to similar stimuli.

Decision-making schedules and across-subject conditions

We modelled the behavioural group decision-making experiment closely on the fMRI study. The behavioural experiment comprised 108 trials. As in the fMRI experiment, each trial consisted of an observation phase and two subsequent decisions (a total of 216 decisions). The only modifications made served to shorten and simplify the task slightly, to adjust it to the time frame and complexity of large-scale online studies. We used no temporal jitters between the observation phase and the first decision phase, and no temporal jitters between the first and the second decision phase of each trial. Furthermore, to slightly reduce the difficulty of the task, we extended the time that a performance cue was shown during the observation phase from 200 ms to 300 ms, with 100 ms delay between cues. Otherwise, the trial timeline was the same as in the fMRI study. The non-social bonus that was symbolized by yellow and red coins during the decision phase in the fMRI experiment was now symbolized by different colours of the arrow that indicated the players to be compared. A positive bonus was indicated by a yellow arrow, and a negative bonus was indicated by a red arrow. This simple colour-coding scheme was possible because we used only two magnitudes of the bonus in the behavioural study: −0.5 and 0.5.

We used four versions of the experimental schedule, arranged in a 2 × 2 between-subjects design; two schedules comprised self, partner and group decisions (the group condition) and two schedules comprised only self and partner decisions (the no-group condition). A schedule was defined by the assignment of performance scores to players for each trial during the observation phase, and by the assignment of the bonus and decision type to each decision in the study. The comparison of participants’ choice behaviour between group and no-group conditions was the focus of this study. The group condition comprised 72 group decisions, 72 self decisions and 72 partner decisions. In half of the self decisions, participants compared their own scores with those of O1 (36 decisions), and in the other half, with O2 (36 decisions). This meant that O1 and O2 were the relevant opponent for the self equally often. The same was true in partner decisions, with O1 and O2 being the relevant opponent equally often. The schedules used in the no-group condition were generated by replacing group decisions with self and partner decisions in equal number (keeping the bonus for all decisions the same). This resulted in 108 self decisions and 108 partner decisions in total. Again, the relevant opponent for each decision type was O1 and O2 equally often. Note that we analysed only self and partner decisions that were identical across conditions (matched decisions). We discarded self and partner decisions in the no-group schedule that were replacements of the group decisions, because these decisions had no direct correspondence across conditions. This resulted in 72 matched self decisions and 72 matched partner decisions. In this way, we were able to compare identical decisions across the two conditions; these decisions took place in the context of group decisions in the group condition but not in the no-group condition.

Although the schedule defined precisely which performance score was presented for which player on which trial, it did not specify the order in which the players were shown. To determine this, and to avoid any possibility that idiosyncrasies of the player order confounded our results, we generated 1,000 shuffled player-order sequences. Each sequence presented each possible player order equally often. With four players, 4 × 3 × 2 × 1 = 24 different player orders are possible. We used those for 4 × 24 = 96 trials. For the final 12 trials (the experiment comprised 108 trials), the player orders were randomly selected for each of the 1,000 player sequences. Then, for each participant performing the behavioural experiment, one player-order sequence was selected randomly out of the 1,000.
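One way to construct such a player-order sequence is sketched below. It is an illustration under stated assumptions rather than the procedure actually used: the function name is hypothetical, the 96 balanced trials are shuffled before the 12 extra orders are appended, and the extra orders are drawn with replacement.

```python
import itertools
import random
from collections import Counter

PLAYERS = ("S", "P", "O1", "O2")


def make_player_order_sequence(rng, n_trials=108, repeats=4):
    """Return one sequence of player orders, one order per trial."""
    all_orders = list(itertools.permutations(PLAYERS))  # 4 x 3 x 2 x 1 = 24 orders
    balanced = all_orders * repeats                      # 4 x 24 = 96 trials
    rng.shuffle(balanced)
    # Assumption: the remaining trials draw orders at random, with replacement.
    extra = [rng.choice(all_orders) for _ in range(n_trials - len(balanced))]
    return balanced + extra


rng = random.Random(2021)  # fixed seed, for reproducibility of this sketch only
sequences = [make_player_order_sequence(rng) for _ in range(1000)]

# Each participant would then be assigned one of the 1,000 sequences at random.
assigned = rng.choice(sequences)
counts = Counter(assigned[:96])
print(len(assigned), len(counts), set(counts.values()))
```

The first 96 trials of any sequence contain every one of the 24 orders exactly four times, so no sequential position is systematically tied to a particular player.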

Overall, we used four experimental schedules. For both group and no-group conditions, we used two schedules that differed only in the precise assignment of scores to players that were shown during the observation phase (schedule 1 and schedule 2). The behavioural differences between these schedules were not of interest for the study’s research question. The two versions were used only to assess the stability of our experimental effects across numerical differences in the information that was to be remembered. For the same schedule version, group and no-group conditions differed only in the presence or absence of the group decisions. Therefore, we made sure to acquire a similar number of participants for each schedule version for both group and no-group conditions. This ensured an equal number of participants in the conditions that we meant to compare in our study as follows: schedule 1, 192 participants in the no-group condition and 190 in the group condition; and schedule 2, 207 participants in the no-group condition and 206 in the group condition.

Data analysis by ridge regression

We analysed the behavioural data using Matlab 2021a and Jasp v.0.16. We fitted a logistic GLM to the choice data that we had also used for the fMRI sample. All regressors were normalized (mean of 0, standard deviation of 1) and predicted the choice to engage (1) or avoid (0), that is, whether one’s own team member was judged to be the better performer. As in the fMRI data set, we included S performance, P performance, Or performance, Oi performance and the bonus in the regression. Because the experiment, for timing reasons, comprised fewer trials per participant, we used ridge regression65,66 to estimate the regressors’ beta weights (the effect sizes). Ridge regression penalizes large beta weights according to a regularization coefficient λ and thereby prevents overfitting and improves generalization. This is appropriate for cases such as ours in which there are many regressors and comparatively few trials. We applied the regression model to all sessions using Matlab lassoglm (setting α to a very small value) in the following way. First, we determined an appropriate regularization coefficient λ. To do so, we repeatedly fitted the GLM to each individual dataset while varying λ between zero and \(10^{-3}\) to \(10^{-1}\) (log-spaced). During each fit, we used a three-fold cross-validation approach to determine the overall model deviance for each λ for all datasets combined. We repeated this procedure twice. Finally, we selected the λ that resulted in the lowest overall model deviance. This is the λ with the best cross-validated model fit, which was then used to run the ridge GLM of interest. Importantly, the same best-fitting λ was used for all participants, irrespective of condition, to enable fair statistical comparisons of beta weights within and across conditions.
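The logic of selecting one shared λ by pooled cross-validated deviance can be sketched as follows. This is a plain-Python stand-in, not Matlab's lassoglm: the ridge-penalized logistic regression is fitted by simple gradient descent, the penalty scaling does not match lassoglm's parameterization, the folds are interleaved rather than random, and the data are invented.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(x, w, b):
    return sigmoid(b + sum(wj * xj for wj, xj in zip(w, x)))


def fit_ridge_logistic(X, y, lam, n_iter=500, lr=0.1):
    """Gradient descent on mean log-loss plus (lam / 2) * ||w||^2 (intercept unpenalized)."""
    n, k = len(X), len(X[0])
    w, b = [0.0] * k, 0.0
    for _ in range(n_iter):
        grad_w, grad_b = [0.0] * k, 0.0
        for xi, yi in zip(X, y):
            err = predict(xi, w, b) - yi
            grad_b += err / n
            for j in range(k):
                grad_w[j] += err * xi[j] / n
        w = [wj - lr * (g + lam * wj) for wj, g in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def deviance(X, y, w, b):
    """Binomial deviance (minus twice the log-likelihood)."""
    total = 0.0
    for xi, yi in zip(X, y):
        p = min(max(predict(xi, w, b), 1e-12), 1.0 - 1e-12)
        total -= 2.0 * (yi * math.log(p) + (1 - yi) * math.log(1.0 - p))
    return total


def select_lambda(datasets, lambdas, n_folds=3):
    """Pick the lambda with the lowest held-out deviance pooled over all datasets."""
    best_lam, best_dev = None, float("inf")
    for lam in lambdas:
        pooled = 0.0
        for X, y in datasets:
            for fold in range(n_folds):
                held_out = set(range(fold, len(X), n_folds))
                train = [i for i in range(len(X)) if i not in held_out]
                w, b = fit_ridge_logistic([X[i] for i in train],
                                          [y[i] for i in train], lam)
                pooled += deviance([X[i] for i in sorted(held_out)],
                                   [y[i] for i in sorted(held_out)], w, b)
        if pooled < best_dev:
            best_lam, best_dev = lam, pooled
    return best_lam


# Invented, already normalized toy data: two regressors, nine decisions.
X = [[1.0, 0.5], [0.8, -0.3], [-1.2, 0.4], [-0.7, -0.9], [0.3, 1.1],
     [-0.4, -0.2], [1.5, -1.0], [-1.0, 0.8], [0.2, 0.1]]
y = [1, 1, 0, 0, 1, 1, 0, 0, 1]
lambdas = [0.0, 1e-3, 1e-2, 1e-1]

best = select_lambda([(X, y)], lambdas)
w_unpenalized, _ = fit_ridge_logistic(X, y, lam=0.0)
w_ridge, _ = fit_ridge_logistic(X, y, lam=1.0)
print(best, w_unpenalized, w_ridge)
```

Using one λ for every dataset, as in the text, keeps the amount of shrinkage identical across participants, so differences in beta weights between conditions are not driven by differences in regularization.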

Calculation of players’ decision relevance

We calculated the decision relevance of each player over an experimental schedule in the following way. If the player was irrelevant for a decision (for example, the partner in self decisions), their relevance score was zero. In dyadic trials, both relevant players’ relevance scores were set to 0.5 (that is, self and relevant opponent in self decisions). In group decisions, each player’s relevance score was 0.25 (self, partner, O1 and O2 contribute equally). We determined the relevance scores for all players for all decisions in an experimental schedule, added them up and divided by the number of trials.
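The relevance calculation can be written out as a short sketch. The function names and the toy schedule are hypothetical, and dividing by the number of decisions listed in the schedule is an assumption about the denominator; a different count can be passed explicitly.

```python
PLAYERS = ("S", "P", "O1", "O2")


def relevance_scores(decision_type, relevant_opponent=None):
    """Relevance of each player for one decision ('self', 'partner' or 'group')."""
    if decision_type == "group":
        return {player: 0.25 for player in PLAYERS}
    scores = {player: 0.0 for player in PLAYERS}
    own_player = "S" if decision_type == "self" else "P"
    scores[own_player] = 0.5
    scores[relevant_opponent] = 0.5
    return scores


def schedule_relevance(decisions, denominator=None):
    """Sum relevance scores over a schedule and divide by the denominator."""
    totals = {player: 0.0 for player in PLAYERS}
    for decision_type, relevant_opponent in decisions:
        for player, score in relevance_scores(decision_type, relevant_opponent).items():
            totals[player] += score
    n = len(decisions) if denominator is None else denominator
    return {player: total / n for player, total in totals.items()}


# Toy schedule: two self decisions against O1, one group decision and
# one partner decision against O2.
toy_schedule = [("self", "O1"), ("self", "O1"), ("group", None), ("partner", "O2")]
relevance = schedule_relevance(toy_schedule)
print(relevance)
```

In this toy schedule, self and O1 end up more decision relevant than the partner and O2 because they take part in more of the dyadic comparisons.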

Study 3: control fMRI experiment

Participants

There were 36 participants. One participant did not complete the scanning session and two participants could not perform the experiment owing to problems with the button box. One further participant moved extensively in the MRI scanner, and during MELODIC preprocessing57 we discovered that very few fMRI components were noise free. These four participants were removed from the sample. The final sample contained 32 participants (age range 19–39 years, 22 female). Participants received £70 for taking part in the experiment and received extra earnings that were allocated according to their task performance, mirroring the social fMRI experiment. The ethics committee of the University of Oxford approved the study and all participants provided informed consent (MSD reference number: R60547/RE001).

Experimental procedures

As in the main social fMRI experiment, participants performed a behavioural pre-experiment before entering the MRI scanner. However, the framing of the pre-experiment was very different from that of the social fMRI study. Instead of framing the task as a social decision-making experiment, we framed it as a motor task. Participants were told that the experiment was about learning and remembering motor sequences, in particular, sequences of finger taps. To this end, participants performed ‘tap training’ as pre-experiment preparation. They were shown sequences of finger taps and were asked to repeat these sequences. Four buttons were used in the pre-experiment, assigned to the index and middle fingers of the left and right hands. The screen showed the outlines of two hands with the four fingers highlighted (Fig. 5 and Extended Data Fig. 11). During the tap training, one finger at a time was highlighted and participants pressed the corresponding buttons. Fingers were highlighted repeatedly, and participants were asked to press the corresponding buttons until the sequence ended. Incorrect button presses and long response times were counted as error trials and led to the sequence being repeated until it was completed without error. The pre-experiment comprised 15 trials. Participants performed the pre-experiment for approximately 15 min.

The pre-experiment helped create the cover story that the study was about motor sequences. However, the pre-experiment was designed to have clear analogies to the observation phase of the social fMRI experiment, which presented sequences of successful (indicated by yellow coins) and erroneous (indicated by red Xs) performance scores. The motor pre-experiment similarly comprised yellow coins to indicate that a button should be pressed and a red X to indicate that a button press should be omitted. The resulting sequence of finger taps in the control fMRI experiment resembled the sequence of four players’ performance scores in the social task. In our social experiment, performance scores for four players had been shown in sequence, but now, the required numbers of presses for four fingers were shown in sequence. There were always six yellow/red cues shown per finger (indicating either an executed button press or a button-press omission), just as six performance cues had been shown per player in our previous social experiment. Finally, in the same way that self and partner were one team, and O1 and O2 were another team in the social experiment, the four fingers naturally grouped together as the two fingers of the left hand and the two fingers of the right hand, so the two hands corresponded to the two teams in the main control fMRI experiment. Just as participants had compared performance scores across players in the social experiment, participants in the control fMRI experiment compared the number of finger taps between the fingers of the two hands. Participants never made decisions between fingers from the same hand, just as they had not made comparisons of players from the same team in the social task.

The behavioural pre-experiment that took place before scanning and the control fMRI experiment were programmed in MATLAB using Psychtoolbox-3 (ref. 55; http://psychtoolbox.org) and in PsychoPy (https://www.psychopy.org/).

The main experiment was designed to closely match the rationale and the statistics of the social fMRI experiment. The difference between the experiments comprised the framing of the task as a motor experiment versus a social experiment.

In the same way that there was continuity between the pre-experiment and the main experiment in the social study, there was a clear relationship between the pre-experiment and the main experiment here too. In both, participants were shown a display outlining their hands, highlighting the left middle (LM) and the left index finger (LI), as well as the right index (RI) and the right middle finger (RM). In the main control fMRI experiment, the hands were placed on the left and right sides of a button box. As in the pre-experiment, participants observed sequences of yellow and red cues indicating executed finger taps and finger-tap omissions. Their task was to remember the number of taps per finger (the number of yellow coin symbols per finger, ranging from 0 to 6) to make good decisions in the second part of the trial. However, importantly, participants did not concurrently press the highlighted buttons. They were purely observing them in the same way as they were observing the performance scores in the social fMRI study. After the observation phase, participants made decisions about the observed information (the number of presses per finger). These decisions were framed as decisions between the two hands (mimicking the two teams from the social fMRI experiment). We arbitrarily mapped the identities of the players from the social task onto the fingers of the control fMRI experiment. We identified LM as motor–self, LI as motor–partner, RI as motor–opponent 1 and RM as motor–opponent 2. This mapping was consequential, because the control fMRI experiment used exactly the same experimental schedules as the social experiment. Therefore, the 33% of decisions that were previously self decisions were now LM/motor–self decisions comparing the number of button presses between LM and one of the ‘opponent fingers’ RI or RM (both in equal number); the 33% that were previously partner decisions were now decisions comparing LI/motor–partner with either RI or RM (both in equal number).
The remaining 33% of decisions, previously group decisions, now asked participants to compare the overall number of button presses for the left hand with the overall number of button presses for the right hand. In all decision types, decisions in favour of the left or right hand were to be made by a press using the thumb of the congruent hand. As in the social fMRI experiment, every decision comprised a bonus that had a value of −1.5, −0.5, +0.5 or +1.5. The timing of all trial events was precisely the same as for the social fMRI experiment. We also used the same pay-off scheme as for the social version, meaning that decisions in favour of the left hand led to a win or loss of points, and decisions in favour of the right hand led to no change in the overall points accumulated during the experiment. Although this pay-off scheme may have seemed arbitrary in the context of the control fMRI experiment, it ensured that the behavioural and neural results could be compared across the two experimental versions.
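The player-to-finger mapping and the three decision types described above can be summarised in a minimal Python sketch. The function and variable names are hypothetical and chosen for illustration only; the sketch shows which quantities are compared in each decision type and does not model the bonus or the pay-off.

```python
# Mapping from social-task players to fingers, as stated in the text.
# All identifiers below are illustrative names, not the authors' code.
PLAYER_TO_FINGER = {
    "self": "LM",        # motor-self
    "partner": "LI",     # motor-partner
    "opponent1": "RI",   # motor-opponent 1
    "opponent2": "RM",   # motor-opponent 2
}


def to_motor_trial(social_scores):
    """Relabel one trial's per-player scores (0-6) as per-finger tap counts."""
    return {PLAYER_TO_FINGER[player]: score
            for player, score in social_scores.items()}


def decision_operands(decision_type, taps, opponent_finger=None):
    """Return the (left-hand value, right-hand value) compared in a decision."""
    if decision_type == "motor_self":
        return taps["LM"], taps[opponent_finger]
    if decision_type == "motor_partner":
        return taps["LI"], taps[opponent_finger]
    if decision_type == "group":
        # Both-hands decision: total taps of the left vs the right hand.
        return taps["LM"] + taps["LI"], taps["RI"] + taps["RM"]
    raise ValueError(f"unknown decision type: {decision_type}")


# Example with made-up scores for a single trial.
taps = to_motor_trial({"self": 4, "partner": 2, "opponent1": 5, "opponent2": 1})
```

Because the relabelling is a pure renaming, any schedule built for the social task can be passed through `to_motor_trial` unchanged, which is the property the design relies on.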

Experimental schedules

As in the social fMRI study, this experiment comprised 144 trials; 288 decisions were made, which were distributed evenly between motor–self, motor–partner and both-hands/group decisions. Crucially, we used the same experimental schedules that we had created for the social fMRI experiment. As described above, the four players were mapped onto the fingers. This equivalence meant that precisely the same scores seen for the players in the social experiment were now seen for the corresponding fingers of the motor study. The scores were shown in precisely the same temporal position during the observation phase of the trial, in a manner corresponding exactly to the social version of the experiment on every single trial. And the same logic applied to the decision types (including the size of the bonus) that were precisely matched with the same choice being correct and the same pay-off being at stake for corresponding trials of the two experiments. Moreover, using identical schedules also streamlined the timings across both experiments. The control fMRI experiment therefore used identical timings to the social fMRI experiment for the observation phase, the decision phases and all temporal jitters. In short, from a numerical perspective, the experimental design, as well as the requirements for solving decisions in both experiments, were identical. The experiments differed only in their framing as a social experiment versus a motor sequence task.

Behavioural and fMRI analyses

All behavioural and neural analyses of the motor study closely resembled the analyses run for the original social fMRI study. Behaviourally, we analysed the percentage of correct motor–self and motor–partner decisions, as well as the median reaction times in motor–self and motor–partner decisions. We also calculated the same logistic GLM models for motor–self and motor–partner decisions predicting engage/avoid decisions as a function of the performance scores for motor–self, motor–partner, motor–O1, motor–O2 and the bonus.
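As an illustration of the kind of logistic GLM described here, the following sketch fits engage/avoid choices to the four performance scores and the bonus by plain gradient ascent. It is a sketch under stated assumptions, not the analysis code used in the study: the data are simulated, the generating weights are arbitrary, the scores are rescaled to the range 0 to 1 for numerical convenience, and all names are hypothetical.

```python
import math
import random

PREDICTORS = ["intercept", "motor_self", "motor_partner",
              "motor_O1", "motor_O2", "bonus"]


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def log_likelihood(w, X, y):
    total = 0.0
    for x, t in zip(X, y):
        p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)))
        p = min(max(p, 1e-12), 1 - 1e-12)
        total += t * math.log(p) + (1 - t) * math.log(1 - p)
    return total


def fit_logistic(X, y, lr=0.5, n_iter=500):
    """Maximise the mean log-likelihood by gradient ascent."""
    w = [0.0] * len(X[0])
    n = len(X)
    for _ in range(n_iter):
        grad = [0.0] * len(w)
        for x, t in zip(X, y):
            err = t - sigmoid(sum(wi * xi for wi, xi in zip(w, x)))
            for j, xj in enumerate(x):
                grad[j] += err * xj
        w = [wi + lr * g / n for wi, g in zip(w, grad)]
    return w


def simulate(n=400, seed=0):
    """Simulated decisions; the generating weights are arbitrary assumptions."""
    rng = random.Random(seed)
    true_w = [0.0, 4.0, 0.0, -4.0, 0.0, 1.0]
    X, y = [], []
    for _ in range(n):
        scores = [rng.randint(0, 6) / 6 for _ in range(4)]  # scores 0-6, rescaled
        bonus = rng.choice([-1.5, -0.5, 0.5, 1.5])
        x = [1.0] + scores + [bonus]
        p = sigmoid(sum(wi * xi for wi, xi in zip(true_w, x)))
        X.append(x)
        y.append(1 if rng.random() < p else 0)  # 1 = engage, 0 = avoid
    return X, y


X, y = simulate()
weights = fit_logistic(X, y)
```

In practice a dedicated statistics package would be used for such a fit; the hand-written optimiser only keeps the example free of external dependencies.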

We acquired neuroimaging data using the same acquisition protocol and implemented an identical preprocessing pipeline and parameters for the whole-brain analyses (see above for details). The fMRI whole-brain designs included the same set of regressors with identical timings to the social fMRI study (fMRI GLM1 and fMRI GLM2), ensuring that main and control task GLMs comprised the same degrees of freedom. We calculated one extra contrast for fMRI GLM2, which was the mean of all basis functions (b1, b2 and b3 weighted by a [1, 1, 1] vector). We set up a new GLM (fMRI GLM4), which was identical to fMRI GLM2, except for one aspect: we replaced the parametric effects of motor–S, motor–P, motor–O1 and motor–O2 with the mean of these four parameters, which corresponded to the null vector. This mirrors the way in which we tested the null vector in the social fMRI study, which was the purpose of this new GLM.
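The two operations mentioned in this paragraph, a [1, 1, 1] contrast over the three basis-function estimates and a trial-wise mean across the four parametric regressors, can be written out as a small Python sketch. The numbers are made up and the function names are hypothetical; note that a [1, 1, 1] weighting yields the sum of the three estimates, which equals their mean up to a constant factor of three.

```python
def contrast(betas, weights):
    """Weighted combination of parameter estimates."""
    return sum(b * w for b, w in zip(betas, weights))


def mean_regressor(regressors):
    """Trial-wise mean across several equally long parametric regressors."""
    n = len(regressors)
    return [sum(values) / n for values in zip(*regressors)]


# Made-up parameter estimates for b1, b2 and b3 at one voxel.
combined_effect = contrast([0.2, 0.4, 0.6], [1, 1, 1])

# Made-up scores for two trials: rows are motor-S, motor-P, motor-O1, motor-O2.
null_regressor = mean_regressor([[1, 2], [3, 4], [5, 6], [7, 8]])
```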

Masks for ROI analyses had a radius of three voxels (the same radius as in the original social fMRI experiment) and were centred on peak voxels of significant clusters. To guarantee statistical independence, we analysed only variables that were independent of ROI selection and only epochs that were temporally dissociated from the time period that served for ROI selection. Within ROIs, we extracted contrast of parameter estimate (COPE) values from the whole-brain design to assess significance57,67. In this way, we isolated the effects that correspond to S performance in self decisions and P performance in partner decisions (in both cases analysing only the second decision in a trial), as displayed in Fig. 1 for the social study (fMRI GLM1). This is how we assessed the neural effects of motor–S and motor–P. For fMRI GLM2, we extracted the COPEs for the three basis function projections as well as the contrast relating to their mean (see above). For fMRI GLM4, we extracted the null vector. Furthermore, we used pre-threshold masking to assess the whole-brain significance of the mean effect of b1, b2 and b3 combined. The mask was centred on the pgACC peak from the social fMRI study (MNI = (−8, 42, 14)) and had a radius of 20 mm. We used a threshold of z > 3.1, P = 0.05 family-wise-error corrected25,68.
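The geometry of the two spherical masks can be sketched as follows. This is an illustration only: whether the boundary is inclusive, and how voxel indices map to millimetres, are assumptions not specified in the text, and the function names are hypothetical.

```python
from itertools import product


def sphere_voxels(center, radius):
    """Voxel indices within `radius` voxels of `center` (boundary inclusive)."""
    cx, cy, cz = center
    r = int(radius)
    return [
        (cx + dx, cy + dy, cz + dz)
        for dx, dy, dz in product(range(-r, r + 1), repeat=3)
        if dx * dx + dy * dy + dz * dz <= radius * radius
    ]


def in_sphere_mm(coord, center, radius_mm):
    """True if an MNI coordinate (mm) lies within `radius_mm` of `center`."""
    dist_sq = sum((a - b) ** 2 for a, b in zip(coord, center))
    return dist_sq <= radius_mm ** 2


# ROI of radius three voxels around an arbitrary example peak voxel.
roi = sphere_voxels((40, 60, 30), 3)

# Pre-threshold mask: 20 mm sphere around the pgACC peak reported in the text.
PGACC_PEAK = (-8, 42, 14)
inside = in_sphere_mm((-8, 42, 34), PGACC_PEAK, 20)
outside = in_sphere_mm((-8, 42, 35), PGACC_PEAK, 20)
```

With an inclusive boundary, a sphere of radius three voxels contains 123 voxels.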

Study 4: supplementary behavioural study

Study procedures and data acquisition

We ran a second behavioural experiment online (Extended Data Fig. 5). The procedures for the study and the data acquisition were the same as for the first online experiment, study 2, and the initial performance assessment was identical. The same exclusion conditions applied, with the only difference to the other behavioural study relating to the choice repetition criterion, which we applied separately to the training and the test phase of the experiment (instead of applying it to the entire decision-making session). We excluded 29 participants on the basis of our exclusion criteria, resulting in a final sample of 1,022 participants.

Decision-making schedules and across-subject conditions

As before, we modelled the group decision-making experiment closely on the fMRI study. We implemented the same timing and complexity adjustments as for the other behavioural study. This experiment comprised 96 trials. As in the fMRI experiment, each trial consisted of an observation phase and two subsequent decisions (so there were 192 decisions). For each trial, one subsequent decision was a group decision, and one subsequent decision was a dyadic decision (either a self or a partner decision). This also meant that overall, in the entire decision-making experiment, 25% of the decisions were self decisions, 25% were partner decisions and 50% were group decisions. Each decision type was presented equally often as the first and as the second decision of a trial.
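The decision counts implied by this design can be checked with a short Python sketch. It constructs one possible schedule that satisfies the stated constraints; the ordering of trials is arbitrary here and is not meant to represent the order used in the experiment, and all names are hypothetical.

```python
from collections import Counter

N_TRIALS = 96


def build_decision_schedule():
    """Return one (first_decision, second_decision) pair per trial."""
    schedule = []
    for dyadic in ("self", "partner"):
        n = N_TRIALS // 2  # half of the trials per dyadic decision type
        for i in range(n):
            if i < n // 2:
                schedule.append((dyadic, "group"))  # dyadic decision first
            else:
                schedule.append(("group", dyadic))  # group decision first
    return schedule


schedule = build_decision_schedule()
first = Counter(f for f, _ in schedule)
second = Counter(s for _, s in schedule)
total = first + second
```

Here `total` holds 48 self, 48 partner and 96 group decisions (25%, 25% and 50% of 192), and each type appears equally often in first and second position.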

We used a between-subject design with four different experimental schedules. These schedules differed only with respect to the sequential order in which players were presented on a given trial. The performance schedule (which performance score was assigned to which player in a given trial) and the decision schedule (which decision was a self/partner/group decision on a given trial) were identical in all four versions. Therefore, we could be certain that any systematic difference in decision-making behaviour across schedules had to be caused by the sequential player position information.

The four experimental schedules were organized in a 2 (training) × 2 (test) design. All schedules comprised 64 initial training trials and 32 subsequent test trials. The difference between the training and test trials was exclusively related to the sequential order of the players in the observation phase in each trial. All decisions and performance scores were identical across schedules for each trial. However, there were two different training schedules: pre3 and pre4. For pre3, in all training trials, when a dyadic decision was cued, the dyadic decision took place between the second and the third player of the sequence. For pre4, all dyadic decisions took place between the second and the fourth player. As a result, for all four schedules, during the training phase, a dyadic decision would always concern the second player of the four-player sequence. However, in pre3, the second player would always be compared with the third player, and in pre4, the second player would always be compared with the fourth player of the sequence. In other words, we changed the order in which players were presented in a systematic way between schedules, but we kept the assignment of performance scores to players constant. This also meant that, for a given schedule, the dyadic decision that would follow was entirely determined by the sequence in which the players were shown in the observation phase. After the 64 trials of the training phase, the test phase followed, comprising 32 trials. There were also two schedules for the test phase: post3 and post4, and they followed the same logic as before. In all test schedules, the first element of the sequence became relevant for dyadic decisions. Critically, the second relevant player was either the player in the third position (post3) or the player in the fourth position (post4). These schedule designs meant that the same player positions always remained relevant for dyadic decisions for long phases of the experiment. 
However, this was not easy for participants to notice because it was obscured by the fact that only half of the decisions were dyadic decisions, and the other half were group decisions. In group decisions, all four players were always relevant. As noted above, participants reported no awareness of this manipulation. We collected a similar number of participants for each of the four conditions: pre3/post3 had 270 participants; pre3/post4 had 252 participants; pre4/post3 had 250 participants; and pre4/post4 had 250 participants.
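The 2 × 2 design can be summarised in a compact Python sketch that returns, for a given condition and trial, the two sequence positions relevant for dyadic decisions. Positions are counted from 1 as in the text; the names and the zero-based trial index are assumptions made for illustration.

```python
# Sequence positions (1-4) compared in dyadic decisions, per schedule.
RELEVANT_POSITIONS = {
    "pre3": (2, 3),
    "pre4": (2, 4),
    "post3": (1, 3),
    "post4": (1, 4),
}
N_TRAINING, N_TEST = 64, 32

# Participants per condition, as reported in the text.
PARTICIPANTS = {
    ("pre3", "post3"): 270,
    ("pre3", "post4"): 252,
    ("pre4", "post3"): 250,
    ("pre4", "post4"): 250,
}


def dyadic_positions(condition, trial_index):
    """Relevant positions for a trial (0-based index over all 96 trials)."""
    training, test = condition
    phase = training if trial_index < N_TRAINING else test
    return RELEVANT_POSITIONS[phase]


# Example: first training trial and first test trial of pre3/post4.
example_training = dyadic_positions(("pre3", "post4"), 0)
example_test = dyadic_positions(("pre3", "post4"), N_TRAINING)
```

The four condition sizes add up to the final sample of 1,022 participants.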

Estimation of sequential decision weights