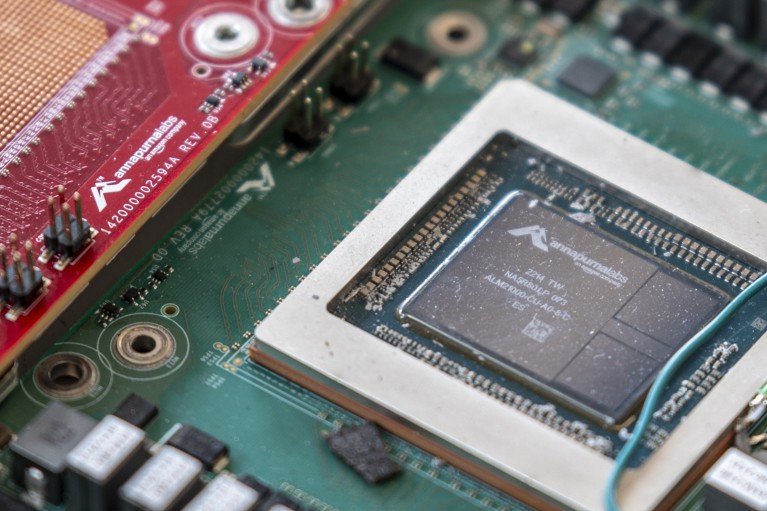

An artificial-intelligence chip. Such chips have powered the rise of generative AI systems. Credit: Sergio Flores/Bloomberg/Getty

Artificial intelligence (AI) systems with human-level reasoning are unlikely to be achieved through the approach and technology that have dominated the current boom in AI, according to a survey of hundreds of people working in the field.

More than three-quarters of respondents said that enlarging current AI systems ― an approach that has been hugely successful in enhancing their performance over the past few years ― is unlikely to lead to what is known as artificial general intelligence (AGI). An even higher proportion said that neural networks, the fundamental technology behind generative AI, alone probably cannot match or surpass human intelligence. And the very pursuit of these capabilities provokes scepticism: fewer than one-quarter of respondents said that achieving AGI should be the core mission of the AI research community.

“I don’t know if reaching human-level intelligence is the right goal,” says Francesca Rossi, an AI researcher at IBM in Yorktown Heights, New York, who spearheaded the survey in her role as president of the Association for the Advancement of Artificial Intelligence (AAAI) in Washington DC. “AI should support human growth, learning and improvement, not replace us.”

The survey results were unveiled in Philadelphia, Pennsylvania, on Saturday at the annual meeting of the AAAI. They include responses from more than 475 AAAI members, 67% of them academics.

Generally smart

Generative-AI systems, which underlie tools including chatbots and image generators, are based on neural networks, computer systems that learn from vast amounts of data in a way inspired by the human brain. Over the past decade, developers seeking to improve these systems’ performance have made huge gains by scaling up their size ― for example, by increasing the amount of training data and a model’s number of ‘parameters’, the settings that an AI learns to adjust during training.

However, 84% of respondents said that neural networks alone are insufficient to achieve AGI. The survey, which is part of an AAAI report on the future of AI research, defines AGI as a system that is “capable of matching or exceeding human performance across the full range of cognitive tasks”, but researchers haven’t yet settled on a benchmark for determining when AGI has been achieved.


The AAAI report emphasizes that there are many kinds of AI beyond neural networks that deserve to be researched, and calls for more active support of these techniques. These approaches include symbolic AI, sometimes called ‘good old-fashioned AI’, which codes logical rules into an AI system rather than emphasizing statistical analysis of reams of training data. More than 60% of respondents felt that human-level reasoning will be reached only by incorporating a large dose of symbolic AI into neural-network-based systems. The neural approach is here to stay, Rossi says, but “to evolve in the right way, it needs to be combined with other techniques”.

The survey reveals concerns about unfettered AGI development. More than 75% of respondents said that pursuing AI systems with “an acceptable risk–benefit profile” should be a higher priority than achieving AGI; only 23% said that AGI should be the top priority. And about 30% of respondents agreed that research and development targeting AGI should be halted until we have a way to fully control these systems, ensuring that they operate safely and for the benefit of humanity.