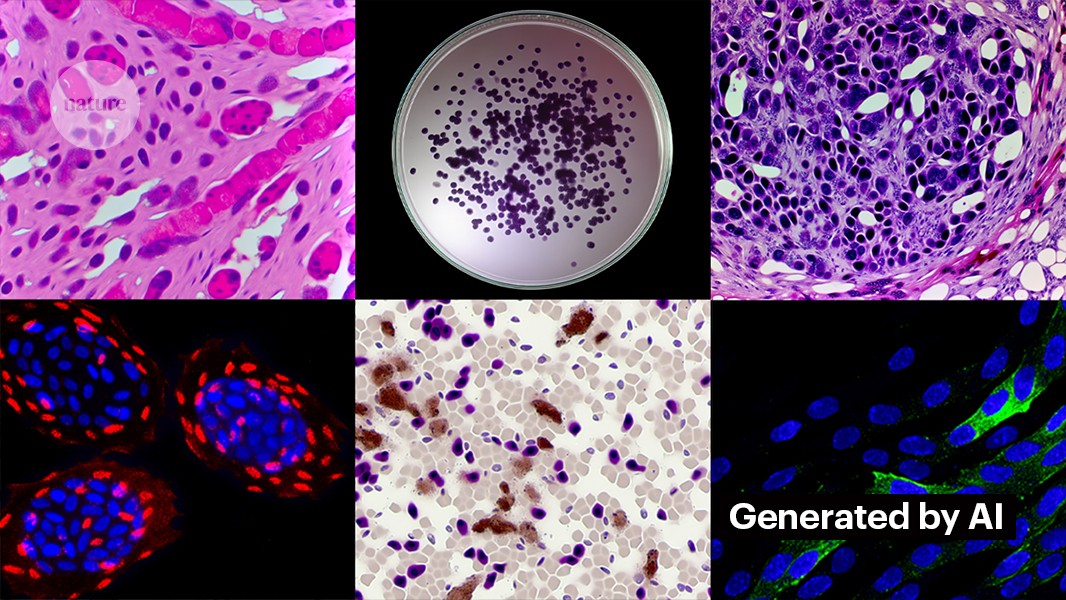

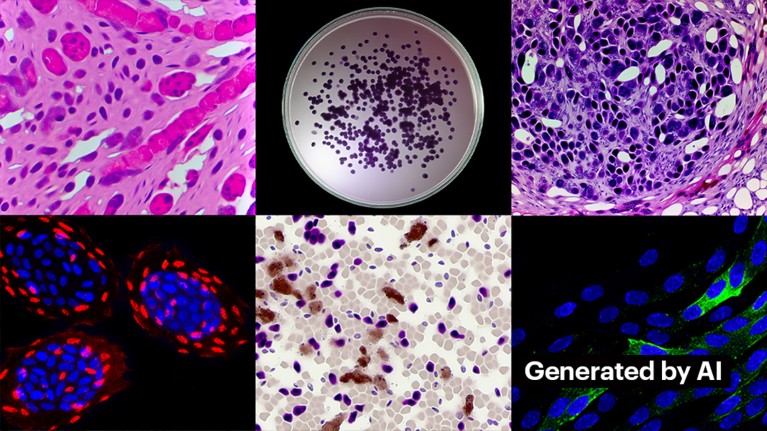

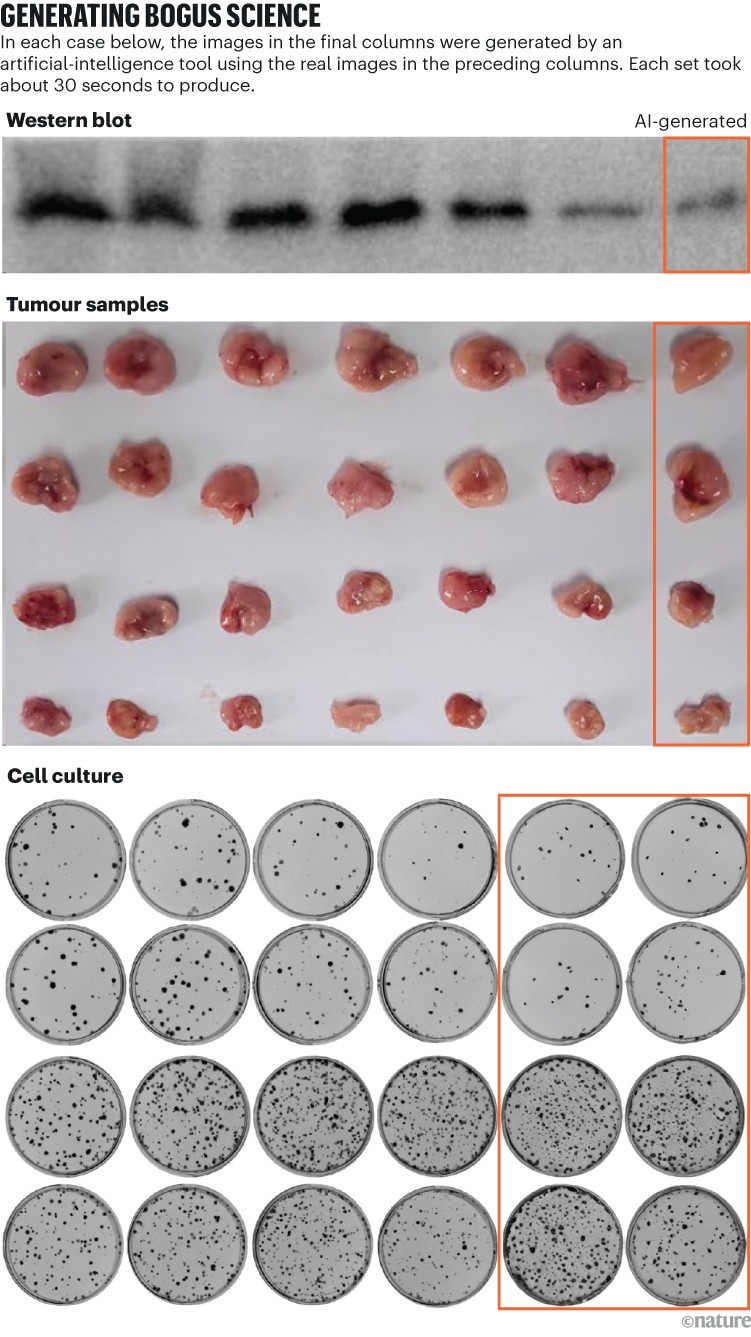

All of these images were generated by AI. Credit: Proofig AI, 2024

From scientists manipulating figures to the mass production of fake papers by paper mills, problematic manuscripts have long plagued the scholarly literature. Science sleuths work tirelessly to uncover this misconduct and correct the scientific record. But their job is becoming harder, owing to the introduction of a powerful new tool for fraudsters: generative artificial intelligence (AI).

“Generative AI is evolving very fast,” says Jana Christopher, an image-integrity analyst at FEBS Press in Heidelberg, Germany. “The people that work in my field — image integrity and publication ethics — are getting increasingly worried about the possibilities that it offers.”

The ease with which generative-AI tools can create text, images and data raises fears of an increasingly untrustworthy scientific literature awash with fake figures, manuscripts and conclusions that are difficult for humans to spot. Already, an arms race is emerging as integrity specialists, publishers and technology companies race to develop AI tools that can assist in rapidly detecting deceptive, AI-generated elements of papers.

“It’s a scary development,” Christopher says. “But there are also clever people and good structural changes that are being suggested.”

Research-integrity specialists say that, although AI-generated text is already permitted by many journals under some circumstances, the use of such tools for creating images or other data is less likely to be viewed as acceptable. “In the near future, we may be okay with AI-generated text,” says Elisabeth Bik, an image-forensics specialist and consultant in San Francisco, California. “But I draw the line at generating data.”

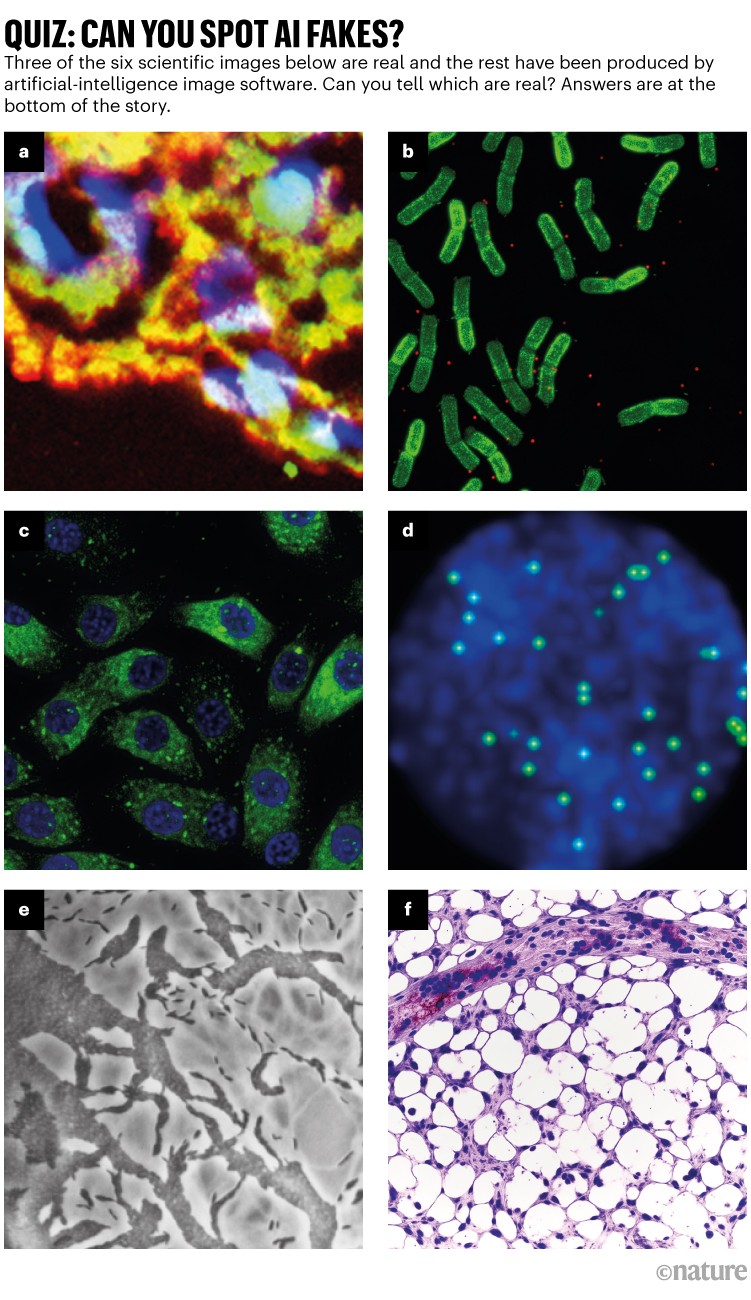

Bik, Christopher and others suspect that data, including images, fabricated using generative AI are already widespread in the literature, and that paper mills are taking advantage of AI tools to produce manuscripts en masse (see ‘Quiz: can you spot AI fakes?’).

Under the radar

Pinpointing AI-produced images poses a huge challenge: they are often almost impossible to distinguish from real ones, at least with the naked eye. “We get the feeling that we encounter AI-generated images every day,” Christopher says. “But as long as you can’t prove it, there’s really very little you can do.”

There are some clear instances of generative-AI use in scientific images, such as the now-infamous figure of a rat with absurdly large genitalia and nonsensical labels, created using the image tool Midjourney. The graphic, published by a journal in February, sparked a social-media storm and was retracted days later.

Credit: Proofig (generated images)

Most cases aren’t so obvious. Figures fabricated with Adobe Photoshop or similar tools before the rise of generative-AI — especially in molecular and cell biology — often contain telltale signs that sleuths can spot, such as identical backgrounds or an unusual absence of smears or stains. AI-made figures often lack such signs. “I see tonnes of papers where I think, these Western blots do not look real — but there’s no smoking gun,” Bik says. “You can only say they just look weird, and that of course isn’t enough evidence to write to an editor.”

But signs suggest that AI-made figures are appearing in published manuscripts. Text written using tools such as ChatGPT is on the rise in papers, given away by standard chatbot phrases that authors forget to remove and telltale words that AI models tend to use. “So we have to assume that it’s also happening for data and for images,” says Bik.

Another clue that fraudsters are using sophisticated image tools is that most of the issues that sleuths are currently detecting are in papers that are several years old. “In the past couple of years, we’ve seen fewer and fewer image problems,” Bik says. “I think most folks who have gotten caught doing image manipulation have moved on to creating cleaner images.”

How to create images

Creating clean images using generative AI is not difficult. Kevin Patrick, a scientific-image sleuth known as Cheshire on social media, has demonstrated just how easy it can be and posted his results on X. Using Photoshop’s AI tool Generative Fill, Patrick created realistic images — that could feasibly appear in scientific papers — of tumours, cell cultures, Western blots and more. Most of the images took less than a minute to produce (see ‘Generating bogus science’).

“If I can do this, certainly the people who are getting paid to generate fake data are going to be doing this,” Patrick says. “There’s probably a whole bunch of other data that could be generated with tools like this.”

Some publishers say that they have found evidence of AI-generated content in published studies. These include PLoS, which has been alerted to suspicious content and found evidence of AI-generated text and data in papers and submissions through internal investigations, says Renée Hoch, managing editor of PLoS’s publication-ethics team in San Francisco, California. (Hoch notes that AI use is not forbidden in PLoS journals, and that its AI policy focuses on author accountability and transparent disclosures.)

Credit: Kevin Patrick

Other tools might also provide opportunities for people wishing to create fake content. Last month, researchers published<sup>1</sup> a generative-AI model for creating high-resolution microscopy images, and some integrity specialists have raised concerns about the work. “This technology can easily be used by people with bad intentions to quickly generate hundreds or thousands of fake images,” Bik says.

Yoav Shechtman at the Technion–Israel Institute of Technology in Haifa, the tool’s creator, says that the tool is helpful for producing training data for models because high-resolution microscopy images are difficult to obtain. But, he adds, it isn’t useful for generating fakes because users have little control over the output. Existing imaging software such as Photoshop is more useful for manipulating figures, he suggests.

Weeding out fakes

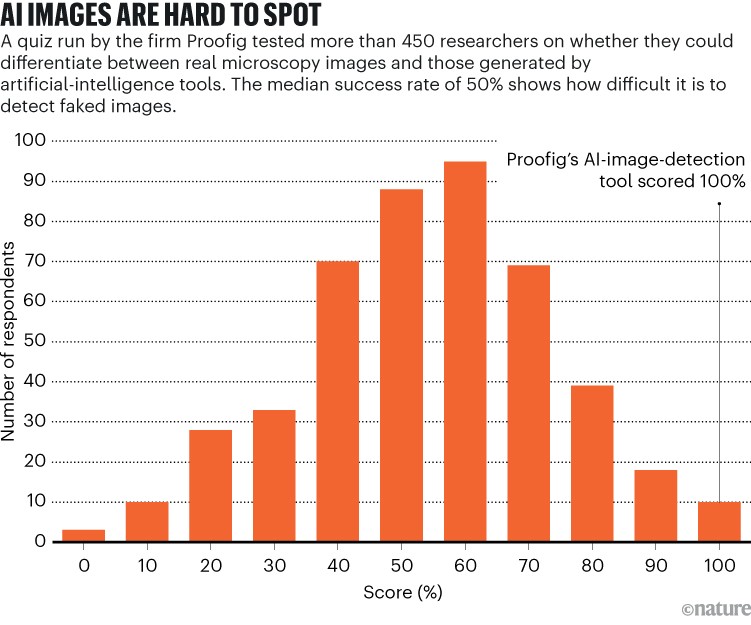

Human eyes might not be able to catch generative AI-made images, but AI might (see ‘AI images are hard to spot’).

The makers behind tools such as Imagetwin and Proofig, which use AI to detect integrity issues in scientific figures, are expanding their software to weed out images created by generative AI. Because such images are so difficult to detect, both companies are creating their own databases of generative-AI images to train their algorithms.

Proofig has already released a feature in its tool for detecting AI-generated microscopy images. Company co-founder Dror Kolodkin-Gal in Rehovot, Israel, says that, when tested on thousands of AI-generated and real images from papers, the algorithm identified AI images 98% of the time and had a 0.02% false-positive rate. Kolodkin-Gal adds that the team is now working on trying to understand what, exactly, their algorithm detects.
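
To get a feel for what those reported rates would mean at scale, the short sketch below works through the arithmetic for a screening run. The 98% detection rate and 0.02% false-positive rate are the figures Kolodkin-Gal reports; the batch size, the share of images that are AI-generated and the function name are hypothetical, chosen only to illustrate the calculation.

```python
def screening_outcomes(n_images, prevalence,
                       detection_rate=0.98, false_positive_rate=0.0002):
    """Expected counts when screening `n_images` images.

    `prevalence` is the assumed fraction of images that are AI-generated
    (a hypothetical input; the article gives no such figure).
    The default rates are the ones reported for Proofig's internal test.
    """
    n_fake = n_images * prevalence
    n_real = n_images - n_fake

    true_positives = n_fake * detection_rate        # fakes correctly flagged
    missed_fakes = n_fake - true_positives          # fakes that slip through
    false_positives = n_real * false_positive_rate  # real images wrongly flagged

    flagged = true_positives + false_positives
    # Share of flagged images that really are AI-generated
    precision = true_positives / flagged if flagged else float("nan")

    return {
        "true_positives": true_positives,
        "missed_fakes": missed_fakes,
        "false_positives": false_positives,
        "precision": precision,
    }


if __name__ == "__main__":
    # Hypothetical batch: 100,000 images, 1% of them AI-generated
    for name, value in screening_outcomes(100_000, 0.01).items():
        print(f"{name}: {value:.4g}")
```

Under these assumed inputs, about 980 of the 1,000 fakes would be flagged, about 20 would be missed and roughly 20 real images would be flagged in error. If AI-generated images were rarer than assumed here, false alarms would make up a larger share of the flags, which is one reason expert review of flagged images still matters.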

“I have great hopes for these tools,” Christopher says. But she notes that their outputs will always need to be assessed by an expert who can verify the issues they flag. Christopher hasn’t yet seen evidence that AI image-detection software is reliable (Proofig’s internal evaluation has not been published). These tools are “limited, but certainly very useful, as it means we can scale up our effort of screening submissions,” she adds.

Source: Proofig quiz

Multiple publishers and research institutions already use Proofig and Imagetwin. The Science journals, for example, use Proofig to scan for image-integrity issues. According to Meagan Phelan, communications director for Science in Washington DC, the tool has not yet uncovered any AI-generated images.

Springer Nature, which publishes Nature, is developing its own detection tools for text and images, called Geppetto and SnapShot, which flag irregularities that are then assessed by humans. (The Nature news team is editorially independent of its publisher.)

Fraudsters, beware

Publishing groups are also taking steps to address AI-made images. A spokesperson for the International Association of Scientific, Technical and Medical (STM) Publishers in Oxford, UK, said that it is taking the problem “very seriously” and pointed to initiatives such as United2Act and the STM Integrity Hub, which are tackling paper mills and other scientific-integrity issues.

Christopher, who is chairing an STM working group on image alterations and duplications, says that there is a growing realization that developing ways to verify raw data — such as labelling images taken from microscopes with invisible watermarks akin to those being used in AI-generated text — might be the way forward. This will require new technologies and new standards for equipment manufacturers, she adds.

Patrick and others are worried that publishers will not act quickly enough to address the threat. “We’re concerned that this will just be another generation of problems in the literature that they don’t get to until it’s too late,” he says.

Still, some are optimistic that the AI-generated content that enters papers today will be discovered in the future.

“I have full confidence that technology will improve to the point that it can detect the stuff that’s getting done today — because at some point, it will be viewed as relatively crude,” Patrick says. “Fraudsters shouldn’t sleep well at night. They could fool today’s process, but I don’t think they’ll be able to fool the process forever.”