Study population

Ten donorârecipient pairs were selected from the original 45 HCT recipients and HLA-matched sibling donors who were enrolled in the original study3. All donors and recipients gave written informed consent, and the original study and subsequent amendment were approved by the local ethics committee (KEK-ZH, 2015-0053 and 2019-02290; Kantonale Ethikkommission-Zurich) in accordance with the declaration of Helsinki. The priority for selecting patients for our analysis was the potential availability of follow-up material, as some patients had gone on to develop haematological malignancies, been found to have lost donor chimerism or been lost to follow-up since the initial study. Beyond that, we aimed to include a variety of sibling pair ages, stem cell source and conditioning type. We also had awareness of some CHIP clones detected in recipients and/or donors in the original study, and hence also wanted a mixture of scenarios.

Cell isolation

Granulocytes were isolated from 10âml of EDTA anticoagulated peripheral blood using the EasySep Direct Neutrophil Isolation Kit (Stem Cell Technologies) according to the manufacturerâs instructions. CD34+ HSPCs were isolated from 20âml of EDTA anticoagulated peripheral blood using the human CD34 MicroBead Kit (Miltenyi Biotec) according to the manufacturerâs recommendations. B cells, T cells and monocytes were flow-sorted from CD34â cell fractions using the FACSAria III flow cytometer (BD Biosciences). Antibodies used were PE/Cyanine7 anti-human CD14 (BioLegend, 301814), APC anti-human CD3 (BioLegend, 317318) and FITC anti-human CD19 (BioLegend, 363008), diluted and used according to manufacturer instructions, with the validation of antibodies performed by the manufacturer.

Clonal expansion

CD34+ HSPCs were plated in 9âml cytokine-supplemented methylcellulose medium (Stem Cell Technologies) as described previously41. After 14 days of culture at 37â°C and 5% CO2 single colony-forming units were picked and were each resuspended and processed in 20âµl QuickExtract DNA Extraction Solution (Lucigen).

DNA extraction

DNA from granulocytes, monocytes, B cells and T cells was isolated using the QIAamp DNA Mini Kit according to the manufacturerâs recommendations.

Library preparation and WGS

A target of 1â6âng of DNA from each colony underwent low-input library preparation as previously described using 12 cycles of PCR amplification42. Paired-end sequencing reads (150âbp) were generated using the Illumina NovaSeq 6000 platform resulting in around 8â15Ã coverage per colony (Supplementary Fig. 1a). BWA-MEM was used to align sequences to the human reference genome (NCBI build37).

Mutation calling in clonal WGS data

SNVs and indels were initially called against a synthetic unmatched reference genome using the in-house pipelines CaVEMan (cgpCaVEMan) and Pindel (cgpPindel)43,44. For all mutations passing quality filters in at least one sample, in-house software (cgpVAF; https://github.com/cancerit/vafCorrect) was used to produce matrices of variant and normal reads at each site for all HSPC colonies from that donorârecipient pair.

Multiple post hoc filtering steps were then applied to remove germline mutations, recurrent library preparation and sequencing artefacts, and probable in vitro mutations, as detailed below:

-

(1)

A custom filter to remove artefacts specifically associated with the low-input library prep process (https://github.com/MathijsSanders/SangerLCMFiltering). This is predominantly targeted at artefacts introduced by cruciform DNA structures.

-

(2)

A binomial filter was applied to aggregated counts of normal and variant reads across all samples. Sites with aggregated count distributions consistent with germline single-nucleotide polymorphisms were filtered.

-

(3)

A beta-binomial filter was applied to retain only mutations of which the count distributions across samples came from an overdispersed beta-binomial distribution consistent with an acquired somatic mutation.

-

(4)

Mutations called at sites with abnormally high or low mean coverage were considered unreliable/possible mapping artefacts and were filtered.

-

(5)

For each mutation call, normal and variant reads were aggregated from positive samples (â¥2 variant reads). Sites with counts inconsistent with a true somatic mutation were filtered.

-

(6)

The remaining mutations were retained only if there was at least one sample that met all minimum thresholds for variant read count (â¥3 for autosomes, â¥2 for XY chromosomes), total depth (â¥6 for autosomes, â¥4 for XY chromosomes) and a VAFâ>â0.2 for autosomal mutations or >0.4 for XY mutations in males.

Copy-number changes were called using ASCAT-NGS (ascatNgs)45 and SVs with GRIDSS46. Protein-coding consequences were annotated using VAGrENT47 and these were used for inferring the presence of positive selection using dNdScv40.

Genotyping each sample for somatic mutations

Each sample was genotyped for each somatic mutation in the filtered mutation set. For each mutation, samples with a VAFâ>â0.15 and at least 2 variant reads were considered positive; samples with no variant reads and a depth of at least 6 were considered negative; samples not meeting either of these criteria were considered uninformative.

Phylogeny inference

Phylogenies were inferred using the maximum parsimony algorithm MPBoot48. This efficient algorithm has been shown to be effective for the robust genotypes built with WGS of clonal samples, as is performed here, and is comparable to other maximum-likelihood-based algorithms18,19. To test this, we performed phylogeny inference for all trees with the maximum-likelihood algorithm IQtree (http://www.iqtree.org/) and compared the resulting phylogenies to those from MPBoot. These showed extremely similar structures in all cases as shown by high RobinsonâFoulds (range, 0.955â0.933) and Quartet similarity scores (range, 0.903â1.000). In almost all cases, the differences were in the orientation of early developmental splits that would have no bearing on the downstream analysis (Supplementary Fig. 6).

Many different algorithms have been developed to reconstruct phylogenetic trees based on DNA sequences. These character-based algorithms rely on different approaches: maximum parsimony, maximum likelihood or Bayesian inference49. Maximum parsimony-based algorithms seek to produce a phylogeny that requires the fewest discrete changes on the tree. As the number of nucleotide changes is minimized, this approach implicitly assumes that mutations are likely to occur only once. Thus, maximum parsimony may produce erroneous phylogenies when there is a high likelihood of recurrent or reversal mutations, such as with long divergence times or high mutation rates, neither of which generally apply to mutations in normal somatic cells. Phylogenetic tree algorithms relying on maximum likelihood or Bayesian inference are model-based, in that they require a specific notion of the parameters governing genetic sequence evolution to calculate either distances or likelihoods. Often, this involves a general time-reversible model of sequence evolution50. All these approaches have been widely applied to the reconstruction of phylogenetic trees between species or individuals49. However, the task of constructing a phylogeny of somatic cells derived from a single individual is fundamentally different from reconstructing species trees in three ways:

-

(1)

Precise knowledge of the ancestral state: in contrast to the unknown ancestral genetic state in alignments of sequences from multiple species, the ancestral DNA sequence at the root of the tree (namely the zygote) can readily be inferred from the data. As all cells in the body are derived from the fertilized egg, any post-zygotic mutation will be present in only a subset of the leaves of the tree. Thus, the genetic sequence at the root of the tree is defined by the absence of all of these mutations. This simple observation effectively roots the phylogeny.

-

(2)

Unequal rates of somatic mutation versus reversion: to accommodate the uncertainty in the ancestral state and the direction of nucleotide substitutions, model-based phylogeny reconstruction has relied on a time-reversible model of nucleotide changes50. In principle, this states that the probability of a certain substitution (for example, C>T) is equal to its inverse (T>C). In somatic mutagenesis, as the direction of change is known, assuming general reversibility of mutational probabilities fails to acknowledge the genuine discrepancies in the likelihood of certain (trinucleotide) substitutions. For example, a C>T mutation in a CpG context is much more probable than a T>C at TpG due to the specific mutational processes acting on the genomeâin this case, spontaneous deamination of methylated cytosine (commonly referred to as SBS1).

-

(3)

Low somatic mutation rates in a human lifespanâwhen accounting for the size of the human genome, the number of mutations that are informative for purposes of phylogeny reconstruction, namely SNVs shared between two or more samples, is generally low compared to the settings of phylogenies of species or organisms. This means that the probabilities of independent, recurrent mutations at the same site or reversals of those nucleotide changes (back mutations) are small and have negligible effects on the accuracy of phylogenetic reconstruction. Thus, a mutation shared between multiple samples can generally be assumed to represent a single event in an ancestral cell that has been retained in all its progenyâthe underlying principle of maximum parsimony.

Thus, on both empirical metrics and theoretical grounds, maximum parsimony methods perform as accurately as model-based methods for reconstructing phylogenies of somatic cells, and require fewer additional assumptions.

Exclusion of non-clonal samples

Haematopoietic colonies embedded within methylcellulose may grow into one another, or derive from more than one founder cell, resulting in colonies that are not single-cell derived. Such samples may interfere with phylogeny building and have lower numbers of called mutations, and were therefore excluded. Detection was done in two steps. The first was based on the principle that somatic mutations from clonal samples should have a peak VAF density of around 0.5. Thus, after exclusion of germline mutations and recurrent artefacts using the exact binomial and beta-binomial filtering steps, the VAF distributions of positive mutations in a sample were assessed. Samples with a maximum VAF distribution density of <0.4 (corresponding to a sample purity of <80%) were excluded. The second step was performed following a first iteration of phylogeny building using samples passing the first step. Each sample was tested against the phylogeny to see if the mutation VAFs across the tree were as expected for a clonal sample. A clonal sample should have either branches that are positive (mutation VAFs, ~0.5) or negative (mutation VAFs, ~0). Thus, for each branch in each sample, the variant and total read counts were combined across all branch mutations. These counts were then tested for how likely they were to come from either (1) at least that expected for a heterozygous somatic mutation distribution, with some contamination allowed (one-sided exact binomial test, alternative hypothesisâ=âless than probability, probabilityâ=â0.425) or (2) no more than that expected for absent mutations, with some false positives allowed (one-sided exact binomial test, alternative hypothesisâ=âgreater than probability, probabilityâ=â0.05). If the samples had any branches with read counts that were highly inconsistent with both tests (maximum q-valueâ<â0.05, Bonferroni correction) or had three or more branches that were minorly inconsistent with both tests (maximum P value of 0.05, no multiple-hypothesis testing correction) the sample was considered to be non-clonal and excluded. A second iteration of phylogeny inference was then performed without the non-clonal samples. These steps have a degree of tolerance of minimally contaminated samples, and samples with >80â85% purity will generally be retained. However, even this lower level of contamination will have an impact on the sensitivity of mutation calling and sample purity was therefore taken into account for mutation burden correction.

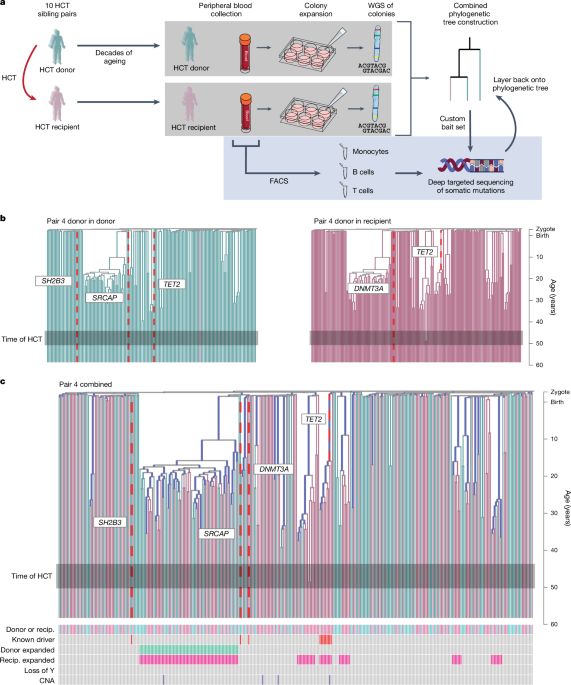

Recognition of different germline background for samples

Initial phylogeny building was done using all samples with a maximum VAF distribution density of >0.4. In three cases (pairs 3, 4 and 9), this initial phylogeny revealed an outlier clade with an apparent extremely high mutation burden of >30,000. The outlier clades contained only colonies grown from recipient samples, which raised the possibility that these may represent recipient haematopoiesis. For pair 3, the samples within the outlier clade were in fact identified as deriving from pair 10, and therefore represented interindividual contamination. This was clear, as 80% of the mutations in this clade were germline mutations from pair 10, and it also included the DNMT3A p.R899G and TET2 p.A996fs*11 mutations. For pairs 4 and 9, this was not the case. There were no known pathogenic variants in the outlier clade. Feasibly, the samples may derive from residual recipient-derived haematopoiesis, or from contamination from another individual not in the study. As the donors are siblings, recipients will share around half the same germline variants of the donor. Accordingly, if the outlier clade were from residual recipient chimerism, the branch length of the outlier clade should be half the number of the ~30,000 germline mutations identified in the donors, that is, 15,000 mutations. However, in all cases, the outlier clade contained around 30,000 mutations, consistent with contamination from an unrelated individual rather than residual recipient haematopoiesis. In the two individuals where there was >1 sample within the outlier clade, these were from adjacent wells of the 96-well plate into which colonies were picked, making it likely that in fact the separate samples derived from the same original founder cell, that presumably grew into a large branching colony structure that was picked multiple times. Mutation filtering and phylogeny building was rerun excluding contaminating samples.

Removal of sample duplicates

Some haematopoietic colonies grown in methylcellulose have an irregular branching appearance and are misinterpreted as multiple separate colonies, resulting in several samples being inadvertently picked from the same colony. Such samples appear highly related on the phylogenetic tree, with only a few private mutations, representing predominantly in vitro acquired mutations. Recognition of these duplicates is aided by the fact that (1) in many cases, duplicates are picked into adjacent/nearby wells, as colony picking is performed systematically around the well; and (2) in most biological scenarios, such highly related sample pairs are extremely rare due to the larger short-term HSC/HSPC pool19. Thus, pairs of samples with fewer than 30 private mutations, and close positions on the 96-well plate were assumed to be duplicates of the same colony, and one sample was removed.

CNAs

CNAs were called from WGS data using ASCAT45,46. A good-quality matched sample from the same pair was used as a ânormal referenceâ after manual inspection of raw copy-number plots to exclude abnormalities. Copy-number profiles were manually reviewed, and alterations that were clearly distinguishable from background noise were tabulated.

SV calling

SVs were called with GRIDSS46 (v.2.9.4) with the default settings. SVs larger than 1âkb in size with QUALââ¥â250 were included. For SVs smaller than 30âkb, only SVs with QUALââ¥â300 were included. Furthermore, SVs that had assemblies from both sides of the breakpoint were considered only if they were supported by at least four discordant and two split reads. SVs with imprecise breakends (that is, the distance between the start and end positionsâ>â10âbp) were filtered out. We further filtered out SVs for which the s.d. of the alignment positions at either ends of the discordant read pairs was smaller than five. Filtered SVs were rescued if the same SVs passing the criteria were found in the other samples. To remove potential germline SVs and artefacts, we generated the panel of normal by adding in-house normal samples (nâ=â350) to the GRIDSS panel of normal. SVs found in at least three different samples in the panel of normal were removed. Variants were confirmed by visual inspection and by checking whether they fit the distribution expected based on the SNV-derived phylogenetic tree.

Mutational signature extraction

Mutational signatures were extracted de novo using a hierarchical Dirichlet process51 as implemented in R package HDP (https://github.com/nicolaroberts/hdp). These reflect the signatures of underlying mutational processes that have been active in the HSPC colonies. Each branch on the phylogeny was treated as an independent sample, and counts of mutations at each trinucleotide context were calculated. Branches with <50 mutations were excluded as, below this threshold, random sampling noise in the mutation proportions becomes problematic.

Plots of signature contributions in each sample in Extended Data Fig. 3 represent the means of signature contributions of individual branches included within the sample (weighted by the branch length), with final values then scaled by the sample total mutation burden to reflect absolute signature contributions. Note that branches with <50 mutationsâprimarily early embryonic branchesâare not included in this estimate as they are excluded from the signature extraction step. This means that processes primarily operative in embryogenesis are under-represented in these estimates.

Correction of mutation burden

The number of somatic mutations called in any given sample depends not only on the number of mutations present, but also on the sequencing coverage and on the colony purity. For each individual, reference sets of germline polymorphisms (separate sets for SNVs and indels) were defined (nâ>â30,000 SNVs in all cases). These were mutations that had been called in many samples (as mutation calling was performed against an unmatched synthetic normal), and for which aggregated variant/reference mutation counts across samples from an individual were consistent with being present in the germline. For each sample, the proportion of germline SNVs called by CaVEMan and passing the low-input filter was considered the âgermline SNV sensitivityâ, and the proportion of germline indels called by Pindel was the âgermline indel sensitivityâ. For pure clonal samples, the sensitivity for germline variants should be the same as for somatic variants. Therefore, for samples with a peak VAFâ>â0.48 (corresponding to a purity of >96%), this germline sensitivity was also considered the âsomatic variant sensitivityâ and was used to correct the number of somatic variants. However, for less pure samples (purity, 80â96%), the sensitivity for somatic variants will be lower than for germline variants as the former will not be present in all cells of the sample. Thus, an additional âclonality correctionâ step was applied. The expected number of variant reads sequenced for a heterozygous somatic mutation in a non-clonal sample will be nvâ~âbinomial(N,p) where N is the sequencing coverage at the mutation position, and p is the sample peak VAF (rather than pâ=â0.5 as is the case for a pure clonal sample). The likelihood of the mutation being called given nv variant reads and N total reads was taken from a reference sensitivity matrix. This matrix was defined from the germline polymorphism sensitivity data across 20 samples, where for all combinations of nv and N, the proportion of mutations called in each sampleâs final mutation set was assessed. The sequencing coverage distribution across putative somatic mutations was considered the same as that across the germline polymorphism set. Thus, for each value of N (the depths across all germline polymorphisms in that sample), a simulated number of variant reads nv was taken as a random binomial draw as described above, and whether this resulted in a successful mutation call taken as a random draw based on the probability defined in the sensitivity matrix. The total proportion of simulated somatic mutations successfully called was defined as the âsomatic variant sensitivityâ for that sample.

The somatic variant sensitivities were then used to correct branch lengths of the phylogeny in the following manager. For private branches, the SNV component of branch lengths was scaled according to

$${n}_{{\rm{cSNV}}}=\frac{{n}_{{\rm{SNV}}}}{{p}_{i}}.$$

Where ncSNV is the corrected number of SNVs in sample i, nSNV is the uncorrected number of SNVs called in sample i and pi is the somatic variant sensitivity in sample i.

For shared branches, it was assumed (1) that the regions of low sensitivity were independent between samples, (2) if a somatic mutation was called in at least one sample within the clade, it would be ârescuedâ for other samples in the clade and correctly placed. Shared branches were therefore scaled according to

$${n}_{{\rm{cSNV}}}=\frac{{n}_{{\rm{SNV}}}}{1-{\prod }_{i}(1-{p}_{i})}.$$

Where the product is taken for 1âââpi for each sample i within the clade. Neither assumption is entirely true. First, areas of low coverage are non-random and some genomic regions are likely to have below average coverage in multiple samples. Second, while many mutations will indeed be rescued in subsequent samples once they have been called in a first sampleâbecause the treemut algorithm for mutation assignment goes back to the original read counts and therefore even a single variant read in a subsequent sample is likely to lead to the mutation being assigned correctly to a shared branchâthis will not always be the case. Sometimes samples with a very low depth at a given site will have 0 variant reads by chance. In such cases, a mutation may be incorrectly placed. Both factors may result in under-correction of shared branches, but it is a reasonable approximation. SNV burdens corrected by this approach were then taken as the sum of corrected ancestral branch lengths for each sample, going back to the root.

Custom DNA capture panel design and targeted sequencing

Three separate custom panels were designed according to the manufacturerâs instructions (SureSelectXT Custom DNA Target Enrichment Probes, Agilent) for (1) pairs 6, 7, 9 and 10, (2) pairs 2, 3 and 8 and (3) pairs 1, 4 and 5. Custom panels were designed for groups of pairs such that sequencing error rates could be estimated from individuals without the mutation, although the specific grouping was for logistic reasons. Panel design proceeded similarly for each panel. All SNVs on shared branches of the phylogeny were covered if they met the moderate stringency repeat masking applied within the SureDesign platform (around 60% of loci). For short shared branches with no covered mutation loci after moderate stringency repeat masking, loci included after low stringency repeat-masking were accepted. A total of 10,000 SNVs per transplant pair from across private branches was selected based on more stringent criteria to maximize capture efficiency. They were considered only if (1) they met more stringent mutation filtering thresholds than those used for mutation calling (VAFâ>â0.35 for autosomal mutations, or VAFâ>â0.8 for XY mutations in males; beta-binomial rho valueâ>â0.3); (2) mutation loci were included after the most stringent repeat masking; and (3) minimal capture bait boosting was required to compensate for high DNA GC content. After this, mutations were ranked according to sequencing error rates, and those with lowest error rates selected first. Error rates were taken from the site-specific error rate information used for the Shearwater mutation-calling algorithm52. Typically, 5â10% of private SNVs were covered. Indels were included only if within driver-gene-coding sequences. Moreover, ten putative driver genes from a WGS study of clonal haematopoiesis53 were covered in their entirety (DNMT3A, TET2, ASXL1, PPM1D, ATM, MTA2, ZNF318, PRKCG, SRSF2 and KPNA7).

Four separate aliquots of 50âng of DNA from each bulk sorted cell type (granulocytes, monocytes, B cells and T cells) from each individual underwent low-input library preparation using nine cycles of PCR amplification. Paired-end sequencing reads (100âbp) were generated, hybridized to the appropriate custom bait capture panel, multiplexed on flow cells and then sequenced using the NovaSeq 6000 platform. In several cases, there was insufficient DNA to permit four aliquots of 50âng. In such cases, decreased input DNA down to 25âng and/or fewer aliquots were used. If <20âng total DNA was available, aliquots of 5âng were used with 12 cycles of PCR amplification during library preparation.

Driver mutation annotation

A broad 122-gene list of driver genes associated with haematological malignancy and/or clonal haematopoiesis was compiled from the union of (1) a 54-gene Illumina myeloid panel (TruSight myeloid sequencing panel; https://www.illumina.com/products/by-type/clinical-research-products/trusight-myeloid.html); (2) the 92-gene list used in a study of chemotherapy-associated clonal haematopoiesis29,54; (3) a 32-gene list of genes identified recently as under positive selection within the UK Biobank whole-exome blood sequencing data (Supplementary Table 1). We then looked for missense, truncating or splice variants in these genes, yielding 174 such variants (Supplementary Table 2). These were then manually curated down to 70 variants considered to be potentially pathogenic, with the remainder classified as variants of unknown significance. This was done using the COSMIC database of somatic mutations (https://cancer.sanger.ac.uk/cosmic), the broader literature and, in some cases, variant effect prediction tools such as SIFT and PolyPhen.

Gibbs sampler for inferring true VAF of mutations from deep sequencing data

The data comprise deep targeted sequencing of known somatic mutations from a given sample. Control samples (typically from another patient, where the mutations are absent) are also sequenced to enable estimation of sequencing error rates at each mutation position. Clonal relationships among the somatic mutations arise from a phylogenetic treeâit is assumed that this phylogenetic tree is known (and therefore considered fixed in the algorithm that follows).

We want to estimate a posterior distribution for the true VAF of every mutation in the bait set. The structure of the phylogenetic tree provides considerable constraint on the solution space of VAFs for clonally related mutationsâfor example, a mutation on a descendant branch cannot have a higher VAF than a mutation on a direct ancestral branch. Moreover, for a given node on the tree, comprising an ancestral branch and two or more descendant branches, the sum of the maximum VAFs for mutations on the descendant branches must be less than the minimum VAF of mutations on the ancestral branch.

The blocked Gibbs sampler infers the posterior VAFs of each mutation subject to the constraints imposed by the phylogenetic tree. Essentially, we use data augmentation to assign a maximum and minimum VAF for each branch in the tree (λj and κj in the notation below)âthe VAFs for each mutation on that branch must fall within that range.

Let Ïiââ¡âVAF of mutation i in the sample, the variable of interest; εiââ¡âerror rate of mutation i in the control samples; Ïiââ¡âerror rate of mutation i in the control samples; Yiââ¡ânumber of variant-specific reads reporting mutation i in the sample; Niââ¡âtotal coverage of mutation i in the sample (read depth); Bjââ¡âbranch j from the phylogenetic tree, T, comprising a set of mutations assigned to it; λjââ¡âmaximum allowable VAF in the sample for mutations on Bj; κjââ¡âminimum allowable VAF in the sample for mutations on Bj.

Block 1: updating Ï

i for all mutations

Proceeding branch by branch, the VAF of each mutation on a given branch, Bj, must fall within the range [κj,âλj]. We assume an uninformative priorâthat is, Ïiââ¼âU(κj,âλj).

Reads reporting the variant allele can arise either from a read that correctly reports a mutant DNA molecule or a sequencing error on a read from a wild-type DNA molecule. This means that the expected proportion of reads reporting the variant allele is calculated as

$${\pi }_{i}={\rho }_{i}+{\varepsilon }_{i}-2{\rho }_{i}{\varepsilon }_{i}$$

We assume a binomial distribution of the variant read counts given the VAFâthat is, Yiââ¼âBin(Ïi,âNi).

We use a Metropolis-Hastings approach to update the estimates for Ïi. A new, proposed VAF for iteration k is drawn from a truncated Beta distribution

$${\dot{\rho }}_{i}^{\left(k\right)} \sim \text{Beta}\_\text{truncated}\,\left(\frac{{\rho }_{i}^{\left(k-1\right)}\sigma }{\left(1-{\rho }_{i}^{\left(k-1\right)}\right)},\sigma ;{\kappa }_{j}^{\left(k-1\right)},{\lambda }_{j}^{\left(k-1\right)}\right)$$

where Ï is a user-defined scale factor to be chosen to optimize the acceptance rate of the MetropolisâHastings update. The acceptance ratio is then calculated from the distribution functions of the binomial under the current and proposed values for the VAF in the usual way, and the new value is either accepted or rejected.

Block 2: updating λ

j and κ

j for all branches

To update the maximum and minimum VAFs for each branch, we proceed node by node across the tree (where a node represents coalescences in the tree, comprising one inbound, ancestral branch and two or more outbound, descendant branches). As above, the sum of the maximum VAFs for mutations on the outbound branches must be less than the minimum VAF of mutations on the inbound branch. This means that there is an amount of âunallocated VAFâ that represents the difference between these values:

$${{\rm{VAF}}}_{{\rm{Unallocated}}}=\min \left\{{\rho }_{i}^{\left(k\right)}on\,{B}_{{\rm{Inbound}}}\right\}-\sum _{{x{\epsilon }B}_{{\rm{Outbound}}}}\max \left\{{\rho }_{i}^{\left(k\right)}{on}\,x\right\}$$

We partition this unallocated VAF among the inbound and outbound branches using draws from a uniform distribution. Essentially, if there are n branches coming in or leaving the current node, we draw n values from U(0,âVAFUnallocated), sort them and take adjacent differences: u(1)âââ0,âu(2)âââu(1),ââ¯, VAFUnallocatedâââu(n). These are then allocated to the branches:

$${\kappa }_{{\rm{Inbound}}}^{\left(k\right)}=\min \,\left\{{\rho }_{i}^{\left(k\right)}on\,{B}_{{\rm{Inbound}}}\right\}-({u}_{\left(1\right)}-0)$$

$${\lambda }_{{\rm{Outbound}}}^{\left(k\right)}=\max \,\left\{{\rho }_{i}^{\left(k\right)}on\,{B}_{{\rm{Outbound}},{\rm{c}}}\right\}+\left({u}_{\left(c\right)}-{u}_{\left(c-1\right)}\right)$$

Implementation

We doubled the total read depth, Ni, for mutations on the sex chromosome in males. We used a scale parameter of Ïâ=â50. The root node was assigned a fixed VAF of 0.5 and terminal nodes a fixed VAF of 10â10. The Gibbs sampler was run for 20,000 iterations, with 10,000 discarded as burn-in and thinning to every 100 iterations.

Defining posterior distribution of post-developmental clone fractions

The output of the Gibbs sampler is the posterior distribution of the VAF of each mutation covered by the custom hybridization panel. This was converted into clonal fractions of post-development clones. First, mutation VAFs were multiplied by two to give clonal fractions (assuming heterozygosity). The tree was then cut at a height of 100 mutations of molecular time to define when clones were considered to originate. While this is somewhat empirical, any molecular timepoint soon after development (which ends ~50â60 mutations) would yield similar results. For each branch traversing the defined clone cut-off point, the position of the cut-off along the branch was calculated, for example, if the branch goes from a height of 50 mutations to 150 mutations, a molecular time of 100 mutations would be halfway along the branch. Depending on the number of mutations covered from that branch, the position along that branch best reflecting the molecular time cut-off was calculated, for example, in the above example, if 60 out of the 100 mutations on the branch were included in the custom panel, the posterior clonal fraction of the 30th ranked mutation (ordered by decreasing median posterior clonal fraction) best approximates that of a clone originating at 100 mutations of molecular time. Where point estimates are displayed, the median posterior value is used.

Measures of clonal diversity

Clonal diversity was assessed (1) from the individual phylogenetic tree structures and (2) from the clonal fractions in the targeted sequencing results of mature cell types.

Phylogenetic diversity

We first calculated the mean pairwise distance55 by taking the mean of the distance matrix obtained using the cophenetic.phylo function from the R package ape. This is the mean phylogenetic distance (that is, the sum of branch lengths of the shortest path between samples) across all sample pairs in the phylogeny. We next calculated the mean nearest taxon distance55, again starting with the distance matrix from the cophenetic.phylo function, but this time taking the minimum non-zero value from each row, and calculating the mean of these values. This represents the mean of the phylogenetic distance to the nearest sample, across all samples. For both measures, the ultrametric version of the phylogenies was used.

SDI analysis

The SDI (H) is defined as:

$$H=-\mathop{\sum }\limits_{i=1}^{k}{p}_{i}\log \left({p}_{i}\right)$$

where k is the total number of groups within a population, and pi is the size of group i as a proportion of the total population. For our purposes, k is the total number of post-developmental clones determined from the phylogeny (again defining a clone as originating at 100 mutations of molecular time) and pi is a cloneâs fraction determined from the targeted sequencing results (as described above), normalized to the total captured clonal fraction in that individual/cell type. For example, if clone i has a clonal fraction of 0.1 and the sum of fractions across all clones is 0.5, piâ=â0.2.

Estimating the relative size of driver mutations in donors and recipients

For each mutation of interest, the 100 posterior value estimates of the true mutation VAF in recipients were divided by the 100 estimates of the VAF in donors, giving a posterior distribution for the ratio. The median and 95% posterior intervals of this distribution were calculated.

Simulation frameworks

Inference of engrafting cell number and demonstration of transplant-specific selection was performed using an ABC methodology, described in the next section. In ABC, a large number of simulated datasets, generated under the proposed model, takes the place of computation of the likelihood function for the model. Such simulations will never perfectly emulate the real-life scenario, but they can be useful to get a sense of biological parameters, within the constraints of the model used. To this end, we implemented several simulation models of allogeneic transplantation within the in-house developed R package ârsimpopâ v.2.2.4 (www.github.com/nickwilliamssanger/Rsimpop). This package allows simultaneous simulation of multiple-cell compartments, each with their own target population sizes, while recording the population phylogeny. It also allows subcompartments with differential fitness, mirroring the consequences of driver mutations. Population growth occurs through a birthâdeath process. Population growth occurs without cell death until a population reaches the target size, at which point the population is maintained with balanced cell birth/death.

The starting point of our simulations was the posterior distribution for the parameters of a model of normal ageing developed previously19,20. In our study of normal ageing19, the ABC method was first applied to a neutral model of haematopoietic stem cell dynamics, which is applicable to younger individuals. Using this approach, it was possible to generate a large sample (Nâ=â2,000) of parameter values from the joint posterior distribution of the parameter Nt (where N is the HSC population size, and t is the time between symmetric HSC cell divisions). In our study of ageing haematopoiesis, we further found that the changes in haematopoietic phylogeny structure seen with increasing age could be explained by constant acquisition of driver mutations with varying selection coefficients introduced into the HSC population through life19.

The ABC method was used to generate a large sample (Nâ=â2,000) from the joint posterior distribution for the parameters of this model (specifying the rate of introduction of driver mutations into the population, and the distribution of selection coefficients of those mutations). We used this posterior distribution (as represented by the samples of parameter values) as the prior distribution for these same parameters in the ABC analysis of the transplant phylogenies reported here (Extended Data Fig. 9). We also returned to the neutral model, and applied it to the phylogeny data from the two youngest donors (aged 29 and 38) in that study, to generate a large sample from the posterior distribution of the parameter Nt. This posterior distribution was used as the prior distribution for the parameter Nt in the ABC analysis of the transplant phylogenies.

Simulation model 1: no transplant-specific selection

Simulation begins with a single cellâthe zygote of the HCT donor. Population growth occurs through a birth process until a target population sizeâthe size of the HSC poolâis reached. As the previous estimates were for the value of NHSCâÃât, we keep a fixed value of t for all simulations (the time between HSC symmetric divisionsâ=â1 year) and choose N as a random draw from the posterior estimates from a previous study19. Once reached, the target population size NHSC is maintained by matching cell division rates with cell death/differentiation. Driver mutations are added into single cells in the population at a fixed rate through time (random draw of posteriors from ref. 19), by assigning cells a selection coefficient Shomeostatis (a random draw from a gamma distribution with shape and rate parameters themselves taken as a random draw from the posteriors from ref. 19), which is then passed on to all future cell progeny. This Shomeostatis results in cells from driver clones being more likely to undergo symmetric cell division than others.

Simulation of donor haematopoietic ageing continues accordingly until the age of the donor at HCT, Donor_ageHCT. At this point, a number of HSCs (Ntrans) are selected at random from the donor population of HSCs to be transplanted into the recipient. This number was picked from a prior distribution:

$${\text{log}}_{10}\left({N}_{{\rm{trans}}}\right) \sim {\rm{Uniform}}\left(\min =2.7,\text{max}=4.7\right)$$

This results in absolute values of Ntrans ranging between 500 and 50,000. Within rsimpop, these engrafting HSCs are assigned to a new recipient compartment. Selection coefficients of transplanted clones harbouring driver mutations are maintained, but not altered, during HCT. Regrowth of the HSC population from Ntrans to the target NHSC population size and subsequent homeostatic haematopoietic ageing then proceeds independently within the donor and recipient until the time of the blood draw, donor_ageBD. At this point, the simulation is stopped and HSCs are picked at random from the donor and recipient compartments, corresponding experimentally to the cells grown into colonies that underwent WGS.

Simulation model 2: incorporation of engraftment-specific selection

Simulations initially proceed as in model 1. However, at the point of selecting the Ntrans HSCs to be transplanted, clones harbouring driver mutations were given an additional âengraftment fitnessâ coefficient Sengraftment, independent of the usual steady-state selection coefficient Shomeostasis, which then was used as a weighting for the probability of their selection for transplant within the base R function sample. Engraftment fitness coefficients for each driver clone were chosen as a random draw from a truncated gamma distribution:

$${S}_{{\rm{engraftment}}} \sim {\rm{Gamma}}\,\left({\rm{shape}}\,=\,0.5,rate\,=\,0.5\right)$$

These gamma distribution parameters were chosen empirically. The engraftment fitness of non-driver-containing cells was then set as the 30th centile value of all values of Sengraftment, such that some clones with driver mutations, conferring a selective advantage during homeostasis, may in fact have reduced fitness at engraftment.

Simulation model 3: incorporation of post-engraftment selection

Simulations proceed as in model 1. However, after transplantation, 10â30% of driver-containing clones within the recipient may have an exaggeration of their selection coefficient Shomeostasis by 50â600%. This exaggeration of their selective advantage in the post-engraftment period is time-limited, continuing for 5 years, before reverting to the previous value. The motivation for the time-limited selective advantage is that the immediate post-transplant environment is unusual for several reasons: there is profound pancytopenia and the recipient bone marrow is hypoplastic after conditioning chemotherapy; the marrow microenvironment has recently been affected by leukaemia and intensive chemotherapy that may alter the selective landscape; there are frequently multiple infective or inflammatory episodes during the first few years after transplant as the innate and adaptive immune systems reconstitute; there is often residual host immunity that wanes over time. All of these factors are most pronounced in the early post-transplant period and are likely to resolve, at least partially, with time.

ABC of engrafting cell number

Simulations were run for each pair (nâ=â100,000) and key features of the separate donorârecipient phylogenies summarized by 13 statistics (illustrated examples of summary statistics from the recipient phylogenies shown in Extended Data Fig. 6): (1â3) the sizes of the largest 3 clades within the donor phylogeny; (4â6) as 1â3, but for the recipient phylogeny; (7) the number of singleton samples within the donor phylogeny (singleton is defined as a sample with no related samples from after the time of development); (8) as 7, but for the recipient phylogeny; (9) the number of coalescences within the donor phylogeny from around the estimated time of HCT, where this peri-HCT window is defined as coalescences occurring at an estimated age of between 5 years before, and 5 years after HCT; (10) as 9, but for the separate recipient phylogeny; (11) the number of coalescences in the donor phylogeny from an estimated timepoint after development, but before HCT, where this pre-HCT window is defined as coalescences occurring at an estimated age of between 5 years old, and 5 years before HCT; (12) as 11, but for the separate recipient phylogeny; (13), the maximum number of coalescences in the peri-HCT window (as defined in 9) within a single clade of the recipient phylogeny. This statistic was designed the capture the features of growth selection seen in the data.

Each vector of summary statistics computed from a simulated dataset was then compared to the vector of summary statistics computed from the experimentally generated data by calculating a Euclidean distance between these vectors. For this purpose, empirically modified versions of the experimentally generated phylogenies were used to provide best estimates of time trees, that is, those for which the height of a branch point represents the actual age at which that cell division occurred. For this, branch lengths were first corrected for sensitivity and sample clonality. The branch lengths were then shortened based on the estimated contribution of platinum and APOBEC mutational signaturesâthe sporadic signatures that are unlinked to time. Finally, terminal branches were shortened by 60 mutations, an estimate for the combined number of in vitro– and differentiation-associated mutations. This number was approximated based on (1) the excess of the y intercept of the linear regression of SNV burden against age (y interceptâ=â137; Extended Data Fig. 5a) over the known mutation burden at birth from other studies (SNV burden of ~60 in cord blood19). Moreover, the sum of estimates of the number of differentiation associated mutations (~30 mutations19) and typical numbers of in vitro acquired mutations during clonal expansion on methylcellulose (10â20 mutations, unpublished data) are of a similar order. After these branch-length corrections, the tree was made ultrametric using the previously described iteratively reweighted means algorithm, which assumes greater confidence for branch lengths where branches are shared by multiple samples19.

Inevitably, the definitions of transplant epoch used in the summary statistics could have a key role in informing the parameter estimates. It is also the case that the timing of the coalescences is subject to some random variation in that mutations are acquired at a fairly constant rate, but the absolute numbers acquired in a given time period are subject to at least Poisson variation. To assess the robustness of the ABC analysis, we assessed whether this variation leads to significant uncertainty in the numbers of coalescences in each epoch. First, we used a bootstrapping approach whereby all branch lengths were redrawn from a negative binomial distribution with µ equal to the original number of mutations, and the Πoverdispersion parameter estimated from the distribution of HSPC mutation burdens in that pair (100 bootstraps performed for each pair). We then repeated the steps of making the tree ultrametric and scaling to time, and calculated the number of coalescences falling in each epoch used in the ABC. This demonstrated that the numbers are robust, with only subtle variation in some values where coalescences fall close to the borders between epochs (Supplementary Fig. 7).

Second, we assessed whether varying the specific definitions of the epochs used for summary statistics meaningfully altered the posterior distributions of the ABC. Specifically, we assessed four alternative sets of epochs: (1) dividing the pre-transplant interval into more epochs; (2) dividing the peri-transplant interval into more epochs; (3) using a narrower range of molecular time for the peri-transplant interval; and (4) using a wider range of molecular time for the peri-transplant interval. Reassuringly, across the different ABC models and parameters, the different donorârecipient pairs and the different methods for estimating the posterior, we found that the four alternative definitions of HCT epochs had minimal effect on the inferred posterior distributions (Supplementary Fig. 8).

In more detail, in the original set of summary statistics, the peri-transplant interval was an interval of duration 10 years, centred on the time of transplant; and the pre-transplant interval began at age 5 years and ended at the timepoint where the peri-transplant interval begins (5 years before the time of transplant). In the pre_interval_divided set of summary statistics, the pre-transplant interval was replaced by two pre-transplant intervals, the first beginning at age 5 years and ending at the mid-point between 5 years of age and 5 years before the time of transplant. In the peri_interval_divided set of summary statistics, the peri-transplant interval was replaced by two peri-transplant intervals, each of duration 5 years. In the peri_interval_narrower set of summary statistics, the peri-transplant interval was an interval of duration 5 years, centred on the time of transplant. In the peri_interval_wider set of summary statistics, the peri-transplant interval was an interval of duration 15 years, centred on the time of transplant. At the same time as we compared the posterior densities generated using each of the five alternative sets of summary statistics, we also extended this comparison across four alternative ABC methods. These are the ABC rejection method and three ABC regression methods (ridge regression, local linear regression and a neural network method).

Comparisons were performed using the abc function of R package abc. Within this function, each summary statistic is standardized using an estimate of the standard deviation (the median absolute deviation). The Euclidean distance of each set of summary statistics from the data is then calculated. The closest 1% of simulations are accepted. The parameters from the accepted simulations represent a sample from the (approximate) posterior distribution. In the rejection sampling method, no regression step is performed. Where a regression model is used, this is applied as implemented within the abc function. However, for the primary results present in Fig. 2, the rejection sampling method was used as this was most robust to alternate summary statistics.

ABC for estimates of phylogenetic age

Phylogenetic structure has been shown to become increasingly oligoclonal with age. In a previous study, the phylogenetic trees of 8 adults of varying ages were used to inform posterior estimates of fundamental parameters governing these features19. We ran an identical simulation frameworkâincorporating introduction of driver mutations into the HSC population at a constant rateâusing the posterior parameter estimates from ref. 19 as starting parameter values. We ran 25,000 simulations, varying the age of the final trees from 20 to 100 years, and varying the size of the simulated phylogenetic trees to match that of the different individuals.

We used the abc function from the R package abc to infer posterior estimates of the age of each individual, looking at recipient and donor phylogenies separately. In contrast to the other ABC, phylogenies were assessed per individual (not HCT pair) and therefore a smaller set of seven summary statistics was used to compare with the data: (1â3) the size of the largest 3 clades; (4) the number of singleton samples; (5â6) the number of coalescences in the 20â40th and 40â60th centile bins of the tree; and (7) the proportion of samples lying within expanded clades, defined here clades containing at least 3 of the sequenced samples. A clade here is defined as a set of samples with a common ancestor after 50 mutations of molecular time (corresponding approximately to post-embryonic development).

The age of the top 5% of simulations were chosen for initial estimates of phylogenetic age. As before, a neural network regression was then performed to refine these estimates.

Using the lme function from the R package lme4, we performed a linear mixed-effects regression to estimate the impact of donor/recipient status on phylogenetic age. All individual posterior estimates of phylogenetic age were used in the regression. Fixed effects in the model were donor age (continuous predictor variable), and donor/recipient status (categorical predictor variable). No interaction term was used. HCT pair ID was considered a random effect to account for the non-independence of the sets of posterior estimates.

Statistics for pruning and growth selection

We wanted to design statistics to capture and quantify the features of pruning and growth selection described in the âCauses of reduced clonal diversityâ section and shown in Fig. 4aâc, in a clone-specific manner, to reflect that different clones may experience an advantage at different points.

For each expanded clade, we wanted to quantify the increase of coalescences either (1) before the time of HCT, or (2) around the time of HCT, in the recipient compared to the donor. However, the growth selection statistic may be increased by neutral mechanisms in the context of a population bottleneck, and therefore is only strong evidence of selection where the total number of peri-transplant coalescences from across the tree are biased to that specific clade.

Pruning selection statistic

We first calculate 1â+âthe number of recipient coalescences in an expanded clade that time to the pre-HCT time window as a proportion of 1â+âthe total number of coalescences in that clade.

$${\rm{Pruning}}\;{\rm{selection}}\;{\rm{statistic}}=\,\left(\frac{1+\,{n}_{{\rm{pre}},R}}{1+{N}_{R}}\right)\,/\,\left(\frac{1+\,{n}_{{\rm{pre}},D}}{1+{N}_{D}}\right)$$

Where npre,R is the number of recipient coalescences in the specific expanded clade that time to the pre-HCT time window, NR is the total number of recipient coalescences in that expanded clade, and npre,D and ND are the equivalent numbers for the same expanded clade in the donor phylogeny. All values have one added to avoid dividing by zero.

Growth selection statistic

This is similar to the pruning selection statistic, but is focused instead on coalescences in the peri-HCT time window (those that time from five years before until five years after HCT).

$${\rm{Growth}}\;{\rm{selection}}\;{\rm{statistic}}=\,\left(\frac{1+\,{n}_{{\rm{peri}},R}}{1+{N}_{R}}\right)\,/\,\left(\frac{1+\,{n}_{{\rm{peri}},D}}{1+{N}_{D}}\right)$$

Where nperi,R is the number of recipient coalescences in the specific expanded clade that time to the peri-HCT time window, NR is the total number of recipient coalescences in that expanded clade, and nperi,D and ND are the equivalent numbers for the same expanded clade in the donor phylogeny. All values have one added to avoid dividing by zero.

Estimating the anticipated impact of long-lived T cell clones on T cell clonality

Our targeted sequencing results show that substantially lower proportions of T cells compared to myeloid cells derive from expanded clones at a given timepoint (when considering clones known from the phylogeny). We put forward several potential contributors to this difference in the âClonal output within lymphoid compartmentsâ section, one of which is that, at any given time, the clonal make-up of T cells reflects HSC output from up to 8â15 years earlier. Given that oligoclonality of the HSC compartment increases with age, the decreased clonality of T cells may simply reflect the more polyclonal output of these younger HSCs.

To assess how much of the observed difference in expanded clone proportions may result from this, we performed simulations (according to simulation framework 1) in which we compared the oligoclonality of the HSC population from 4â8 years before the time of blood sampling (reflecting the average age of T cells that have a lifespan of 8â15 years, that is, the average age is approximately half the lifespan at steady state), compared to that at the time of blood sampling (reflecting the age of the short-lived myeloid cells). We performed 3,000 simulations per individual, varying the lifespan between 8â15 years, and comparing the total proportion of T cells from expanded clones to myeloid cells from expanded clones as a function of the life span of T cell clones.

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.