A family physician points to an on-screen transcription produced by an AI program used to automate clinical documentation.

Credit: Kendall Warner/The Virginian-Pilot/Tribune News Service/Getty

In 2022, an eight-year-old girl in Birmingham, Alabama, was puzzling her physicians. Having been diagnosed with SHINE syndrome, a neurodevelopmental disease with only 130 recorded cases worldwide, she also presented with several atypical symptoms, such as a loss of motor control when colouring in spaces between the lines of a picture.

Owing to the rarity of SHINE syndrome, physicians at the Precision Medicine Institute of the University of Alabama at Birmingham had few resources to draw on when looking for treatment options. So they turned for help to Joni Rutter, director of the National Center for Advancing Translational Sciences (NCATS), and her team.

NCATS, which is based in Rockville, Maryland, had been developing what is known as the Biomedical Data Translator (or simply Translator). Driven by artificial intelligence (AI), the diagnostic tool uses a patient’s symptoms, or phenotype, or specific genes, to search through an extensive database to identify treatments related to the person’s profile. The database includes data sets on proteins, genes and metabolites. After the girl’s symptoms were loaded into Translator, the tool suggested guanfacine, a drug commonly used to control high blood pressure, as a treatment option. When the girl had been on the drug for five months, her mother reported a measurable improvement in her daughter’s behavioural and motor skills.

Nature Spotlight: Bench to bedside

Such work, in which innovative technologies quickly make an impact on patient health, has historically been rare. But translational science of this kind is at a crossroads, says Rutter. For an intervention to get from the laboratory bench to a patient’s bedside, it must first go through a series of preclinical and clinical trials to test its safety and effectiveness. This is easier said than done. For more than a decade, the success rate for phase I trials of investigational drugs (the first series of tests of a new drug on humans) has remained at just above 10%. And things don’t improve much further down the pipeline, Rutter says.

“For years, we have had trouble predicting the success of drug compounds,” she says. “Ninety per cent of the time they fail — and they fail largely in phase II or phase III. We’re not doing something right here.”

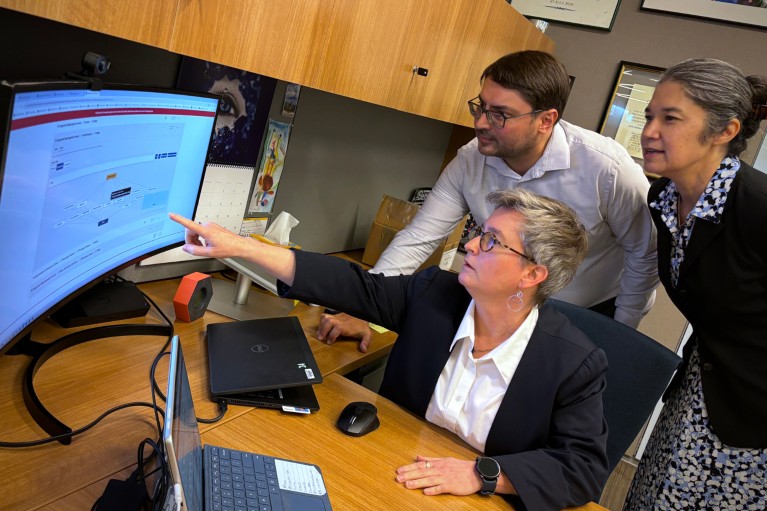

Translator is just one early example of how AI is beginning to affect translational science. Although it’s not yet ready for prime time, Rutter says that it’s only a matter of time until this AI tool, or others like it, takes on a bigger role.

“This is a promising proof of concept of how AI has really helped shape the narrative,” she says. “Ready or not, it’s happening.”

Translational troubles

According to a 2022 World Health Organization (WHO) report, only 27 antibiotic treatments were in development worldwide in 2021, down from 31 in 2017. As of 2022, existing classes of antibiotics have only about a one in 15 chance of passing trials and reaching patients. For new antibiotics, that success rate has fallen to just 1 in 30.

“Time is running out to get ahead of antimicrobial resistance [AMR], the pace and success of innovation is far below what we need to secure the gains of modern medicine against age-old but devastating conditions like neonatal sepsis,” said Haileyesus Getahun, then the WHO director of AMR global coordination, in a 2022 statement on behalf of the organization.

This gap between bench and bedside in drug development is known among translational-medicine experts as the valley of death and has been a persistent barrier for researchers working to move clinical solutions forwards. Part of the problem, says Christine Colvis, director of NCATS’ Office of Drug Development Partnership Programs, is that many complex drug compounds can have effects that are difficult to predict.

“The complexity comes from the fact that sometimes the drug interacts with a ‘target’ other than that for which it was designed or intended,” Colvis says. “This can lead to unanticipated side effects.”

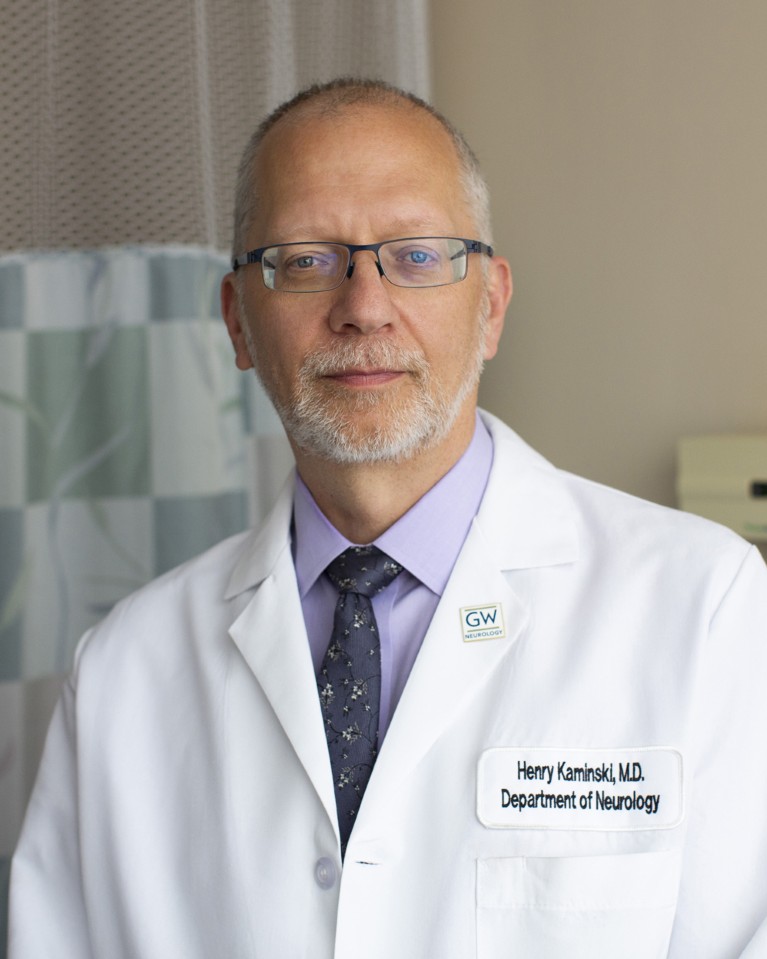

For Henry Kaminski, a neurologist at the George Washington University in Washington DC, another factor that drives clinical-trial failures, particularly for rare diseases, is a lack of standardized data.

“For any human-administered test, whether a physical examination by a physician or a coordinator instructing a patient on how to perform a patient-reported outcome measure, there is variability in performance,” Kaminski says.

Neurologist Henry Kaminski highlights a lack of standardized data as a key factor behind clinical trial failures.

Credit: George Washington University

It’s also possible that data collected in specific populations, such as from patients at a medical school, don’t necessarily carry over into a larger population for a clinical trial, he says. Similarly, preclinical trials carried out in animal models are often good but not perfect, and sometimes diseases cannot be modelled in animals at all.

For example, Kaminski says, rodents do not have the same immune systems as humans. As a result, some biological drugs, such as humanized antibody proteins, cannot be tested successfully. However, studying human cells alone and outside the added complexity offered by an animal physiology model makes it difficult for researchers to determine the drug’s effects on people.

Beyond the scientific barriers, translational medicine also faces administrative obstacles, Kaminski says. Before a clinical trial can begin, it must go through a multistep process in which the trial managers contract researchers, analyse the budget, evaluate conflicts of interest and train onsite personnel, he says. These steps are important, but they are also time-consuming.

Enter, AI

Researchers are looking towards technology, such as AI, to help translational medicine through these growing pains. Although AI has become synonymous with large language models (LLMs) such as those that form the basis of ChatGPT, other forms of AI, including deep learning and machine learning, have been playing a part in medicine for years, Rutter says.

Translator, for example, used machine learning to comb through hundreds of databases and draw connections between SHINE syndrome and blood-pressure medication, Rutter says.

She is excited about how AI could improve on preclinical and clinical results, such as by learning from mistakes made in past clinical trials and designing approaches to improve on them, or by helping to identify patient populations for trial outreach.

“One classic issue faced by clinical researchers is ‘nuisance assays’, which always show positive responses for some reason,” Rutter says. “What AI could do is help with pattern recognition in these processes … and then start to screen those [false positives] out, so that the compounds that we push through then are much more predictive.”

From left: Joni Rutter and her colleagues Tyler Beck and Christine Colvis discuss a knowledge graph produced by the Biomedical Data Translator tool.

Credit: NCATS

In a 2024 interview, Khair ElZarrad at the US Food and Drug Administration echoed these hopes, saying that machine-learning tools could be used in clinical trials to improve patient monitoring and adherence to study protocols. Papers published in 2021 in both Trials¹ and Nature² also say that AI could be beneficial in improving trial efficiency and evaluating patient eligibility.

AI could also have a frontline role in a health-care setting, according to Bill Hersh, a specialist in medical informatics and clinical epidemiology at Oregon Health & Science University in Portland, who says that LLMs can be used as a listening tool during medical appointments.

“We’ve known for a decade now that [recording notes] for electronic health records slows physicians down,” Hersh says. “New LLM technology can listen in on a physician–patient encounter and generate the note — speeding up this process.”

An electronic-health-record system called Epic already uses this technology, allowing physicians to request AI-drafted notes or summaries of a person’s health records. By using templates included in systems such as Epic, Kaminski says, it’s possible to standardize information more effectively, which in turn makes it easier for AI training systems to handle.

“This pool of information can then be used to teach an AI to identify patterns of disease,” Kaminski says. “After some time of review and optimization, there could be systems to alert a physician regarding the potential of a diagnosis. This would be particularly impactful for rare diseases, which often take years to identify.”

The problem with data

Despite the promise shown by AI, some experts in the field emphasize the need for caution.

Hongfang Liu is a specialist in biomedical informatics at the University of Texas Health Science Center at Houston, and a lead researcher at the institute’s Center for Translational AI Excellence and Applications in Medicine. She says that although AI has the potential to advance translational medicine, it runs into the same problem that is slowing the field down generally: a lack of quality data.

“Real-world data reflects the real-world challenges we face,” Liu says. “Data quality is heavily linked to social determinants of health, so even a data record is not the whole picture of a patient.”

This means that when AI models are trained on health records, they will often be fed less, and poorer-quality, information on underserved patients, Liu says — thereby strengthening the models’ biases towards advantaged populations.

Hongfang Liu cautions that AI struggles to capture the complexity of the real world.

Credit: McWilliams School of Biomedical Informatics at UTHealth Houston

Likewise, using standardized templates in systems such as Epic might well help an AI to consume data more efficiently. But so far, Rutter says, this has resulted in AI models understanding such clear-cut health records better than they understand the actual testimony of patients, meaning that they are less useful in the real world of clinical diagnosis.

“I think this provides a key opportunity for us to think about how we ethically use AI and ensure that we’re meeting the needs of different patients and patient populations,” Rutter says.

One way in which researchers in other fields have got round the data-scarcity problem facing AI systems is to create synthetic data; in other words, AI-created data that are designed to mimic real-world data input. However, Colvis says that our understanding of disease itself is still too rudimentary to be recreated effectively in a synthetic form.

These issues are why it’s important to study the creation and quality of data, Liu says, so as to identify the factors affecting data collection.

Building trust

Beyond the problem of data, there is also the familiar problem that follows AI, and LLMs in particular, wherever it goes: it is often confidently incorrect. The worst of these errors occur when an AI “hallucinates”, essentially making connections between concepts that are false or invented. If unnoticed by a human user, this could lead researchers down dangerous and misleading rabbit holes.

A paper³ published in Nature Machine Intelligence in 2021 found systematic flaws and biases across a number of AI tools that attempted to diagnose COVID-19 from chest X-rays. In their literature review, the researchers found that 110 of the 320 papers they evaluated failed on at least three mandatory criteria that are used to ensure the reproducibility and transparency of results. Those criteria are listed in the Radiological Society of North America’s Checklist for Artificial Intelligence in Medical Imaging.

One way to improve awareness of these errors is to design an AI that can explain its thought process, says Hersh. Unfortunately, this is easier said than done, because AI’s ‘thoughts’ are often a black box, as a result of its nonlinear and non-symbolic reasoning processes.

“Automation bias is when we become too dependent or we trust the technology so much that if the technology says we should do this, then let’s do it,” Hersh says. “This is actually a big issue.”

This might mean that AI is better suited to less “sexy” jobs than to standing in for a doctor, Hersh says. For example, an AI could help to optimize a physician’s work, giving them more time to interact with patients.

With this in mind, Kaminski says we should also remember that AI is only in its infancy, and that mistakes as well as improvements are to be expected. “Humans also make mistakes when they’re first learning,” he says, even when they’re experts in other fields.

Ultimately, Liu says, it’s important to remember that medicine is a ‘team sport’, and that AI is just one new part of that team. To ensure that this tool is used effectively and ethically, she says, it will need to be exercised with a human-first perspective.

“I do think AI has potential,” Liu says. “But it all needs to be human-centred. Don’t do AI for the sake of AI, do AI for the sake of care.”